Chuah

TIDAL: Temporally Interleaved Diffusion and Action Loop for High-Frequency VLA Control

Jan 21, 2026

Abstract:Large-scale Vision-Language-Action (VLA) models offer semantic generalization but suffer from high inference latency, limiting them to a low-frequency batch-and-execute paradigm. This frequency mismatch creates an execution blind spot, causing failures in dynamic environments where targets move during the open-loop execution window. We propose TIDAL (Temporally Interleaved Diffusion and Action Loop), a hierarchical framework that decouples semantic reasoning from high-frequency actuation. TIDAL operates as a backbone-agnostic module for diffusion-based VLAs, using a dual-frequency architecture to redistribute the computational budget. Specifically, a low-frequency macro-intent loop caches semantic embeddings, while a high-frequency micro-control loop interleaves single-step flow integration with execution. This design enables approximately 9 Hz control updates on edge hardware (vs. approximately 2.4 Hz for baselines) without increasing marginal overhead. To handle the resulting latency shift, we introduce a temporally misaligned training strategy in which the policy learns predictive compensation using stale semantic intent alongside real-time proprioception. Additionally, we address the insensitivity of static vision encoders to velocity by incorporating a differential motion predictor. TIDAL is architectural, making it orthogonal to system-level optimizations. Experiments show a 2x performance gain over open-loop baselines in dynamic interception tasks. Despite a marginal regression in static success rates, our approach yields a 4x increase in feedback frequency and extends the effective horizon of semantic embeddings beyond the native action chunk size. Under non-paused inference protocols, TIDAL remains robust where standard baselines fail due to latency.
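The dual-frequency idea described in the abstract can be illustrated with a toy loop. This is a minimal sketch under stated assumptions, not the authors' implementation: the class `InterleavedLoop`, the callables `encode` and `velocity`, and the parameters `macro_period` and `flow_steps` are all hypothetical names chosen for illustration. The slow encoder is called only every `macro_period` ticks and its output is cached, while every tick performs a single Euler step of a flow field using the cached (possibly stale) embedding and fresh proprioception.

```python
from dataclasses import dataclass, field
from typing import Callable, List

Vector = List[float]


@dataclass
class InterleavedLoop:
    """Toy dual-frequency loop (hypothetical, for illustration only)."""

    # Slow semantic encoder: observation -> embedding (assumed interface).
    encode: Callable[[Vector], Vector]
    # Flow field v(action, embedding, proprio, t) (assumed interface).
    velocity: Callable[[Vector, Vector, Vector, float], Vector]
    macro_period: int = 4  # micro ticks per semantic refresh (assumed value)
    flow_steps: int = 4    # Euler steps that span one unit of flow time (assumed)
    cached: Vector = field(default_factory=list)
    encoder_calls: int = 0
    tick: int = 0

    def step(self, observation: Vector, proprio: Vector, action: Vector) -> Vector:
        # Macro-intent loop: refresh the cached embedding at low frequency.
        if self.tick % self.macro_period == 0:
            self.cached = self.encode(observation)
            self.encoder_calls += 1

        # Micro-control loop: one Euler integration step per control tick,
        # conditioned on the cached embedding and current proprioception.
        t = (self.tick % self.flow_steps) / self.flow_steps
        dt = 1.0 / self.flow_steps
        v = self.velocity(action, self.cached, proprio, t)
        self.tick += 1
        return [a + dt * vi for a, vi in zip(action, v)]


# Usage with stand-in functions: the "embedding" is the target itself and
# the flow field simply points from the current action toward it.
loop = InterleavedLoop(
    encode=lambda obs: list(obs),
    velocity=lambda a, z, q, t: [zi - ai for zi, ai in zip(z, a)],
)
action = [0.0]
for _ in range(8):
    action = loop.step([1.0], [0.0], action)
```

In this toy run the encoder is invoked on only a fraction of the control ticks, which is the budget redistribution the abstract describes; the temporally misaligned training and the differential motion predictor are not modeled here.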

Tactile Aware Dynamic Obstacle Avoidance in Crowded Environment with Deep Reinforcement Learning

Jun 19, 2024

Abstract:Mobile robots operating in crowded environments require the ability to navigate among humans and surrounding obstacles efficiently while adhering to safety standards and socially compliant mannerisms. This scale of the robot navigation problem may be classified as both a local path planning and a trajectory optimization problem. This work presents an array of force sensors that acts as a tactile layer to complement a LiDAR, making the robot aware of contact with surrounding objects in its immediate vicinity that the LiDAR does not detect. By incorporating the tactile layer, the robot can take more risks in its movements and possibly go right up to an obstacle or wall and gently squeeze past it. In addition, we built a simulation platform in PyBullet that integrates the Robot Operating System (ROS) with reinforcement learning (RL). A touch-aware neural network model was trained on it to create an RL-based local path planner for dynamic obstacle avoidance. Our proposed method was demonstrated successfully on an omni-directional mobile robot that was able to navigate in a crowded environment with high agility and versatility in movement, while not being overly sensitive to nearby obstacles that were not in contact.

Perceptive Locomotion with Controllable Pace and Natural Gait Transitions Over Uneven Terrains

Jan 30, 2023

Abstract:This work developed a learning framework for perceptive legged locomotion that combines visual feedback, proprioceptive information, and active gait regulation of foot-ground contacts. The perception requires only one forward-facing camera to obtain the heightmap, and the active regulation of gait pace and traveling velocity is realized through our formulation of CPG-based high-level imitation of foot-ground contacts. Through this framework, an end-user can issue task-level commands to control walking speed and gait frequency according to the terrain being traversed, which enables more reliable negotiation of encountered obstacles. The results demonstrated that the learned perceptive locomotion policy followed task-level control inputs with the intended behaviors, and was robust in the presence of unseen terrains and external force perturbations. A video demonstration can be found at https://youtu.be/OTzlWzDfAe8, and the codebase at https://github.com/jennyzzt/perceptual-locomotion.

Navigation with Tactile Sensor for Natural Human-Robot Interaction

Nov 23, 2022

Abstract:Tactile sensors have been introduced to a wide range of robotic tasks, such as robot manipulation, to mimic the human sense of touch. However, there have been only a few works that integrate tactile sensing into robot navigation. This paper describes a navigation system that allows robots to operate in crowded, human-dense environments and behave with socially acceptable reactions by utilizing semantic and force information collected by embedded tactile sensors, an RGB-D camera and a LiDAR. Compliance control is implemented based on artificial potential fields that consider not only the laser scan but also force readings from the tactile sensors, which promises a fast and reliable response to any possible collision. In contrast to cameras, LiDAR and other non-contact sensors, tactile sensors can directly interact with humans and can be used to accept social cues akin to natural human behavior in the same situation. Furthermore, by leveraging semantic segmentation from the vision module, the robot is able to identify different groups of humans and therefore assign them varying social costs, enabling socially conscious path planning. Finally, the proposed control strategy was validated successfully by testing several scenarios on an omni-directional robot in the real world.
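To make the idea of a potential field that considers both the laser scan and tactile force readings concrete, here is a minimal sketch. It is not the paper's controller: it uses the textbook attractive/repulsive potential-field form plus a simple term proportional to the measured contact force, and the function name `apf_velocity` and the gains `k_att`, `k_rep`, `influence` and `k_force` are assumptions made for illustration. All quantities are expressed in the robot frame with the robot at the origin.

```python
import math
from typing import List, Tuple

Vec2 = Tuple[float, float]


def apf_velocity(
    goal: Vec2,
    scan_points: List[Vec2],
    contact_force: Vec2,
    k_att: float = 1.0,      # attractive gain (assumed value)
    k_rep: float = 0.5,      # repulsive gain (assumed value)
    influence: float = 1.0,  # obstacle influence radius in metres (assumed)
    k_force: float = 0.2,    # compliance gain on tactile force (assumed value)
) -> Vec2:
    """Velocity command from goal attraction, scan repulsion and contact force."""
    # Attractive term: pull toward the goal position.
    vx = k_att * goal[0]
    vy = k_att * goal[1]

    # Repulsive term: push away from each scan point inside the influence radius.
    for px, py in scan_points:
        d = math.hypot(px, py)
        if 0.0 < d < influence:
            gain = k_rep * (1.0 / d - 1.0 / influence) / (d * d)
            vx -= gain * px / d
            vy -= gain * py / d

    # Compliance term: yield in the direction of the force applied to the robot.
    vx += k_force * contact_force[0]
    vy += k_force * contact_force[1]
    return vx, vy


# A push of 5 N toward +y makes the robot give way sideways while it
# continues toward a goal straight ahead.
command = apf_velocity(goal=(1.0, 0.0), scan_points=[], contact_force=(0.0, 5.0))
```

The contact term reacts even when the laser scan reports no obstacle, which is the case the abstract highlights for tactile sensing; the semantic social-cost layer is not modeled in this sketch.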

Real-time Digital Double Framework to Predict Collapsible Terrains for Legged Robots

Sep 20, 2022

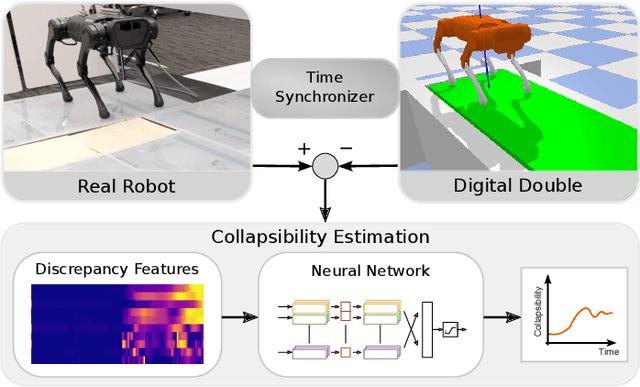

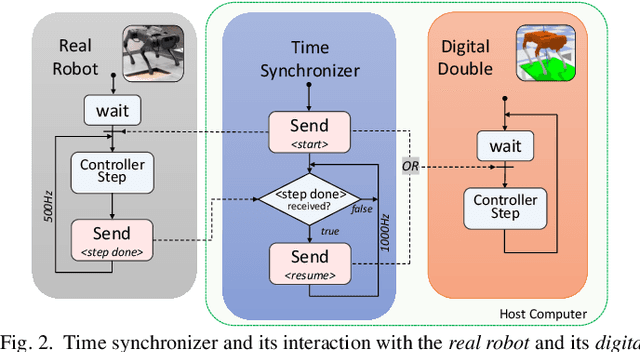

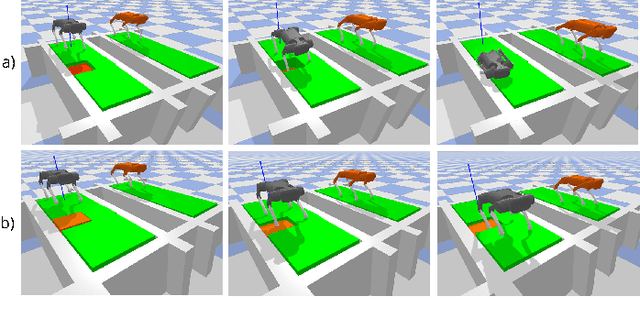

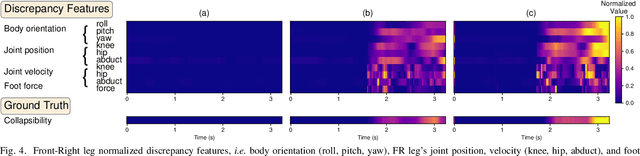

Abstract:Inspired by digital twinning systems, a novel real-time digital double framework is developed to enhance robot perception of terrain conditions. Using the same physical model and motion control as the real robot, this work runs a simulated digital double synchronized with the real robot to capture and extract discrepancy information between the two systems, which provides high-dimensional cues across multiple physical quantities that represent differences between the modelled and the real world. Soft, non-rigid terrains are a common cause of failure in legged locomotion, and visual perception alone is insufficient for estimating such physical properties of terrains. We used the digital double to develop an estimator of collapsibility, which addresses this issue through physical interactions during dynamic walking. The discrepancy in sensory measurements between the real robot and its digital double is used as input to a learning-based algorithm for terrain collapsibility analysis. Although trained only in simulation, the learned model can perform collapsibility estimation successfully in both simulation and the real world. Our evaluation showed generalization to different scenarios and the advantage of the digital double in reliably detecting nuances in ground conditions.
