Christoforos Anagnostopoulos

Adaptive regularization for Lasso models in the context of non-stationary data streams

Dec 14, 2017

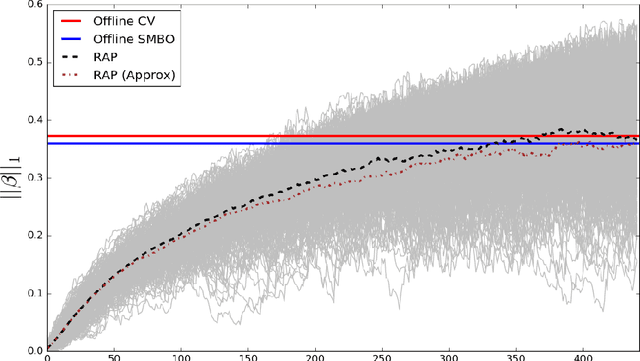

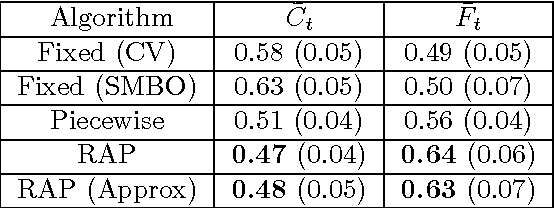

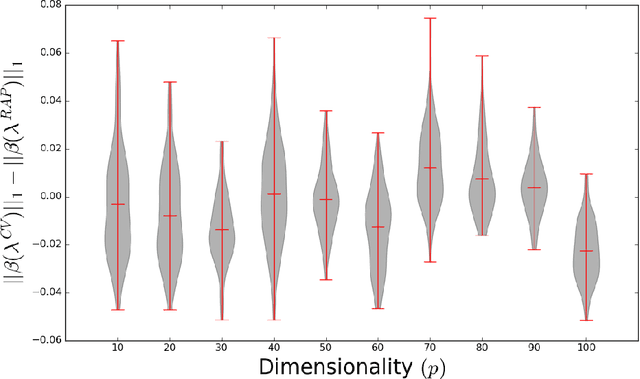

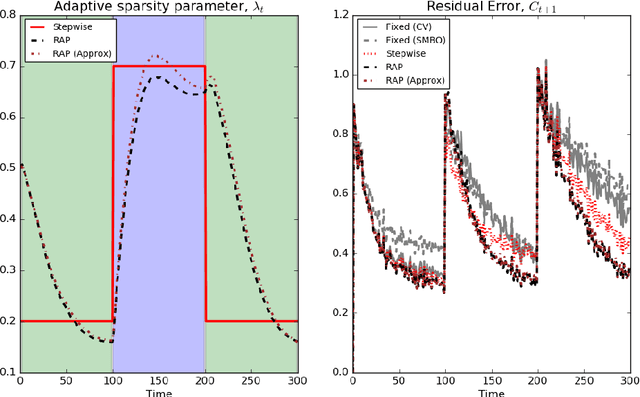

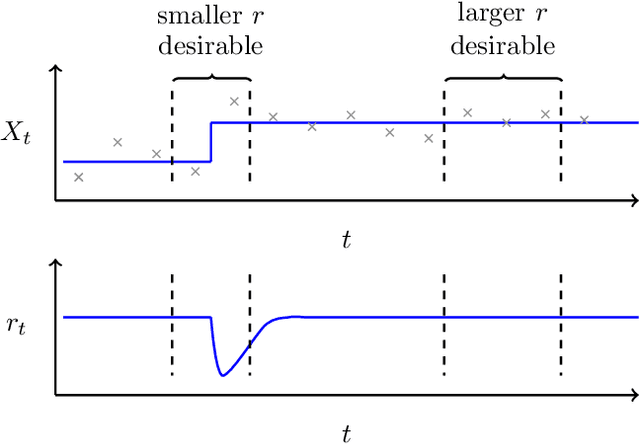

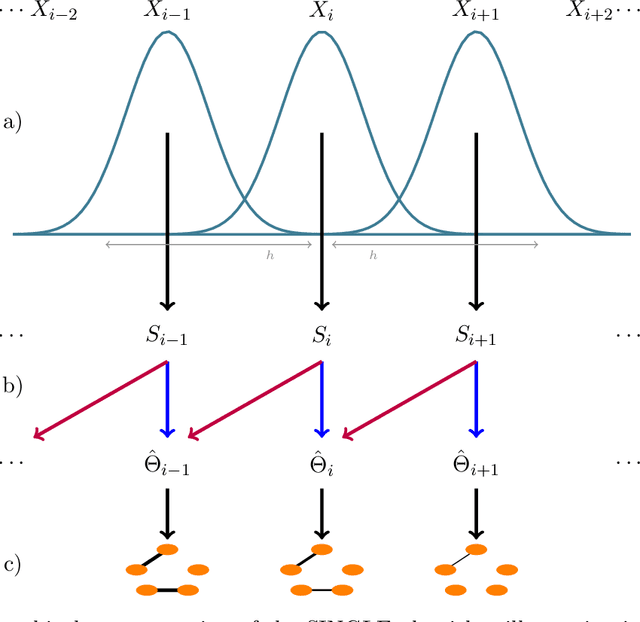

Abstract: Large-scale streaming datasets are ubiquitous in modern machine learning. Streaming algorithms must be scalable, amenable to incremental training and robust to the presence of non-stationarity. In this work we consider the problem of learning $\ell_1$-regularized linear models in the context of streaming data. In particular, we focus on how to select the regularization parameter when data arrives sequentially and the underlying distribution is non-stationary (implying that the optimal regularization parameter is itself time-varying). We propose a framework through which to infer an adaptive regularization parameter. Our approach employs an $\ell_1$ penalty constraint where the corresponding sparsity parameter is iteratively updated via stochastic gradient descent. This serves to reformulate the choice of regularization parameter in a principled framework for online learning. The proposed method is derived for linear regression and subsequently extended to generalized linear models. We validate our approach using simulated and real datasets and present an application to a neuroimaging dataset.
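The idea of updating the sparsity parameter by stochastic gradient descent can be illustrated with a small sketch. This is not the authors' algorithm: it assumes a plain proximal-gradient (soft-thresholding) update for the coefficients and a one-step approximation of how the coefficients depend on the regularization parameter. All names (`adaptive_lasso_stream`, `toy_stream`, `step`, `lam_step`) are hypothetical.

```python
import random


def soft_threshold(z, t):
    """Proximal map of t * |z|: shrink z towards zero by t."""
    if z > t:
        return z - t
    if z < -t:
        return z + t
    return 0.0


def adaptive_lasso_stream(stream, dim, step=0.05, lam0=0.1, lam_step=0.01):
    """Online l1-regularized regression with an SGD-updated penalty (sketch)."""
    beta = [0.0] * dim    # current coefficients
    dbeta = [0.0] * dim   # approximate d(beta)/d(lam) from the last prox step
    lam = lam0
    lam_path = []
    for x, y in stream:
        # One-step-ahead prediction error on the newly arrived observation.
        resid = sum(b * xi for b, xi in zip(beta, x)) - y
        # Chain rule: d(0.5 * resid^2)/d(lam) = resid * x . d(beta)/d(lam).
        grad_lam = resid * sum(d * xi for d, xi in zip(dbeta, x))
        lam = max(0.0, lam - lam_step * grad_lam)  # keep the penalty non-negative
        new_beta, new_dbeta = [], []
        for b, xi in zip(beta, x):
            nb = soft_threshold(b - step * resid * xi, step * lam)
            new_beta.append(nb)
            # Derivative of the soft-threshold output w.r.t. lam (input held fixed).
            if nb > 0:
                new_dbeta.append(-step)
            elif nb < 0:
                new_dbeta.append(step)
            else:
                new_dbeta.append(0.0)
        beta, dbeta = new_beta, new_dbeta
        lam_path.append(lam)
    return beta, lam_path


def toy_stream(n, seed=0):
    """Synthetic non-stationary stream: the true weight changes halfway through."""
    rng = random.Random(seed)
    for t in range(n):
        x = [rng.gauss(0, 1) for _ in range(3)]
        w = 2.0 if t < n // 2 else -1.0
        yield x, w * x[0] + 0.1 * rng.gauss(0, 1)


beta, lam_path = adaptive_lasso_stream(toy_stream(400), dim=3)
print(beta, lam_path[-1])
```

The sketch treats the regularization parameter as one more quantity tracked online, which is the reformulation the abstract describes. The specific gradient approximation here is an assumption made for brevity.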

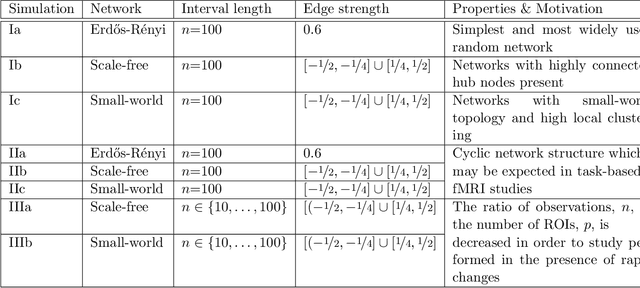

Streaming regularization parameter selection via stochastic gradient descent

Nov 02, 2016

Abstract: We propose a framework to perform streaming covariance selection. Our approach employs regularization constraints where a time-varying sparsity parameter is iteratively estimated via stochastic gradient descent. This allows for the regularization parameter to be efficiently learnt in an online manner. The proposed framework is developed for linear regression models and extended to graphical models via neighbourhood selection. Under mild assumptions, we are able to obtain convergence results in a non-stochastic setting. The capabilities of such an approach are demonstrated using both synthetic data as well as neuroimaging data.

Text-mining the NeuroSynth corpus using Deep Boltzmann Machines

May 01, 2016

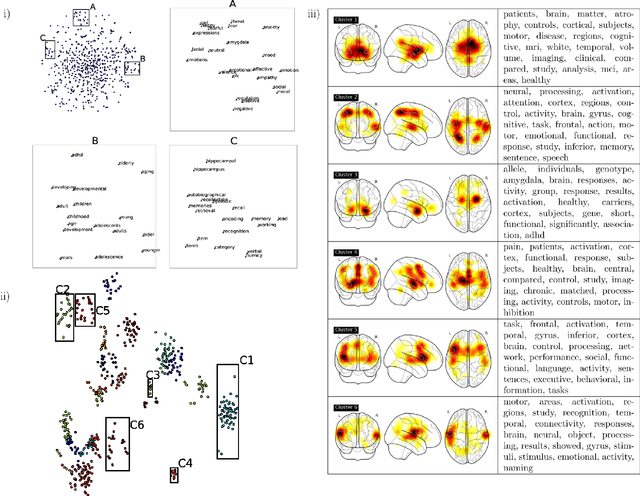

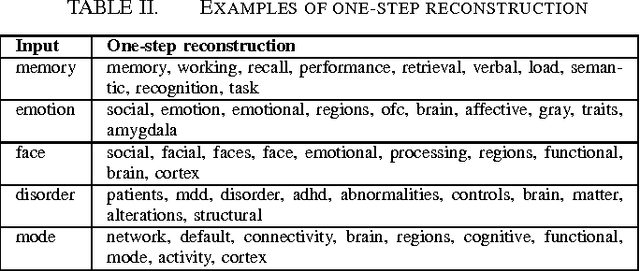

Abstract: Large-scale automated meta-analysis of neuroimaging data has recently established itself as an important tool in advancing our understanding of human brain function. This research has been pioneered by NeuroSynth, a database collecting both brain activation coordinates and associated text across a large cohort of neuroimaging research papers. One of the fundamental aspects of such meta-analysis is text-mining. To date, word counts and more sophisticated methods such as Latent Dirichlet Allocation have been proposed. In this work we present an unsupervised study of the NeuroSynth text corpus using Deep Boltzmann Machines (DBMs). The use of DBMs yields several advantages over the aforementioned methods, principal among which is that they yield both word and document embeddings in a high-dimensional vector space. Such embeddings serve to facilitate the use of traditional machine learning techniques on the text corpus. The proposed DBM model is shown to learn embeddings with a clear semantic structure.

Stopping criteria for boosting automatic experimental design using real-time fMRI with Bayesian optimization

Mar 22, 2016

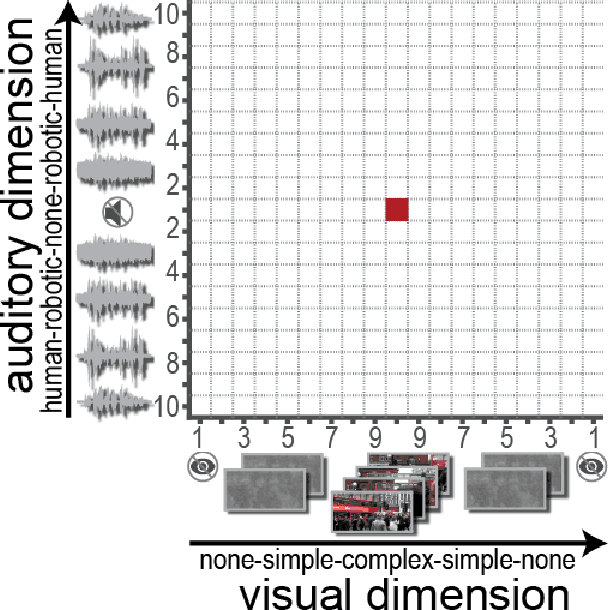

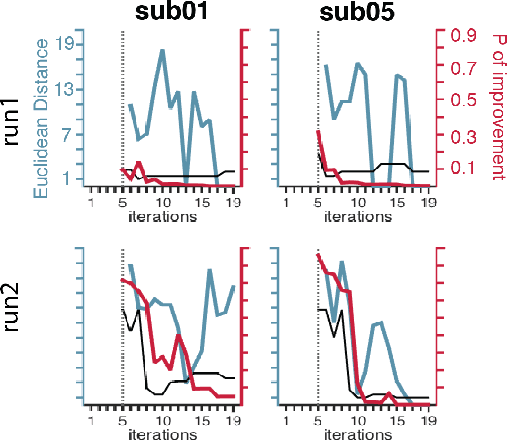

Abstract: Bayesian optimization has been proposed as a practical and efficient tool through which to tune parameters in many difficult settings. Recently, such techniques have been combined with real-time fMRI to propose a novel framework that turns the conventional functional neuroimaging approach on its head. This closed-loop method automatically designs the optimal experiment to evoke a desired target brain pattern. One of the challenges associated with extending such methods to real-time brain imaging is the need for adequate stopping criteria, an aspect of Bayesian optimization which has received limited attention. In light of high scanning costs and the limited attentional capacities of subjects, an accurate and reliable stopping criterion is essential. To address this issue, we propose and empirically study the performance of two stopping criteria.

Learning population and subject-specific brain connectivity networks via Mixed Neighborhood Selection

Dec 07, 2015

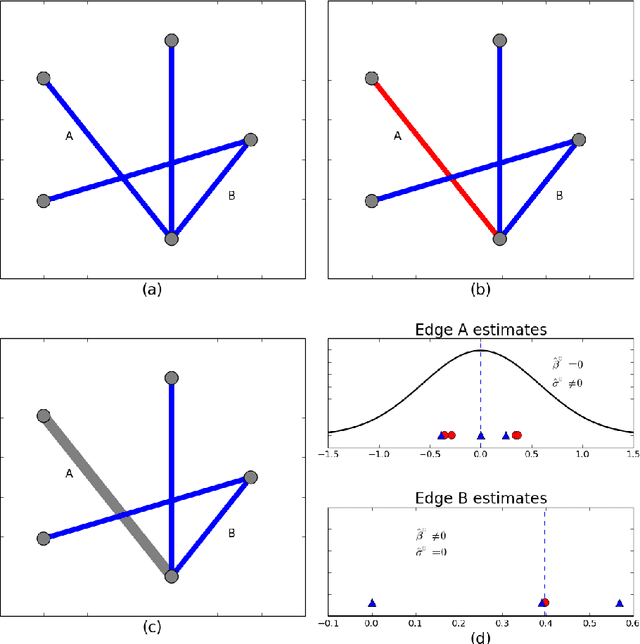

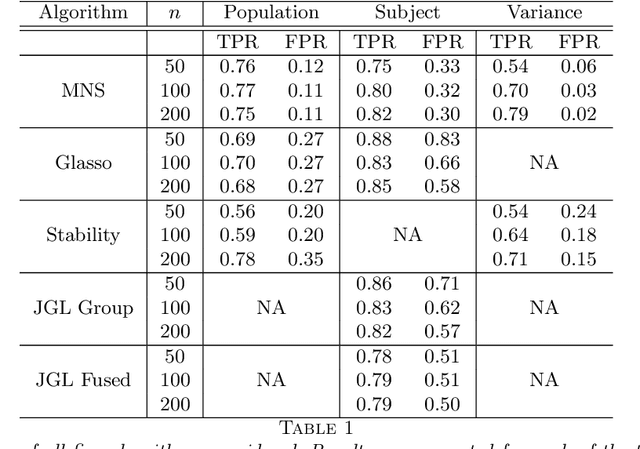

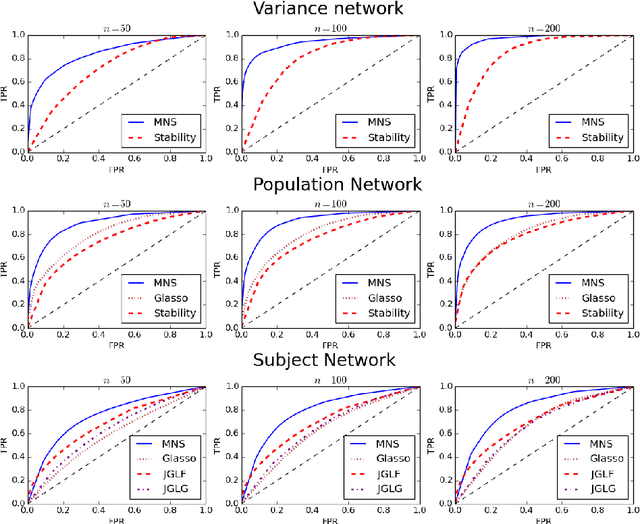

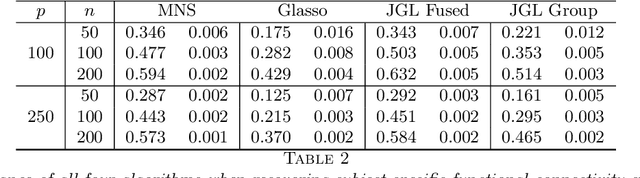

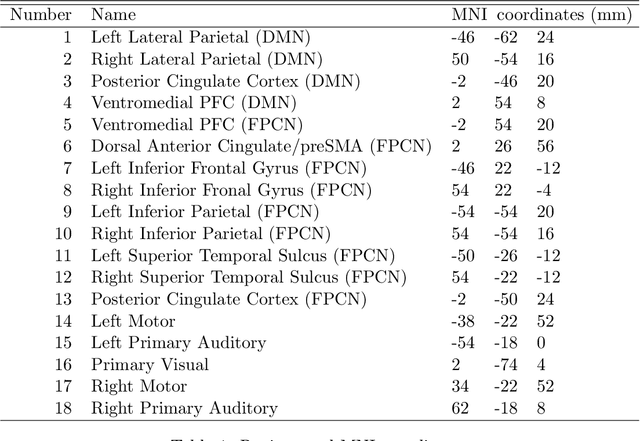

Abstract: In neuroimaging data analysis, Gaussian graphical models are often used to model statistical dependencies across spatially remote brain regions, known as functional connectivity. Typically, data is collected across a cohort of subjects and the scientific objectives consist of estimating population and subject-specific graphical models. A third objective that is often overlooked involves quantifying inter-subject variability and thus identifying regions or sub-networks that demonstrate heterogeneity across subjects. Such information is fundamental to a thorough understanding of the human connectome. We propose Mixed Neighborhood Selection to simultaneously address the three aforementioned objectives. By recasting covariance selection as a neighborhood selection problem, we are able to efficiently learn the topology of each node. We introduce an additional mixed effect component to neighborhood selection in order to simultaneously estimate a graphical model for the population of subjects as well as for each individual subject. The proposed method is validated empirically through a series of simulations and applied to resting state data for healthy subjects taken from the ABIDE consortium.
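Recasting covariance selection as neighborhood selection means regressing each node on all the others with an $\ell_1$ penalty and reading edges off the non-zero coefficients. The sketch below shows only that standard building block, for a single dataset, using a simple coordinate-descent Lasso. It does not include the mixed effect component that the abstract proposes. The function names, the penalty value and the "or" rule for combining neighborhoods are assumptions chosen for illustration.

```python
import random


def soft_threshold(z, t):
    """Shrink z towards zero by t."""
    if z > t:
        return z - t
    if z < -t:
        return z + t
    return 0.0


def lasso_cd(X, y, lam, n_iter=100):
    """Coordinate descent for (1/2n) * ||y - X b||^2 + lam * ||b||_1."""
    n, p = len(X), len(X[0])
    b = [0.0] * p
    col_sq = [sum(X[i][j] ** 2 for i in range(n)) / n for j in range(p)]
    resid = list(y)  # residual y - X b, with b = 0 initially
    for _ in range(n_iter):
        for j in range(p):
            if col_sq[j] == 0.0:
                continue
            # Correlation of column j with the residual excluding coordinate j.
            rho = sum(X[i][j] * (resid[i] + X[i][j] * b[j]) for i in range(n)) / n
            new_bj = soft_threshold(rho, lam) / col_sq[j]
            delta = new_bj - b[j]
            if delta != 0.0:
                for i in range(n):
                    resid[i] -= X[i][j] * delta
                b[j] = new_bj
    return b


def neighbourhood_selection(data, lam):
    """Regress each node on the rest; join neighbourhoods with the 'or' rule."""
    p = len(data[0])
    edges = set()
    for j in range(p):
        others = [k for k in range(p) if k != j]
        X = [[row[k] for k in others] for row in data]
        y = [row[j] for row in data]
        for k, c in zip(others, lasso_cd(X, y, lam)):
            if c != 0.0:
                edges.add((min(j, k), max(j, k)))
    return edges


# Toy data: nodes 0 and 1 are strongly dependent, node 2 is independent noise.
rng = random.Random(0)
data = []
for _ in range(200):
    a = rng.gauss(0, 1)
    data.append([a, a + 0.1 * rng.gauss(0, 1), rng.gauss(0, 1)])

edges = neighbourhood_selection(data, lam=0.3)
print(sorted(edges))
```

Each node's regression is independent of the others, which is what makes the per-node topology cheap to learn and easy to parallelise.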

Measuring the functional connectome "on-the-fly": towards a new control signal for fMRI-based brain-computer interfaces

Feb 08, 2015

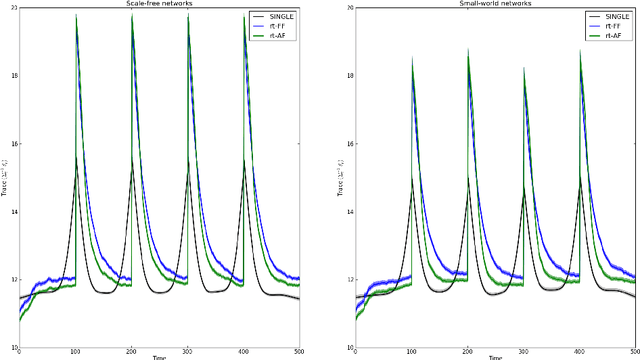

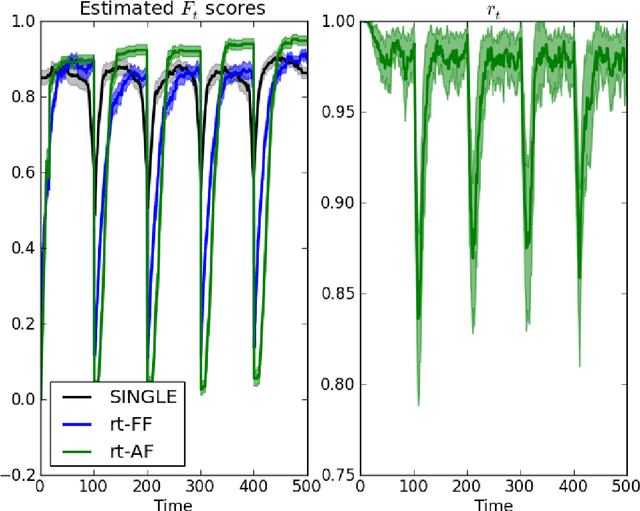

Abstract: There has been an explosion of interest in functional Magnetic Resonance Imaging (MRI) during the past two decades. Naturally, this has been accompanied by many major advances in the understanding of the human connectome. These advances have served to pose novel challenges as well as open new avenues for research. One of the most promising and exciting of such avenues is the study of functional MRI in real-time. Such studies have recently gained momentum and have been applied in a wide variety of settings, ranging from training healthy subjects to self-regulate neuronal activity to being suggested as potential treatments for clinical populations. To date, the vast majority of these studies have focused on a single region at a time. This is due in part to the many challenges faced when estimating dynamic functional connectivity networks in real-time. In this work we propose a novel methodology with which to accurately track changes in functional connectivity networks in real-time. We adapt the recently proposed SINGLE algorithm for estimating sparse and temporally homogeneous dynamic networks to be applicable in real-time. The proposed method is applied to motor task data from the Human Connectome Project as well as to real-time data obtained while exploring a virtual environment. We show that the algorithm is able to estimate significant task-related changes in network structure quickly enough to be useful in future brain-computer interface applications.

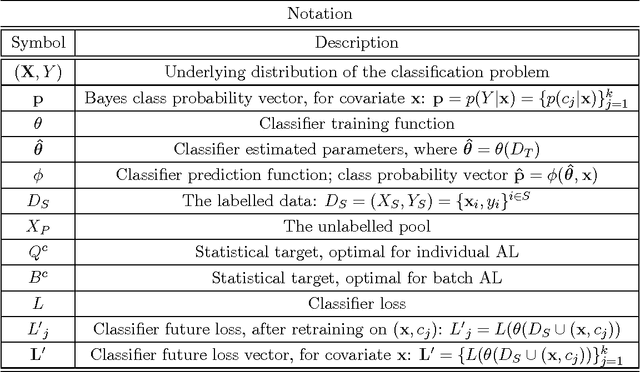

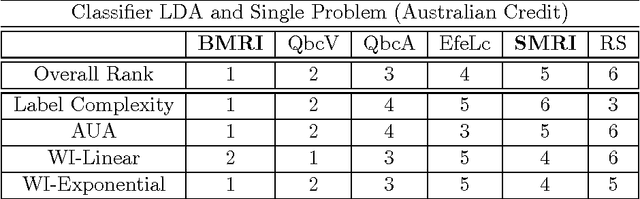

Estimating Optimal Active Learning via Model Retraining Improvement

Feb 05, 2015

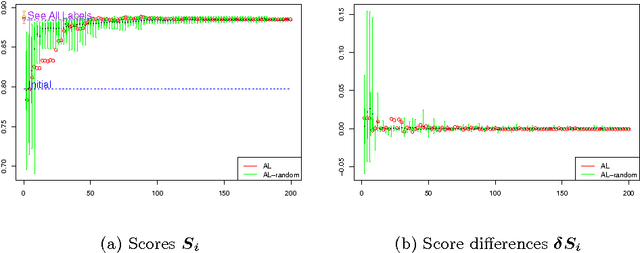

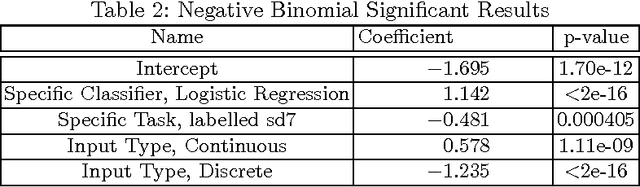

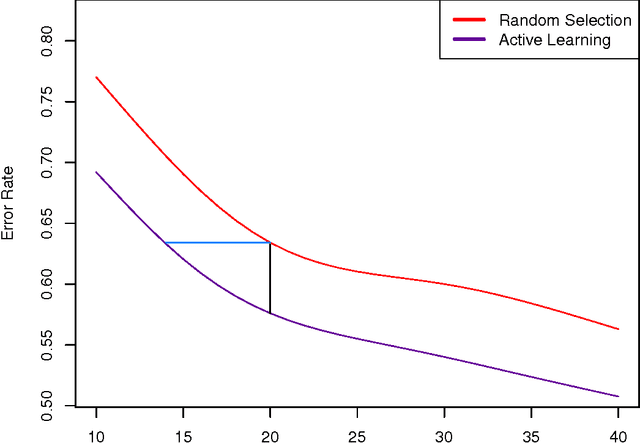

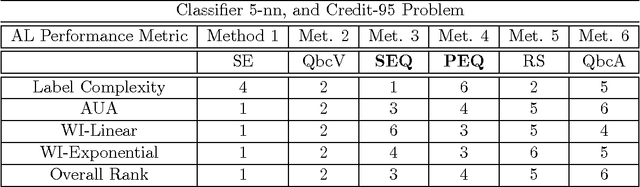

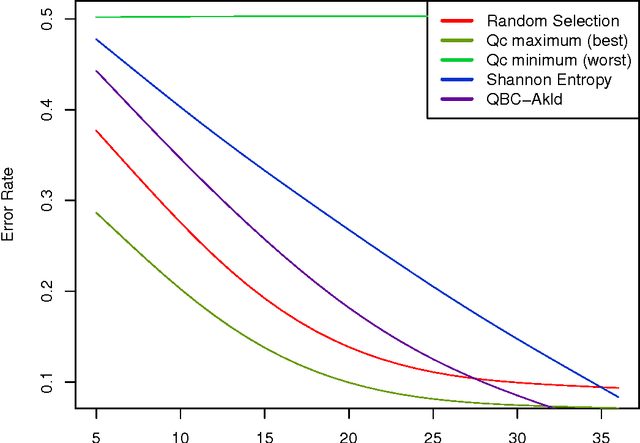

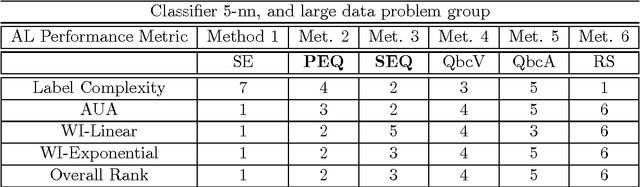

Abstract: A central question for active learning (AL) is: "what is the optimal selection?" Defining optimality by classifier loss produces a new characterisation of optimal AL behaviour, by treating expected loss reduction as a statistical target for estimation. This target forms the basis of model retraining improvement (MRI), a novel approach providing a statistical estimation framework for AL. This framework is constructed to address the central question of AL optimality, and to motivate the design of estimation algorithms. MRI allows the exploration of optimal AL behaviour, and the examination of AL heuristics, showing precisely how they make sub-optimal selections. The abstract formulation of MRI is used to provide a new guarantee for AL, that an unbiased MRI estimator should outperform random selection. This MRI framework reveals intricate estimation issues that in turn motivate the construction of new statistical AL algorithms. One new algorithm in particular performs strongly in a large-scale experimental study, compared to standard AL methods. This competitive performance suggests that practical efforts to minimise estimation bias may be important for AL applications.

When does Active Learning Work?

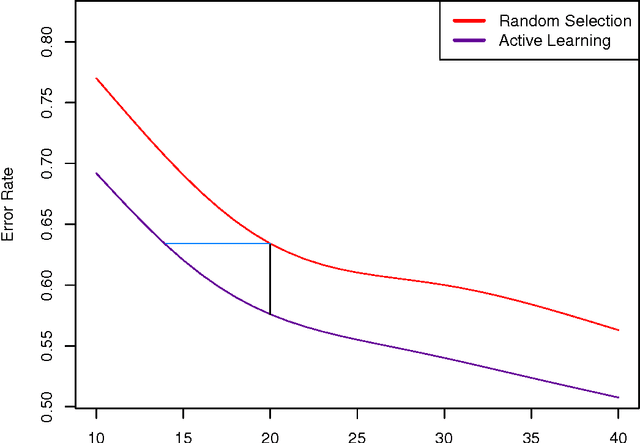

Aug 06, 2014

Abstract: Active Learning (AL) methods seek to improve classifier performance when labels are expensive or scarce. We consider two central questions: Where does AL work? How much does it help? To address these questions, a comprehensive experimental simulation study of Active Learning is presented. We consider a variety of tasks, classifiers and other AL factors, to present a broad exploration of AL performance in various settings. A precise way to quantify performance is needed in order to know when AL works. Thus we also present a detailed methodology for tackling the complexities of assessing AL performance in the context of this experimental study.

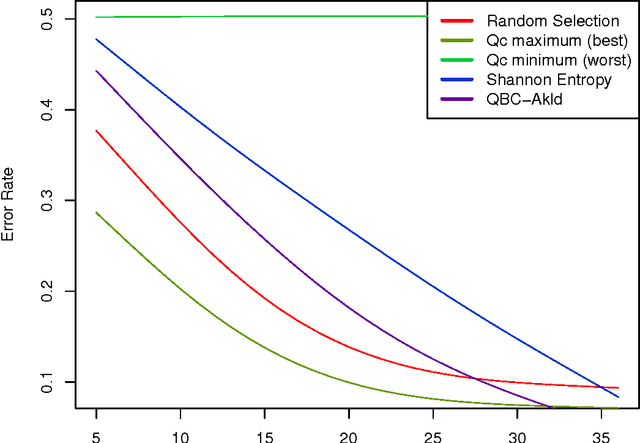

Targeting Optimal Active Learning via Example Quality

Jul 30, 2014

Abstract: In many classification problems unlabelled data is abundant and a subset can be chosen for labelling. This defines the context of active learning (AL), where methods systematically select that subset, to improve a classifier by retraining. Given a classification problem, and a classifier trained on a small number of labelled examples, consider the selection of a single further example. This example will be labelled by the oracle and then used to retrain the classifier. This example selection raises a central question: given a fully specified stochastic description of the classification problem, which example is the optimal selection? If optimality is defined in terms of loss, this definition directly produces expected loss reduction (ELR), a central quantity whose maximum yields the optimal example selection. This work presents a new theoretical approach to AL, example quality, which defines optimal AL behaviour in terms of ELR. Once optimal AL behaviour is defined mathematically, reasoning about this abstraction provides insights into AL. In a theoretical context the optimal selection is compared to existing AL methods, showing that heuristics can make sub-optimal selections. Algorithms are constructed to estimate example quality directly. A large-scale experimental study shows these algorithms to be competitive with standard AL methods.
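One way to write expected loss reduction, in notation assumed here rather than taken from the paper: let $D$ be the current labelled set, $\hat{\theta}_{D}$ the classifier trained on it, $L(\cdot)$ the classifier loss, $\mathcal{P}$ the pool of unlabelled candidates, and $Y$ the as-yet-unknown label of a candidate $x$. The quantity and the selection that maximises it could then be sketched as:

$$
Q(x) = L(\hat{\theta}_{D}) - \mathbb{E}_{Y \mid x}\left[ L\left(\hat{\theta}_{D \cup \{(x, Y)\}}\right) \right],
\qquad
x^{*} = \arg\max_{x \in \mathcal{P}} Q(x)
$$

The first term is the loss before retraining and the second is the expected loss after retraining on the newly labelled example, so $Q(x)$ is large when labelling $x$ is expected to help most.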

Estimating Time-varying Brain Connectivity Networks from Functional MRI Time Series

Apr 13, 2014

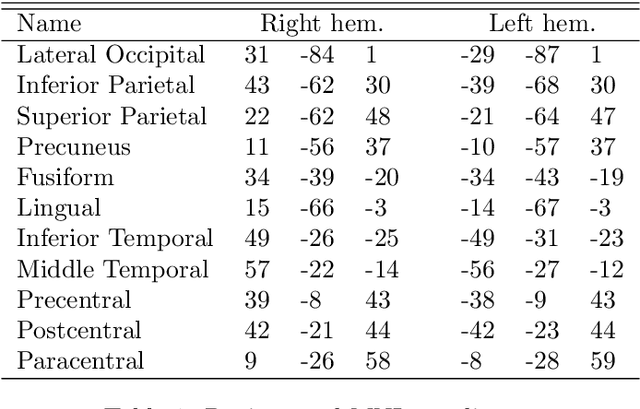

Abstract: Understanding the functional architecture of the brain in terms of networks is becoming increasingly common. In most fMRI applications functional networks are assumed to be stationary, resulting in a single network estimated for the entire time course. However, recent results suggest that the connectivity between brain regions is highly non-stationary even at rest. As a result, there is a need for new brain imaging methodologies that comprehensively account for the dynamic (i.e., non-stationary) nature of fMRI data. In this work we propose the Smooth Incremental Graphical Lasso Estimation (SINGLE) algorithm, which estimates dynamic brain networks from fMRI data. We apply the SINGLE algorithm to functional MRI data from 24 healthy patients performing a choice-response task to demonstrate the dynamic changes in network structure that accompany a simple but attentionally demanding cognitive task. Using graph theoretic measures we show that the Right Inferior Frontal Gyrus, frequently reported as playing an important role in cognitive control, dynamically changes with the task. Our results suggest that the Right Inferior Frontal Gyrus plays a fundamental role in attention and executive function during cognitively demanding tasks and may play a key role in regulating the balance between other brain regions.
