Christian Müller

Reliable Student: Addressing Noise in Semi-Supervised 3D Object Detection

Apr 27, 2024

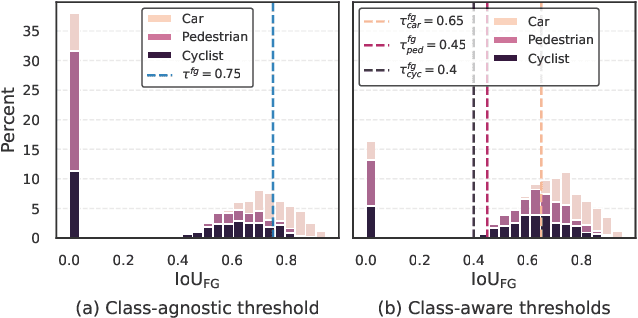

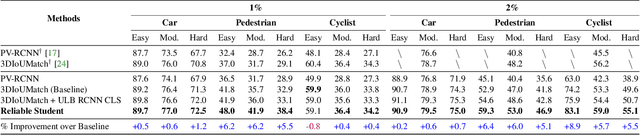

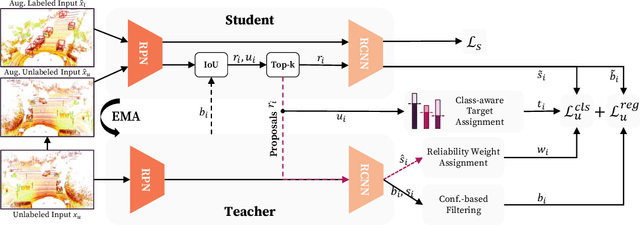

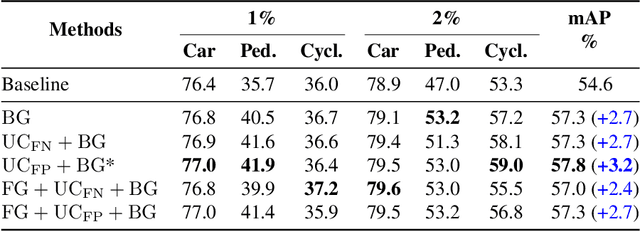

Abstract: Semi-supervised 3D object detection can benefit from the promising pseudo-labeling technique when labeled data is limited. However, recent approaches have overlooked the impact of noisy pseudo-labels during training, despite efforts to enhance pseudo-label quality through confidence-based filtering. In this paper, we examine the impact of noisy pseudo-labels on IoU-based target assignment and propose the Reliable Student framework, which incorporates two complementary approaches to mitigate errors. First, it involves a class-aware target assignment strategy that reduces false negative assignments in difficult classes. Second, it includes a reliability weighting strategy that suppresses false positive assignment errors while also addressing remaining false negatives from the first step. The reliability weights are determined by querying the teacher network for confidence scores of the student-generated proposals. Our work surpasses the previous state-of-the-art on the KITTI 3D object detection benchmark on point clouds in the semi-supervised setting. On 1% labeled data, our approach achieves a 6.2% AP improvement for the pedestrian class, despite having only 37 labeled samples available. The improvements become significant for the 2% setting, achieving 6.0% AP and 5.7% AP improvements for the pedestrian and cyclist classes, respectively.
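The two ideas in this abstract can be illustrated with a minimal sketch. It assumes each student proposal already comes with its best IoU against the pseudo-labels, a class name, and a teacher confidence score. The function name `assign_targets`, the threshold values, and the rule "weight foreground by the teacher score, background by one minus the score" are hypothetical choices for illustration, not details taken from the paper.

```python
from typing import Dict, List, Sequence, Tuple


def assign_targets(
    ious: Sequence[float],
    classes: Sequence[str],
    teacher_scores: Sequence[float],
    fg_thresholds: Dict[str, float],
) -> Tuple[List[int], List[float]]:
    """Assign foreground/background targets with per-class IoU thresholds.

    ious[k]           best IoU of proposal k with any pseudo-label
    classes[k]        class of proposal k
    teacher_scores[k] teacher confidence for proposal k, in [0, 1]
    fg_thresholds     per-class foreground IoU threshold (assumed values)
    """
    labels: List[int] = []
    weights: List[float] = []
    for iou, cls, score in zip(ious, classes, teacher_scores):
        is_foreground = iou >= fg_thresholds[cls]
        labels.append(1 if is_foreground else 0)
        # Assumed weighting rule: trust a foreground target as much as the
        # teacher believes in the proposal, and a background target as much
        # as the teacher doubts it.
        weights.append(score if is_foreground else 1.0 - score)
    return labels, weights


# A lower threshold for a harder class keeps more of its proposals as foreground.
labels, weights = assign_targets(
    ious=[0.5, 0.5, 0.1],
    classes=["Car", "Pedestrian", "Car"],
    teacher_scores=[0.9, 0.8, 0.2],
    fg_thresholds={"Car": 0.55, "Pedestrian": 0.4},
)
```

In this example the first proposal is assigned to background because its IoU is below the car threshold, but the high teacher score gives that assignment a small weight, which is the kind of false negative the reliability weighting is meant to soften.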

CamLessMonoDepth: Monocular Depth Estimation with Unknown Camera Parameters

Oct 27, 2021

Abstract: Perceiving 3D information is of paramount importance in many applications of computer vision. Recent advances in monocular depth estimation have shown that gaining such knowledge from a single camera input is possible by training deep neural networks to predict inverse depth and pose, without the necessity of ground truth data. The majority of such approaches, however, require camera parameters to be fed explicitly during training. As a result, image sequences from the wild cannot be used during training. While there exist methods which also predict camera intrinsics, their performance is not on par with novel methods taking camera parameters as input. In this work, we propose a method for implicit estimation of pinhole camera intrinsics along with depth and pose, by learning from monocular image sequences alone. In addition, by utilizing efficient sub-pixel convolutions, we show that high fidelity depth estimates can be obtained. We also embed pixel-wise uncertainty estimation into the framework, to emphasize the possible applicability of this work in practical domains. Finally, we demonstrate the possibility of accurate prediction of depth information without prior knowledge of camera intrinsics, while outperforming the existing state-of-the-art approaches on the KITTI benchmark.
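For readers unfamiliar with the term, the pinhole intrinsics mentioned here are the focal lengths and principal point that map a 3D point in camera coordinates to a pixel. The sketch below shows only that standard projection; the function name `project` and its argument names are illustrative, and nothing here reflects how the paper's network predicts these values.

```python
from typing import Tuple


def project(
    point: Tuple[float, float, float],
    fx: float,
    fy: float,
    cx: float,
    cy: float,
) -> Tuple[float, float]:
    """Project a 3D point (camera coordinates) to pixel coordinates.

    fx, fy are the focal lengths in pixels; cx, cy is the principal point.
    """
    x, y, z = point
    if z <= 0:
        raise ValueError("point must lie in front of the camera (z > 0)")
    u = fx * x / z + cx
    v = fy * y / z + cy
    return u, v


pixel = project((1.0, 2.0, 4.0), fx=100.0, fy=100.0, cx=50.0, cy=40.0)
print(pixel)  # (75.0, 90.0)
```

Self-supervised depth methods rely on this mapping to warp one frame into another, which is why unknown intrinsics normally prevent training on arbitrary video.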

Non-convex Global Minimization and False Discovery Rate Control for the TREX

Sep 20, 2016

Abstract: The TREX is a recently introduced method for performing sparse high-dimensional regression. Despite its statistical promise as an alternative to the lasso, square-root lasso, and scaled lasso, the TREX is computationally challenging in that it requires solving a non-convex optimization problem. This paper shows a remarkable result: despite the non-convexity of the TREX problem, there exists a polynomial-time algorithm that is guaranteed to find the global minimum. This result adds the TREX to a very short list of non-convex optimization problems that can be globally optimized (principal components analysis being a famous example). After deriving and developing this new approach, we demonstrate that (i) the ability of the preexisting TREX heuristic to reach the global minimum is strongly dependent on the difficulty of the underlying statistical problem, (ii) the new polynomial-time algorithm for TREX permits a novel variable ranking and selection scheme, and (iii) this scheme can be incorporated into a rule that controls the false discovery rate (FDR) of included features in the model. To achieve this last aim, we provide an extension of the results of Barber & Candès (2015) to establish that the knockoff filter framework can be applied to the TREX. This investigation thus provides both a rare case study of a heuristic for non-convex optimization and a novel way of exploiting non-convexity for statistical inference.
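As background for the FDR claim, the sketch below shows the data-dependent threshold of the standard knockoff filter of Barber & Candès (2015), applied to a vector of feature statistics `w`. It assumes those statistics are already computed, with large positive values favoring a real feature over its knockoff. How the paper derives such statistics from the TREX is not stated in the abstract, so the functions `knockoff_threshold` and `select` are only a generic illustration.

```python
from typing import List, Sequence


def knockoff_threshold(w: Sequence[float], q: float, plus: bool = True) -> float:
    """Smallest t with (offset + #{w_j <= -t}) / max(#{w_j >= t}, 1) <= q.

    plus=True uses offset 1 (the "knockoff+" variant); plus=False uses 0.
    Returns infinity when no candidate threshold satisfies the bound.
    """
    offset = 1 if plus else 0
    candidates = sorted({abs(v) for v in w if v != 0})
    for t in candidates:
        negatives = sum(1 for v in w if v <= -t)
        positives = sum(1 for v in w if v >= t)
        if (offset + negatives) / max(positives, 1) <= q:
            return t
    return float("inf")


def select(w: Sequence[float], q: float, plus: bool = True) -> List[int]:
    """Indices of features whose statistic reaches the threshold."""
    t = knockoff_threshold(w, q, plus)
    return [j for j, v in enumerate(w) if v >= t]


w = [5, 4, 3, -1, 2, 0.5]
print(select(w, q=0.25))  # [0, 1, 2, 4]
```

The count of large negative statistics serves as an estimate of the number of false positives among the large positive ones, which is what makes the threshold an FDR control rule.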

Don't Fall for Tuning Parameters: Tuning-Free Variable Selection in High Dimensions With the TREX

May 24, 2015

Abstract: Lasso is a seminal contribution to high-dimensional statistics, but it hinges on a tuning parameter that is difficult to calibrate in practice. A partial remedy for this problem is Square-Root Lasso, because it inherently calibrates to the noise variance. However, Square-Root Lasso still requires the calibration of a tuning parameter to all other aspects of the model. In this study, we introduce TREX, an alternative to Lasso with an inherent calibration to all aspects of the model. This adaptation to the entire model renders TREX an estimator that does not require any calibration of tuning parameters. We show that TREX can outperform cross-validated Lasso in terms of variable selection and computational efficiency. We also introduce a bootstrapped version of TREX that can further improve variable selection. We illustrate the promising performance of TREX both on synthetic data and on a recent high-dimensional biological data set that considers riboflavin production in B. subtilis.
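The abstract does not state the TREX objective. As recalled from the TREX literature, it is commonly written as ||y - Xβ||² / (c·||Xᵀ(y - Xβ)||∞) + ||β||₁ with the constant c = 1/2, so the usual tuning parameter is replaced by a data-dependent term. The sketch below evaluates that expression for a given coefficient vector. Treat the formula and the default `c=0.5` as assumptions to check against the paper; the function name `trex_objective` is illustrative.

```python
from typing import Sequence


def trex_objective(
    X: Sequence[Sequence[float]],
    y: Sequence[float],
    beta: Sequence[float],
    c: float = 0.5,
) -> float:
    """Evaluate the (assumed) TREX objective at beta.

    X is an n-by-p design matrix given as rows, y has length n,
    beta has length p.
    """
    n, p = len(X), len(beta)
    residual = [y[i] - sum(X[i][j] * beta[j] for j in range(p)) for i in range(n)]
    rss = sum(r * r for r in residual)
    # Largest absolute correlation between a column of X and the residual.
    max_corr = max(abs(sum(X[i][j] * residual[i] for i in range(n))) for j in range(p))
    if max_corr == 0:
        raise ValueError("objective is undefined when X^T residual is zero")
    l1_norm = sum(abs(b) for b in beta)
    return rss / (c * max_corr) + l1_norm


value = trex_objective(X=[[1, 0], [0, 1]], y=[2, 1], beta=[1, 0])
print(value)  # 5.0
```

The residual appears in both the numerator and the denominator, which is the source of the non-convexity that makes the estimator harder to compute than the Lasso.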

Topology Adaptive Graph Estimation in High Dimensions

Oct 27, 2014

Abstract: We introduce Graphical TREX (GTREX), a novel method for graph estimation in high-dimensional Gaussian graphical models. By conducting neighborhood selection with TREX, GTREX avoids tuning parameters and is adaptive to the graph topology. We compare GTREX with standard methods on a new simulation set-up that is designed to assess accurately the strengths and shortcomings of different methods. These simulations show that a neighborhood selection scheme based on Lasso and an optimal (in practice unknown) tuning parameter outperforms other standard methods over a large spectrum of scenarios. Moreover, we show that GTREX can rival this scheme and, therefore, can provide competitive graph estimation without the need for tuning parameter calibration.
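Neighborhood selection estimates a graph by regressing each variable on all others and reading the selected variables as that node's neighbors. Because the per-node results need not be symmetric, they are usually merged with an "or" rule or an "and" rule. The sketch below shows only that merging step, with the per-node neighbor sets assumed to be given (for GTREX they would come from TREX regressions). Which rule GTREX uses is not stated in the abstract, and `combine_neighborhoods` is a hypothetical helper.

```python
from typing import Dict, Set, Tuple


def combine_neighborhoods(
    neighborhoods: Dict[int, Set[int]], rule: str = "or"
) -> Set[Tuple[int, int]]:
    """Merge per-node neighbor sets into an undirected edge set.

    rule="or":  keep edge (i, j) if j is selected for i OR i for j.
    rule="and": keep edge (i, j) only if both selections agree.
    """
    if rule not in ("or", "and"):
        raise ValueError("rule must be 'or' or 'and'")
    edges: Set[Tuple[int, int]] = set()
    for i, neighbors in neighborhoods.items():
        for j in neighbors:
            if i == j:
                continue
            mutual = i in neighborhoods.get(j, set())
            if rule == "or" or mutual:
                edges.add((min(i, j), max(i, j)))
    return edges


selected = {0: {1}, 1: {0, 2}, 2: set()}
print(sorted(combine_neighborhoods(selected, "or")))   # [(0, 1), (1, 2)]
print(sorted(combine_neighborhoods(selected, "and")))  # [(0, 1)]
```

The "and" rule gives sparser graphs with fewer false edges, while the "or" rule recovers more edges at the cost of more false positives.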
