Farzad Nozarian

Reliable Student: Addressing Noise in Semi-Supervised 3D Object Detection

Apr 27, 2024

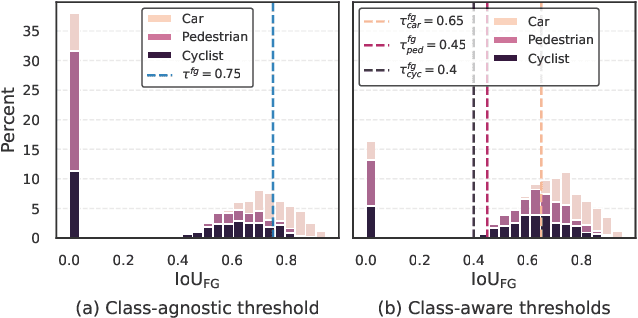

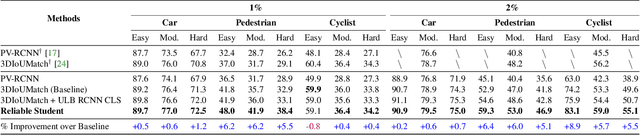

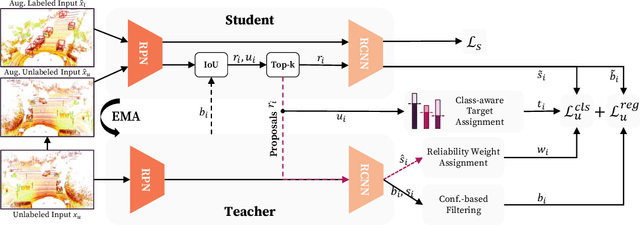

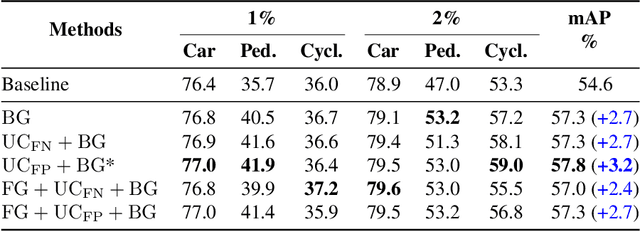

Abstract: Semi-supervised 3D object detection can benefit from the promising pseudo-labeling technique when labeled data is limited. However, recent approaches have overlooked the impact of noisy pseudo-labels during training, despite efforts to enhance pseudo-label quality through confidence-based filtering. In this paper, we examine the impact of noisy pseudo-labels on IoU-based target assignment and propose the Reliable Student framework, which incorporates two complementary approaches to mitigate errors. First, it involves a class-aware target assignment strategy that reduces false negative assignments in difficult classes. Second, it includes a reliability weighting strategy that suppresses false positive assignment errors while also addressing remaining false negatives from the first step. The reliability weights are determined by querying the teacher network for confidence scores of the student-generated proposals. Our work surpasses the previous state-of-the-art on the KITTI 3D object detection benchmark on point clouds in the semi-supervised setting. On 1% labeled data, our approach achieves a 6.2% AP improvement for the pedestrian class, despite having only 37 labeled samples available. The improvements become significant for the 2% setting, achieving 6.0% AP and 5.7% AP improvements for the pedestrian and cyclist classes, respectively.
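The abstract gives no implementation details, so the following is only a minimal sketch of how the two ideas could fit together. The threshold values, the function names, and the specific weighting rule (teacher score for foreground targets, one minus the teacher score for background targets) are all assumptions made for illustration, not the authors' method.

```python
import math

# Hypothetical per-class IoU thresholds (illustrative values, not from the paper).
# Harder classes get a lower bar so fewer true objects are assigned as background.
FG_IOU_THRESHOLDS = {"car": 0.7, "pedestrian": 0.5, "cyclist": 0.5}


def assign_target(iou, cls):
    """Class-aware assignment: 1 (foreground) if the proposal's IoU with a
    pseudo-label reaches the threshold for that class, else 0 (background)."""
    return 1 if iou >= FG_IOU_THRESHOLDS[cls] else 0


def reliability_weight(target, teacher_score):
    """Assumed weighting rule: a foreground target is trusted as much as the
    teacher's confidence in the proposal; a background target is trusted as
    much as the teacher's confidence that nothing is there."""
    return teacher_score if target == 1 else 1.0 - teacher_score


def weighted_bce(student_probs, targets, weights, eps=1e-7):
    """Binary cross-entropy over proposals, scaled per proposal by its
    reliability weight and normalized by the total weight."""
    total = 0.0
    for p, t, w in zip(student_probs, targets, weights):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        total += -w * (t * math.log(p) + (1 - t) * math.log(1.0 - p))
    return total / max(sum(weights), eps)


# Usage: three student proposals, with IoU against pseudo-labels and the
# teacher's confidence score for each proposal (made-up numbers).
ious = [0.55, 0.55, 0.10]
classes = ["pedestrian", "car", "cyclist"]
teacher_scores = [0.9, 0.8, 0.05]
student_probs = [0.7, 0.6, 0.2]

targets = [assign_target(i, c) for i, c in zip(ious, classes)]
weights = [reliability_weight(t, s) for t, s in zip(targets, teacher_scores)]
loss = weighted_bce(student_probs, targets, weights)
```

In this sketch the second proposal is assigned as background because it misses the stricter car threshold, but the teacher's high score for it shrinks its weight, so a likely false negative contributes little to the loss.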

A Safety-Adapted Loss for Pedestrian Detection in Automated Driving

Feb 05, 2024

Abstract: In safety-critical domains like automated driving (AD), errors by the object detector may endanger pedestrians and other vulnerable road users (VRUs). As common evaluation metrics are not an adequate safety indicator, recent works employ approaches to identify safety-critical VRUs and back-annotate the risk to the object detector. However, those approaches do not consider the safety factor in the deep neural network (DNN) training process. Thus, state-of-the-art DNNs penalize all misdetections equally irrespective of their criticality. Consequently, to mitigate the occurrence of critical failure cases, i.e., false negatives, a safety-aware training strategy might be required to enhance the detection performance for critical pedestrians. In this paper, we propose a novel safety-aware loss variation that leverages the estimated per-pedestrian criticality scores during training. We exploit the reachability set-based time-to-collision (TTC-RSB) metric from the motion domain along with distance information to account for the worst-case threat quantifying the criticality. Our evaluation results using RetinaNet and FCOS on the nuScenes dataset demonstrate that training the models with our safety-aware loss function mitigates the misdetection of critical pedestrians without sacrificing performance for the general case, i.e., pedestrians outside the safety-critical zone.
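The abstract does not state the form of the loss, so the sketch below shows just one plausible way a per-pedestrian criticality score could enter training. It scales the standard focal loss (the classification loss commonly used with RetinaNet and FCOS) by a factor that grows with criticality. The scaling rule `1 + lam * criticality`, the parameter `lam`, and the assumption that criticality is already normalized to [0, 1] are hypothetical; computing the score from TTC-RSB and distance is not shown.

```python
import math


def focal_loss(p, target, alpha=0.25, gamma=2.0, eps=1e-7):
    """Standard binary focal loss for one prediction.
    p is the predicted foreground probability, target is 0 or 1."""
    p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
    p_t = p if target == 1 else 1.0 - p
    alpha_t = alpha if target == 1 else 1.0 - alpha
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


def safety_weighted_focal_loss(p, target, criticality, lam=1.0):
    """Assumed safety-aware variant: a missed pedestrian costs more the more
    critical it is. criticality is assumed to lie in [0, 1]; background
    predictions (target 0) keep the unmodified focal loss."""
    weight = 1.0 + lam * criticality if target == 1 else 1.0
    return weight * focal_loss(p, target)


# Usage: the same weak detection (p = 0.3) of a pedestrian far from the
# vehicle and of one in the safety-critical zone (made-up scores).
far_loss = safety_weighted_focal_loss(0.3, 1, criticality=0.0)
near_loss = safety_weighted_focal_loss(0.3, 1, criticality=1.0)
```

With `lam=1.0`, the critical pedestrian's loss is exactly twice that of the non-critical one, while the non-critical case reduces to the plain focal loss, which is consistent with the abstract's goal of not sacrificing performance outside the safety-critical zone.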
