Cheng Yao

HypomimiaCoach: An AU-based Digital Therapy System for Hypomimia Detection & Rehabilitation with Parkinson's Disease

Oct 13, 2024

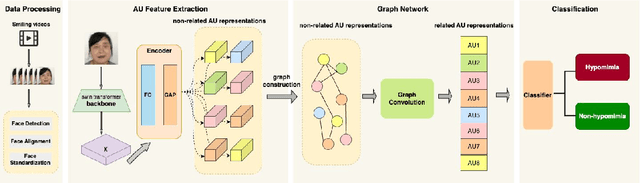

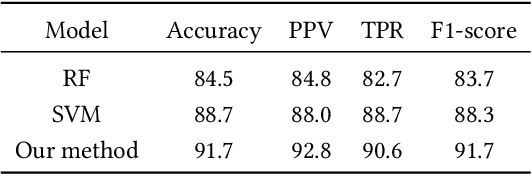

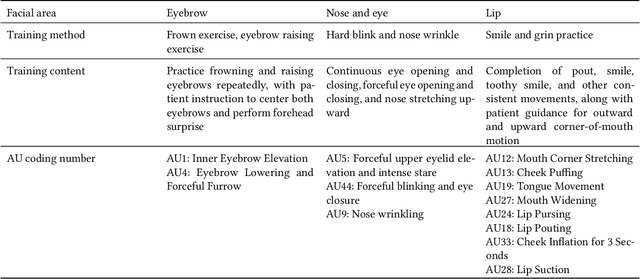

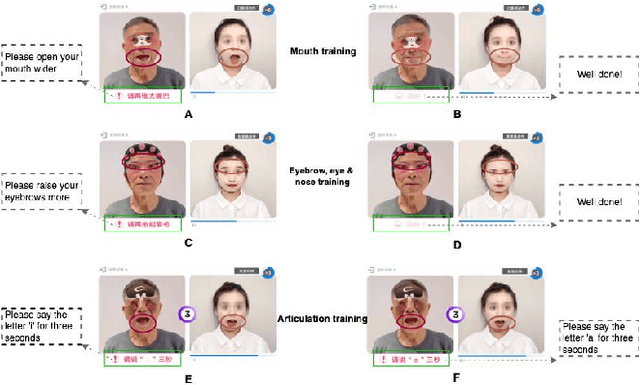

Abstract: Hypomimia is a non-motor symptom of Parkinson's disease that manifests as delayed facial movements and expressions, along with challenges in articulation and emotion. Currently, subjective evaluation by neurologists is the primary method for hypomimia detection, and conventional rehabilitation approaches rely heavily on verbal prompts from rehabilitation physicians. Accessible, user-friendly and scientifically rigorous assistive tools for hypomimia treatment are still lacking. To address this gap, we developed HypomimiaCoach, an Action Unit (AU)-based digital therapy system for hypomimia detection and rehabilitation in Parkinson's disease. The HypomimiaCoach system was designed to facilitate engagement by incorporating both relaxed and controlled rehabilitation exercises, while also stimulating initiative by integrating digital therapies built on traditional face training methods. We extract AU features and their relationships for hypomimia detection. To facilitate rehabilitation, a series of training programmes has been devised based on the AUs, and patients receive real-time feedback through an additional AU recognition model, which guides them through their training routines. A pilot study was conducted with seven participants in China, all of whom exhibited symptoms of Parkinson's disease hypomimia. The results of the pilot study demonstrated a positive impact on participants' self-efficacy, with favourable feedback received. Furthermore, physician evaluations validated the system's applicability in a therapeutic setting for patients with Parkinson's disease, as well as its potential value in clinical applications.

Prompt Tuning for Multi-View Graph Contrastive Learning

Oct 16, 2023

Abstract: In recent years, "pre-training and fine-tuning" has emerged as a promising approach to addressing the label dependency and poor generalization performance of traditional GNNs. To reduce labeling requirements, the "pre-train, fine-tune" and "pre-train, prompt" paradigms have become increasingly common. In particular, prompt tuning is a popular alternative to "pre-training and fine-tuning" in natural language processing, designed to narrow the gap between pre-training and downstream objectives. However, existing studies of prompting on graphs are still limited, lacking a framework that can accommodate commonly used graph pre-training methods and downstream tasks. In this paper, we propose a multi-view graph contrastive learning method as the pretext task and design a prompt tuning method for it. Specifically, we first reformulate graph pre-training and downstream tasks into a common format. Second, we construct multi-view contrasts to capture relevant information of graphs with a GNN. Third, we design a prompt tuning method for our multi-view graph contrastive learning method to bridge the gap between pretext and downstream tasks. Finally, we conduct extensive experiments on benchmark datasets to evaluate and analyze our proposed method.
