Changqing Liu

A general reduced-order neural operator for spatio-temporal predictive learning on complex spatial domains

Sep 09, 2024

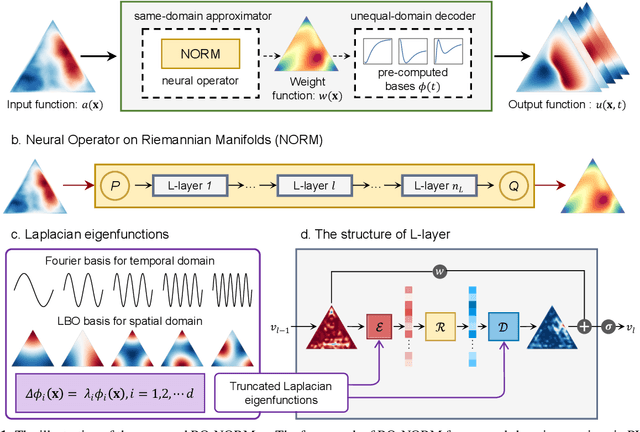

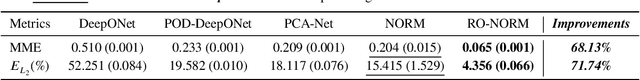

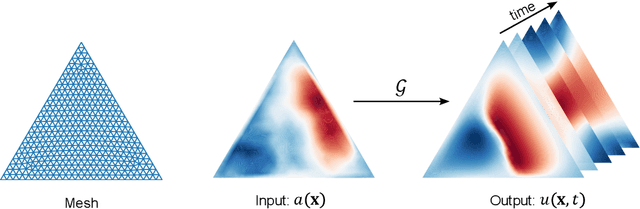

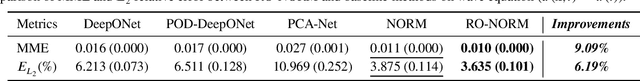

Abstract: Predictive learning for spatio-temporal processes (PL-STP) on complex spatial domains plays a critical role in various scientific and engineering fields; at its core, it is the construction of operators between infinite-dimensional function spaces. This paper focuses on the unequal-domain mappings in PL-STP and categorises them into increase-domain and decrease-domain mappings. Recent advances in deep learning have revealed the great potential of neural operators (NOs) to learn operators directly from observational data. However, existing NOs require the input and output spaces to be defined on the same domain, which makes it difficult to ensure predictive accuracy and stability for unequal-domain mappings. To this end, this study presents a general reduced-order neural operator named Reduced-Order Neural Operator on Riemannian Manifolds (RO-NORM), which consists of two parts: the unequal-domain encoder/decoder and the same-domain approximator. Motivated by the variable separation in classical modal decomposition, the unequal-domain encoder/decoder uses pre-computed bases to reformulate the spatio-temporal function as a sum of products between spatial (or temporal) bases and the corresponding temporally (or spatially) distributed weight functions. The original unequal-domain mapping is thereby converted into a same-domain mapping, and the same-domain approximator NORM is then applied to model the transformed mapping. The proposed method has been evaluated on six benchmark cases, including parametric PDEs and engineering and biomedical applications, and compared with four baseline algorithms: DeepONet, POD-DeepONet, PCA-Net, and vanilla NORM. The experimental results demonstrate the superiority of RO-NORM in prediction accuracy and training efficiency for PL-STP.
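The variable-separation step can be illustrated with a minimal sketch, u(x, t) ≈ Σ_k φ_k(x) a_k(t). The abstract only says "pre-computed bases" motivated by classical modal decomposition. As an assumption, the sketch uses POD modes taken from a truncated SVD of a snapshot matrix. The function names `pod_bases`, `encode`, `decode` and the toy field are hypothetical and are not the authors' code. Suppose the output is a space-time field but the input depends on time only. Then the r weight functions a_k(t) live on the same time domain as the input, which is the kind of same-domain mapping a neural operator can then learn.

```python
import numpy as np

def pod_bases(snapshots: np.ndarray, r: int) -> np.ndarray:
    """First r spatial modes of a (n_space, n_time) snapshot matrix.

    Assumption: POD via thin SVD stands in for the paper's pre-computed bases.
    """
    # snapshots = U @ diag(s) @ Vt; the columns of U are orthonormal spatial modes.
    U, _, _ = np.linalg.svd(snapshots, full_matrices=False)
    return U[:, :r]

def encode(field: np.ndarray, bases: np.ndarray) -> np.ndarray:
    """Project u(x, t) onto the spatial modes -> temporal weights a_k(t), shape (r, n_time)."""
    return bases.T @ field

def decode(weights: np.ndarray, bases: np.ndarray) -> np.ndarray:
    """Rebuild u(x, t) as sum_k phi_k(x) * a_k(t)."""
    return bases @ weights

# Hypothetical toy field that is exactly rank 2, so two modes reconstruct it.
x = np.linspace(0.0, 1.0, 101)
t = np.linspace(0.0, 2.0 * np.pi, 64)
X, T = np.meshgrid(x, t, indexing="ij")  # shape (101, 64): space x time
u = np.sin(np.pi * X) * np.cos(T) + np.sin(2 * np.pi * X) * np.sin(2 * T)

phi = pod_bases(u, r=2)   # spatial bases, shape (101, 2)
a = encode(u, phi)        # temporal weights, shape (2, 64)
u_hat = decode(a, phi)    # reconstructed field, shape (101, 64)

print(a.shape)                             # (2, 64)
print(np.max(np.abs(u - u_hat)) < 1e-10)   # True
```

For real data the modes would be built from many training snapshots, and r would be chosen from the decay of the singular values rather than known in advance.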

Diffeomorphism Neural Operator for various domains and parameters of partial differential equations

Feb 19, 2024

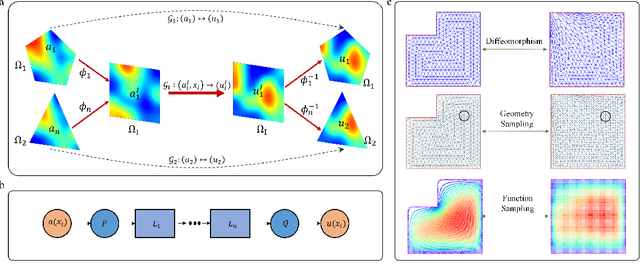

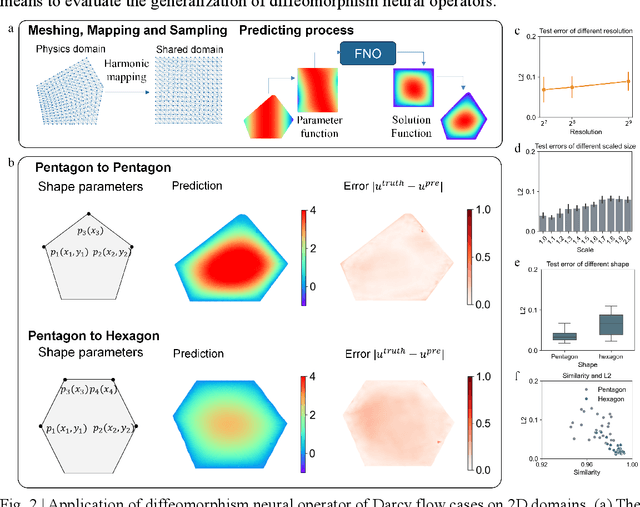

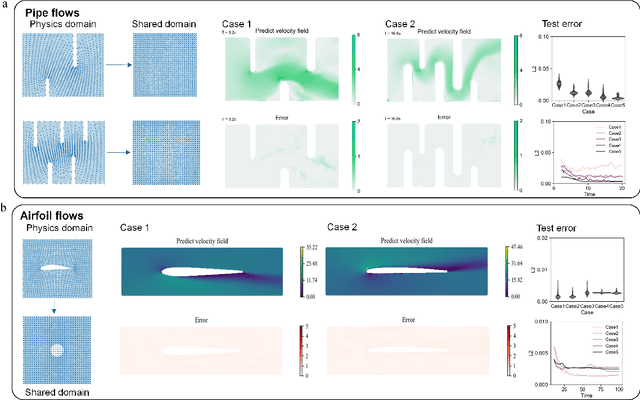

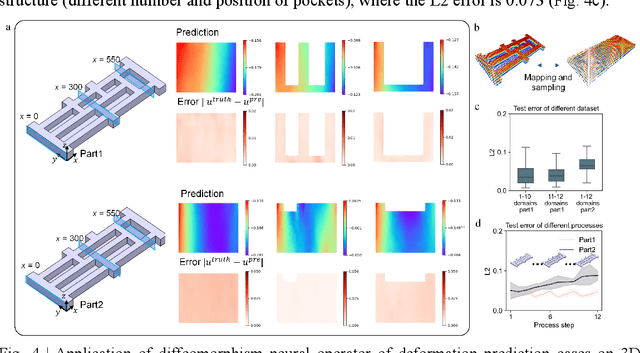

Abstract: Many science and engineering applications demand evaluations of partial differential equations (PDEs) that are traditionally computed with resource-intensive numerical solvers. Neural operator models provide an efficient alternative by learning the governing physical laws directly from data for a class of PDEs with different parameters, but they are constrained to a fixed boundary (domain). Many applications, such as design and manufacturing, would benefit from neural operators with flexible domains when studied at scale. Here we present a diffeomorphism neural operator learning framework for developing domain-flexible models of physical systems with various and complex domains. Specifically, we propose a neural operator trained on a shared domain, onto which the various domains of the fields are mapped by diffeomorphism; this transforms the problem of learning function mappings on varying domains (spaces) into the problem of learning operators on a shared diffeomorphic domain. In addition, an index based on domain diffeomorphism similarity is provided to evaluate how well diffeomorphism neural operators generalize across domains. Experiments on static scenarios (Darcy flow, mechanics) and dynamic scenarios (pipe flow, airfoil flow), where harmonic and volume parameterizations serve as the diffeomorphisms for 2D and 3D domains respectively, demonstrate the advantages of our approach for neural operator learning under various domains. Our diffeomorphism neural operator approach enables strong learning capability and robust generalization across varying domains and parameters.
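The central idea, training one operator on a shared reference domain, can be illustrated with a minimal sketch. Fields defined on differently shaped physical domains are pulled back through a diffeomorphism onto a common grid, so they become same-shape tensors that a single neural operator could consume. As an assumption for illustration, a closed-form polar map from the unit square onto a quarter annulus stands in for the harmonic parameterization used in the paper, which would normally be computed numerically. Its Jacobian determinant, (π/2)(r_out − r_in)·r, is positive whenever 0 < r_in < r_out. `quarter_annulus_map`, `pull_back` and the test field are hypothetical.

```python
import numpy as np

def quarter_annulus_map(xi, eta, r_in, r_out):
    """Hypothetical diffeomorphism: reference square [0,1]^2 -> quarter annulus.

    Assumption: a closed-form polar map, not the paper's harmonic parameterization.
    """
    r = r_in + xi * (r_out - r_in)   # radial coordinate from xi
    theta = 0.5 * np.pi * eta        # angle in [0, pi/2] from eta
    return r * np.cos(theta), r * np.sin(theta)

def pull_back(field, r_in, r_out, n=32):
    """Sample a physical-domain field u(x, y) on a shared n x n reference grid (u o phi)."""
    s = np.linspace(0.0, 1.0, n)
    xi, eta = np.meshgrid(s, s, indexing="ij")
    x, y = quarter_annulus_map(xi, eta, r_in, r_out)
    return field(x, y)

def u(x, y):
    """Hypothetical test field u = x^2 + y^2, i.e. r^2 in polar form."""
    return x**2 + y**2

# Two different physical domains yield tensors of identical shape on the reference grid.
u_a = pull_back(u, r_in=0.5, r_out=1.0)
u_b = pull_back(u, r_in=1.0, r_out=3.0)
print(u_a.shape, u_b.shape)  # (32, 32) (32, 32)
```

Because every sample lands on the same reference grid, the domain-specific geometry is carried by the map rather than by the tensor shape. This is the property that lets one operator be trained across domains in this simplified setting.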

Defining Concepts of Emotion: From Philosophy to Science

Feb 11, 2016

Abstract: This paper is motivated by a series of related questions: whether a computer can have pleasure and pain, what pleasure (and the intensity of pleasure) is, and, ultimately, what the concepts of emotion are. Determining what an emotion is comes down to conceptualization, namely understanding and explicitly encoding the concept of emotion as people use it in everyday life. This is a notoriously difficult problem (Frijda, 1986; Fehr & Russell, 1984). This paper first shows why the problem is difficult by aligning it with the conceptualization of a few other so-called semantic primitives such as "EXIST", "FORCE", "BIG" (plus "LIMIT"). The definitions of these thought-to-be-indefinable concepts, given in this paper, show what formal definitions of concepts look like and how concepts are constructed. As a by-product, owing to the explicit account of the meaning of "exist", the famous dispute between Einstein and Bohr is naturally resolved from a linguistic point of view. Secondly, defending Frijda's view that emotion is action tendency (or Ryle's behavioral disposition, i.e., propensity), we give a list of emotions defined in terms of action tendency. In particular, the definitions of pleasure and of the feeling of beauty are presented. Further, we give a formal definition of "action tendency", from which the concept of the "intensity" of emotions (including pleasure) is naturally derived in a formal fashion. The meanings of "wish", "wait", "good" and "hot" are also analyzed.
