A general reduced-order neural operator for spatio-temporal predictive learning on complex spatial domains


Sep 09, 2024

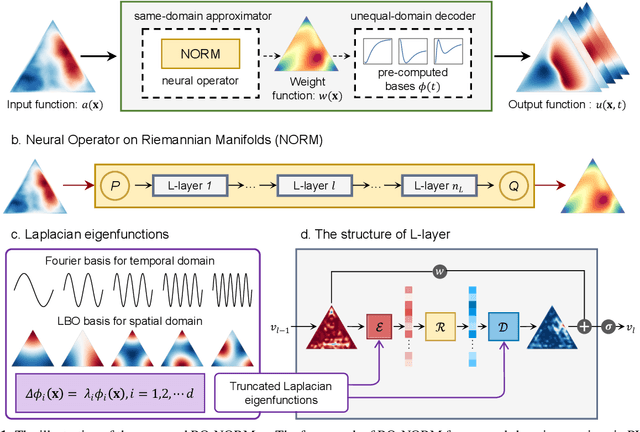

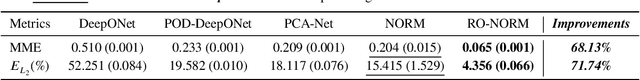

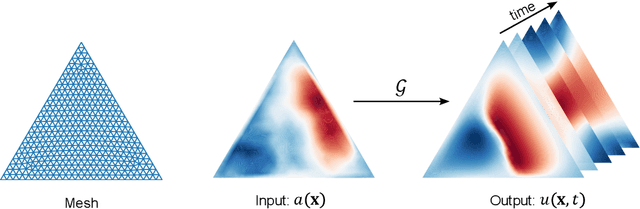

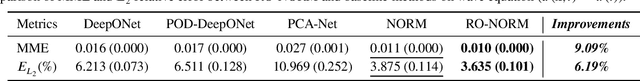

Predictive learning for spatio-temporal processes (PL-STP) on complex spatial domains plays a critical role in various scientific and engineering fields, with its essence being the construction of operators between infinite-dimensional function spaces. This paper focuses on unequal-domain mappings in PL-STP and categorises them into increase-domain and decrease-domain mappings. Recent advances in deep learning have revealed the great potential of neural operators (NOs) to learn operators directly from observational data. However, existing NOs require the input and output function spaces to be defined on the same domain, which poses challenges in ensuring predictive accuracy and stability for unequal-domain mappings. To this end, this study presents a general reduced-order neural operator named Reduced-Order Neural Operator on Riemannian Manifolds (RO-NORM), which consists of two parts: the unequal-domain encoder/decoder and the same-domain approximator. Motivated by the variable separation in classical modal decomposition, the unequal-domain encoder/decoder uses pre-computed bases to reformulate the spatio-temporal function as a sum of products between spatial (or temporal) bases and the corresponding temporally (or spatially) distributed weight functions, so that the original unequal-domain mapping is converted into a same-domain mapping. The same-domain approximator NORM is then applied to model the transformed mapping. The proposed method is evaluated on six benchmark cases, including parametric PDEs and engineering and biomedical applications, and compared with four baseline algorithms: DeepONet, POD-DeepONet, PCA-Net, and vanilla NORM. The experimental results demonstrate the superiority of RO-NORM in prediction accuracy and training efficiency for PL-STP.
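The variable-separation idea behind the encoder/decoder can be illustrated with a minimal NumPy sketch. It is not the RO-NORM implementation: the abstract only says the bases are "pre-computed", so the SVD-based (POD-style) spatial bases, the synthetic data, and the helper names `spatial_bases`, `encode`, and `decode` are all assumptions made for illustration. The sketch shows how a field sampled on an irregular point set and a time grid can be rewritten as spatial bases multiplied by weight functions that live only on the temporal domain.

```python
import numpy as np


def spatial_bases(snapshots, rank):
    """Hypothetical helper: POD-style spatial bases via SVD (an assumption).

    snapshots: array of shape (n_samples, n_points, n_steps).
    Returns an (n_points, rank) matrix with orthonormal columns.
    """
    n_samples, n_points, n_steps = snapshots.shape
    # Treat every time slice of every sample as one column of a snapshot matrix.
    matrix = snapshots.transpose(1, 0, 2).reshape(n_points, n_samples * n_steps)
    left, _, _ = np.linalg.svd(matrix, full_matrices=False)
    return left[:, :rank]


def encode(field, bases):
    """Project a (n_points, n_steps) field onto the bases.

    The result has shape (rank, n_steps): one temporal weight function per basis.
    """
    return bases.T @ field


def decode(weights, bases):
    """Rebuild the field as a sum of products: spatial basis times temporal weight."""
    return bases @ weights


# Synthetic data: three spatial modes on an irregular 1-D point set (illustrative only).
rng = np.random.default_rng(0)
x = np.sort(rng.uniform(0.0, 1.0, size=50))   # irregular spatial samples
t = np.linspace(0.0, 2.0, 40)                 # temporal grid
modes = np.stack([np.sin(k * np.pi * x) for k in (1, 2, 3)], axis=1)  # (50, 3)


def sample_field():
    """Draw one separable field u(x, t) = sum_k modes_k(x) * a_k(t)."""
    amplitudes = rng.normal(size=3)
    temporal = np.stack([a * np.cos(k * t) for a, k in zip(amplitudes, (1, 2, 3))])
    return modes @ temporal                   # (50, 40)


train = np.stack([sample_field() for _ in range(20)])  # (20, 50, 40)
bases = spatial_bases(train, rank=3)

test_field = sample_field()
weights = encode(test_field, bases)           # functions on the temporal domain only
reconstruction = decode(weights, bases)
rel_err = np.linalg.norm(reconstruction - test_field) / np.linalg.norm(test_field)
print(f"relative reconstruction error: {rel_err:.2e}")
```

In this sketch, `weights` depends only on time, so a downstream model would map between functions on a single shared domain. That is the role the abstract assigns to the same-domain approximator, although the network itself is not reproduced here.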
