Changfeng Yu

Unsupervised Deraining: Where Asymmetric Contrastive Learning Meets Self-similarity

Nov 02, 2022

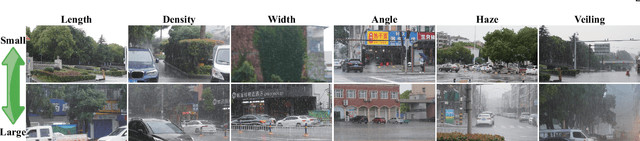

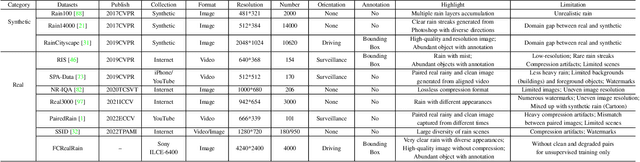

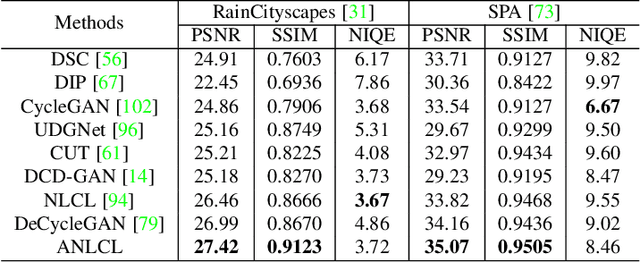

Abstract: Most existing learning-based deraining methods are trained in a supervised manner on synthetic rainy-clean pairs. The domain gap between synthetic and real rain makes them generalize poorly to complex real rainy scenes. Moreover, existing methods mainly exploit the properties of the image layer or the rain layer independently, while few have considered their mutually exclusive relationship. To address this problem, we explore the intrinsic intra-similarity within each layer and the inter-exclusiveness between the two layers, and propose an unsupervised non-local contrastive learning (NLCL) deraining method. The non-local self-similar image patches, as the positives, are tightly pulled together, while the rain patches, as the negatives, are pushed far away, and vice versa. On one hand, the intrinsic self-similarity within the positive/negative samples of each layer helps us discover a more compact representation; on the other hand, the mutually exclusive property between the two layers enriches the discriminative decomposition. Thus, the internal self-similarity within each layer (similarity) and the external exclusive relationship between the two layers (dissimilarity) jointly serve as a generic image prior that lets us separate the rain from the clean image without supervision. We further discover that the intrinsic dimension of the non-local image patches is generally higher than that of the rain patches. This motivates us to design an asymmetric contrastive loss that precisely models the compactness discrepancy between the two layers for better discriminative decomposition. In addition, considering that existing real rain datasets are of low quality, being either small in scale or downloaded from the internet, we collect a large-scale real dataset of high-resolution rainy images captured under various kinds of rainy weather.
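The pull/push idea in this abstract can be illustrated with a generic InfoNCE-style loss. The following is a minimal sketch, not the paper's actual loss: the abstract gives no formula, so the function names (`info_nce`, `asymmetric_contrastive_loss`), the use of the first patch as the anchor, and the choice of modelling the asymmetry with two different temperatures (`tau_image`, `tau_rain`) are all assumptions made for illustration.

```python
import numpy as np

def l2_normalize(x, eps=1e-8):
    """Scale each feature vector to unit length."""
    return x / (np.linalg.norm(x, axis=-1, keepdims=True) + eps)

def info_nce(anchor, positives, negatives, temperature):
    """Generic InfoNCE loss for one anchor, averaged over its positives."""
    a = l2_normalize(anchor)
    pos = l2_normalize(positives) @ a / temperature  # shape (P,)
    neg = l2_normalize(negatives) @ a / temperature  # shape (N,)
    # Numerically stable log-sum-exp over the negative logits.
    m = neg.max()
    lse_neg = m + np.log(np.exp(neg - m).sum())
    # -log(exp(pos) / (exp(pos) + sum(exp(neg)))) for each positive.
    return float(np.mean(np.logaddexp(pos, lse_neg) - pos))

def asymmetric_contrastive_loss(image_feats, rain_feats,
                                tau_image=0.5, tau_rain=0.1):
    """Hypothetical asymmetric loss: each layer is contrasted against the
    other, with a different temperature per layer (an assumption, not the
    paper's formulation)."""
    # Image patches are positives for each other; rain patches are negatives.
    image_term = info_nce(image_feats[0], image_feats[1:], rain_feats, tau_image)
    # "Vice versa": rain patches are positives; image patches are negatives.
    rain_term = info_nce(rain_feats[0], rain_feats[1:], image_feats, tau_rain)
    return image_term + rain_term
```

With patch features of shape `(num_patches, feature_dim)` for each layer, the loss is low when the two layers form separate clusters and rises when patches from the two layers are mixed together.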

Unsupervised Image Deraining: Optimization Model Driven Deep CNN

Mar 25, 2022

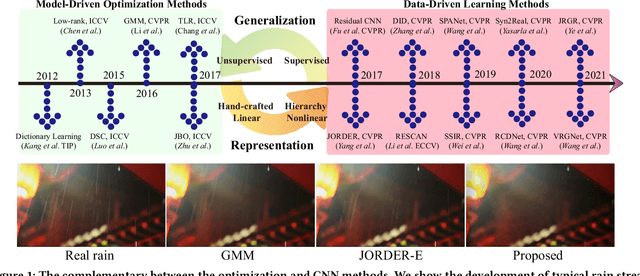

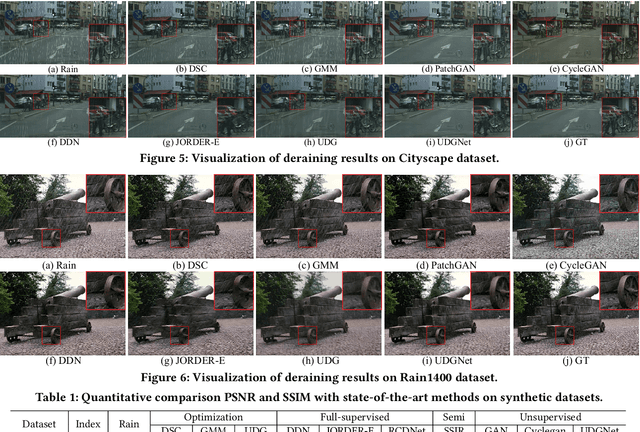

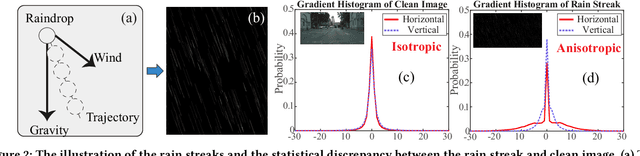

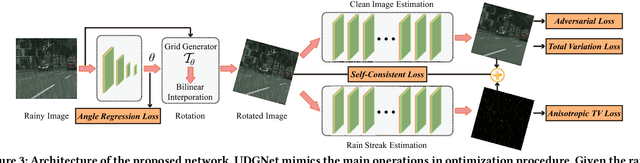

Abstract: Deep convolutional neural networks have achieved significant progress in single-image rain streak removal. However, most data-driven learning methods are fully supervised or semi-supervised and suffer significant performance drops when dealing with real rain. These data-driven methods have strong representation ability yet generalize poorly to real rain. The opposite holds true for model-driven unsupervised optimization methods. To overcome these problems, we propose a unified unsupervised learning framework that inherits the merits of both generalization and representation for real rain removal. Specifically, we first identify a simple yet important piece of domain knowledge: the directional rain streak is anisotropic while the natural clean image is isotropic. We formulate this structural discrepancy into the energy function of the optimization model. Consequently, we design an optimization model-driven deep CNN in which the unsupervised loss function of the optimization model is enforced on the proposed network for better generalization. In addition, the architecture of the network mimics the main role of the optimization model while providing better feature representation. On one hand, we take advantage of the deep network to improve the representation. On the other hand, we utilize the unsupervised loss of the optimization model for better generalization. Overall, the unsupervised learning framework achieves good generalization and representation: unsupervised training (loss) with only a few real rainy images (input) and a physically meaningful network (architecture). Extensive experiments on synthetic and real-world rain datasets show the superiority of the proposed method.
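The anisotropic-versus-isotropic observation in this abstract can be made concrete by comparing gradient magnitudes along two directions. The sketch below is an illustration only and is not the energy function used in the paper, which the abstract does not spell out. The names `directional_gradient_energy` and `anisotropy_score` are hypothetical, and the code assumes a 2-D grayscale image and axis-aligned streaks, whereas real rain is usually slanted.

```python
import numpy as np

def directional_gradient_energy(img):
    """Mean absolute finite difference along x (columns) and y (rows)."""
    gx = np.abs(np.diff(img, axis=1)).mean()  # change across columns
    gy = np.abs(np.diff(img, axis=0)).mean()  # change across rows
    return gx, gy

def anisotropy_score(img, eps=1e-8):
    """Score in [0, 1]: near 0 when the gradients are balanced (isotropic),
    near 1 when one direction dominates (anisotropic)."""
    gx, gy = directional_gradient_energy(img)
    return abs(gx - gy) / (gx + gy + eps)
```

A layer of purely vertical streaks varies only across columns, so its score is close to 1, while an unstructured texture has similar gradients in both directions and scores close to 0.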
