Chae-Bong Sohn

Dynamic Joint Scheduling of Anycast Transmission and Modulation in Hybrid Unicast-Multicast SWIPT-Based IoT Sensor Networks

Jul 17, 2023

Abstract: The separate receiver architecture with a time- or power-splitting mode, widely used for simultaneous wireless information and power transfer (SWIPT), has a major drawback: energy-intensive local oscillators and mixers must be installed in the information decoding (ID) component to downconvert radio frequency (RF) signals to baseband signals, resulting in high energy consumption. To address this challenge, an integrated receiver (IR) architecture has been proposed, and various SWIPT modulation schemes compatible with the IR architecture have subsequently been developed. However, to the best of our knowledge, no research has addressed modulation scheduling in SWIPT-based IoT sensor networks that takes the IR architecture into account. In this paper, we fill this gap by studying the problem of joint scheduling of unicast/multicast, IoT sensor, and modulation (UMSM) in a time-slotted SWIPT-based IoT sensor network system. To this end, we leverage mathematical modeling and optimization techniques, such as Lagrangian duality and stochastic optimization theory, to develop a UMSM scheduling algorithm that maximizes the weighted sum of the average unicast service throughput and the harvested energy of IoT sensors, while guaranteeing minimum average throughput for both multicast and unicast and minimum average harvested energy for the IoT sensors. Finally, extensive simulations demonstrate that our UMSM scheduling algorithm achieves superior energy harvesting (EH) and throughput performance while satisfying the specified constraints.
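
The abstract combines Lagrangian duality with stochastic optimization to maximize a weighted average objective under minimum-average constraints. The sketch below is NOT the authors' UMSM algorithm; it is a generic stochastic dual-subgradient scheduler, assuming a hypothetical `Option` per slot (standing in for one unicast/multicast, sensor and modulation choice) with made-up throughput and energy values. Each slot it picks the option that maximizes the per-slot Lagrangian, then updates one multiplier per average constraint.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple


@dataclass(frozen=True)
class Option:
    """Hypothetical per-slot choice (e.g., a sensor/modulation pairing)."""
    unicast: float    # unicast throughput if this option is chosen
    multicast: float  # multicast throughput if this option is chosen
    energy: float     # energy harvested by the sensors if this option is chosen


def dual_schedule(
    num_slots: int,
    options_at: Callable[[int], Sequence[Option]],
    w_u: float,
    w_e: float,
    minimums: Tuple[float, float, float],  # (min unicast, min multicast, min energy)
    step: float = 0.05,
) -> Tuple[List[float], List[float]]:
    lam = [0.0, 0.0, 0.0]     # Lagrange multipliers, one per average constraint
    totals = [0.0, 0.0, 0.0]  # running sums of (unicast, multicast, energy)

    def lagrangian(o: Option) -> float:
        # Weighted objective plus multiplier-weighted constraint terms
        # (the constant -lam*minimum terms do not affect the argmax).
        g = (o.unicast, o.multicast, o.energy)
        return w_u * o.unicast + w_e * o.energy + sum(l * x for l, x in zip(lam, g))

    for t in range(num_slots):
        best = max(options_at(t), key=lagrangian)
        g = (best.unicast, best.multicast, best.energy)
        for k in range(3):
            totals[k] += g[k]
            # Projected subgradient step: a multiplier grows while its
            # constraint is violated in this slot and decays toward 0 otherwise.
            lam[k] = max(0.0, lam[k] + step * (minimums[k] - g[k]))

    averages = [x / num_slots for x in totals]
    return averages, lam


# Toy usage with two fixed, hypothetical options per slot.
A = Option(unicast=1.0, multicast=0.0, energy=0.2)
B = Option(unicast=0.0, multicast=1.0, energy=0.5)
avg, lam = dual_schedule(5000, lambda t: [A, B], w_u=1.0, w_e=1.0,
                         minimums=(0.2, 0.3, 0.1))
```

Without constraints the scheduler would always pick `A` (its weighted score is higher), so multicast would get nothing; with a 0.3 multicast floor, the multicast multiplier rises until `B` is chosen in roughly 30% of slots, so the long-run multicast average ends up close to that floor.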

DeepSoCS: A Neural Scheduler for Heterogeneous System-on-Chip (SoC) Resource Scheduling

Jun 05, 2020

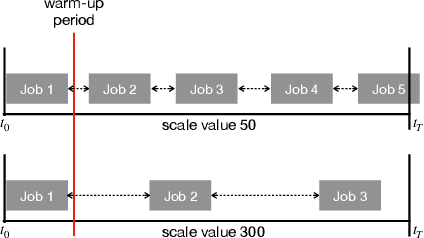

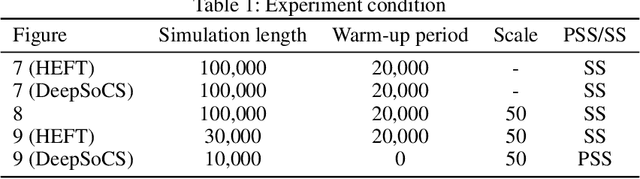

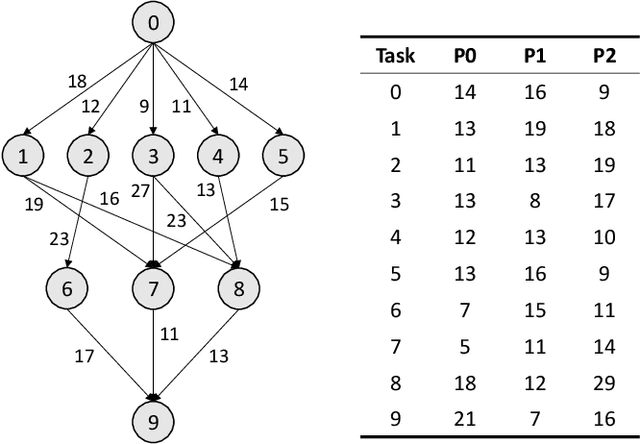

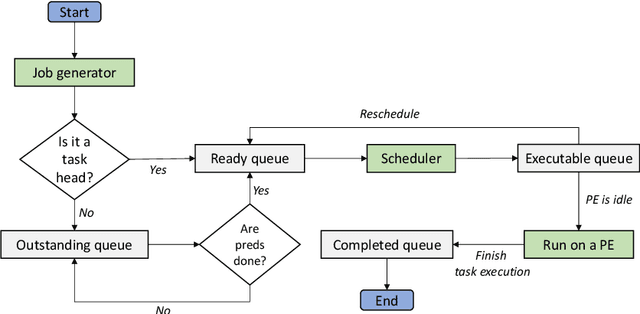

Abstract: In this paper, we present a novel scheduling solution for a class of System-on-Chip (SoC) systems in which heterogeneous chip resources (DSP, FPGA, GPU, etc.) must be efficiently scheduled for continuously arriving hierarchical jobs whose tasks are represented by a directed acyclic graph. Traditionally, heuristic algorithms have been widely used in many resource scheduling domains, and Heterogeneous Earliest Finish Time (HEFT) has been the dominant state-of-the-art technique across a broad range of heterogeneous resource scheduling domains for many years. Despite their long-standing popularity, HEFT-like algorithms are known to be vulnerable to even small amounts of noise in the environment. Our Deep Reinforcement Learning (DRL)-based SoC Scheduler (DeepSoCS), capable of learning the "best" task ordering under dynamic environment changes, overcomes the brittleness of rule-based schedulers such as HEFT and achieves significantly higher performance across different types of jobs. We describe the DeepSoCS design process using a real-time heterogeneous SoC scheduling emulator, discuss major challenges, and present two novel neural network design features that allow it to outperform HEFT: (i) hierarchical job- and task-graph embedding; and (ii) efficient use of real-time task information in the state space. Furthermore, we introduce effective techniques to address two fundamental challenges present in our environment: delayed consequences and joint actions. Through an extensive simulation study, we show that DeepSoCS achieves significantly better job execution time than HEFT, with greater robustness under realistic noise conditions. We conclude with a discussion of potential improvements to the DeepSoCS neural scheduler.
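
For reference, the HEFT baseline the abstract compares against ranks each task by its "upward rank" (average execution cost plus the longest average path to an exit task) and then assigns tasks, in descending rank order, to the processor giving the earliest finish time. The sketch below is a simplified HEFT, assuming positive costs, a static DAG, and end-of-queue placement (the original HEFT uses an insertion-based policy that can also fill idle gaps). The function name `heft` and the `costs`/`comm`/`succ` layouts are illustrative choices, not DeepSoCS code.

```python
from functools import lru_cache
from typing import Dict, List, Tuple


def heft(costs: List[List[float]],
         comm: Dict[Tuple[int, int], float],
         succ: Dict[int, List[int]]):
    """costs[t][p]: run time of task t on processor p (assumed > 0).
    comm[(i, j)]: transfer time from i to j when on different processors.
    succ[t]: successors of task t in the DAG."""
    n, num_procs = len(costs), len(costs[0])
    preds: Dict[int, List[int]] = {t: [] for t in range(n)}
    for i in range(n):
        for j in succ.get(i, []):
            preds[j].append(i)

    @lru_cache(maxsize=None)
    def rank_u(t: int) -> float:
        # Upward rank: mean cost + longest (comm + rank) path to an exit task.
        avg = sum(costs[t]) / num_procs
        return avg + max((comm[(t, s)] + rank_u(s) for s in succ.get(t, [])),
                         default=0.0)

    # With positive costs, descending upward rank is a valid topological order.
    order = sorted(range(n), key=rank_u, reverse=True)
    proc_free = [0.0] * num_procs  # time at which each processor becomes idle
    finish: Dict[int, float] = {}
    placed: Dict[int, int] = {}
    for t in order:
        best = None
        for p in range(num_procs):
            # Data from a predecessor on another processor pays the comm cost.
            ready = max((finish[i] + (0.0 if placed[i] == p else comm[(i, t)])
                         for i in preds[t]), default=0.0)
            eft = max(ready, proc_free[p]) + costs[t][p]
            if best is None or eft < best[0]:
                best = (eft, p)
        eft, p = best
        finish[t], placed[t], proc_free[p] = eft, p, eft
    return placed, finish, max(finish.values())


# Toy DAG: task 0 feeds tasks 1 and 2; two processors.
placed, finish, makespan = heft(
    costs=[[2, 3], [3, 2], [4, 4]],
    comm={(0, 1): 1, (0, 2): 1},
    succ={0: [1, 2]},
)
```

Because the rank order and tie-breaking rules are fixed in advance, a small perturbation of the costs can flip processor choices, which is the kind of brittleness the abstract attributes to HEFT-like schedulers.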

Deep Multi-Agent Reinforcement Learning with Relevance Graphs

Nov 30, 2018

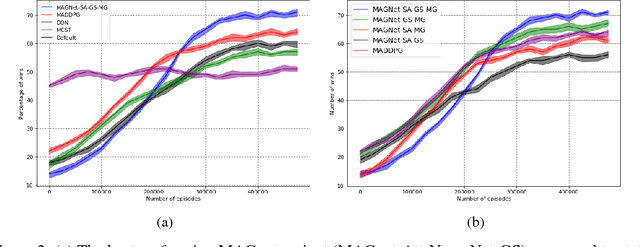

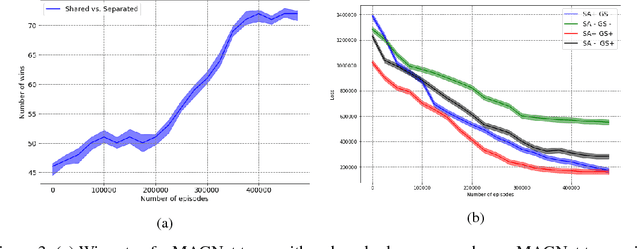

Abstract: Over recent years, deep reinforcement learning has shown strong successes in complex single-agent tasks, and more recently this approach has also been applied to multi-agent domains. In this paper, we propose a novel approach, called MAGnet, to multi-agent reinforcement learning (MARL) that utilizes a relevance graph representation of the environment obtained by a self-attention mechanism, and a message-generation technique inspired by the NerveNet architecture. We applied our MAGnet approach to the Pommerman game and the results show that it significantly outperforms state-of-the-art MARL solutions, including DQN, MADDPG, and MCTS.
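
The abstract states that MAGnet's relevance graph is obtained with a self-attention mechanism. As a rough illustration only, the sketch below computes a generic scaled dot-product attention matrix over entity feature vectors, where row i gives how relevant each entity is to entity i. The projection matrices `Wq` and `Wk` and the function `relevance_graph` are hypothetical; MAGnet's actual graph construction and message generation may differ.

```python
import math
from typing import List

Matrix = List[List[float]]


def matvec(W: Matrix, x: List[float]) -> List[float]:
    # Plain matrix-vector product.
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def relevance_graph(features: Matrix, Wq: Matrix, Wk: Matrix) -> Matrix:
    """Row i is a softmax over j of (q_i . k_j) / sqrt(d)."""
    queries = [matvec(Wq, f) for f in features]
    keys = [matvec(Wk, f) for f in features]
    d = len(keys[0])
    graph = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys]
        m = max(scores)  # subtract the max for numerical stability
        exps = [math.exp(s - m) for s in scores]
        z = sum(exps)
        graph.append([e / z for e in exps])
    return graph


# Three entities; entities 0 and 2 have identical features.
identity = [[1.0, 0.0], [0.0, 1.0]]
g = relevance_graph([[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]], identity, identity)
```

Each row sums to 1, so the weights can be read as a soft adjacency matrix; here entity 0 assigns equal relevance to itself and to entity 2, and less to entity 1.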
