Bo Ryu

DeepMPR: Enhancing Opportunistic Routing in Wireless Networks through Multi-Agent Deep Reinforcement Learning

Jun 16, 2023

Abstract: Opportunistic routing relies on the broadcast capability of wireless networks. It brings higher reliability and robustness in highly dynamic and/or severe environments such as mobile or vehicular ad-hoc networks (MANETs/VANETs). To reduce the cost of broadcast, multicast routing schemes use the connected dominating set (CDS) or multi-point relaying (MPR) set to decrease network overhead; hence, their selection algorithms are critical. Common MPR selection algorithms are heuristic, rely on coordination between nodes, need high computational power for large networks, and are difficult to tune for network uncertainties. In this paper, we use multi-agent deep reinforcement learning to design a novel MPR multicast routing technique, DeepMPR, which outperforms the OLSR MPR selection algorithm without requiring MPR announcement messages from the neighbors. Our evaluation results demonstrate the performance gains of our trained DeepMPR multicast forwarding policy compared to other popular techniques.
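For readers unfamiliar with the heuristic baseline, the following is a minimal sketch of a greedy MPR selection in the spirit of the OLSR heuristic. It is not the DeepMPR policy and not a complete OLSR implementation: willingness values and degree-based tie-breaking are omitted, and the function name `greedy_mpr` and its input layout (`one_hop`, `two_hop_via`) are hypothetical choices made for illustration.

```python
def greedy_mpr(one_hop, two_hop_via):
    """Pick a subset of 1-hop neighbors that covers every strict 2-hop node.

    one_hop:      set of 1-hop neighbor ids (hypothetical input layout)
    two_hop_via:  dict mapping a 1-hop neighbor to the set of strict
                  2-hop nodes reachable through it
    """
    uncovered = set().union(*two_hop_via.values())
    mprs = set()

    # Step 1: a neighbor that is the only path to some 2-hop node must be chosen.
    for node in sorted(uncovered):
        providers = [n for n in sorted(one_hop) if node in two_hop_via.get(n, set())]
        if len(providers) == 1:
            mprs.add(providers[0])
    for m in mprs:
        uncovered -= two_hop_via.get(m, set())

    # Step 2: repeatedly add the neighbor covering the most uncovered 2-hop nodes.
    while uncovered:
        candidates = sorted(one_hop - mprs)
        if not candidates:
            break
        best = max(candidates, key=lambda n: len(two_hop_via.get(n, set()) & uncovered))
        gain = two_hop_via.get(best, set()) & uncovered
        if not gain:
            break  # remaining 2-hop nodes are unreachable via known neighbors
        mprs.add(best)
        uncovered -= gain
    return mprs


if __name__ == "__main__":
    neighbors = {"A", "B", "C"}
    reach = {"A": {"x", "y"}, "B": {"y", "z"}, "C": {"z"}}
    print(sorted(greedy_mpr(neighbors, reach)))
```

Note that the input to this heuristic is each node's 2-hop neighborhood, which has to be learned through exchanges with neighbors; the abstract lists reliance on coordination between nodes among the drawbacks of common MPR selection algorithms.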

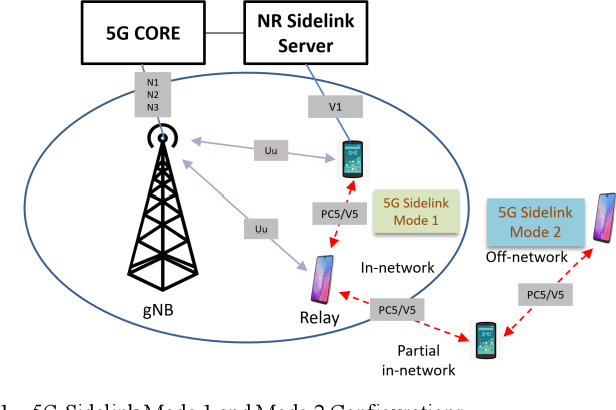

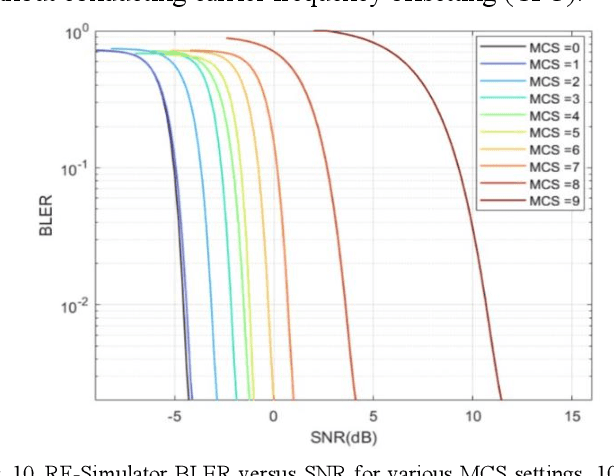

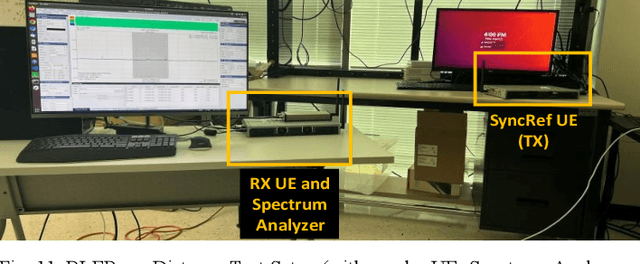

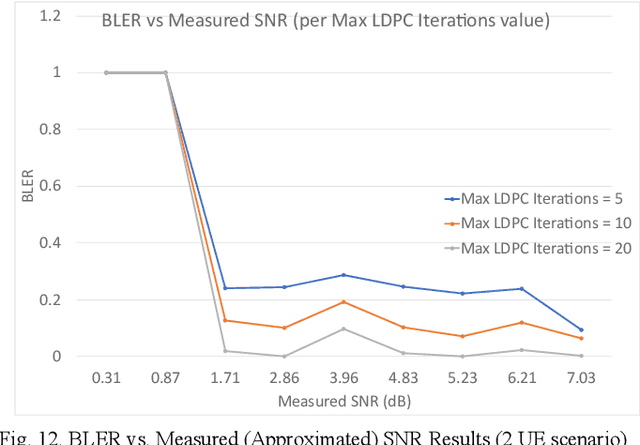

Open Source-based Over-The-Air 5G New Radio Sidelink Testbed

Jun 15, 2023

Abstract: The focus of this paper is the prototype development for 5G new radio (NR) sidelink communications, which enables NR UEs to transfer data independently without the assistance of a base station (gNB), designated as sidelink mode 2. Our design leverages open-source software operating on software-defined radios (SDRs), which can be easily extended for multiple UE scenarios. The software includes all signal processing components specified by 5G sidelink standards, including Low-Density Parity Check (LDPC) encoding/decoding, polar encoding/decoding, data and control multiplexing, modulation/demodulation, and orthogonal frequency-division multiplexing (OFDM) modulation/demodulation. It can be configured to operate with different bands, bandwidths, and multiple antenna settings. One method to demonstrate the completed Physical Sidelink Broadcast Channel (PSBCH) development is to show synchronization between a SyncRef UE and a nearby UE. The SyncRef UE broadcasts a sidelink synchronization signal block (S-SSB) periodically, which the nearby UE detects and uses to synchronize its timing and frequency components with the SyncRef UE. Once a connection is established, the SyncRef UE acts as a transmitter and shares data with the receiver UE (nearby UE) via the physical sidelink shared channel (PSSCH). Our physical sidelink framework is tested using both an RF simulator and an over-the-air (OTA) testbed. In this work, we show both synchronization and data transmission/reception with 5G sidelink mode 2, where our OTA experimental results align well with our simulation results.

Deep Reinforcement Learning for System-on-Chip: Myths and Realities

Jul 29, 2022

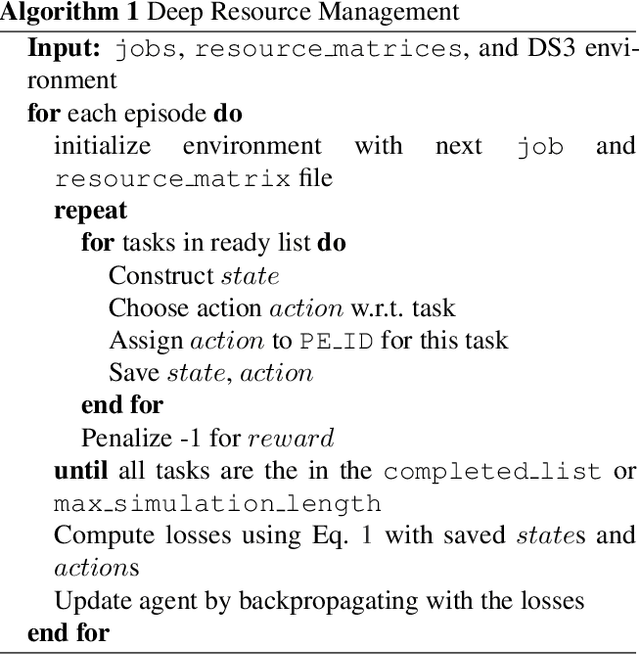

Abstract: Neural schedulers based on deep reinforcement learning (DRL) have shown considerable potential for solving real-world resource allocation problems, as they have demonstrated significant performance gains in the domain of cluster computing. In this paper, we investigate the feasibility of neural schedulers for the domain of System-on-Chip (SoC) resource allocation through extensive experiments and comparison with non-neural, heuristic schedulers. The key findings are three-fold. First, neural schedulers designed for the cluster computing domain do not work well for SoC due to i) heterogeneity of SoC computing resources and ii) a variable action set caused by randomness in incoming jobs. Second, our novel neural scheduler technique, Eclectic Interaction Matching (EIM), overcomes the above challenges, thus significantly improving on the existing neural schedulers. Specifically, we explain the underlying reasons behind the performance gain achieved by the EIM-based neural scheduler. Third, we discover that the ratio of the average processing element (PE) switching delay to the average PE computation time significantly impacts the performance of neural SoC schedulers even with EIM. Consequently, future neural SoC scheduler design must consider this metric as well as its implementation overhead for practical utility.

DeepCQ+: Robust and Scalable Routing with Multi-Agent Deep Reinforcement Learning for Highly Dynamic Networks

Nov 29, 2021

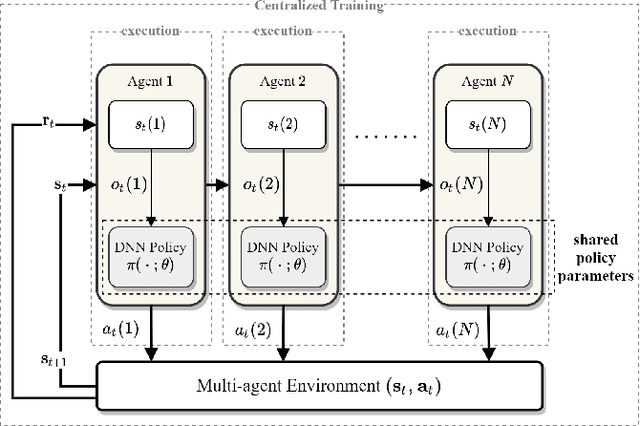

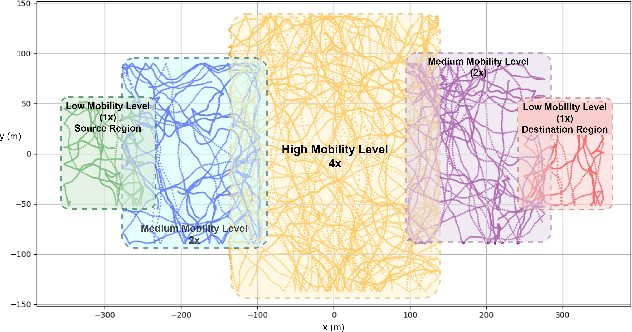

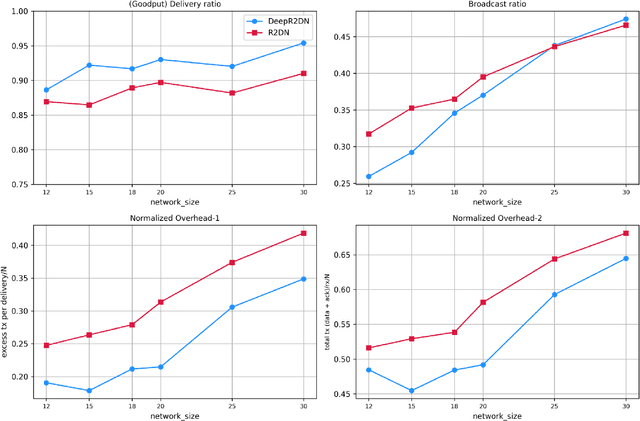

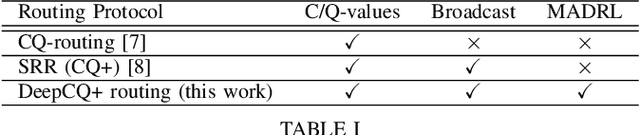

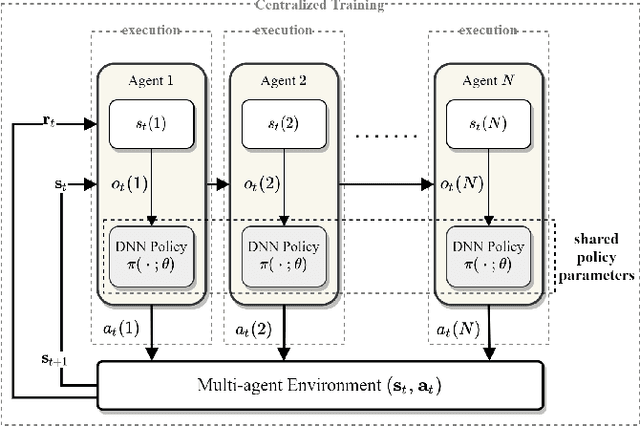

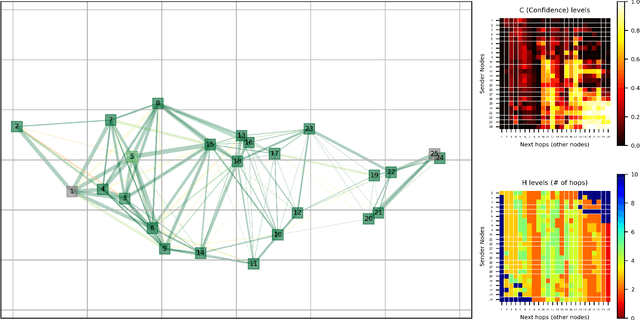

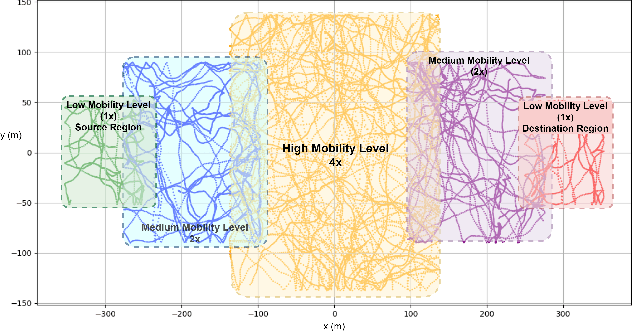

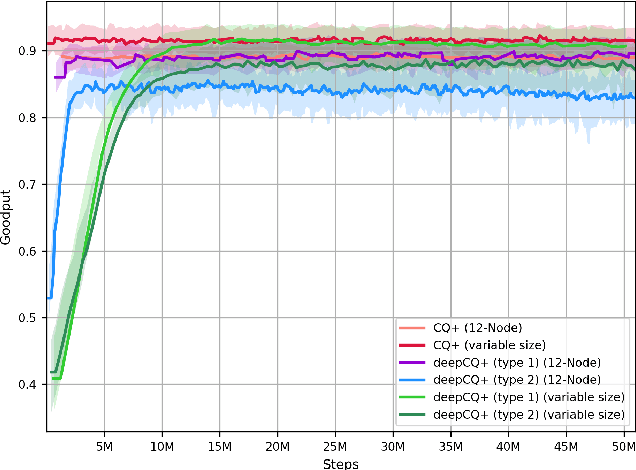

Abstract: Highly dynamic mobile ad-hoc networks (MANETs) remain one of the most challenging environments in which to develop and deploy robust, efficient, and scalable routing protocols. In this paper, we present the DeepCQ+ routing protocol, which integrates emerging multi-agent deep reinforcement learning (MADRL) techniques into existing Q-learning-based routing protocols and their variants in a novel manner, and achieves persistently higher performance across a wide range of topology and mobility configurations. While keeping the overall protocol structure of the Q-learning-based routing protocols, DeepCQ+ replaces statically configured parameterized thresholds and hand-written rules with carefully designed MADRL agents such that no configuration of such parameters is required a priori. Extensive simulation shows that DeepCQ+ yields significantly increased end-to-end throughput with lower overhead and no apparent degradation of end-to-end delays (hop counts) compared to its Q-learning-based counterparts. Qualitatively, and perhaps more significantly, DeepCQ+ maintains remarkably similar performance gains under many scenarios that it was not trained for in terms of network sizes, mobility conditions, and traffic dynamics. To the best of our knowledge, this is the first successful application of the MADRL framework to the MANET routing problem that demonstrates a high degree of scalability and robustness even under environments that are outside the trained range of scenarios. This implies that our MADRL-based DeepCQ+ design significantly improves the performance of the Q-learning-based CQ+ baseline and increases its practicality and explainability, because the real-world MANET environment will likely vary outside the trained range of MANET scenarios. Additional techniques to further increase the gains in performance and scalability are discussed.

A Scalable and Reproducible System-on-Chip Simulation for Reinforcement Learning

Apr 27, 2021

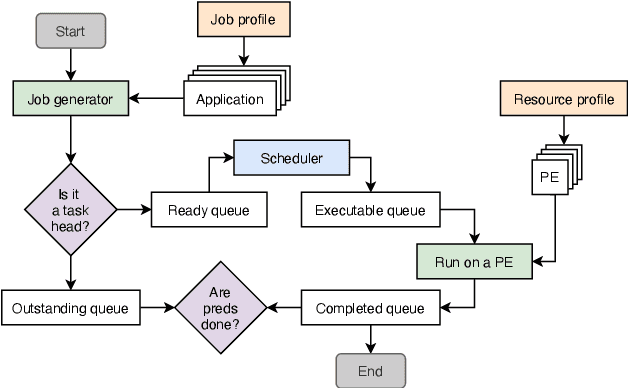

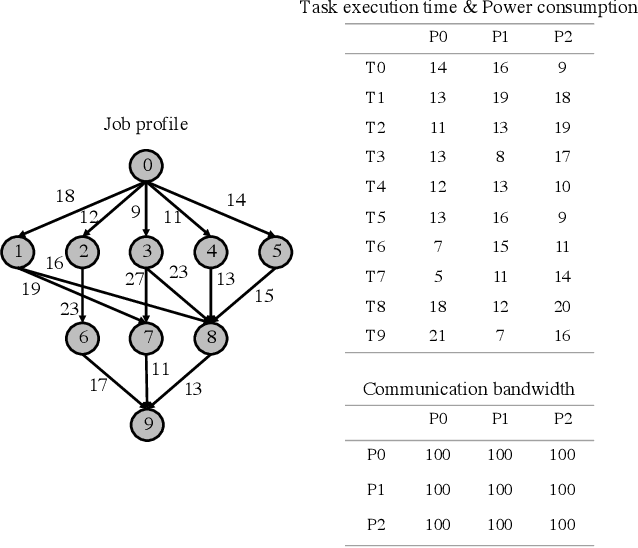

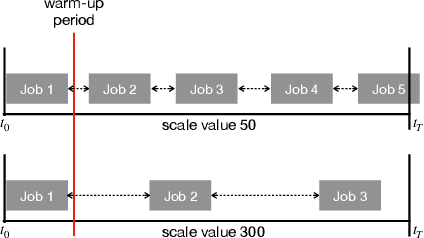

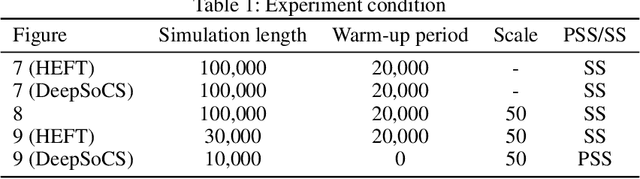

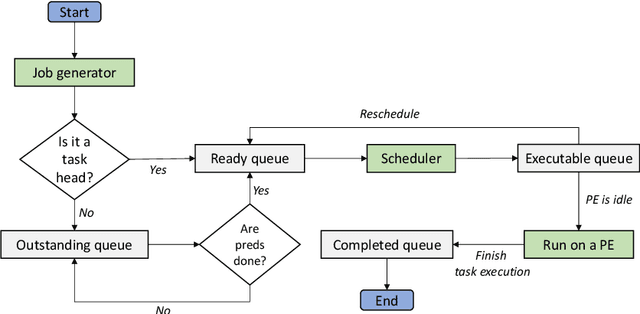

Abstract: Deep Reinforcement Learning (DRL) operates in a simulated environment and optimizes objective goals. By extending the conventional interaction scheme, this paper presents gym-ds3, a scalable and reproducible open environment tailored for a high-fidelity Domain-Specific System-on-Chip (DSSoC) application. The simulation schedules hierarchical jobs onto heterogeneous System-on-Chip (SoC) processors and bridges the system to reinforcement learning research. We systematically analyze the representative SoC simulator and discuss its primary challenges: the system (1) continuously generates indefinite jobs at a rapid injection rate, (2) optimizes complex objectives, and (3) operates in steady-state scheduling. We provide exemplary snippets and experimentally demonstrate the run-time performance of different schedulers, which successfully reproduces results achieved with the standard DS3 framework and real-world embedded systems.

Robust and Scalable Routing with Multi-Agent Deep Reinforcement Learning for MANETs

Jan 09, 2021

Abstract: We address the packet routing problem in highly dynamic mobile ad-hoc networks (MANETs). In the network routing problem, each router chooses the next hop(s) of each packet to deliver the packet to a destination with lower delay, higher reliability, and less overhead in the network. In this paper, we present a novel framework and routing policies, DeepCQ+ routing, using multi-agent deep reinforcement learning (MADRL), which is designed to be robust and scalable for MANETs. Unlike other deep reinforcement learning (DRL)-based routing solutions in the literature, our approach has enabled us to train over a limited range of network parameters and conditions, but achieve realistic routing policies for a much wider range of conditions including a variable number of nodes, different data flows with varying data rates and source/destination pairs, diverse mobility levels, and other dynamic network topologies. We demonstrate the scalability, robustness, and performance enhancements obtained by DeepCQ+ routing over a recently proposed model-free and non-neural robust and reliable routing technique (i.e., CQ+ routing). DeepCQ+ routing outperforms non-DRL-based CQ+ routing in terms of overhead while maintaining the same goodput rate. Under a wide range of network sizes and mobility conditions, we have observed a reduction in normalized overhead of 10-15%, indicating that the DeepCQ+ routing policy delivers more packets end-to-end with less overhead used. To the best of our knowledge, this is the first successful application of MADRL to the MANET routing problem that simultaneously achieves scalability and robustness under dynamic conditions while outperforming its non-neural counterpart. More importantly, we provide a framework to design scalable and robust routing policies with any desired network performance metric of interest.
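As background on per-router next-hop selection with Q-learning, here is a minimal sketch of the classic tabular Q-routing update, in which each router keeps an estimate of the delivery time to a destination via each neighbor. This is neither CQ+ nor DeepCQ+: the class name `QRouter`, the learning rate `alpha`, and the choice of delivery time as the cost are assumptions made for illustration.

```python
from collections import defaultdict


class QRouter:
    """Tabular Q-routing state for a single router (illustrative only)."""

    def __init__(self, neighbors, alpha=0.5):
        self.neighbors = list(neighbors)
        self.alpha = alpha  # learning rate (assumed value)
        # q[(dest, neighbor)] = estimated time to deliver to dest via neighbor
        self.q = defaultdict(float)

    def best_estimate(self, dest):
        """Smallest estimated delivery time to dest over all neighbors."""
        return min(self.q[(dest, n)] for n in self.neighbors)

    def next_hop(self, dest):
        """Greedy choice: the neighbor with the lowest estimated delivery time."""
        return min(self.neighbors, key=lambda n: self.q[(dest, n)])

    def update(self, dest, neighbor, hop_delay, neighbor_estimate):
        """Move the estimate toward hop_delay + the neighbor's own best estimate."""
        old = self.q[(dest, neighbor)]
        target = hop_delay + neighbor_estimate
        self.q[(dest, neighbor)] = old + self.alpha * (target - old)
        return self.q[(dest, neighbor)]


if __name__ == "__main__":
    router = QRouter(["B", "C"])
    router.update("D", "B", hop_delay=2.0, neighbor_estimate=4.0)
    router.update("D", "C", hop_delay=1.0, neighbor_estimate=1.0)
    print(router.next_hop("D"))
```

In this sketch, `neighbor_estimate` stands for the value the chosen neighbor reports back after receiving the packet, that is, its own `best_estimate` for the same destination.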

DeepSoCS: A Neural Scheduler for Heterogeneous System-on-Chip (SoC) Resource Scheduling

Jun 05, 2020

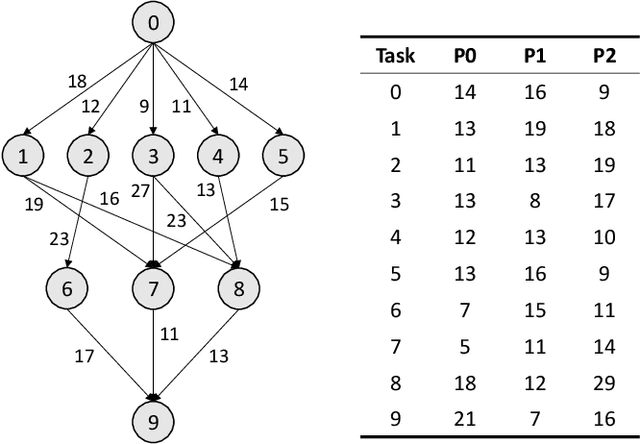

Abstract: In this paper, we present a novel scheduling solution for a class of System-on-Chip (SoC) systems where heterogeneous chip resources (DSP, FPGA, GPU, etc.) must be efficiently scheduled for continuously arriving hierarchical jobs with their tasks represented by a directed acyclic graph. Traditionally, heuristic algorithms have been widely used for many resource scheduling domains, and Heterogeneous Earliest Finish Time (HEFT) has been a dominating state-of-the-art technique across a broad range of heterogeneous resource scheduling domains over many years. Despite their long-standing popularity, HEFT-like algorithms are known to be vulnerable to a small amount of noise added to the environment. Our Deep Reinforcement Learning (DRL)-based SoC Scheduler (DeepSoCS), capable of learning the "best" task ordering under dynamic environment changes, overcomes the brittleness of rule-based schedulers such as HEFT with significantly higher performance across different types of jobs. We describe a DeepSoCS design process using a real-time heterogeneous SoC scheduling emulator, discuss major challenges, and present two novel neural network design features that lead to outperforming HEFT: (i) hierarchical job- and task-graph embedding; and (ii) efficient use of real-time task information in the state space. Furthermore, we introduce effective techniques to address two fundamental challenges present in our environment: delayed consequences and joint actions. Through an extensive simulation study, we show that DeepSoCS achieves significantly better job execution time than HEFT, with a higher level of robustness under realistic noise conditions. We conclude with a discussion of the potential improvements for our DeepSoCS neural scheduler.
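To make the "task ordering" that HEFT fixes by rule more concrete, the sketch below computes the upward rank used in HEFT's task-prioritization phase: a task's average computation cost plus the largest (communication cost + rank) over its successors in the DAG. It covers only the ordering step, not processor assignment, and it is not the DeepSoCS method; the function names and the dictionary-based inputs are hypothetical.

```python
from functools import lru_cache


def upward_ranks(avg_cost, successors, comm=None):
    """Upward rank of each task in a DAG.

    avg_cost:   dict task -> computation cost averaged over processors
    successors: dict task -> iterable of successor tasks
    comm:       optional dict (task, successor) -> average communication cost
    """
    comm = comm or {}

    @lru_cache(maxsize=None)
    def rank(task):
        # Exit tasks have no successors, so their tail contribution is 0.
        tail = max(
            (comm.get((task, s), 0.0) + rank(s) for s in successors.get(task, ())),
            default=0.0,
        )
        return avg_cost[task] + tail

    return {t: rank(t) for t in avg_cost}


def heft_order(avg_cost, successors, comm=None):
    """Tasks sorted by decreasing upward rank (ties broken by task name)."""
    ranks = upward_ranks(avg_cost, successors, comm)
    return sorted(ranks, key=lambda t: (-ranks[t], t))


if __name__ == "__main__":
    cost = {"a": 2, "b": 3, "c": 1, "d": 2}
    succ = {"a": ["b", "c"], "b": ["d"], "c": ["d"]}
    print(heft_order(cost, succ, {("a", "b"): 1}))
```

Because the ranks are derived from average costs known in advance, the resulting order does not react to run-time variation, which is consistent with the brittleness under noise that the abstract attributes to HEFT-like algorithms.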

Neural Heterogeneous Scheduler

Jun 09, 2019

Abstract: Access to parallel and distributed computation has enabled researchers and developers to improve algorithms and performance in many applications. Recent research has focused on next-generation special-purpose systems with multiple kinds of coprocessors, known as heterogeneous systems-on-chip (SoCs). In this paper, we introduce a method to intelligently schedule, and learn to schedule, a stream of tasks to available processing elements in such a system. We use deep reinforcement learning, which enables complex sequential decision making, and empirically show that our reinforcement learning system provides a viable, better alternative to conventional scheduling heuristics with respect to minimizing execution time.
