Robust and Scalable Routing with Multi-Agent Deep Reinforcement Learning for MANETs

Jan 09, 2021

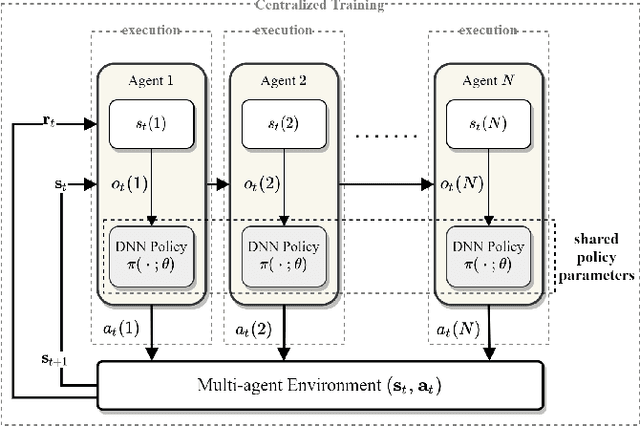

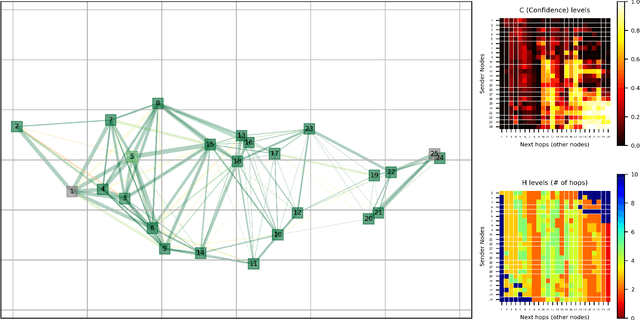

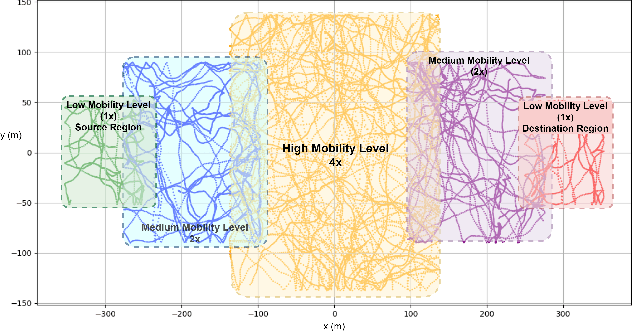

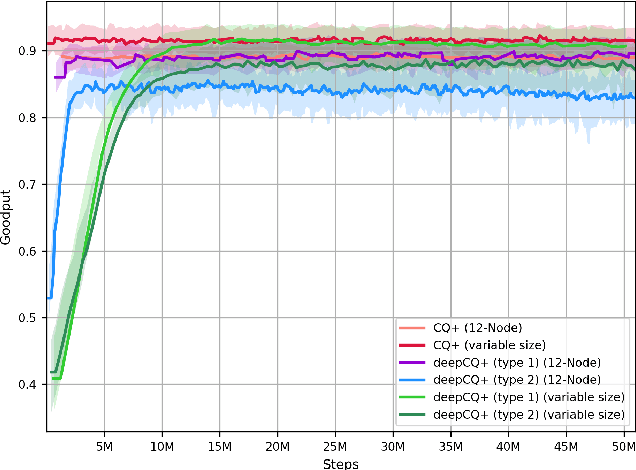

We address the packet routing problem in highly dynamic mobile ad-hoc networks (MANETs). In this problem, each router chooses the next hop(s) for each packet so that the packet reaches its destination with lower delay, higher reliability, and less network overhead. In this paper, we present a novel framework and set of routing policies, DeepCQ+ routing, based on multi-agent deep reinforcement learning (MADRL) and designed to be robust and scalable for MANETs. Unlike other deep reinforcement learning (DRL)-based routing solutions in the literature, our approach lets us train over a limited range of network parameters and conditions yet obtain realistic routing policies for a much wider range of conditions, including a variable number of nodes, different data flows with varying data rates and source/destination pairs, diverse mobility levels, and other dynamic network topologies. We demonstrate the scalability, robustness, and performance gains of DeepCQ+ routing over a recently proposed model-free, non-neural, robust and reliable routing technique (i.e., CQ+ routing). DeepCQ+ routing outperforms non-DRL-based CQ+ routing in terms of overhead while maintaining the same goodput rate. Across a wide range of network sizes and mobility conditions, we observe a 10-15% reduction in normalized overhead, indicating that the DeepCQ+ routing policy delivers more packets end-to-end with less overhead. To the best of our knowledge, this is the first successful application of MADRL to the MANET routing problem that simultaneously achieves scalability and robustness under dynamic conditions while outperforming its non-neural counterpart. More importantly, we provide a framework for designing scalable and robust routing policies for any desired network performance metric of interest.
