Chaabane Djeraba

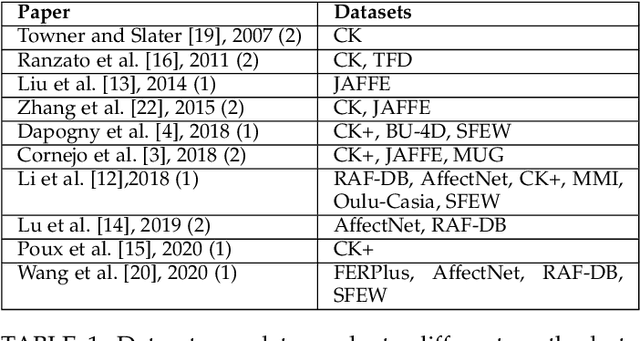

A survey on Graph Deep Representation Learning for Facial Expression Recognition

Nov 13, 2024

Abstract: This review surveys the methodologies applied to facial expression recognition (FER) through the lens of graph representation learning (GRL). We first introduce the task of FER and the concepts of graph representation and GRL. We then discuss some of the most prevalent and valuable databases for this task. We explore promising approaches for graph representation in FER, including graph diffusion, spatio-temporal graphs, and multi-stream architectures. Finally, we identify future research opportunities and provide concluding remarks.

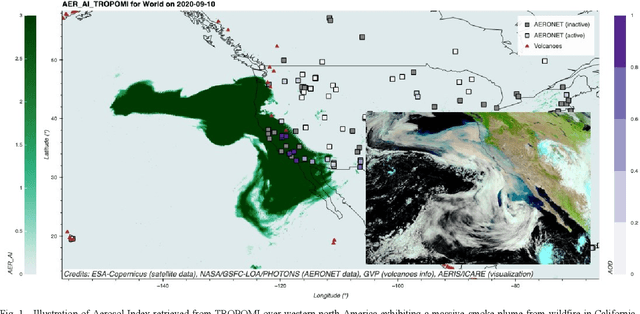

Mining atmospheric data

Jun 26, 2021

Abstract: This paper gives an overview of two interdependent issues important for mining remote sensing data (e.g. images) obtained from atmospheric monitoring missions. The first issue is the building of new public datasets and benchmarks, which is a high priority for the remote sensing community. The second issue is the investigation of deep learning methodologies for atmospheric data classification based on vast amounts of data without annotations, combined with localized annotated data provided by sparse observing networks at the surface. The targeted application is air quality assessment and prediction. Air quality is defined as the pollution level linked with several atmospheric constituents such as gases and aerosols. Poor air quality, caused by air pollution, is closely related to public health. The specific goal is the development of a fast prediction model for local and regional air quality assessment and tracking. The results of mining these data will have significant implications for citizens and decision makers by providing a fast and reliable air quality prediction and monitoring system able to cover the local and regional scale through intelligent extrapolation of sparse ground-based in situ measurement networks.

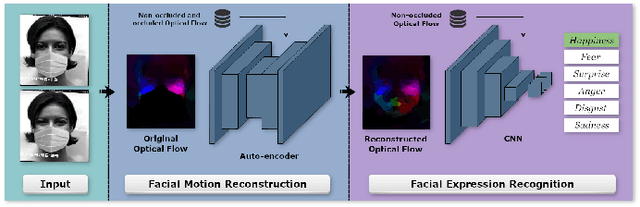

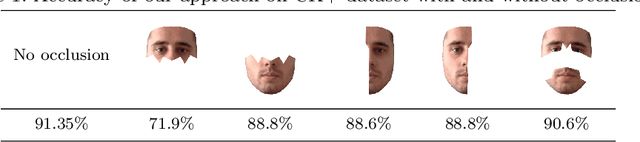

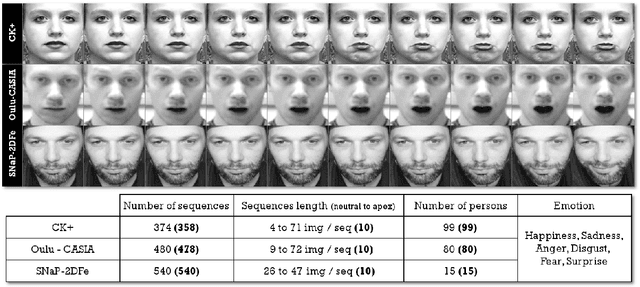

Dynamic Facial Expression Recognition under Partial Occlusion with Optical Flow Reconstruction

Dec 24, 2020

Abstract: Video facial expression recognition is useful for many applications and has received much interest lately. Although some solutions give very good results in a controlled environment (no occlusion), recognition in the presence of partial facial occlusion remains a challenging task. To handle occlusions, solutions based on the reconstruction of the occluded part of the face have been proposed. These solutions are mainly based on the texture or the geometry of the face. However, the similarity of face movement between different persons performing the same expression seems to be a real asset for the reconstruction. In this paper we exploit this asset and propose a new solution based on an auto-encoder with skip connections to reconstruct the occluded part of the face in the optical flow domain. To the best of our knowledge, this is the first proposal to directly reconstruct the movement for facial expression recognition. We validated our approach on the controlled dataset CK+, on which different occlusions were generated. Our experiments show that the proposed method significantly reduces the gap, in terms of recognition accuracy, between occluded and non-occluded situations. We also compare our approach with existing state-of-the-art solutions. In order to lay the basis for a reproducible and fair comparison in the future, we also propose a new experimental protocol that includes occlusion generation and reconstruction evaluation.

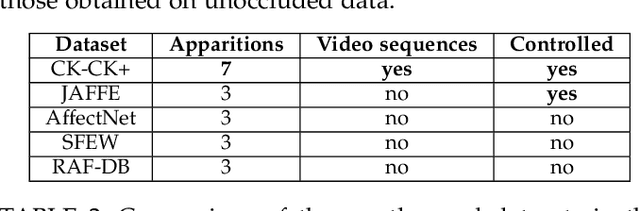

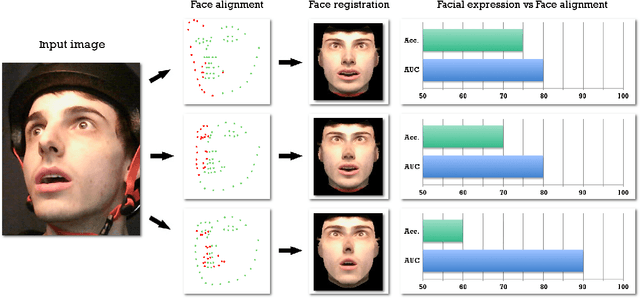

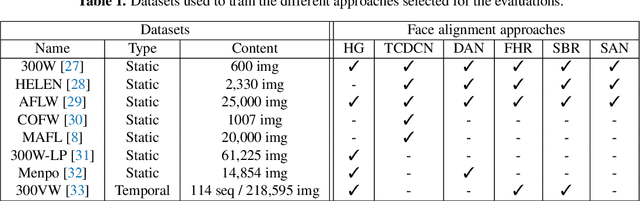

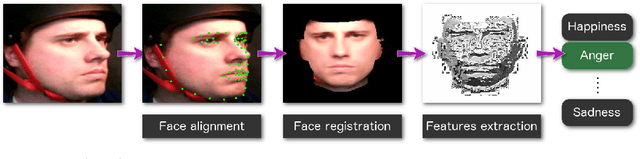

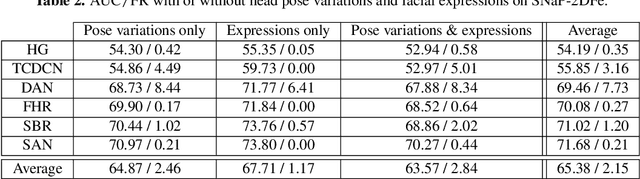

What is the relationship between face alignment and facial expression recognition?

May 26, 2019

Abstract: Facial expression recognition is still a complex task, particularly due to the presence of head pose variations. Although face alignment approaches are becoming increasingly accurate for characterizing facial regions, it is important to consider the impact of these approaches when they are used for other related tasks such as head pose registration or facial expression recognition. In this paper, we compare the performance of recent face alignment approaches to highlight the most appropriate techniques for preserving facial geometry when correcting head pose variation. We also highlight the most suitable techniques for locating facial landmarks in the presence of head pose variations and facial expressions.

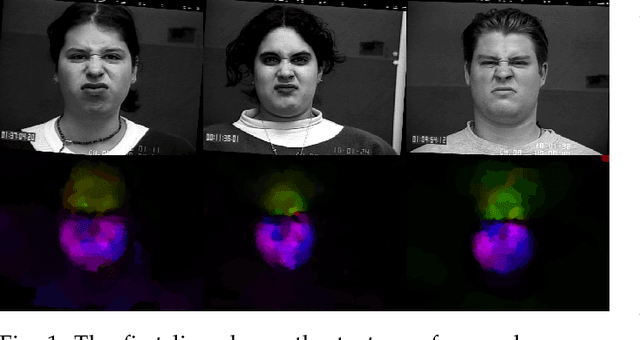

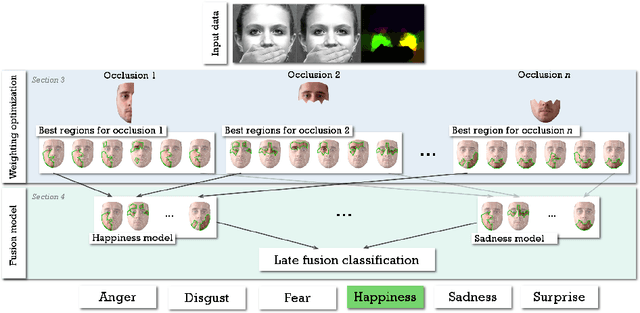

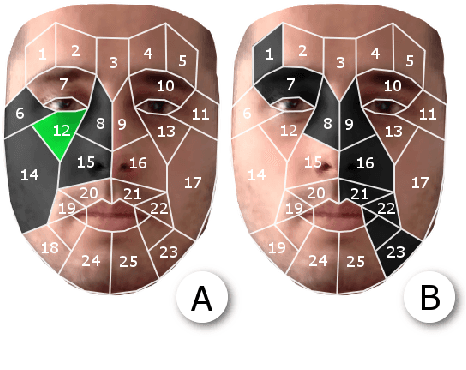

Facial Expressions Analysis Under Occlusions Based on Specificities of Facial Motion Propagation

Apr 30, 2019

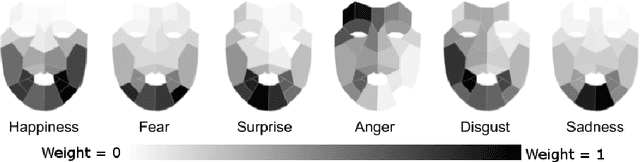

Abstract: Although much progress has been made in the facial expression analysis field, facial occlusions are still challenging. The main innovation brought by this contribution consists in exploiting the specificities of facial movement propagation for recognizing expressions in the presence of significant occlusions. The movement induced by an expression extends beyond the movement epicenter. Thus, the movement occurring in an occluded region propagates towards neighboring visible regions. In the presence of occlusions, we compute, per expression, the importance of each unoccluded facial region and construct adapted facial frameworks that boost the performance of the per-expression binary classifiers. The output of each expression-dependent binary classifier is then aggregated and fed into a fusion process that aims to construct, per occlusion, a unique model that recognizes all the facial expressions considered. The evaluations highlight the robustness of this approach in the presence of significant facial occlusions.

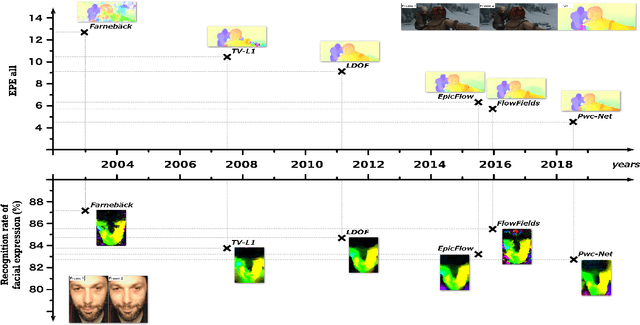

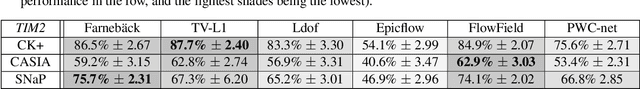

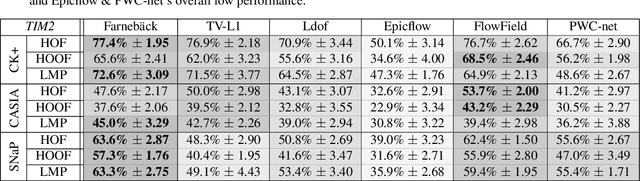

Optical Flow Techniques for Facial Expression Analysis: Performance Evaluation and Improvements

Apr 25, 2019

Abstract: Optical flow techniques are becoming increasingly accurate and robust when estimating motion in a scene, but their performance has yet to be proven in the area of facial expression recognition. In this work, a variety of optical flow approaches are evaluated across multiple facial expression datasets, so as to provide a consistent performance evaluation. Additionally, the strengths of multiple optical flow approaches are combined in a novel data augmentation scheme. Under this scheme, increases in average accuracy of up to 6% (depending on the choice of optical flow approaches and dataset) have been achieved.
