Cairong Yan

Refining Strokes by Learning Offset Attributes between Strokes for Flexible Sketch Edit at Stroke-Level

Jan 31, 2026

Abstract:Stroke-level sketch editing aims to transplant source strokes onto a target sketch via stroke expansion or replacement, while preserving semantic consistency and visual fidelity with the target sketch. Recent studies addressed it by relocating source strokes to appropriate canvas positions. However, because source strokes can vary significantly in both size and orientation, merely repositioning them without further adjustment may fail to produce plausible editing results. For example, anchoring an oversized source stroke onto the target without proper scaling would not yield a semantically coherent outcome. In this paper, we propose SketchMod, which refines the source stroke through transformation so that it aligns with the target sketch's patterns, thereby enabling flexible stroke-level sketch editing. As the source stroke refinement is governed by the patterns of the target sketch, we learn three key offset attributes (scale, orientation, and position) from the source stroke to another stroke, and align it with the target by: 1) resizing to match spatial proportions by scale, 2) rotating to align with local geometry by orientation, and 3) displacing to fit the semantic layout by position. In addition, a stroke's profile can be precisely controlled during editing through the exposed stroke attributes. Experimental results indicate that SketchMod achieves precise and flexible performance on stroke-level sketch editing.
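To make the three alignment steps concrete, the following is a minimal sketch of how given scale, orientation, and position offsets could be applied to a stroke represented as a list of 2D points. It does not reproduce how SketchMod learns these offsets; the function name `refine_stroke`, its parameters, and the choice to scale and rotate about the stroke centroid are assumptions made for illustration only.

```python
import math

def refine_stroke(points, scale=1.0, angle_rad=0.0, shift=(0.0, 0.0)):
    """Apply scale, rotation, and translation offsets to a stroke.

    Hypothetical helper: `points` is a list of (x, y) tuples. Scaling and
    rotation are done about the stroke centroid (an assumption), then the
    stroke is displaced by `shift`.
    """
    # Centroid of the stroke, used as the pivot for scaling and rotation.
    cx = sum(x for x, _ in points) / len(points)
    cy = sum(y for _, y in points) / len(points)
    cos_a, sin_a = math.cos(angle_rad), math.sin(angle_rad)

    refined = []
    for x, y in points:
        # 1) Resize: scale the offset from the centroid.
        dx, dy = (x - cx) * scale, (y - cy) * scale
        # 2) Rotate: standard counter-clockwise 2D rotation.
        rx = dx * cos_a - dy * sin_a
        ry = dx * sin_a + dy * cos_a
        # 3) Displace: move back to the centroid, then apply the shift.
        refined.append((rx + cx + shift[0], ry + cy + shift[1]))
    return refined

# Example: halve a horizontal stroke, rotate it 90 degrees, move it by (1, 1).
stroke = [(0.0, 0.0), (2.0, 0.0)]
print(refine_stroke(stroke, scale=0.5, angle_rad=math.pi / 2, shift=(1.0, 1.0)))
```

Applying the operations in the order scale, rotate, translate keeps each attribute independently adjustable, which mirrors the abstract's point that exposed attributes allow direct control over a stroke's profile.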

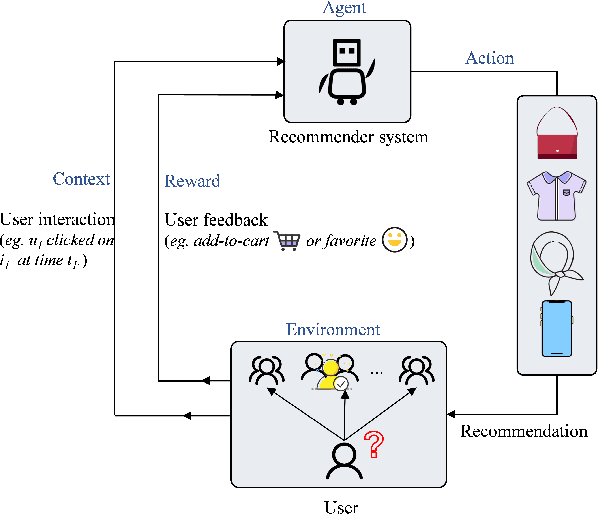

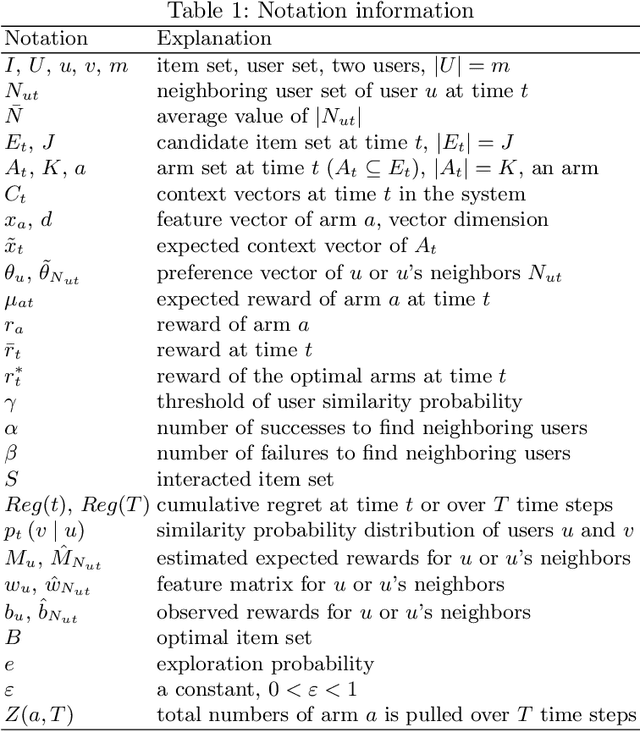

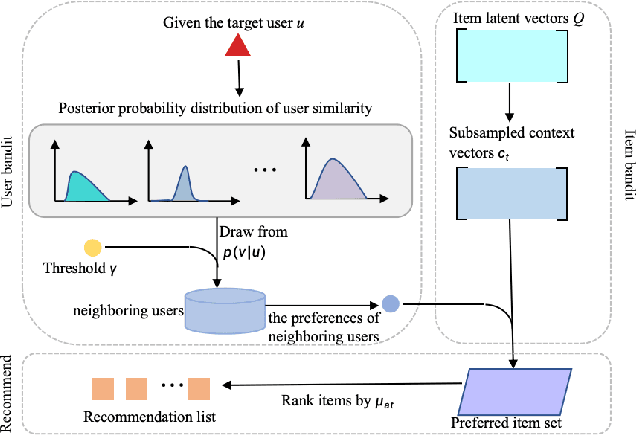

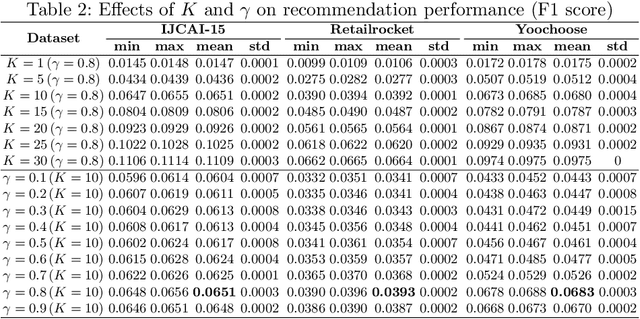

CoCoB: Adaptive Collaborative Combinatorial Bandits for Online Recommendation

May 05, 2025

Abstract:Clustering bandits have gained significant attention in recommender systems by leveraging collaborative information from neighboring users to better capture target user preferences. However, these methods often lack a clear definition of similar users and face challenges when users with unique preferences lack appropriate neighbors. In such cases, relying on divergent preferences of misidentified neighbors can degrade recommendation quality. To address these limitations, this paper proposes an adaptive Collaborative Combinatorial Bandits algorithm (CoCoB). CoCoB employs an innovative two-sided bandit architecture, applying bandit principles to both the user and item sides. The user-bandit employs an enhanced Bayesian model to explore user similarity, identifying neighbors based on a similarity probability threshold. The item-bandit treats items as arms, generating diverse recommendations informed by the user-bandit's output. CoCoB dynamically adapts, leveraging neighbor preferences when available or focusing solely on the target user otherwise. Regret analysis under a linear contextual bandit setting and experiments on three real-world datasets demonstrate CoCoB's effectiveness, achieving an average 2.4% improvement in F1 score over state-of-the-art methods.
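The idea of selecting neighbors by a similarity probability threshold, and falling back to the target user alone when none qualify, can be sketched as follows. This is not CoCoB's enhanced Bayesian model, whose details the abstract does not give; it assumes a plain Beta-Bernoulli posterior over pairwise agreement, and the names `similarity_posterior_mean`, `select_neighbors`, and the default threshold are hypothetical.

```python
def similarity_posterior_mean(agreements, disagreements, prior_a=1.0, prior_b=1.0):
    """Posterior mean of a Beta(prior_a, prior_b) prior after observing
    agreement/disagreement counts (assumed stand-in for user similarity)."""
    return (prior_a + agreements) / (prior_a + prior_b + agreements + disagreements)

def select_neighbors(counts, threshold=0.7):
    """Return the users whose estimated similarity meets the threshold.

    `counts` maps a candidate user id to (agreements, disagreements) with
    the target user. An empty result means: use the target user only.
    """
    return [
        user
        for user, (agree, disagree) in counts.items()
        if similarity_posterior_mean(agree, disagree) >= threshold
    ]

# Hypothetical feedback counts between the target user and three candidates.
counts = {"u1": (8, 1), "u2": (2, 5), "u3": (0, 0)}
neighbors = select_neighbors(counts)
print(neighbors if neighbors else "no neighbors: rely on the target user only")
```

With a uniform prior, a candidate with no shared history sits at 0.5 and is excluded, which illustrates why a user with unique preferences may end up with no neighbors at all.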

Multi-Moving Camera Pedestrian Tracking with a New Dataset and Global Link Model

Jan 02, 2024

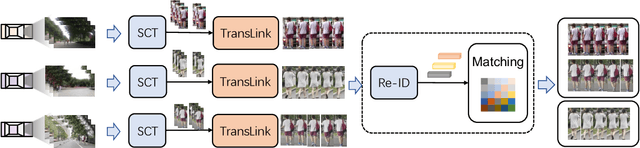

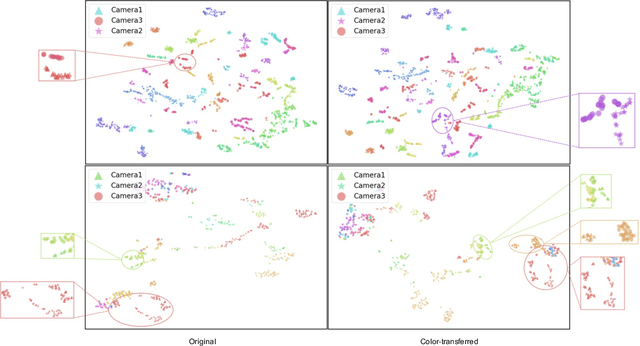

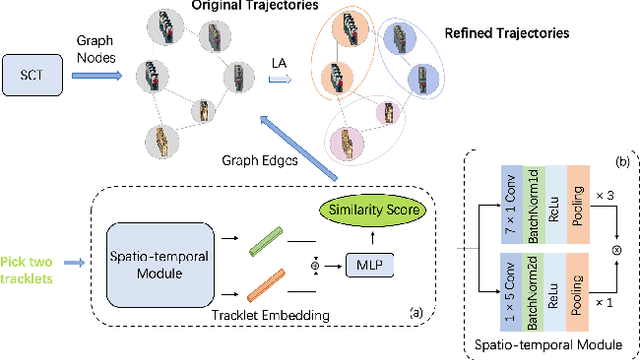

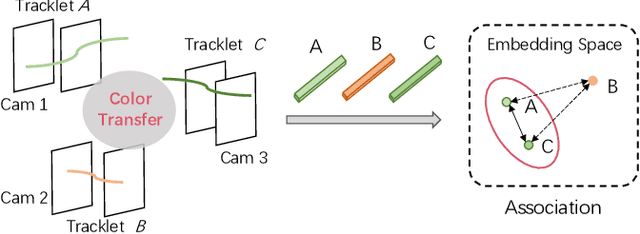

Abstract:Ensuring driving safety for autonomous vehicles has become increasingly crucial, highlighting the need for systematic tracking of pedestrians on the road. Most vehicles are equipped with visual sensors; however, large-scale visual datasets from different agents have not yet been well studied. Most multi-target multi-camera (MTMC) tracking systems are composed of two modules: single camera tracking (SCT) and inter-camera tracking (ICT). Reliably coordinating the two makes MTMC tracking a very complicated task, and tracking across multiple moving cameras makes it even more challenging. In this paper, we focus on multi-target multi-moving camera (MTMMC) tracking, which is attracting increasing attention from the research community. Observing that there are few datasets for MTMMC tracking, we collect a new dataset, called Multi-Moving Camera Track (MMCT), which contains sequences under various driving scenarios. Most existing SCT trackers suffer from identity switches, especially with moving cameras, because of ego-motion between the camera and targets. To mitigate this, we propose a lightweight appearance-free global link model, called Linker, which associates two disjoint tracklets of the same target into a complete trajectory within the same camera. Incorporated with Linker, existing SCT trackers generally obtain a significant improvement. Moreover, a strong re-identification (Re-ID) baseline is incorporated to extract robust appearance features under varying surroundings for pedestrian association across moving cameras for ICT, resulting in a much improved MTMMC tracking system, which constitutes a step toward coordinated mining of multiple moving cameras. The dataset is available at https://github.com/dhu-mmct/DHU-MMCT .
