Caihua Xiong

A bilevel optimal motion planning model with application to autonomous parking

Dec 01, 2023Abstract:In this paper, we present a bilevel optimal motion planning (BOMP) model for autonomous parking. The BOMP model treats motion planning as an optimal control problem, in which the upper level is designed for vehicle nonlinear dynamics, and the lower level is for geometry collision-free constraints. The significant feature of the BOMP model is that the lower level is a linear programming problem that serves as a constraint for the upper-level problem. That is, an optimal control problem contains an embedded optimization problem as constraints. Traditional optimal control methods cannot solve the BOMP problem directly. Therefore, the modified approximate Karush-Kuhn-Tucker theory is applied to generate a general nonlinear optimal control problem. Then the pseudospectral optimal control method solves the converted problem. Particularly, the lower level is the $J_2$-function that acts as a distance function between convex polyhedron objects. Polyhedrons can approximate vehicles in higher precision than spheres or ellipsoids. Besides, the modified $J_2$-function (MJ) and the active-points based modified $J_2$-function (APMJ) are proposed to reduce the variables number and time complexity. As a result, an iteirative two-stage BOMP algorithm for autonomous parking concerning dynamical feasibility and collision-free property is proposed. The MJ function is used in the initial stage to find an initial collision-free approximate optimal trajectory and the active points, then the APMJ function in the final stage finds out the optimal trajectory. Simulation results and experiment on Turtlebot3 validate the BOMP model, and demonstrate that the computation speed increases almost two orders of magnitude compared with the area criterion based collision avoidance method.

RCDN -- Robust X-Corner Detection Algorithm based on Advanced CNN Model

Jul 07, 2023

Abstract:Accurate detection and localization of X-corner on both planar and non-planar patterns is a core step in robotics and machine vision. However, previous works could not make a good balance between accuracy and robustness, which are both crucial criteria to evaluate the detectors performance. To address this problem, in this paper we present a novel detection algorithm which can maintain high sub-pixel precision on inputs under multiple interference, such as lens distortion, extreme poses and noise. The whole algorithm, adopting a coarse-to-fine strategy, contains a X-corner detection network and three post-processing techniques to distinguish the correct corner candidates, as well as a mixed sub-pixel refinement technique and an improved region growth strategy to recover the checkerboard pattern partially visible or occluded automatically. Evaluations on real and synthetic images indicate that the presented algorithm has the higher detection rate, sub-pixel accuracy and robustness than other commonly used methods. Finally, experiments of camera calibration and pose estimation verify it can also get smaller re-projection error in quantitative comparisons to the state-of-the-art.

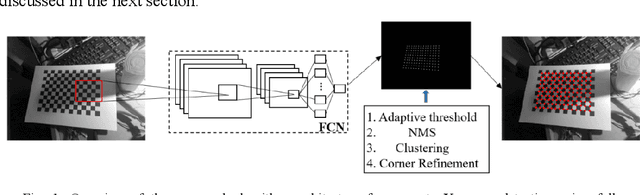

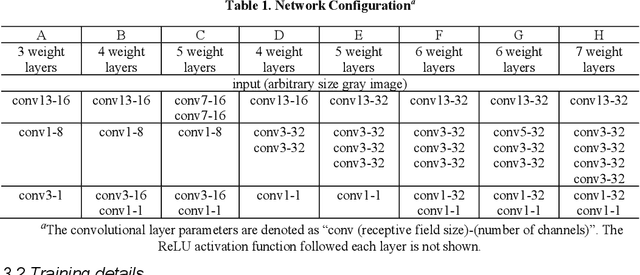

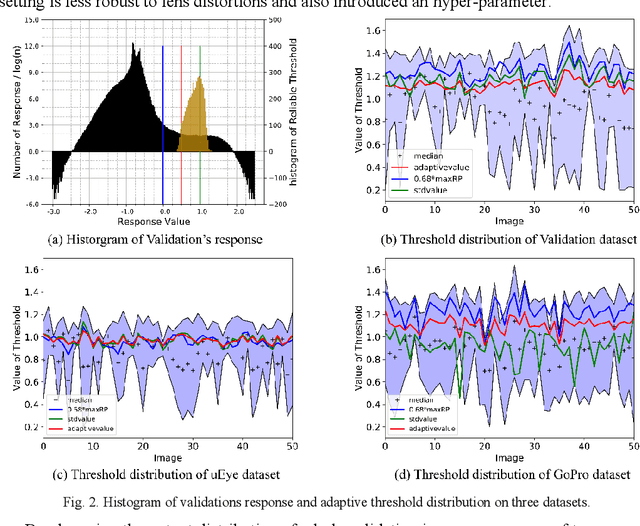

CCDN: Checkerboard Corner Detection Network for Robust Camera Calibration

Feb 10, 2023Abstract:Aiming to improve the checkerboard corner detection robustness against the images with poor quality, such as lens distortion, extreme poses, and noise, we propose a novel detection algorithm which can maintain high accuracy on inputs under multiply scenarios without any prior knowledge of the checkerboard pattern. This whole algorithm includes a checkerboard corner detection network and some post-processing techniques. The network model is a fully convolutional network with improvements of loss function and learning rate, which can deal with the images of arbitrary size and produce correspondingly-sized output with a corner score on each pixel by efficient inference and learning. Besides, in order to remove the false positives, we employ three post-processing techniques including threshold related to maximum response, non-maximum suppression, and clustering. Evaluations on two different datasets show its superior robustness, accuracy and wide applicability in quantitative comparisons with the state-of-the-art methods, like MATE, ChESS, ROCHADE and OCamCalib.

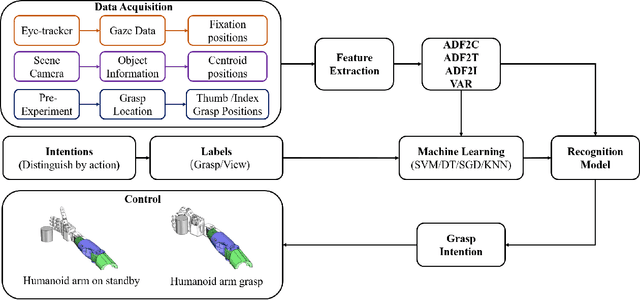

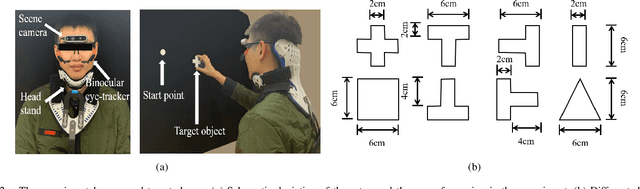

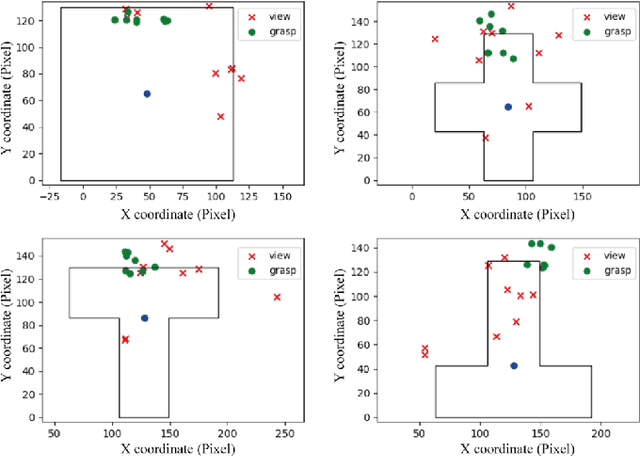

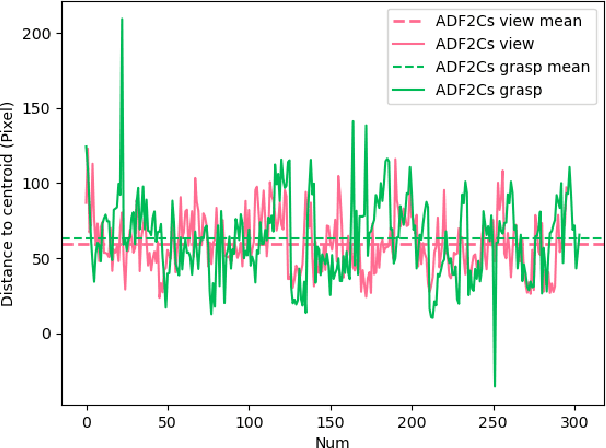

Natural grasp intention recognition based on gaze fixation in human-robot interaction

Dec 16, 2020

Abstract:Eye movement is closely related to limb actions, so it can be used to infer movement intentions. More importantly, in some cases, eye movement is the only way for paralyzed and impaired patients with severe movement disorders to communicate and interact with the environment. Despite this, eye-tracking technology still has very limited application scenarios as an intention recognition method. The goal of this paper is to achieve a natural fixation-based grasping intention recognition method, with which a user with hand movement disorders can intuitively express what tasks he/she wants to do by directly looking at the object of interest. Toward this goal, we design experiments to study the relationships of fixations in different tasks. We propose some quantitative features from these relationships and analyze them statistically. Then we design a natural method for grasping intention recognition. The experimental results prove that the accuracy of the proposed method for the grasping intention recognition exceeds 89\% on the training objects. When this method is extendedly applied to objects not included in the training set, the average accuracy exceeds 85\%. The grasping experiment in the actual environment verifies the effectiveness of the proposed method.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge