Byron Reeves

Using Large Language Models to Create AI Personas for Replication and Prediction of Media Effects: An Empirical Test of 133 Published Experimental Research Findings

Aug 28, 2024

Abstract: This report analyzes the potential for large language models (LLMs) to expedite accurate replication of published message effects studies. We tested LLM-powered participants (personas) by replicating 133 experimental findings from 14 papers containing 45 recent studies in the Journal of Marketing (January 2023-May 2024). We used a new software tool, Viewpoints AI (https://viewpoints.ai/), that takes study designs, stimuli, and measures as input, automatically generates prompts for LLMs to act as a specified sample of unique personas, and collects their responses to produce a final output in the form of a complete dataset and statistical analysis. The underlying LLM used was Anthropic's Claude Sonnet 3.5. We generated 19,447 AI personas to replicate these studies with the exact same sample attributes, study designs, stimuli, and measures reported in the original human research. Our LLM replications successfully reproduced 76% of the original main effects (84 out of 111), demonstrating strong potential for AI-assisted replication of studies in which people respond to media stimuli. When including interaction effects, the overall replication rate was 68% (90 out of 133). The use of LLMs to replicate and accelerate marketing research on media effects is discussed with respect to the replication crisis in social science, potential solutions to generalizability problems in sampling subjects and experimental conditions, and the ability to rapidly test consumer responses to various media stimuli. We also address the limitations of this approach, particularly in replicating complex interaction effects in media response studies, and suggest areas for future research and improvement in AI-assisted experimental replication of media effects.
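The replication rates quoted in the abstract can be checked with a few lines of arithmetic. The sketch below assumes that the 133 findings consist only of main effects and interaction effects, so the interaction counts are obtained by subtraction; the abstract does not report that breakdown directly, and the variable names are illustrative.

```python
# Counts taken from the abstract.
main_total, main_replicated = 111, 84
all_total, all_replicated = 133, 90

# Assumption: every finding that is not a main effect is an interaction effect.
interaction_total = all_total - main_total
interaction_replicated = all_replicated - main_replicated


def rate(replicated: int, total: int) -> int:
    """Replication rate as a whole-number percentage."""
    return round(100 * replicated / total)


main_rate = rate(main_replicated, main_total)
overall_rate = rate(all_replicated, all_total)
interaction_rate = rate(interaction_replicated, interaction_total)

print(f"main effects: {main_rate}%")
print(f"all effects: {overall_rate}%")
print(f"interaction effects (derived): {interaction_rate}%")
```

Under that assumption, the derived interaction-effect rate is far lower than the main-effect rate, which is consistent with the limitation the abstract notes for complex interaction effects.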

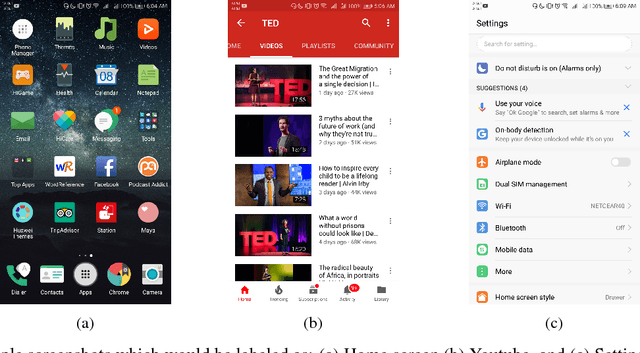

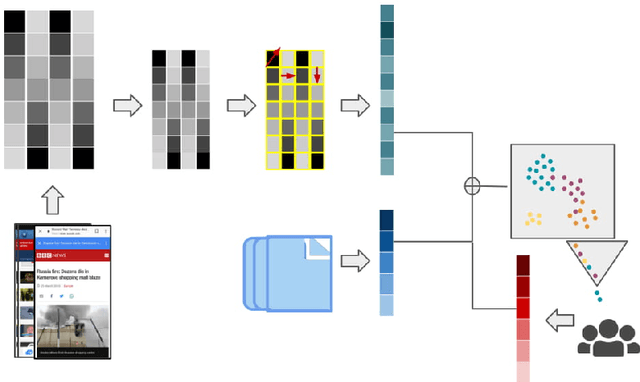

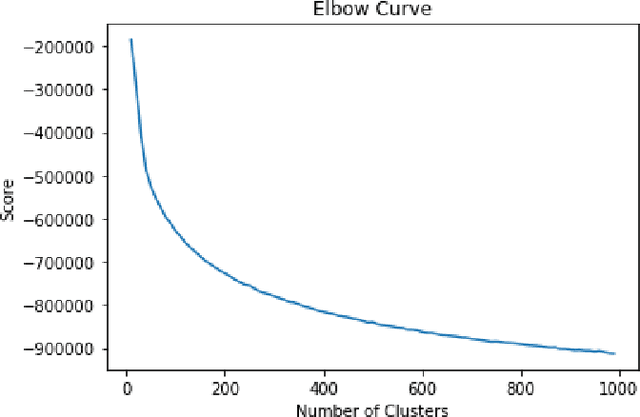

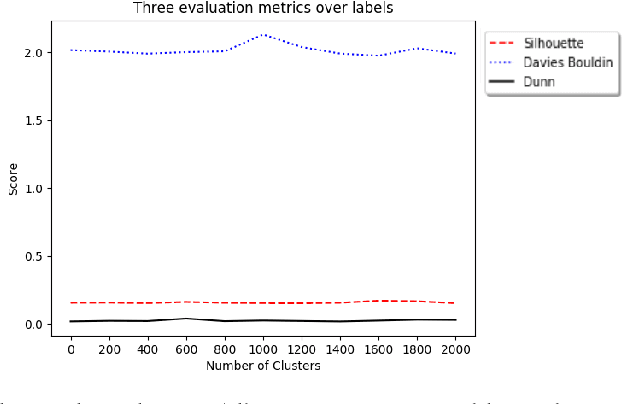

Guess What's on my Screen? Clustering Smartphone Screenshots with Active Learning

Jan 10, 2019

Abstract: A significant proportion of individuals' daily activities is experienced through digital devices. Smartphones in particular have become one of the preferred interfaces for content consumption and social interaction. Identifying the content embedded in frequently-captured smartphone screenshots is thus a crucial prerequisite to studies of media behavior and health intervention planning that analyze activity interplay and content switching over time. Screenshot images can depict heterogeneous contents and applications, making the a priori definition of adequate taxonomies a cumbersome task, even for humans. Privacy protection of the sensitive data captured on screens means the costs associated with manual annotation are large, as the effort cannot be crowd-sourced. Thus, there is a need to examine the utility of unsupervised and semi-supervised methods for digital screenshot classification. This work examines the implications of applying clustering to large screenshot sets when only a limited number of labels is available. In this paper we develop a framework for combining K-Means clustering with Active Learning to make efficient use of labeled and unlabeled samples, with the goal of discovering latent classes and describing a large collection of screenshot data. We tested whether SVM-embedded or XGBoost-embedded solutions for class probability propagation provide more well-formed cluster configurations. Visual and textual vector representations of the screenshot images are derived and combined to assess the relative contribution of multi-modal features to the overall performance.
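As a rough illustration of how clustering and active learning can be combined, the sketch below runs plain K-Means and then picks the points that sit closest to a cluster boundary as the ones to send for manual labeling. This is not the paper's framework: the abstract describes SVM-embedded and XGBoost-embedded class probability propagation, whereas this toy version swaps in a simple centroid-distance margin as the uncertainty score. The function names, the 2-D points, and the fixed initial centroids are all hypothetical.

```python
from math import dist


def kmeans(points, centroids, iterations=10):
    """Lloyd's algorithm with caller-supplied initial centroids."""
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda i: dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Recompute each centroid as the mean of its members; keep it if empty.
        centroids = [
            tuple(sum(axis) / len(members) for axis in zip(*members)) if members else old
            for members, old in zip(clusters, centroids)
        ]
    return centroids


def query_candidates(points, centroids, budget):
    """Return the `budget` points with the smallest gap between their two nearest centroids."""
    def margin(p):
        nearest, second = sorted(dist(p, c) for c in centroids)[:2]
        return second - nearest

    return sorted(points, key=margin)[:budget]


# Toy feature vectors: two tight groups and one ambiguous point in between.
points = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0),
          (10.0, 10.0), (10.0, 11.0), (11.0, 10.0),
          (5.0, 5.0)]
centroids = kmeans(points, [(0.0, 0.0), (10.0, 10.0)])
to_label = query_candidates(points, centroids, budget=1)
print(to_label)
```

The ambiguous middle point is the one selected for annotation, which is the general idea behind spending a small labeling budget where the clustering is least certain.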

Text Extraction and Retrieval from Smartphone Screenshots: Building a Repository for Life in Media

Jan 04, 2018

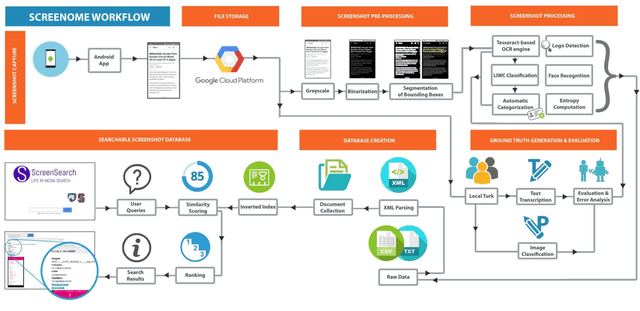

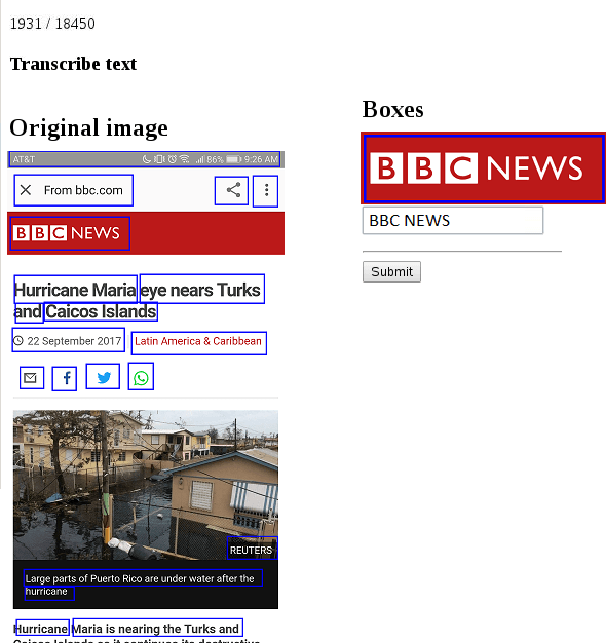

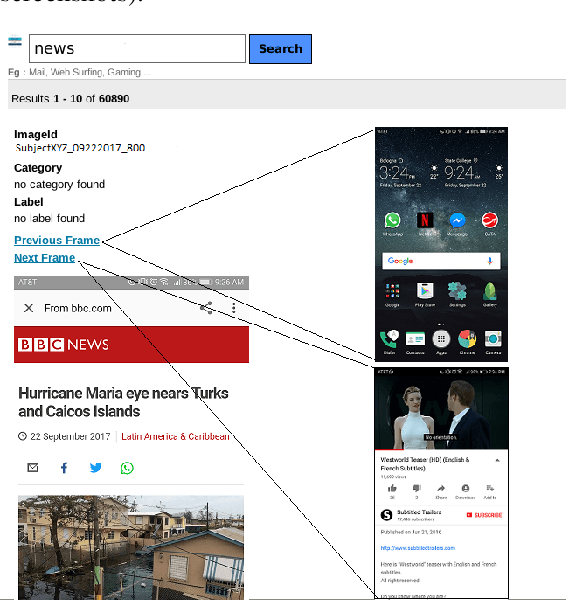

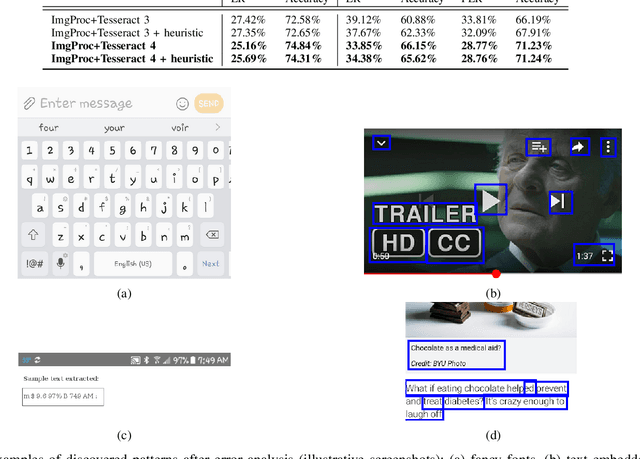

Abstract: Daily engagement in life experiences is increasingly interwoven with mobile device use. Screen capture at the scale of seconds is being used in behavioral studies and to implement "just-in-time" health interventions. The increasing psychological breadth of digital information will continue to make the actual screens that people view a preferred if not required source of data about life experiences. Effective and efficient Information Extraction and Retrieval from digital screenshots is a crucial prerequisite to successful use of screen data. In this paper, we present the experimental workflow we used to: (i) pre-process a unique collection of screen captures, (ii) extract unstructured text embedded in the images, (iii) organize image text and metadata based on a structured schema, (iv) index the resulting document collection, and (v) allow for Image Retrieval through a dedicated vertical search engine application. The adopted procedure integrates different open source libraries for traditional image processing, Optical Character Recognition (OCR), and Image Retrieval. Our aim is to assess whether and how state-of-the-art methodologies can be applied to this novel data set. We show how combining OpenCV-based pre-processing modules with a Long short-term memory (LSTM) based release of Tesseract OCR, without ad hoc training, led to a 74% character-level accuracy of the extracted text. Further, we used the processed repository as a baseline for a dedicated Image Retrieval system, for immediate use by behavioral and prevention scientists. We discuss issues of Text Information Extraction and Retrieval that are particular to the screenshot image case and suggest important future work.
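Character-level accuracy for OCR output is commonly computed from the edit distance between the extracted text and a ground-truth transcription. The sketch below shows one such definition, assuming accuracy = 1 - (Levenshtein distance / reference length); the abstract does not state which formula the authors used, and the sample strings are made up for illustration.

```python
def edit_distance(reference: str, hypothesis: str) -> int:
    """Levenshtein distance: minimum insertions, deletions, and substitutions."""
    previous = list(range(len(hypothesis) + 1))
    for i, ref_char in enumerate(reference, start=1):
        current = [i]
        for j, hyp_char in enumerate(hypothesis, start=1):
            substitution = previous[j - 1] + (ref_char != hyp_char)
            current.append(min(previous[j] + 1, current[j - 1] + 1, substitution))
        previous = current
    return previous[-1]


def character_accuracy(reference: str, hypothesis: str) -> float:
    """Assumed definition: 1 - edit distance / reference length, floored at 0."""
    if not reference:
        return 1.0 if not hypothesis else 0.0
    return max(0.0, 1 - edit_distance(reference, hypothesis) / len(reference))


# Hypothetical ground truth and OCR output with one misread character.
reference = "Add to cart"
ocr_output = "Add t0 cart"
accuracy = character_accuracy(reference, ocr_output)
print(f"{accuracy:.1%}")
```

A single substituted character in an 11-character string gives an accuracy of 10/11, so a corpus-level figure such as 74% would correspond to roughly one character error in every four reference characters under this definition.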
