Dolzodmaa Davaasuren

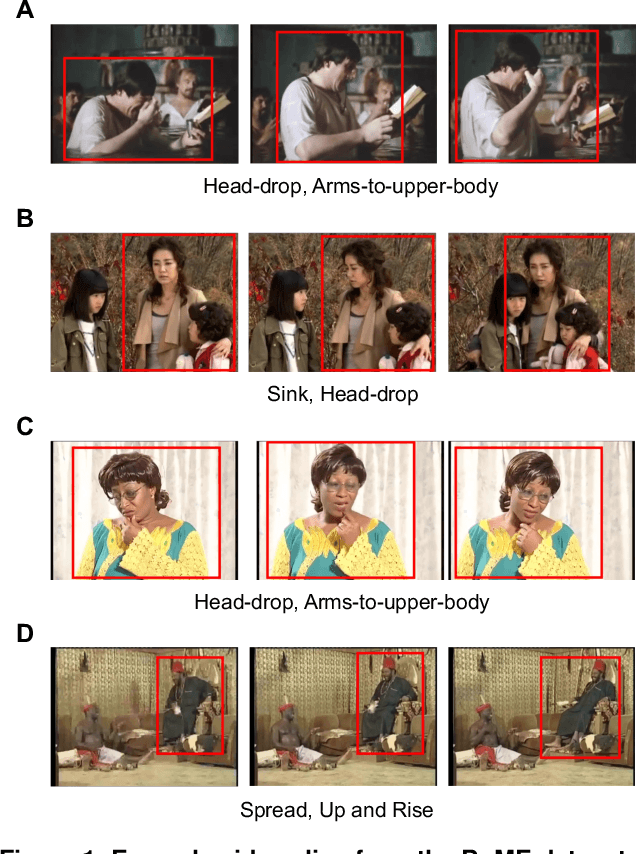

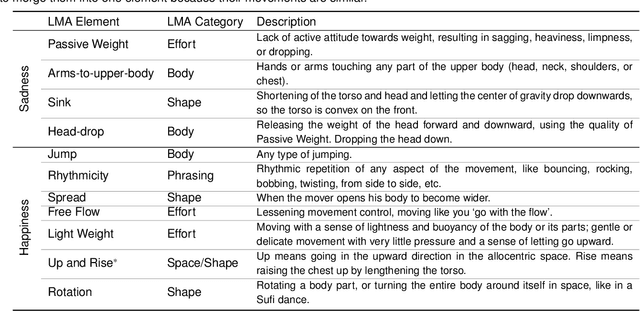

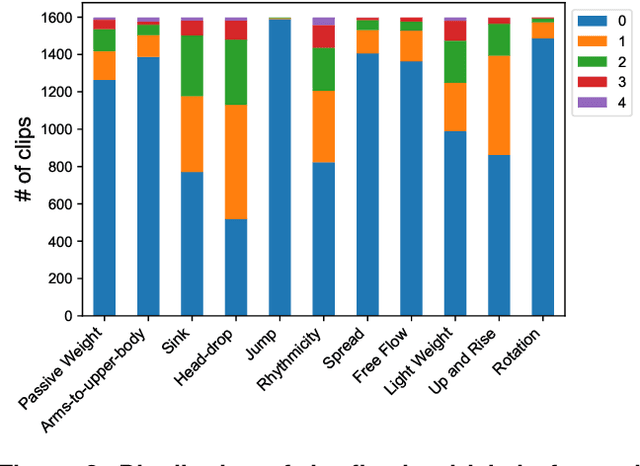

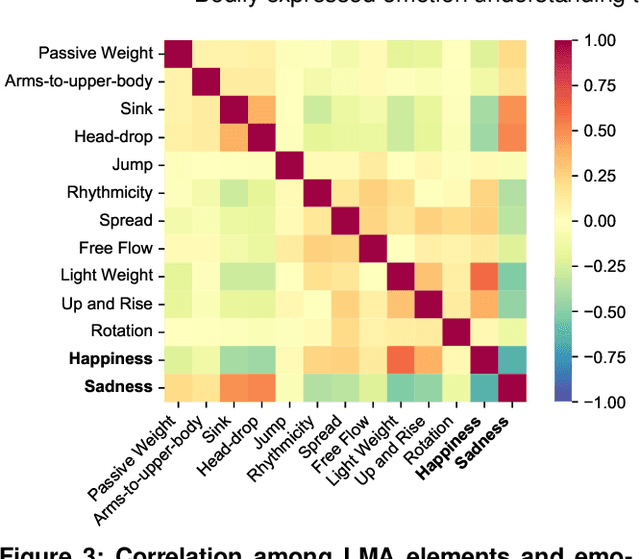

Bodily expressed emotion understanding through integrating Laban movement analysis

Apr 05, 2023

Abstract: Body movements carry important information about a person's emotions or mental state and are essential in daily communication. Enhancing the ability of machines to understand emotions expressed through body language can improve the communication of assistive robots with children and elderly users, provide psychiatric professionals with quantitative diagnostic and prognostic assistance, and aid law enforcement in identifying deception. This study develops a high-quality human motor element dataset based on the Laban Movement Analysis movement coding system and uses it to jointly learn motor elements and emotions. Our long-term ambition is to integrate knowledge from computing, psychology, and performing arts to enable automated understanding and analysis of emotion and mental state through body language. This work serves as a launchpad for further research into recognizing emotions through analysis of human movement.

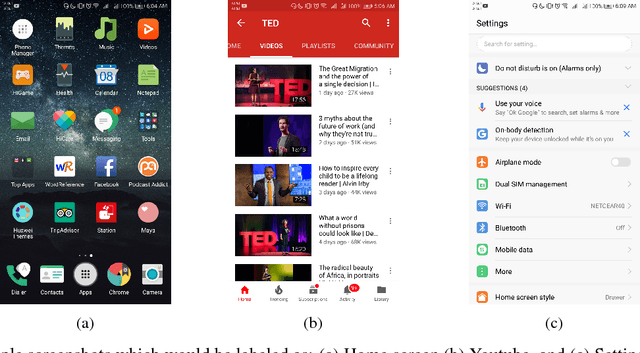

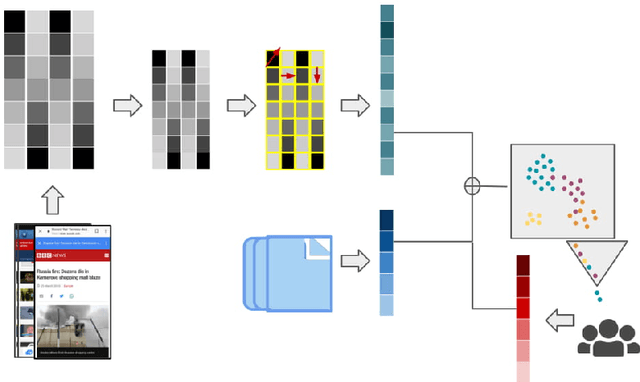

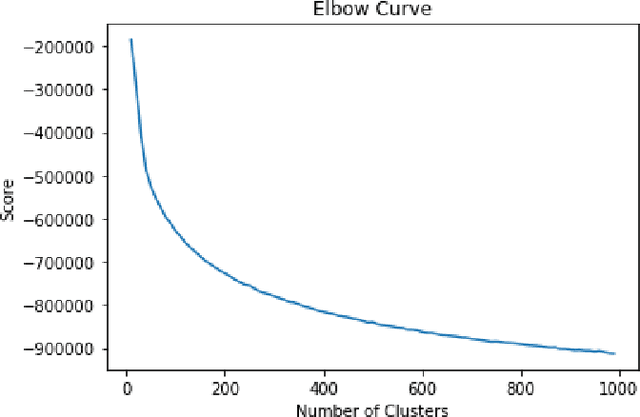

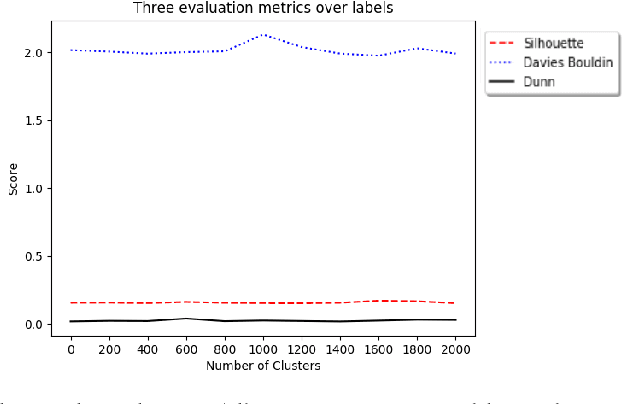

Guess What's on my Screen? Clustering Smartphone Screenshots with Active Learning

Jan 10, 2019

Abstract: A significant proportion of individuals' daily activities is experienced through digital devices. Smartphones in particular have become one of the preferred interfaces for content consumption and social interaction. Identifying the content embedded in frequently captured smartphone screenshots is thus a crucial prerequisite to studies of media behavior and health intervention planning that analyze activity interplay and content switching over time. Screenshot images can depict heterogeneous contents and applications, making the a priori definition of adequate taxonomies a cumbersome task, even for humans. Because the sensitive data captured on screens must be kept private, the effort cannot be crowd-sourced, and manual annotation is therefore costly. Thus, there is a need to examine the utility of unsupervised and semi-supervised methods for digital screenshot classification. This work examines the implications of applying clustering to large screenshot sets when only a limited number of labels is available. In this paper we develop a framework that combines K-Means clustering with Active Learning to make efficient use of labeled and unlabeled samples, with the goal of discovering latent classes and describing a large collection of screenshot data. We tested whether SVM-embedded or XGBoost-embedded solutions for class probability propagation yield better-formed cluster configurations. Visual and textual vector representations of the screenshot images are derived and combined to assess the relative contribution of multi-modal features to the overall performance.
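The general idea of pairing K-Means with an active-learning loop can be illustrated with a minimal sketch. This is not the paper's method: the SVM/XGBoost probability propagation is replaced by a simpler stand-in (centroid-margin sampling followed by a per-cluster majority vote), the 2-D points are synthetic placeholders for screenshot features, and every function name and class label is hypothetical.

```python
import random
from collections import Counter

def sq_dist(a, b):
    """Squared Euclidean distance between two equal-length tuples."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def nearest(point, centroids):
    """Index of the centroid closest to `point`."""
    return min(range(len(centroids)), key=lambda c: sq_dist(point, centroids[c]))

def kmeans(points, k, iters=20, seed=0):
    """Plain Lloyd's algorithm; returns (centroids, cluster index per point)."""
    centroids = random.Random(seed).sample(points, k)
    for _ in range(iters):
        assign = [nearest(p, centroids) for p in points]
        for c in range(k):
            members = [p for p, a in zip(points, assign) if a == c]
            if members:  # keep the old centroid if a cluster empties out
                centroids[c] = tuple(sum(col) / len(members) for col in zip(*members))
    return centroids, [nearest(p, centroids) for p in points]

def select_queries(points, centroids, assign, per_cluster):
    """Per cluster, pick the points with the smallest margin between their
    nearest and second-nearest centroid (assumes at least two clusters)."""
    def margin(i):
        d = sorted(sq_dist(points[i], c) for c in centroids)
        return d[1] - d[0]
    queries = []
    for c in range(len(centroids)):
        members = [i for i, a in enumerate(assign) if a == c]
        queries += sorted(members, key=margin)[:per_cluster]
    return queries

def propagate(assign, labeled, k):
    """Give every point the majority label of the queried points in its cluster."""
    cluster_label = {}
    for c in range(k):
        votes = Counter(lab for i, lab in labeled.items() if assign[i] == c)
        cluster_label[c] = votes.most_common(1)[0][0] if votes else None
    return [cluster_label[a] for a in assign]

def run_demo():
    # Two synthetic 4x4 grids of 2-D points stand in for screenshot features.
    grid = [(float(x), float(y)) for x in range(4) for y in range(4)]
    points = grid + [(x + 10.0, y + 10.0) for x, y in grid]
    truth = ["messaging"] * 16 + ["video"] * 16  # hypothetical classes
    oracle = lambda i: truth[i]                   # simulated human annotator
    centroids, assign = kmeans(points, k=2)
    queries = select_queries(points, centroids, assign, per_cluster=2)
    labeled = {i: oracle(i) for i in queries}     # only these labels are "paid for"
    return propagate(assign, labeled, k=2), truth, labeled
```

Calling `run_demo()` labels all 32 synthetic points from four oracle queries. On real screenshot features the clusters would be far less clean, which is where a learned classifier for propagating class probabilities becomes relevant.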
