Byeongkeun Kang

Generalized Zero-Shot Learning for Point Cloud Segmentation with Evidence-Based Dynamic Calibration

Sep 10, 2025Abstract:Generalized zero-shot semantic segmentation of 3D point clouds aims to classify each point into both seen and unseen classes. A significant challenge with these models is their tendency to make biased predictions, often favoring the classes encountered during training. This problem is more pronounced in 3D applications, where the scale of the training data is typically smaller than in image-based tasks. To address this problem, we propose a novel method called E3DPC-GZSL, which reduces overconfident predictions towards seen classes without relying on separate classifiers for seen and unseen data. E3DPC-GZSL tackles the overconfidence problem by integrating an evidence-based uncertainty estimator into a classifier. This estimator is then used to adjust prediction probabilities using a dynamic calibrated stacking factor that accounts for pointwise prediction uncertainty. In addition, E3DPC-GZSL introduces a novel training strategy that improves uncertainty estimation by refining the semantic space. This is achieved by merging learnable parameters with text-derived features, thereby improving model optimization for unseen data. Extensive experiments demonstrate that the proposed approach achieves state-of-the-art performance on generalized zero-shot semantic segmentation datasets, including ScanNet v2 and S3DIS.

* 20 pages, 12 figures, AAAI 2025

Unsupervised Contrastive Learning Using Out-Of-Distribution Data for Long-Tailed Dataset

Jun 15, 2025Abstract:This work addresses the task of self-supervised learning (SSL) on a long-tailed dataset that aims to learn balanced and well-separated representations for downstream tasks such as image classification. This task is crucial because the real world contains numerous object categories, and their distributions are inherently imbalanced. Towards robust SSL on a class-imbalanced dataset, we investigate leveraging a network trained using unlabeled out-of-distribution (OOD) data that are prevalently available online. We first train a network using both in-domain (ID) and sampled OOD data by back-propagating the proposed pseudo semantic discrimination loss alongside a domain discrimination loss. The OOD data sampling and loss functions are designed to learn a balanced and well-separated embedding space. Subsequently, we further optimize the network on ID data by unsupervised contrastive learning while using the previously trained network as a guiding network. The guiding network is utilized to select positive/negative samples and to control the strengths of attractive/repulsive forces in contrastive learning. We also distil and transfer its embedding space to the training network to maintain balancedness and separability. Through experiments on four publicly available long-tailed datasets, we demonstrate that the proposed method outperforms previous state-of-the-art methods.

* 13 pages

Completely Weakly Supervised Class-Incremental Learning for Semantic Segmentation

May 16, 2025Abstract:This work addresses the task of completely weakly supervised class-incremental learning for semantic segmentation to learn segmentation for both base and additional novel classes using only image-level labels. While class-incremental semantic segmentation (CISS) is crucial for handling diverse and newly emerging objects in the real world, traditional CISS methods require expensive pixel-level annotations for training. To overcome this limitation, partially weakly-supervised approaches have recently been proposed. However, to the best of our knowledge, this is the first work to introduce a completely weakly-supervised method for CISS. To achieve this, we propose to generate robust pseudo-labels by combining pseudo-labels from a localizer and a sequence of foundation models based on their uncertainty. Moreover, to mitigate catastrophic forgetting, we introduce an exemplar-guided data augmentation method that generates diverse images containing both previous and novel classes with guidance. Finally, we conduct experiments in three common experimental settings: 15-5 VOC, 10-10 VOC, and COCO-to-VOC, and in two scenarios: disjoint and overlap. The experimental results demonstrate that our completely weakly supervised method outperforms even partially weakly supervised methods in the 15-5 VOC and 10-10 VOC settings while achieving competitive accuracy in the COCO-to-VOC setting.

* 8 pages

Generalized Class Discovery in Instance Segmentation

Feb 12, 2025

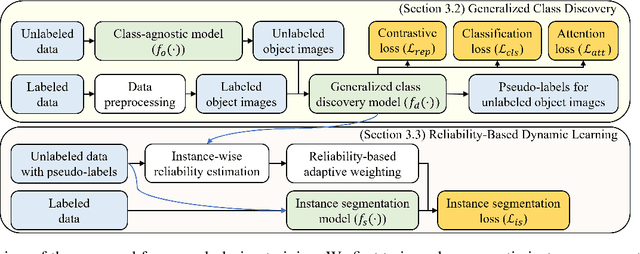

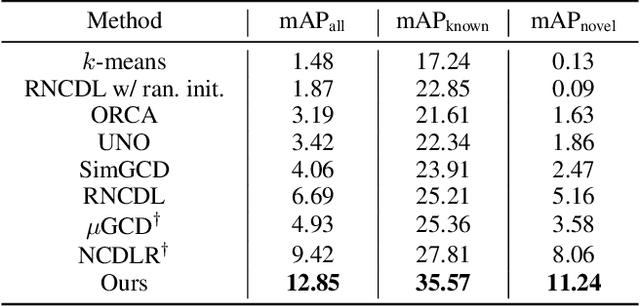

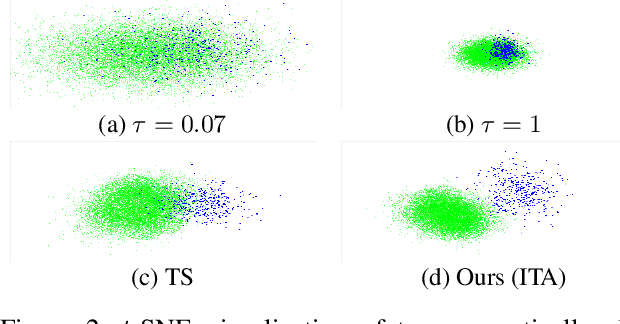

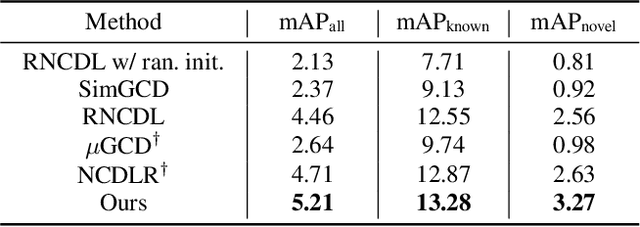

Abstract:This work addresses the task of generalized class discovery (GCD) in instance segmentation. The goal is to discover novel classes and obtain a model capable of segmenting instances of both known and novel categories, given labeled and unlabeled data. Since the real world contains numerous objects with long-tailed distributions, the instance distribution for each class is inherently imbalanced. To address the imbalanced distributions, we propose an instance-wise temperature assignment (ITA) method for contrastive learning and class-wise reliability criteria for pseudo-labels. The ITA method relaxes instance discrimination for samples belonging to head classes to enhance GCD. The reliability criteria are to avoid excluding most pseudo-labels for tail classes when training an instance segmentation network using pseudo-labels from GCD. Additionally, we propose dynamically adjusting the criteria to leverage diverse samples in the early stages while relying only on reliable pseudo-labels in the later stages. We also introduce an efficient soft attention module to encode object-specific representations for GCD. Finally, we evaluate our proposed method by conducting experiments on two settings: COCO$_{half}$ + LVIS and LVIS + Visual Genome. The experimental results demonstrate that the proposed method outperforms previous state-of-the-art methods.

Content-Aware Preserving Image Generation

Nov 15, 2024

Abstract:Remarkable progress has been achieved in image generation with the introduction of generative models. However, precisely controlling the content in generated images remains a challenging task due to their fundamental training objective. This paper addresses this challenge by proposing a novel image generation framework explicitly designed to incorporate desired content in output images. The framework utilizes advanced encoding techniques, integrating subnetworks called content fusion and frequency encoding modules. The frequency encoding module first captures features and structures of reference images by exclusively focusing on selected frequency components. Subsequently, the content fusion module generates a content-guiding vector that encapsulates desired content features. During the image generation process, content-guiding vectors from real images are fused with projected noise vectors. This ensures the production of generated images that not only maintain consistent content from guiding images but also exhibit diverse stylistic variations. To validate the effectiveness of the proposed framework in preserving content attributes, extensive experiments are conducted on widely used benchmark datasets, including Flickr-Faces-High Quality, Animal Faces High Quality, and Large-scale Scene Understanding datasets.

MSTA3D: Multi-scale Twin-attention for 3D Instance Segmentation

Nov 05, 2024Abstract:Recently, transformer-based techniques incorporating superpoints have become prevalent in 3D instance segmentation. However, they often encounter an over-segmentation problem, especially noticeable with large objects. Additionally, unreliable mask predictions stemming from superpoint mask prediction further compound this issue. To address these challenges, we propose a novel framework called MSTA3D. It leverages multi-scale feature representation and introduces a twin-attention mechanism to effectively capture them. Furthermore, MSTA3D integrates a box query with a box regularizer, offering a complementary spatial constraint alongside semantic queries. Experimental evaluations on ScanNetV2, ScanNet200 and S3DIS datasets demonstrate that our approach surpasses state-of-the-art 3D instance segmentation methods.

* 14 pages, 9 figures, 7 tables, conference

Enhancing Long-Term Person Re-Identification Using Global, Local Body Part, and Head Streams

Mar 05, 2024

Abstract:This work addresses the task of long-term person re-identification. Typically, person re-identification assumes that people do not change their clothes, which limits its applications to short-term scenarios. To overcome this limitation, we investigate long-term person re-identification, which considers both clothes-changing and clothes-consistent scenarios. In this paper, we propose a novel framework that effectively learns and utilizes both global and local information. The proposed framework consists of three streams: global, local body part, and head streams. The global and head streams encode identity-relevant information from an entire image and a cropped image of the head region, respectively. Both streams encode the most distinct, less distinct, and average features using the combinations of adversarial erasing, max pooling, and average pooling. The local body part stream extracts identity-related information for each body part, allowing it to be compared with the same body part from another image. Since body part annotations are not available in re-identification datasets, pseudo-labels are generated using clustering. These labels are then utilized to train a body part segmentation head in the local body part stream. The proposed framework is trained by backpropagating the weighted summation of the identity classification loss, the pair-based loss, and the pseudo body part segmentation loss. To demonstrate the effectiveness of the proposed method, we conducted experiments on three publicly available datasets (Celeb-reID, PRCC, and VC-Clothes). The experimental results demonstrate that the proposed method outperforms the previous state-of-the-art method.

* 16 pages

Multiscale Vision Transformer With Deep Clustering-Guided Refinement for Weakly Supervised Object Localization

Dec 15, 2023

Abstract:This work addresses the task of weakly-supervised object localization. The goal is to learn object localization using only image-level class labels, which are much easier to obtain compared to bounding box annotations. This task is important because it reduces the need for labor-intensive ground-truth annotations. However, methods for object localization trained using weak supervision often suffer from limited accuracy in localization. To address this challenge and enhance localization accuracy, we propose a multiscale object localization transformer (MOLT). It comprises multiple object localization transformers that extract patch embeddings across various scales. Moreover, we introduce a deep clustering-guided refinement method that further enhances localization accuracy by utilizing separately extracted image segments. These segments are obtained by clustering pixels using convolutional neural networks. Finally, we demonstrate the effectiveness of our proposed method by conducting experiments on the publicly available ILSVRC-2012 dataset.

* 5 pages

Pixel-Level Clustering Network for Unsupervised Image Segmentation

Oct 24, 2023

Abstract:While image segmentation is crucial in various computer vision applications, such as autonomous driving, grasping, and robot navigation, annotating all objects at the pixel-level for training is nearly impossible. Therefore, the study of unsupervised image segmentation methods is essential. In this paper, we present a pixel-level clustering framework for segmenting images into regions without using ground truth annotations. The proposed framework includes feature embedding modules with an attention mechanism, a feature statistics computing module, image reconstruction, and superpixel segmentation to achieve accurate unsupervised segmentation. Additionally, we propose a training strategy that utilizes intra-consistency within each superpixel, inter-similarity/dissimilarity between neighboring superpixels, and structural similarity between images. To avoid potential over-segmentation caused by superpixel-based losses, we also propose a post-processing method. Furthermore, we present an extension of the proposed method for unsupervised semantic segmentation. We conducted experiments on three publicly available datasets (Berkeley segmentation dataset, PASCAL VOC 2012 dataset, and COCO-Stuff dataset) to demonstrate the effectiveness of the proposed framework. The experimental results show that the proposed framework outperforms previous state-of-the-art methods.

* 13 pages

FDCNet: Feature Drift Compensation Network for Class-Incremental Weakly Supervised Object Localization

Sep 17, 2023

Abstract:This work addresses the task of class-incremental weakly supervised object localization (CI-WSOL). The goal is to incrementally learn object localization for novel classes using only image-level annotations while retaining the ability to localize previously learned classes. This task is important because annotating bounding boxes for every new incoming data is expensive, although object localization is crucial in various applications. To the best of our knowledge, we are the first to address this task. Thus, we first present a strong baseline method for CI-WSOL by adapting the strategies of class-incremental classifiers to mitigate catastrophic forgetting. These strategies include applying knowledge distillation, maintaining a small data set from previous tasks, and using cosine normalization. We then propose the feature drift compensation network to compensate for the effects of feature drifts on class scores and localization maps. Since updating network parameters to learn new tasks causes feature drifts, compensating for the final outputs is necessary. Finally, we evaluate our proposed method by conducting experiments on two publicly available datasets (ImageNet-100 and CUB-200). The experimental results demonstrate that the proposed method outperforms other baseline methods.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge