Bruce Spottiswoode

TAI-GAN: A Temporally and Anatomically Informed Generative Adversarial Network for early-to-late frame conversion in dynamic cardiac PET inter-frame motion correction

Feb 14, 2024

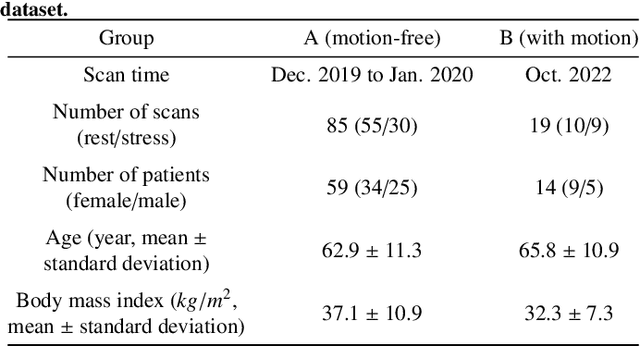

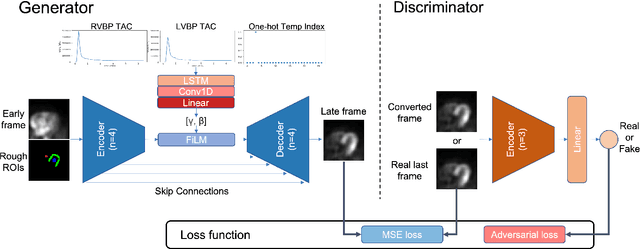

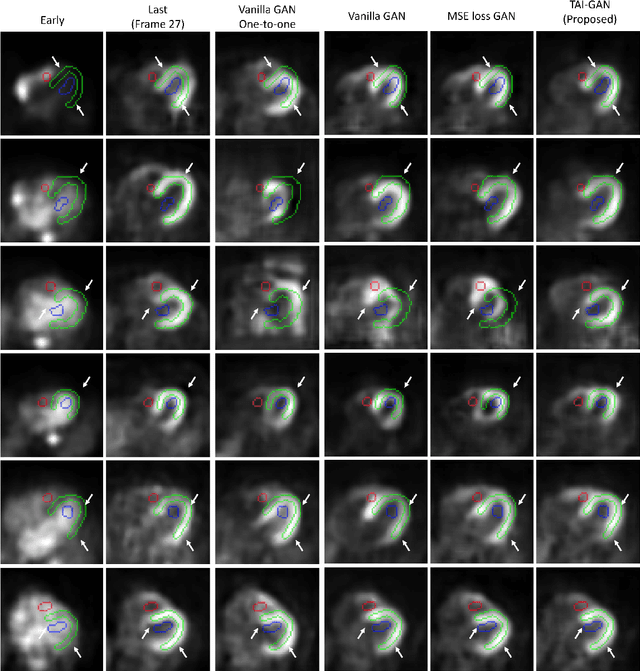

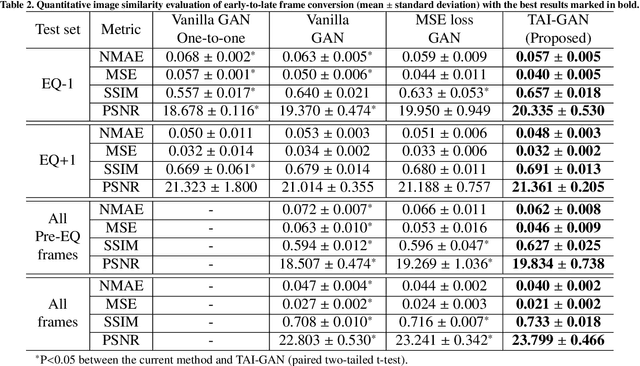

Abstract: Inter-frame motion in dynamic cardiac positron emission tomography (PET) using rubidium-82 (82-Rb) myocardial perfusion imaging impacts myocardial blood flow (MBF) quantification and the diagnostic accuracy for coronary artery disease. However, the high cross-frame distribution variation due to rapid tracer kinetics poses a considerable challenge for inter-frame motion correction, especially for early frames, where intensity-based image registration techniques often fail. To address this issue, we propose a novel method called Temporally and Anatomically Informed Generative Adversarial Network (TAI-GAN) that utilizes an all-to-one mapping to convert early frames into frames with a tracer distribution similar to that of the last reference frame. The TAI-GAN consists of a feature-wise linear modulation layer that encodes channel-wise parameters generated from temporal information, and rough cardiac segmentation masks with local shifts that serve as anatomical information. Our proposed method was evaluated on a clinical 82-Rb PET dataset, and the results show that our TAI-GAN can produce converted early frames with high image quality, comparable to the real reference frames. After TAI-GAN conversion, the motion estimation accuracy and subsequent MBF quantification with both conventional and deep learning-based motion correction methods were improved compared to using the original frames.

TAI-GAN: Temporally and Anatomically Informed GAN for early-to-late frame conversion in dynamic cardiac PET motion correction

Aug 23, 2023

Abstract: The rapid tracer kinetics of rubidium-82 ($^{82}$Rb) and high variation of cross-frame distribution in dynamic cardiac positron emission tomography (PET) raise significant challenges for inter-frame motion correction, particularly for the early frames where conventional intensity-based image registration techniques are not applicable. Alternatively, a promising approach utilizes generative methods to handle the tracer distribution changes to assist existing registration methods. To improve frame-wise registration and parametric quantification, we propose a Temporally and Anatomically Informed Generative Adversarial Network (TAI-GAN) to transform the early frames into the late reference frame using an all-to-one mapping. Specifically, a feature-wise linear modulation layer encodes channel-wise parameters generated from temporal tracer kinetics information, and rough cardiac segmentations with local shifts serve as the anatomical information. We validated our proposed method on a clinical $^{82}$Rb PET dataset and found that our TAI-GAN can produce converted early frames with high image quality, comparable to the real reference frames. After TAI-GAN conversion, motion estimation accuracy and clinical myocardial blood flow (MBF) quantification were improved compared to using the original frames. Our code is published at https://github.com/gxq1998/TAI-GAN.
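
The feature-wise linear modulation idea mentioned in the abstract can be illustrated generically: a conditioning vector is mapped to one scale and one shift per channel, which are then applied to the feature maps. The sketch below is not taken from the TAI-GAN repository; the function name `film`, the linear maps `w_gamma` and `w_beta`, the array shapes, and the example conditioning values are all assumptions made for illustration.

```python
import numpy as np


def film(features, condition, w_gamma, w_beta):
    """Generic feature-wise linear modulation (illustrative, not the authors' code).

    features:  (C, H, W) array of feature maps
    condition: (D,) conditioning vector, e.g. some encoding of temporal information
    w_gamma, w_beta: (C, D) hypothetical linear maps that turn the conditioning
                     vector into a per-channel scale and shift
    """
    gamma = w_gamma @ condition  # per-channel scale, shape (C,)
    beta = w_beta @ condition    # per-channel shift, shape (C,)
    # Broadcast the channel-wise parameters over the spatial dimensions.
    return gamma[:, None, None] * features + beta[:, None, None]


rng = np.random.default_rng(0)
features = rng.standard_normal((4, 8, 8))   # 4 channels of 8x8 features (made up)
condition = np.array([0.25, 1.0])           # made-up conditioning values
w_gamma = rng.standard_normal((4, 2))
w_beta = rng.standard_normal((4, 2))

out = film(features, condition, w_gamma, w_beta)
print(out.shape)
```

In a trained network the scale and shift would come from learned layers rather than random matrices; the published repository is the place to check how the authors actually condition the generator.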

Unsupervised inter-frame motion correction for whole-body dynamic PET using convolutional long short-term memory in a convolutional neural network

Jun 13, 2022

Abstract: Subject motion in whole-body dynamic PET introduces inter-frame mismatch and seriously impacts parametric imaging. Traditional non-rigid registration methods are generally computationally intensive and time-consuming. Deep learning approaches are promising in achieving high accuracy with fast speed, but have yet to be investigated with consideration for tracer distribution changes or in the whole-body scope. In this work, we developed an unsupervised automatic deep learning-based framework to correct inter-frame body motion. The motion estimation network is a convolutional neural network with a combined convolutional long short-term memory layer, fully utilizing dynamic temporal features and spatial information. Our dataset contains 27 subjects, each of whom underwent a 90-min FDG whole-body dynamic PET scan. With 9-fold cross-validation, compared with both traditional and deep learning baselines, we demonstrated that the proposed network obtained superior performance in enhanced qualitative and quantitative spatial alignment between parametric $K_{i}$ and $V_{b}$ images and in significantly reduced parametric fitting error. We also showed the potential of the proposed motion correction method for impacting downstream analysis of the estimated parametric images, improving the ability to distinguish malignant from benign hypermetabolic regions of interest. Once trained, the motion estimation inference time of our proposed network was around 460 times faster than the conventional registration baseline, showing its potential to be easily applied in clinical settings.
