Bowen Xie

Conditional Image Generation with Pretrained Generative Model

Dec 20, 2023

Abstract: In recent years, diffusion models have gained popularity for their ability to generate higher-quality images than GAN models. However, like other large generative models, they require a huge amount of data, computational resources, and meticulous tuning for successful training. This poses a significant challenge, making such training infeasible for most individuals. As a result, the research community has devised methods that leverage pre-trained unconditional diffusion models with additional guidance for conditional image generation. These methods enable conditional image generation from diverse inputs and, most importantly, circumvent the need to train the diffusion model. In this paper, our objective is to reduce the time and computational overhead introduced by the addition of guidance in diffusion models while maintaining comparable image quality. We propose a set of methods based on our empirical analysis, demonstrating a reduction in computation time by approximately threefold.

MOB-FL: Mobility-Aware Federated Learning for Intelligent Connected Vehicles

Dec 07, 2022

Abstract: Federated learning (FL) is a promising approach to enable the future Internet of vehicles consisting of intelligent connected vehicles (ICVs) with powerful sensing, computing and communication capabilities. We consider a base station (BS) coordinating nearby ICVs to train a neural network in a collaborative yet distributed manner, in order to limit data traffic and privacy leakage. However, due to the mobility of vehicles, the connections between the BS and ICVs are short-lived, which affects the resource utilization of ICVs, and thus, the convergence speed of the training process. In this paper, we propose an accelerated FL-ICV framework, by optimizing the duration of each training round and the number of local iterations, for better convergence performance of FL. We propose a mobility-aware optimization algorithm called MOB-FL, which aims at maximizing the resource utilization of ICVs under short-lived wireless connections, so as to increase the convergence speed. Simulation results based on the beam selection and the trajectory prediction tasks verify the effectiveness of the proposed solution.

MEET: Mobility-Enhanced Edge inTelligence for Smart and Green 6G Networks

Oct 27, 2022

Abstract: Edge intelligence is an emerging paradigm for real-time training and inference at the wireless edge, thus enabling mission-critical applications. Accordingly, base stations (BSs) and edge servers (ESs) need to be densely deployed, leading to huge deployment and operation costs, in particular the energy costs. In this article, we propose a new framework called Mobility-Enhanced Edge inTelligence (MEET), which exploits the sensing, communication, computing, and self-powering capabilities of intelligent connected vehicles for the smart and green 6G networks. Specifically, the operators can incorporate infrastructural vehicles as movable BSs or ESs, and schedule them in a more flexible way to align with the communication and computation traffic fluctuations. Meanwhile, the remaining compute resources of opportunistic vehicles are exploited for edge training and inference, where mobility can further enhance edge intelligence by bringing more compute resources, communication opportunities, and diverse data. In this way, the deployment and operation costs are spread over the vastly available vehicles, so that the edge intelligence is realized cost-effectively and sustainably. Furthermore, these vehicles can be either powered by renewable energy to reduce carbon emissions, or charged more flexibly during off-peak hours to cut electricity bills.

Classification of Diabetic Retinopathy Severity in Fundus Images with DenseNet121 and ResNet50

Aug 19, 2021

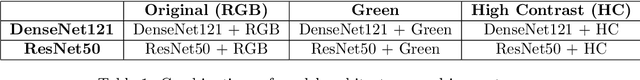

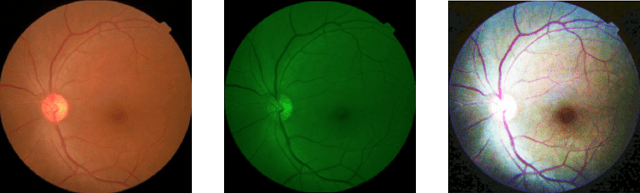

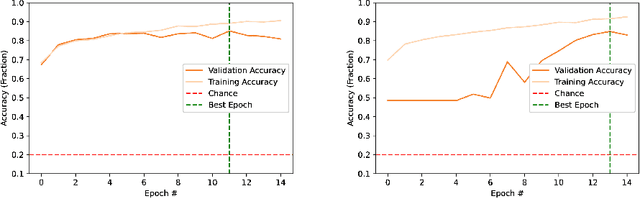

Abstract: In this work, deep learning algorithms are used to classify fundus images in terms of diabetic retinopathy severity. Six combinations of two model architectures (the Dense Convolutional Network-121 and the Residual Neural Network-50) and three image types (RGB, Green, and High Contrast) were tested to find the highest-performing combination. We achieved an average validation loss of 0.17 and a maximum validation accuracy of 85 percent. Some combinations performed better than others, though the variance across combinations was minimal overall. Green filtration performed the poorest, while amplified contrast appeared to have a negligible effect in comparison to RGB analysis. ResNet50 proved to be a less robust model than DenseNet121.
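The experimental grid described in this abstract (two architectures crossed with three image types) could be enumerated roughly as in the sketch below. The abstract does not say how the Green and High Contrast images were produced, so the `prepare` function, the green-channel replication, and the contrast factor of 1.5 are all illustrative assumptions, not the authors' preprocessing.

```python
from itertools import product

import numpy as np

# Names taken from the abstract; everything else here is hypothetical.
ARCHITECTURES = ["DenseNet121", "ResNet50"]
IMAGE_TYPES = ["RGB", "Green", "High Contrast"]


def prepare(image: np.ndarray, image_type: str) -> np.ndarray:
    """Convert a uint8 H x W x 3 image to a float image in [0, 1].

    The per-type transforms are assumptions made for illustration only.
    """
    img = image.astype(np.float32) / 255.0
    if image_type == "RGB":
        return img
    if image_type == "Green":
        # Assumed: keep the green channel and repeat it across three
        # channels so the output shape matches the RGB input shape.
        green = img[..., 1:2]
        return np.repeat(green, 3, axis=-1)
    if image_type == "High Contrast":
        # Assumed: linear contrast stretch around the image mean,
        # with an arbitrary factor of 1.5, clipped back to [0, 1].
        mean = img.mean()
        return np.clip((img - mean) * 1.5 + mean, 0.0, 1.0)
    raise ValueError(f"unknown image type: {image_type}")


# The six (architecture, image type) combinations to train and compare.
combinations = list(product(ARCHITECTURES, IMAGE_TYPES))

for architecture, image_type in combinations:
    print(architecture, image_type)
```

Each pair would then be trained and evaluated separately, with validation loss and accuracy recorded per combination.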
