Bernd Finkbeiner

Saarland University

On Conformant Planning and Model-Checking of $\exists^*\forall^*$ Hyperproperties

Dec 29, 2025Abstract:We study the connection of two problems within the planning and verification community: Conformant planning and model-checking of hyperproperties. Conformant planning is the task of finding a sequential plan that achieves a given objective independent of non-deterministic action effects during the plan's execution. Hyperproperties are system properties that relate multiple execution traces of a system and, e.g., capture information-flow and fairness policies. In this paper, we show that model-checking of $\exists^*\forall^*$ hyperproperties is closely related to the problem of computing a conformant plan. Firstly, we show that we can efficiently reduce a hyperproperty model-checking instance to a conformant planning instance, and prove that our encoding is sound and complete. Secondly, we establish the converse direction: Every conformant planning problem is, itself, a hyperproperty model-checking task.

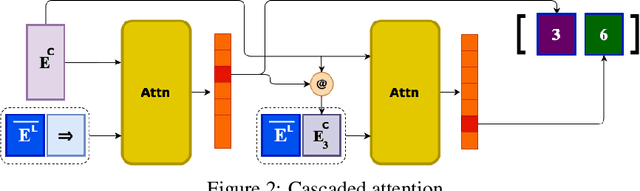

Learning Representations Through Contrastive Neural Model Checking

Oct 02, 2025Abstract:Model checking is a key technique for verifying safety-critical systems against formal specifications, where recent applications of deep learning have shown promise. However, while ubiquitous for vision and language domains, representation learning remains underexplored in formal verification. We introduce Contrastive Neural Model Checking (CNML), a novel method that leverages the model checking task as a guiding signal for learning aligned representations. CNML jointly embeds logical specifications and systems into a shared latent space through a self-supervised contrastive objective. On industry-inspired retrieval tasks, CNML considerably outperforms both algorithmic and neural baselines in cross-modal and intra-modal settings.We further show that the learned representations effectively transfer to downstream tasks and generalize to more complex formulas. These findings demonstrate that model checking can serve as an objective for learning representations for formal languages.

Stream-Based Monitoring of Algorithmic Fairness

Jan 30, 2025Abstract:Automatic decision and prediction systems are increasingly deployed in applications where they significantly impact the livelihood of people, such as for predicting the creditworthiness of loan applicants or the recidivism risk of defendants. These applications have given rise to a new class of algorithmic-fairness specifications that require the systems to decide and predict without bias against social groups. Verifying these specifications statically is often out of reach for realistic systems, since the systems may, e.g., employ complex learning components, and reason over a large input space. In this paper, we therefore propose stream-based monitoring as a solution for verifying the algorithmic fairness of decision and prediction systems at runtime. Concretely, we present a principled way to formalize algorithmic fairness over temporal data streams in the specification language RTLola and demonstrate the efficacy of this approach on a number of benchmarks. Besides synthetic scenarios that particularly highlight its efficiency on streams with a scaling amount of data, we notably evaluate the monitor on real-world data from the recidivism prediction tool COMPAS.

Non-Deterministic Planning for Hyperproperty Verification

May 22, 2024

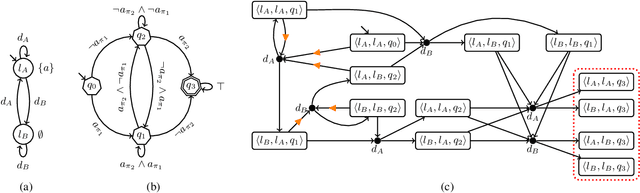

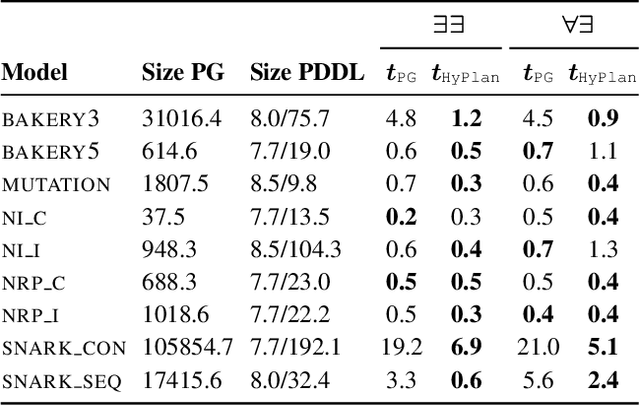

Abstract:Non-deterministic planning aims to find a policy that achieves a given objective in an environment where actions have uncertain effects, and the agent - potentially - only observes parts of the current state. Hyperproperties are properties that relate multiple paths of a system and can, e.g., capture security and information-flow policies. Popular logics for expressing temporal hyperproperties - such as HyperLTL - extend LTL by offering selective quantification over executions of a system. In this paper, we show that planning offers a powerful intermediate language for the automated verification of hyperproperties. Concretely, we present an algorithm that, given a HyperLTL verification problem, constructs a non-deterministic multi-agent planning instance (in the form of a QDec-POMDP) that, when admitting a plan, implies the satisfaction of the verification problem. We show that for large fragments of HyperLTL, the resulting planning instance corresponds to a classical, FOND, or POND planning problem. We implement our encoding in a prototype verification tool and report on encouraging experimental results.

Monitoring Second-Order Hyperproperties

Apr 15, 2024Abstract:Hyperproperties express the relationship between multiple executions of a system. This is needed in many AI-related fields, such as knowledge representation and planning, to capture system properties related to knowledge, information flow, and privacy. In this paper, we study the monitoring of complex hyperproperties at runtime. Previous work in this area has either focused on the simpler problem of monitoring trace properties (which are sets of traces, while hyperproperties are sets of sets of traces) or on monitoring first-order hyperproperties, which are expressible in temporal logics with first-order quantification over traces, such as HyperLTL. We present the first monitoring algorithm for the much more expressive class of second-order hyperproperties. Second-order hyperproperties include system properties like common knowledge, which cannot be expressed in first-order logics like HyperLTL. We introduce Hyper$^2$LTL$_f$, a temporal logic over finite traces that allows for second-order quantification over sets of traces. We study the monitoring problem in two fundamental execution models: (1) the parallel model, where a fixed number of traces is monitored in parallel, and (2) the sequential model, where an unbounded number of traces is observed sequentially, one trace after the other. For the parallel model, we show that the monitoring of the second-order hyperproperties of Hyper$^2$LTL$_f$ can be reduced to monitoring first-order hyperproperties. For the sequential model, we present a monitoring algorithm that handles second-order quantification efficiently, exploiting optimizations based on the monotonicity of subformulas, graph-based storing of executions, and fixpoint hashing. We present experimental results from a range of benchmarks, including examples from common knowledge and planning.

Hyper Strategy Logic

Mar 20, 2024

Abstract:Strategy logic (SL) is a powerful temporal logic that enables strategic reasoning in multi-agent systems. SL supports explicit (first-order) quantification over strategies and provides a logical framework to express many important properties such as Nash equilibria, dominant strategies, etc. While in SL the same strategy can be used in multiple strategy profiles, each such profile is evaluated w.r.t. a path-property, i.e., a property that considers the single path resulting from a particular strategic interaction. In this paper, we present Hyper Strategy Logic (HyperSL), a strategy logic where the outcome of multiple strategy profiles can be compared w.r.t. a hyperproperty, i.e., a property that relates multiple paths. We show that HyperSL can capture important properties that cannot be expressed in SL, including non-interference, quantitative Nash equilibria, optimal adversarial planning, and reasoning under imperfect information. On the algorithmic side, we identify an expressive fragment of HyperSL with decidable model checking and present a model-checking algorithm. We contribute a prototype implementation of our algorithm and report on encouraging experimental results.

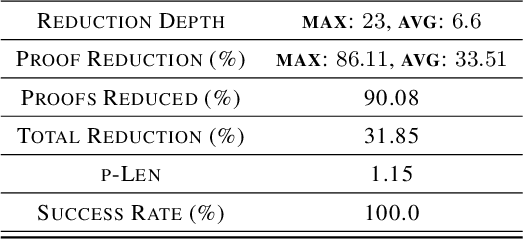

NeuRes: Learning Proofs of Propositional Satisfiability

Feb 13, 2024

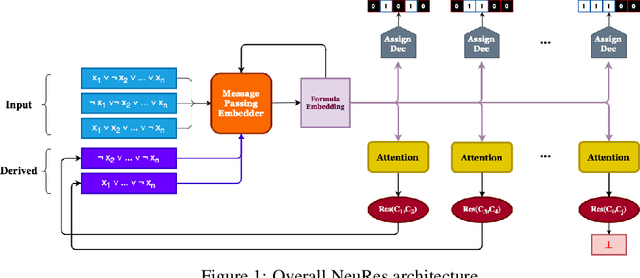

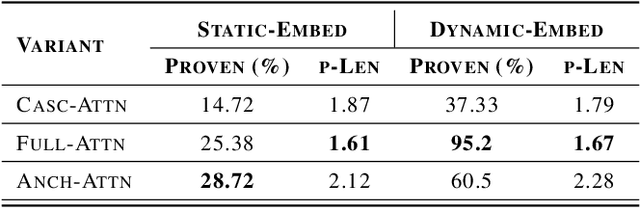

Abstract:We introduce NeuRes, a neuro-symbolic proof-based SAT solver. Unlike other neural SAT solving methods, NeuRes is capable of proving unsatisfiability as opposed to merely predicting it. By design, NeuRes operates in a certificate-driven fashion by employing propositional resolution to prove unsatisfiability and to accelerate the process of finding satisfying truth assignments in case of unsat and sat formulas, respectively. To realize this, we propose a novel architecture that adapts elements from Graph Neural Networks and Pointer Networks to autoregressively select pairs of nodes from a dynamic graph structure, which is essential to the generation of resolution proofs. Our model is trained and evaluated on a dataset of teacher proofs and truth assignments that we compiled with the same random formula distribution used by NeuroSAT. In our experiments, we show that NeuRes solves more test formulas than NeuroSAT by a rather wide margin on different distributions while being much more data-efficient. Furthermore, we show that NeuRes is capable of largely shortening teacher proofs by notable proportions. We use this feature to devise a bootstrapped training procedure that manages to reduce a dataset of proofs generated by an advanced solver by ~23% after training on it with no extra guidance.

On Alternating-time Temporal Logic, Hyperproperties, and Strategy Sharing

Dec 19, 2023Abstract:Alternating-time temporal logic (ATL$^*$) is a well-established framework for formal reasoning about multi-agent systems. However, while ATL$^*$ can reason about the strategic ability of agents (e.g., some coalition $A$ can ensure that a goal is reached eventually), we cannot compare multiple strategic interactions, nor can we require multiple agents to follow the same strategy. For example, we cannot state that coalition $A$ can reach a goal sooner (or more often) than some other coalition $A'$. In this paper, we propose HyperATLS$^*_S$, an extension of ATL$^*$ in which we can (1) compare the outcome of multiple strategic interactions w.r.t. a hyperproperty, i.e., a property that refers to multiple paths at the same time, and (2) enforce that some agents share the same strategy. We show that HyperATL$^*_S$ is a rich specification language that captures important AI-related properties that were out of reach of existing logics. We prove that model checking of HyperATL$^*_S$ on concurrent game structures is decidable. We implement our model-checking algorithm in a tool we call HyMASMC and evaluate it on a range of benchmarks.

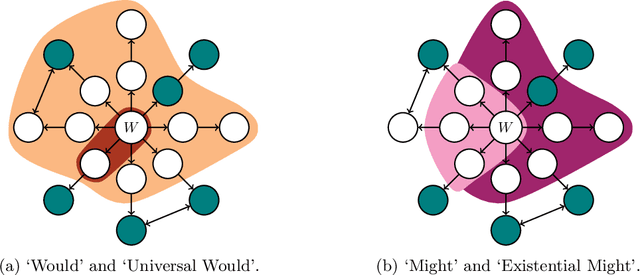

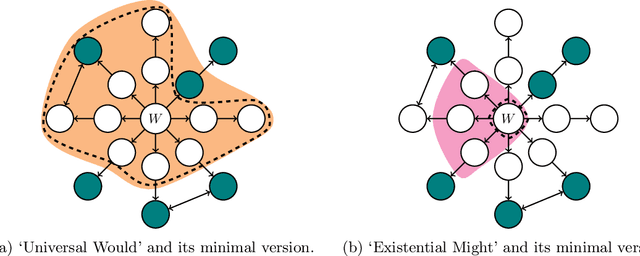

Counterfactuals Modulo Temporal Logics

Jun 15, 2023

Abstract:Lewis' theory of counterfactuals is the foundation of many contemporary notions of causality. In this paper, we extend this theory in the temporal direction to enable symbolic counterfactual reasoning on infinite sequences, such as counterexamples found by a model checker and trajectories produced by a reinforcement learning agent. In particular, our extension considers a more relaxed notion of similarity between worlds and proposes two additional counterfactual operators that close a semantic gap between the previous two in this more general setting. Further, we consider versions of counterfactuals that minimize the distance to the witnessing counterfactual worlds, a common requirement in causal analysis. To automate counterfactual reasoning in the temporal domain, we introduce a logic that combines temporal and counterfactual operators, and outline decision procedures for the satisfiability and trace-checking problems of this logic.

Iterative Circuit Repair Against Formal Specifications

Mar 02, 2023

Abstract:We present a deep learning approach for repairing sequential circuits against formal specifications given in linear-time temporal logic (LTL). Given a defective circuit and its formal specification, we train Transformer models to output circuits that satisfy the corresponding specification. We propose a separated hierarchical Transformer for multimodal representation learning of the formal specification and the circuit. We introduce a data generation algorithm that enables generalization to more complex specifications and out-of-distribution datasets. In addition, our proposed repair mechanism significantly improves the automated synthesis of circuits from LTL specifications with Transformers. It improves the state-of-the-art by $6.8$ percentage points on held-out instances and $11.8$ percentage points on an out-of-distribution dataset from the annual reactive synthesis competition.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge