Benjamin Kuipers

Learning and Acting in Peripersonal Space: Moving, Reaching, and Grasping

Sep 27, 2018

Abstract: The young infant explores its body, its sensorimotor system, and the immediately accessible parts of its environment, over the course of a few months creating a model of peripersonal space useful for reaching and grasping objects around it. Drawing on constraints from the empirical literature on infant behavior, we present a preliminary computational model of this learning process, implemented and evaluated on a physical robot. The learning agent explores the relationship between the configuration space of the arm, sensing joint angles through proprioception, and its visual perceptions of the hand and grippers. The resulting knowledge is represented as the peripersonal space (PPS) graph, where nodes represent states of the arm, edges represent safe movements, and paths represent safe trajectories from one pose to another. In our model, the learning process is driven by intrinsic motivation. When repeatedly performing an action, the agent learns the typical result, but also detects unusual outcomes, and is motivated to learn how to make those unusual results reliable. Arm motions typically leave the static background unchanged, but occasionally bump an object, changing its static position. The reach action is learned as a reliable way to bump and move an object in the environment. Similarly, once a reliable reach action is learned, it typically makes a quasi-static change in the environment, moving an object from one static position to another. The unusual outcome is that the object is accidentally grasped (thanks to the innate Palmar reflex), and thereafter moves dynamically with the hand. Learning to make grasps reliable is more complex than for reaches, but we demonstrate significant progress. Our current results are steps toward autonomous sensorimotor learning of motion, reaching, and grasping in peripersonal space, based on unguided exploration and intrinsic motivation.
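The PPS graph described in this abstract (nodes as arm states, edges as safe movements, paths as safe trajectories) can be illustrated with a minimal sketch. This is not the authors' implementation: the class name `PPSGraph`, the use of joint-angle tuples as nodes, the assumption that safe movements are reversible, and the choice of breadth-first search are all hypothetical and chosen only for illustration.

```python
from collections import deque


class PPSGraph:
    """Toy peripersonal-space graph (hypothetical structure, not the paper's code)."""

    def __init__(self):
        # Maps each arm state (assumed: a tuple of joint angles) to its safe neighbors.
        self.adj = {}

    def add_node(self, q):
        self.adj.setdefault(q, set())

    def add_safe_move(self, q1, q2):
        # Assumption: a movement observed to be safe is safe in both directions.
        self.add_node(q1)
        self.add_node(q2)
        self.adj[q1].add(q2)
        self.adj[q2].add(q1)

    def safe_path(self, start, goal):
        """Return a list of states from start to goal with the fewest edges, or None."""
        if start not in self.adj or goal not in self.adj:
            return None
        parent = {start: None}
        queue = deque([start])
        while queue:
            q = queue.popleft()
            if q == goal:
                path = []
                while q is not None:
                    path.append(q)
                    q = parent[q]
                return path[::-1]
            for n in self.adj[q]:
                if n not in parent:
                    parent[n] = q
                    queue.append(n)
        return None


# Example: three arm poses connected in a chain, plus one unconnected pose.
g = PPSGraph()
a, b, c, d = (0.0, 0.0), (0.1, 0.0), (0.1, 0.2), (1.0, 1.0)
g.add_safe_move(a, b)
g.add_safe_move(b, c)
g.add_node(d)
path_ac = g.safe_path(a, c)
path_ad = g.safe_path(a, d)
```

Under these assumptions, a reach corresponds to following a path of known-safe edges toward a target pose; poses with no connecting path are treated as unreachable.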

Object Manipulation Learning by Imitation

Nov 19, 2017

Abstract: We aim to enable a robot to learn object manipulation by imitation. Given external observations of demonstrations of object manipulation, we believe that two underlying problems to address in learning by imitation are 1) segmenting a given demonstration into skills that can be individually learned and reused, and 2) formulating the correct RL (Reinforcement Learning) problem that only considers the relevant aspects of each skill, so that the policy for each skill can be effectively learned. Previous works have made some progress in this direction, but none has taken private information into account. The public information is the information that is available in the external observations of the demonstration, and the private information is the information that is only available to the agent that executes the actions, such as tactile sensations. Our contribution is a method for the robot to automatically segment the demonstration of object manipulations into multiple skills, formulate the correct RL problem for each skill, and decide whether the private information is an important aspect of each skill based on interaction with the world. Our experiment shows that our robot learns to pick up a block and stack it onto another block by imitating an observed demonstration. The evaluation is based on 1) whether the demonstration is reasonably segmented, 2) whether the correct RL problems are formulated, and 3) whether a good policy is learned.

Ethical Considerations in Artificial Intelligence Courses

Jan 26, 2017

Abstract: The recent surge in interest in ethics in artificial intelligence may leave many educators wondering how to address moral, ethical, and philosophical issues in their AI courses. As instructors we want to develop curriculum that not only prepares students to be artificial intelligence practitioners, but also to understand the moral, ethical, and philosophical impacts that artificial intelligence will have on society. In this article we provide practical case studies and links to resources for use by AI educators. We also provide concrete suggestions on how to integrate AI ethics into a general artificial intelligence course and how to teach a stand-alone artificial intelligence ethics course.

A Nonlinear Constrained Optimization Framework for Comfortable and Customizable Motion Planning of Nonholonomic Mobile Robots - Part II

May 22, 2013

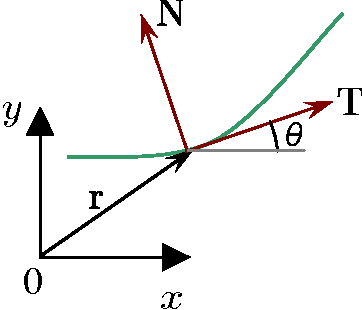

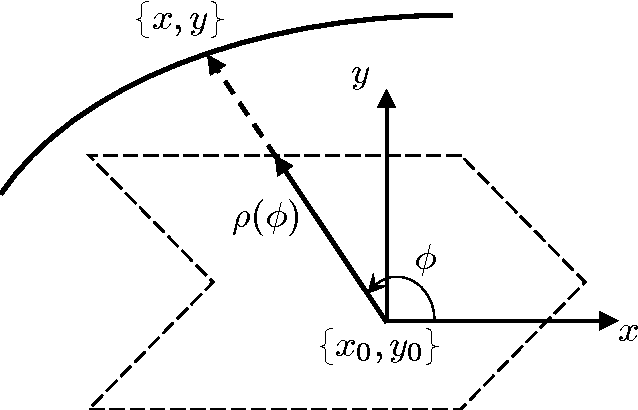

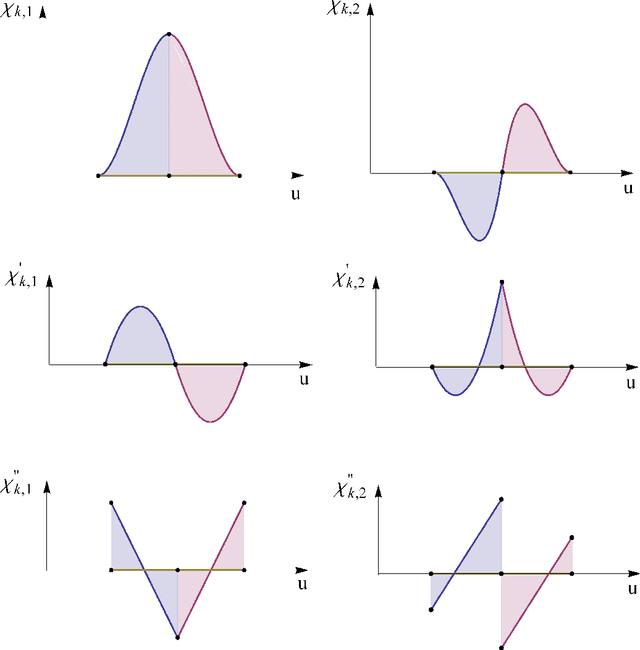

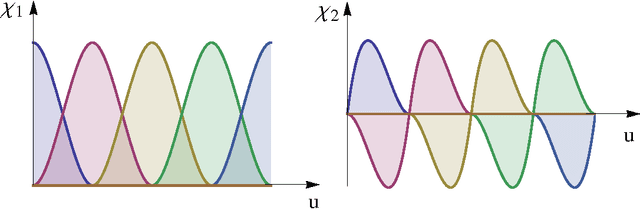

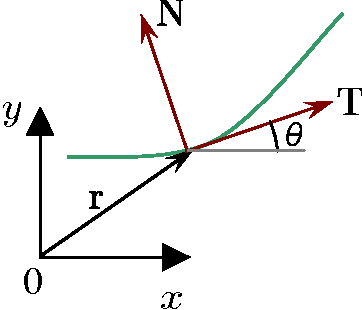

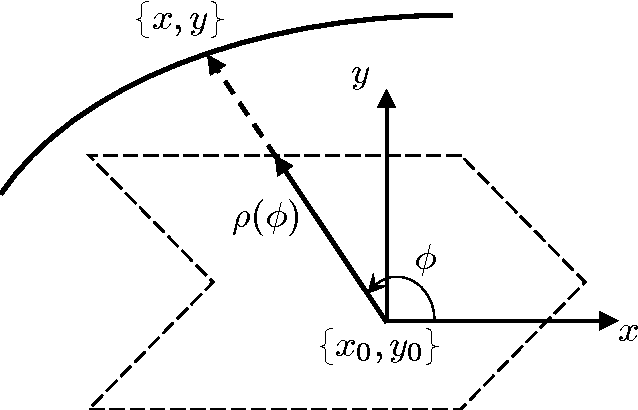

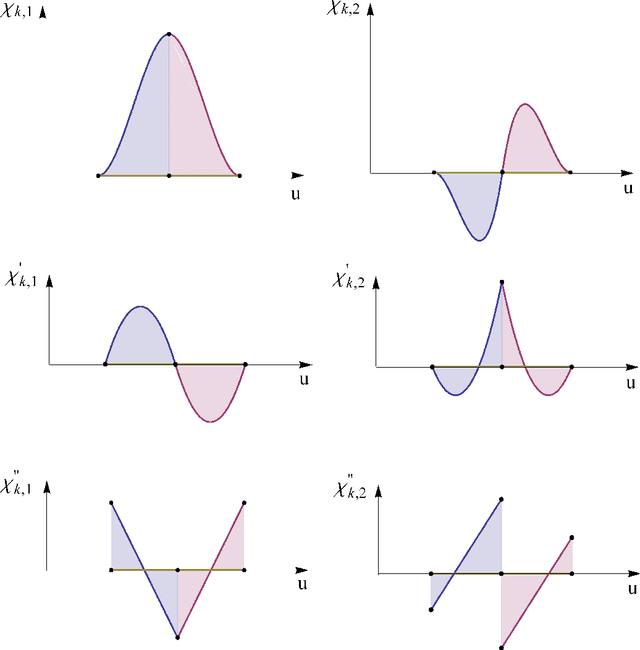

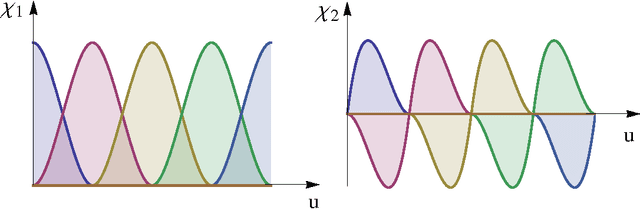

Abstract: In this series of papers, we present a motion planning framework for planning comfortable and customizable motion of nonholonomic mobile robots such as intelligent wheelchairs and autonomous cars. In Part I, we presented the mathematical foundation of our framework, where we model motion discomfort as a weighted cost functional and define comfortable motion planning as a nonlinear constrained optimization problem of computing trajectories that minimize this discomfort given the appropriate boundary conditions and constraints. In this paper, we discretize the infinite-dimensional optimization problem using conforming finite elements. We describe shape functions to handle different kinds of boundary conditions and the choice of unknowns to obtain a sparse Hessian matrix. We also describe in detail how any trajectory computation problem can have infinitely many locally optimal solutions and our method of handling them. Additionally, since we have a nonlinear and constrained problem, computation of high quality initial guesses is crucial for efficient solution. We show how to compute them.

A Nonlinear Constrained Optimization Framework for Comfortable and Customizable Motion Planning of Nonholonomic Mobile Robots - Part I

May 22, 2013

Abstract: In this series of papers, we present a motion planning framework for planning comfortable and customizable motion of nonholonomic mobile robots such as intelligent wheelchairs and autonomous cars. In this first paper, we present the mathematical foundation of our framework. The motion of a mobile robot that transports a human should be comfortable and customizable. We identify several properties that a trajectory must have for comfort. We model motion discomfort as a weighted cost functional and define comfortable motion planning as a nonlinear constrained optimization problem of computing trajectories that minimize this discomfort given the appropriate boundary conditions and constraints. The optimization problem is infinite-dimensional and we discretize it using conforming finite elements. We also outline a method by which different users may customize the motion to achieve personal comfort. Although there exists significant past work in kinodynamic motion planning, to the best of our knowledge our work is the first comprehensive formulation of kinodynamic motion planning for a nonholonomic mobile robot as a nonlinear optimization problem that includes all of the following: a careful analysis of boundary conditions, continuity requirements on the trajectory, dynamic constraints, obstacle avoidance constraints, and a robust numerical implementation. In this paper, we present the mathematical foundation of the motion planning framework and formulate the full nonlinear constrained optimization problem. We describe, in brief, the discretization method using finite elements and the process of computing initial guesses for the optimization problem. Details of the above two are presented in Part II of the series.
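As a rough illustration of what "a weighted cost functional" minimized under constraints can look like, one generic form is sketched below. The abstract does not give the actual terms, so the weights $w_i$, the integrands $f_i$, the travel time $T$, and the obstacle functions $g_j$ are all assumed symbols and not the paper's notation. Only the rolling-without-slipping condition is the standard nonholonomic constraint for a wheeled robot with position $(x, y)$ and heading $\theta$.

$$
\begin{aligned}
\min_{q(\cdot),\,T} \quad & J = w_0\,T + \sum_{i=1}^{k} w_i \int_0^T f_i\big(q(t), \dot q(t), \ddot q(t), \dddot q(t)\big)\,dt \\
\text{subject to} \quad & q(0) = q_{\text{start}}, \quad q(T) = q_{\text{goal}}, \\
& \dot x(t)\sin\theta(t) - \dot y(t)\cos\theta(t) = 0, \\
& g_j\big(q(t)\big) \ge 0, \quad j = 1, \dots, m .
\end{aligned}
$$

In a formulation of this kind, changing the weights $w_i$ is one plausible way for different users to customize the motion, since each weight trades one source of discomfort against the others.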

An existing, ecologically-successful genus of collectively intelligent artificial creatures

Apr 18, 2012

Abstract: People sometimes worry about the Singularity [Vinge, 1993; Kurzweil, 2005], or about the world being taken over by artificially intelligent robots. I believe the risks of these are very small. However, few people recognize that we already share our world with artificial creatures that participate as intelligent agents in our society: corporations. Our planet is inhabited by two distinct kinds of intelligent beings, individual humans and corporate entities, whose natures and interests are intimately linked. To co-exist well, we need to find ways to define the rights and responsibilities of both individual humans and corporate entities, and to find ways to ensure that corporate entities behave as responsible members of society.
