Benjamin Boerschinger

Tomayto, Tomahto. Beyond Token-level Answer Equivalence for Question Answering Evaluation

Feb 15, 2022Abstract:The predictions of question answering (QA) systems are typically evaluated against manually annotated finite sets of one or more answers. This leads to a coverage limitation that results in underestimating the true performance of systems, and is typically addressed by extending over exact match (EM) with predefined rules or with the token-level F1 measure. In this paper, we present the first systematic conceptual and data-driven analysis to examine the shortcomings of token-level equivalence measures. To this end, we define the asymmetric notion of answer equivalence (AE), accepting answers that are equivalent to or improve over the reference, and collect over 26K human judgements for candidates produced by multiple QA systems on SQuAD. Through a careful analysis of this data, we reveal and quantify several concrete limitations of the F1 measure, such as false impression of graduality, missing dependence on question, and more. Since collecting AE annotations for each evaluated model is expensive, we learn a BERT matching BEM measure to approximate this task. Being a simpler task than QA, we find BEM to provide significantly better AE approximations than F1, and more accurately reflect the performance of systems. Finally, we also demonstrate the practical utility of AE and BEM on the concrete application of minimal accurate prediction sets, reducing the number of required answers by up to 2.6 times.

Boosting Search Engines with Interactive Agents

Sep 01, 2021

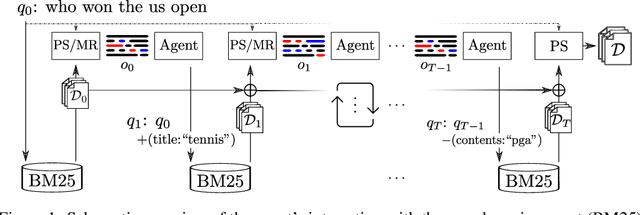

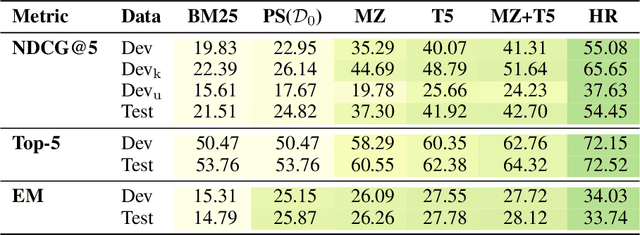

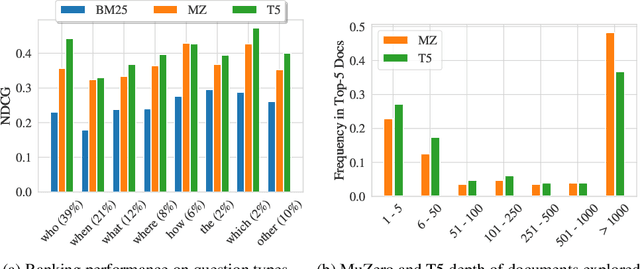

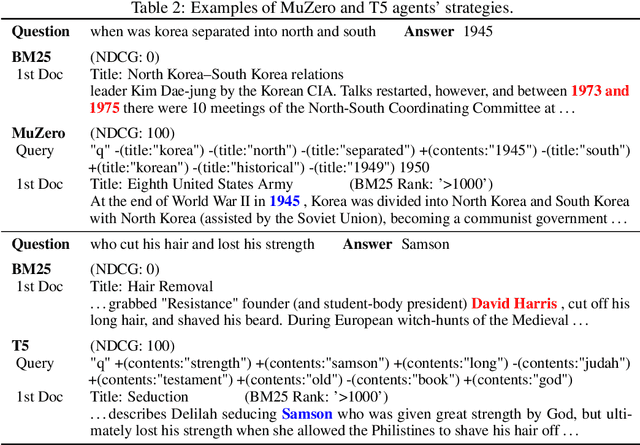

Abstract:Can machines learn to use a search engine as an interactive tool for finding information? That would have far reaching consequences for making the world's knowledge more accessible. This paper presents first steps in designing agents that learn meta-strategies for contextual query refinements. Our approach uses machine reading to guide the selection of refinement terms from aggregated search results. Agents are then empowered with simple but effective search operators to exert fine-grained and transparent control over queries and search results. We develop a novel way of generating synthetic search sessions, which leverages the power of transformer-based generative language models through (self-)supervised learning. We also present a reinforcement learning agent with dynamically constrained actions that can learn interactive search strategies completely from scratch. In both cases, we obtain significant improvements over one-shot search with a strong information retrieval baseline. Finally, we provide an in-depth analysis of the learned search policies.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge