Bart Elen

Generative AI for Synthetic Data Across Multiple Medical Modalities: A Systematic Review of Recent Developments and Challenges

Jul 02, 2024

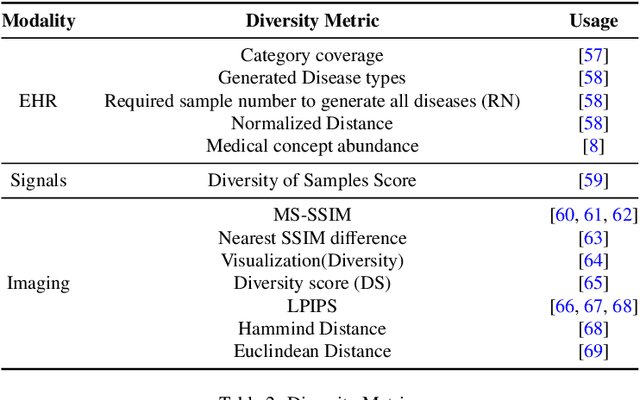

Abstract: This paper presents a comprehensive systematic review of generative models (GANs, VAEs, DMs, and LLMs) used to synthesize various medical data types, including imaging (dermoscopic, mammographic, ultrasound, CT, MRI, and X-ray), text, time-series, and tabular data (EHR). Unlike previous narrowly focused reviews, our study encompasses a broad array of medical data modalities and explores various generative models. Our search strategy queries databases such as Scopus, PubMed, and arXiv, focusing on recent works from January 2021 to November 2023 and excluding reviews and perspectives. This period emphasizes recent advancements beyond GANs, which have been extensively covered previously. The survey reveals insights from three key aspects: (1) synthesis applications and purpose of synthesis, (2) generation techniques, and (3) evaluation methods. It highlights clinically valid synthesis applications, demonstrating the potential of synthetic data to tackle diverse clinical requirements. While conditional models incorporating class labels, segmentation masks, and image translations are prevalent, there is a gap in utilizing prior clinical knowledge and patient-specific context, suggesting a need for more personalized synthesis approaches and emphasizing the importance of tailoring generative approaches to the unique characteristics of medical data. Additionally, there is a significant gap in using synthetic data beyond augmentation, such as for validation and evaluation of downstream medical AI models. The survey uncovers that the lack of standardized evaluation methodologies tailored to medical images is a barrier to clinical application, underscoring the need for in-depth evaluation approaches, benchmarking, and comparative studies to promote openness and collaboration.

Pointwise visual field estimation from optical coherence tomography in glaucoma: a structure-function analysis using deep learning

Jun 07, 2021

Abstract: Background/Aims: Standard Automated Perimetry (SAP) is the gold standard for monitoring visual field (VF) loss in glaucoma management, but it is prone to intra-subject variability. We developed and validated a deep learning (DL) regression model that estimates pointwise and overall VF loss from unsegmented optical coherence tomography (OCT) scans. Methods: Eight DL regression models were trained on different retinal imaging modalities (circumpapillary OCT at 3.5 mm, 4.1 mm, and 4.7 mm diameter, and scanning laser ophthalmoscopy (SLO) en face images) to estimate the mean deviation (MD) and the 52 threshold values. This retrospective study used data from patients who underwent a complete glaucoma examination, including a reliable Humphrey Field Analyzer (HFA) 24-2 SITA Standard VF exam and a SPECTRALIS OCT scan using the Glaucoma Module Premium Edition. Results: A total of 1378 matched OCT-VF pairs from 496 patients (863 eyes) were included for training and evaluation of the DL models. The average sample MD was -7.53 dB (range -33.8 dB to +2.0 dB). For estimation of the 52 VF threshold values, the circumpapillary OCT scan with the largest diameter (4.7 mm) achieved the best performance among all individual models (Pearson r=0.77, 95% CI=[0.72-0.82]). For MD, averaging the predictions of the OCT-trained models (3.5 mm, 4.1 mm, 4.7 mm) resulted in a Pearson r of 0.78 [0.73-0.83] on the validation set and comparable performance on the test set (Pearson r=0.79 [0.75-0.82]). Conclusion: DL on unsegmented OCT scans accurately predicts the pointwise values and mean deviation of the 24-2 VF in glaucoma patients. Automated VF estimation from unsegmented OCT could be a solution for patients unable to produce reliable perimetry results.
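The MD result above combines the three OCT-trained models by averaging their predictions and scores the ensemble with Pearson's r. A minimal sketch of that evaluation, not the authors' code: the helper names are hypothetical, and the abstract does not say how its 95% CIs were computed, so the Fisher z-transform interval here is an assumption. It also assumes independent samples, which matched OCT-VF pairs from the same patient are not, so treat the interval as illustrative only.

```python
import math
from statistics import mean


def average_predictions(per_model_preds):
    """Ensemble by averaging: element-wise mean of each model's MD predictions."""
    n = len(per_model_preds[0])
    if any(len(p) != n for p in per_model_preds):
        raise ValueError("every model must predict the same samples")
    return [mean(vals) for vals in zip(*per_model_preds)]


def pearson_r(x, y):
    """Pearson correlation between predicted and measured values."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def fisher_ci(r, n, z_crit=1.96):
    """Approximate 95% CI for r via Fisher's z-transform (assumes independent samples, n > 3, |r| < 1)."""
    z = math.atanh(r)
    se = 1.0 / math.sqrt(n - 3)
    return math.tanh(z - z_crit * se), math.tanh(z + z_crit * se)


if __name__ == "__main__":
    # Toy MD values in dB, made up for illustration (not study data).
    measured = [-2.1, -5.4, -9.8, -15.2, -0.7, -22.3]
    preds = [
        [-2.8, -4.9, -11.0, -13.5, -1.2, -19.8],  # hypothetical 3.5 mm model
        [-1.9, -6.1, -9.1, -16.0, -0.4, -21.0],   # hypothetical 4.1 mm model
        [-2.5, -5.0, -10.2, -14.8, -1.0, -23.1],  # hypothetical 4.7 mm model
    ]
    ensemble = average_predictions(preds)
    r = pearson_r(ensemble, measured)
    lo, hi = fisher_ci(r, len(measured))
    print(f"r = {r:.2f}, 95% CI = [{lo:.2f}, {hi:.2f}]")
```

Averaging is the simplest ensembling choice: it needs no extra training and tends to reduce the variance of the individual models' errors.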

Glaucoma detection beyond the optic disc: The importance of the peripapillary region using explainable deep learning

Mar 22, 2021

Abstract: Today, a large number of glaucoma cases remain undetected, resulting in irreversible blindness. In a quest for cost-effective screening, deep learning-based methods are being evaluated to detect glaucoma from color fundus images. Although unprecedented sensitivity and specificity values are reported, recent deep learning models for glaucoma detection lack decision transparency. Here, we propose a methodology that advances explainable deep learning in the field of glaucoma detection and estimation of the vertical cup-disc ratio (VCDR), an important risk factor. We trained and evaluated a total of 64 deep learning models using fundus images that undergo a specific cropping policy. We defined the circular crop radius as a percentage of image size, centered on the optic nerve head (ONH), with radii equally spaced from 10% to 60% (ONH crop policy). The inverse of the cropping mask was also applied to quantify the performance of models trained exclusively on ONH information (periphery crop policy). The models evaluated on original images achieved an area under the curve (AUC) of 0.94 [95% CI: 0.92-0.96] for glaucoma detection and a coefficient of determination (R²) of 77% [95% CI: 0.77-0.79] for VCDR estimation. Models trained on images from which the ONH was absent still obtained significant performance (0.88 [95% CI: 0.85-0.90] AUC for glaucoma detection and 37% [95% CI: 0.35-0.40] R² for VCDR estimation in the most extreme setup of 60% ONH crop). We validated our glaucoma detection models on a recent public data set (REFUGE) that contains images captured with a different camera, still achieving an AUC of 0.80 [95% CI: 0.76-0.84] when an ONH crop policy of 60% image size was applied. Our findings provide the first irrefutable evidence that deep learning can detect glaucoma from fundus image regions outside the ONH.
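The two cropping policies described above can be sketched as a circular mask and its inverse. This is a minimal illustration, not the authors' implementation, under several assumptions: "image size" is taken to mean the shorter image side, the ONH center is assumed to be known in advance (e.g., from a separate detector), masked pixels are filled with 0, and the function names are hypothetical.

```python
import numpy as np


def circular_mask(height, width, center_rc, radius_frac):
    """Boolean mask that is True inside a circle centered on the ONH.

    radius_frac is the crop radius as a fraction of image size; "image size"
    is assumed here to mean the shorter image side.
    """
    cy, cx = center_rc
    radius = radius_frac * min(height, width)
    yy, xx = np.ogrid[:height, :width]
    return (yy - cy) ** 2 + (xx - cx) ** 2 <= radius ** 2


def apply_crop(image, center_rc, radius_frac, policy="onh", fill=0):
    """Blank out part of a fundus image (grayscale HxW or color HxWxC).

    policy="onh":       remove the ONH-centered circle (model sees periphery only)
    policy="periphery": remove everything outside it (model sees ONH region only)
    """
    h, w = image.shape[:2]
    inside = circular_mask(h, w, center_rc, radius_frac)
    if policy == "onh":
        blank = inside
    elif policy == "periphery":
        blank = ~inside
    else:
        raise ValueError(f"unknown policy: {policy!r}")
    out = image.copy()  # leave the original image untouched
    out[blank] = fill
    return out


if __name__ == "__main__":
    img = np.full((512, 512, 3), 255, dtype=np.uint8)  # placeholder image
    onh_center = (256, 300)  # hypothetical ONH location (row, col)
    for frac in np.linspace(0.10, 0.60, 6):  # equally spaced radii, 10% to 60%
        kept = apply_crop(img, onh_center, frac, policy="onh")
        print(f"{frac:.0%} radius: {(kept[..., 0] > 0).mean():.1%} of pixels kept")
```

Because the two policies use complementary masks, every pixel is blanked under exactly one of them, which is what allows the periphery-only and ONH-only results to be compared directly.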

Pathological myopia classification with simultaneous lesion segmentation using deep learning

Jun 04, 2020

Abstract: This investigation reports the results of convolutional neural networks developed for the recently introduced PathologicAL Myopia (PALM) dataset, which consists of 1200 fundus images. We propose a new Optic Nerve Head (ONH)-based prediction enhancement for the segmentation of atrophy and fovea. Models trained on the 400 available training images achieved an AUC of 0.9867 for pathological myopia classification and a Euclidean distance of 58.27 pixels on the fovea localization task, evaluated on a test set of 400 images. For semantic segmentation of lesions, Dice and F1 scores were 0.9303 and 0.9869 on the optic disc, 0.8001 and 0.9135 on retinal atrophy, and 0.8073 and 0.7059 on retinal detachment, respectively. Our work received an award in the "PathologicAL Myopia detection from retinal images" challenge held during the IEEE International Symposium on Biomedical Imaging (April 2019). Because (pathological) myopia cases are often identified as false positives and negatives by glaucoma classification systems, we envision that the current work could aid future research in discriminating between glaucomatous and highly myopic eyes, complemented by the localization and segmentation of landmarks such as the fovea, optic disc, and atrophy.
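Two of the metrics reported above, the Dice score for segmentation masks and the Euclidean distance for fovea localization, can be computed as in the following sketch. It is not the challenge's official evaluation code; the helper names and the small `eps` smoothing term are assumptions. Note that on a single binary pixel mask, F1 equals Dice, so the separate F1 values reported for PALM are presumably defined differently; they are not reproduced here.

```python
import math

import numpy as np


def dice(pred, target, eps=1e-7):
    """Dice = 2|A ∩ B| / (|A| + |B|) for binary masks; eps makes two empty masks score 1."""
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    inter = np.logical_and(pred, target).sum()
    return (2.0 * inter + eps) / (pred.sum() + target.sum() + eps)


def mean_euclidean_distance(pred_xy, true_xy):
    """Mean pixel distance between predicted and ground-truth fovea coordinates."""
    if len(pred_xy) != len(true_xy) or not pred_xy:
        raise ValueError("need equally long, non-empty coordinate lists")
    dists = [math.dist(p, t) for p, t in zip(pred_xy, true_xy)]
    return sum(dists) / len(dists)


if __name__ == "__main__":
    # Toy 4x4 masks and coordinates, made up for illustration.
    pred_mask = np.array([[0, 1, 1, 0],
                          [0, 1, 1, 0],
                          [0, 0, 0, 0],
                          [0, 0, 0, 0]])
    true_mask = np.array([[0, 1, 1, 0],
                          [0, 1, 0, 0],
                          [0, 1, 0, 0],
                          [0, 0, 0, 0]])
    print(f"Dice: {dice(pred_mask, true_mask):.3f}")
    print(f"Fovea distance: {mean_euclidean_distance([(100, 120)], [(103, 124)]):.1f} px")
```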
