Bao-liang Lu

Document-level Neural Machine Translation with Inter-Sentence Attention

Oct 31, 2019

Abstract: Standard neural machine translation (NMT) assumes that each sentence can be translated independently of its document-level context. Most existing document-level NMT methods introduce document-level information only coarsely and do not select the most relevant part of the document context. A memory network can detect the part of its memory that is most relevant to the current sentence, which makes it a natural fit for modeling document-level context in NMT. In this work, we propose a Transformer NMT system with an associated memory network (AMN) that both captures the document-level context and selects from the memory the most salient part for the sentence being translated. Experiments on several tasks show that the proposed method significantly improves NMT performance over strong Transformer baselines and over related methods.

Semi-supervised Bayesian Deep Multi-modal Emotion Recognition

Apr 25, 2017

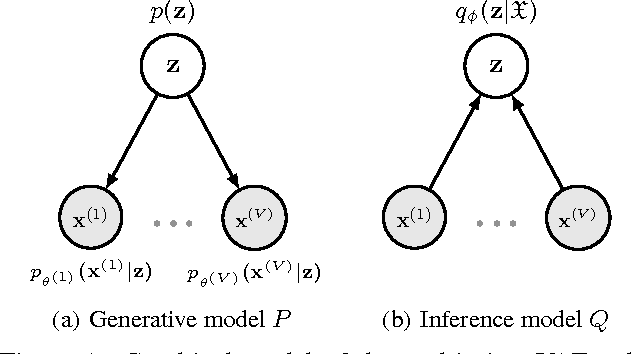

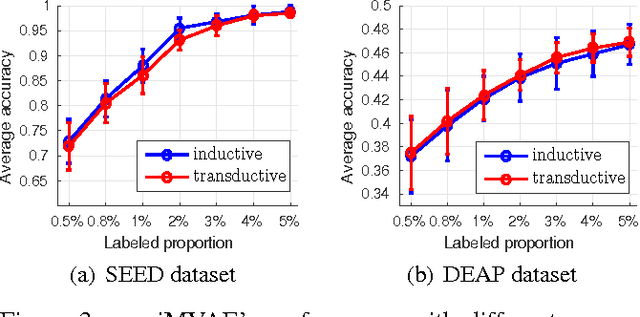

Abstract: In emotion recognition, it is difficult to recognize a person's emotional state from a single modality. In addition, annotating physiological emotional data is particularly expensive. Together, these two factors make it challenging to build an effective emotion recognition model. In this paper, we first build a multi-view deep generative model to simulate the generative process of multi-modal emotional data. By imposing a mixture-of-Gaussians assumption on the posterior approximation of the latent variables, our model can learn a shared deep representation from multiple modalities. To address the scarcity of labeled data, we further extend our multi-view model to the semi-supervised learning scenario by casting semi-supervised classification as a specialized missing-data imputation task. Our semi-supervised multi-view deep generative framework can leverage both labeled and unlabeled data from multiple modalities, and the weight factor for each modality is learned automatically. Compared with previous emotion recognition methods, our method is more robust and flexible. Experiments on two real multi-modal emotion datasets demonstrate the superiority of our framework over a number of competitors.
