Balázs Pejó

FRIDA: Free-Rider Detection using Privacy Attacks

Oct 07, 2024

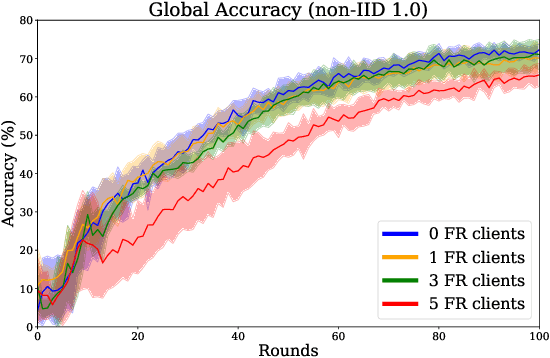

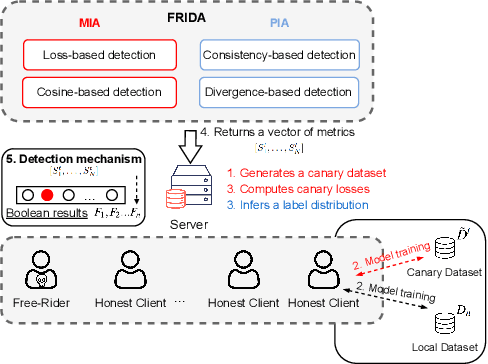

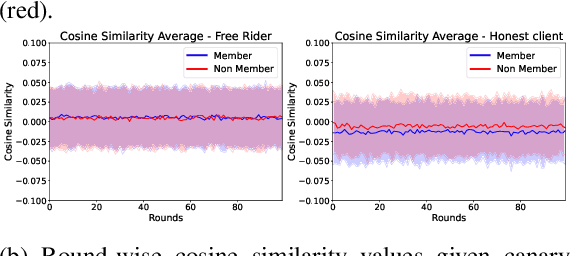

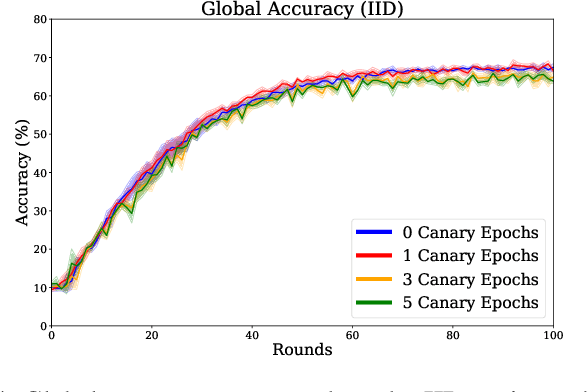

Abstract: Federated learning is increasingly popular as it enables multiple parties with limited datasets and resources to train a high-performing machine learning model collaboratively. However, like other collaborative systems, federated learning is vulnerable to free-riders: participants who do not contribute to the training but still benefit from the shared model. Free-riders not only compromise the integrity of the learning process but also slow down the convergence of the global model, resulting in increased costs for the honest participants. To address this challenge, we propose FRIDA: free-rider detection using privacy attacks, a framework that leverages inference attacks to detect free-riders. Unlike traditional methods that only capture the implicit effects of free-riding, FRIDA directly infers details of the underlying training datasets, revealing characteristics that indicate free-rider behaviour. Through extensive experiments, we demonstrate that membership and property inference attacks are effective for this purpose. Our evaluation shows that FRIDA outperforms state-of-the-art methods, especially in non-IID settings.

Industry-Scale Orchestrated Federated Learning for Drug Discovery

Oct 17, 2022

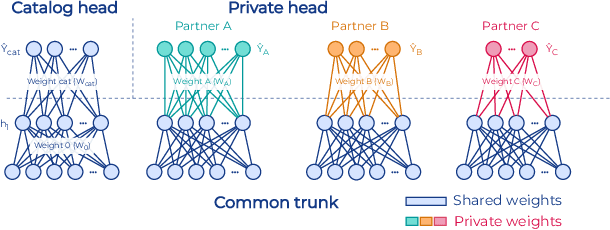

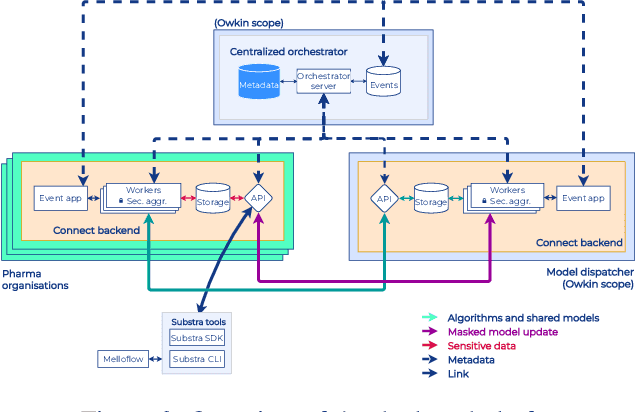

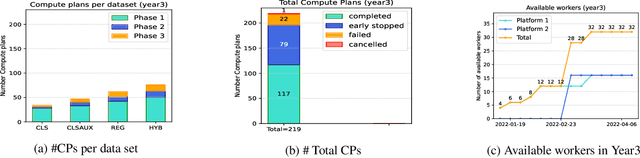

Abstract: To apply federated learning to drug discovery, we developed a novel platform in the context of the European Innovative Medicines Initiative (IMI) project MELLODDY (grant n°831472), which comprised 10 pharmaceutical companies, academic research labs, large industrial companies and startups. To the best of our knowledge, the MELLODDY platform was the first industry-scale platform to enable the creation of a global federated model for drug discovery without sharing the confidential data sets of the individual partners. The federated model was trained on the platform by aggregating the gradients of all contributing partners in a cryptographically secure way following each training iteration. The platform was deployed on an Amazon Web Services (AWS) multi-account architecture running Kubernetes clusters in private subnets. Organisationally, the roles of the different partners were codified as different rights and permissions on the platform and administered in a decentralized way. The MELLODDY platform generated new scientific discoveries, which are described in a companion paper.
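
The abstract does not say which protocol was used to aggregate gradients securely. As a rough illustration of the general idea only, the sketch below uses pairwise additive masking over integers, a common textbook approach and not necessarily what the MELLODDY platform does. The names `mask_updates`, `aggregate`, and `MODULUS` are hypothetical, and a single seeded random generator stands in for the pairwise key agreement a real deployment would need.

```python
import random

# Hypothetical modulus for the toy example; updates are assumed to be
# integer-encoded (e.g. fixed-point gradients) and smaller than MODULUS.
MODULUS = 2**31 - 1


def mask_updates(updates, seed=0):
    """Add pairwise masks that cancel out when all updates are summed.

    For each pair (i, j), partner i adds a random mask and partner j
    subtracts the same mask, so a single masked update looks random
    while the sum over all partners is unchanged.
    """
    rng = random.Random(seed)  # stand-in for pairwise shared secrets
    n = len(updates)
    dim = len(updates[0])
    masked = [list(u) for u in updates]
    for i in range(n):
        for j in range(i + 1, n):
            for k in range(dim):
                m = rng.randrange(MODULUS)
                masked[i][k] = (masked[i][k] + m) % MODULUS
                masked[j][k] = (masked[j][k] - m) % MODULUS
    return masked


def aggregate(masked_updates):
    """Sum the masked updates coordinate-wise; the masks cancel."""
    return [sum(column) % MODULUS for column in zip(*masked_updates)]


updates = [[1, 2], [3, 4], [5, 6]]
total = aggregate(mask_updates(updates))
print(total)
```

The aggregator only ever sees masked vectors, yet the sum it computes equals the sum of the original updates, which is the property the abstract relies on.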

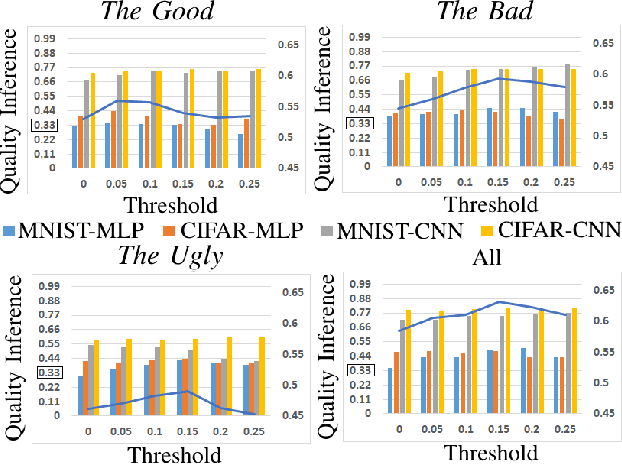

The Good, The Bad, and The Ugly: Quality Inference in Federated Learning

Jul 13, 2020

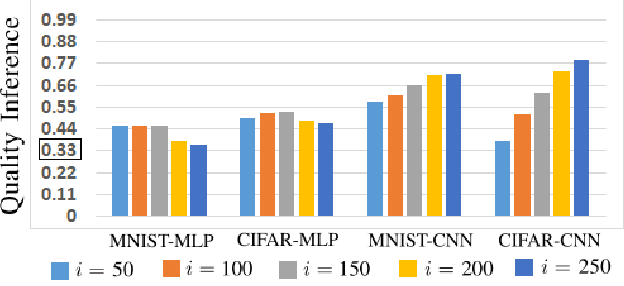

Abstract: Collaborative machine learning algorithms are developed both for efficiency reasons and to ensure the privacy protection of sensitive data used for processing. Federated learning is the most popular of these methods, where 1) learning is done locally, and 2) only a subset of the participants contribute in each training round. Although no data is shared explicitly, recent studies showed that models trained with FL could potentially still leak some information. In this paper we focus on the quality property of the datasets and investigate whether the leaked information could be connected to specific participants. Via a differential attack we analyze the information leakage using a few simple metrics, and show that reconstruction of the quality ordering among the training participants' datasets is possible. Our scoring rules use only oracle access to a test dataset and require no further background information or computational power. We demonstrate two implications of such a quality ordering leakage: 1) we use it to increase the accuracy of the model by weighting the participants' updates, and 2) we use it to detect misbehaving participants.
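
The abstract does not spell out the scoring rules, so the following is only a minimal sketch of one rule consistent with the description: since a subset of participants contributes in each round and the test accuracy can be observed after each round, participants in a round that improved accuracy gain a point and participants in a round that hurt it lose one. The function name `quality_scores` and the plus or minus one rule are assumptions made for illustration, not the paper's actual metrics.

```python
from collections import defaultdict


def quality_scores(initial_accuracy, rounds):
    """Score participants from round-wise changes in test accuracy.

    rounds: list of (participants, accuracy_after_round) tuples, where
    participants is the set of clients aggregated in that round.
    """
    scores = defaultdict(int)
    previous = initial_accuracy
    for participants, accuracy in rounds:
        # +1 if the round improved test accuracy, -1 if it degraded it.
        delta = (accuracy > previous) - (accuracy < previous)
        for participant in participants:
            scores[participant] += delta
        previous = accuracy
    return dict(scores)


rounds = [
    ({"a", "b"}, 0.5),
    ({"b", "c"}, 0.4),
    ({"a", "c"}, 0.6),
]
scores = quality_scores(0.3, rounds)
ranking = sorted(scores, key=scores.get, reverse=True)
print(scores, ranking)
```

Sorting participants by score gives an estimated quality ordering; such scores could in principle serve as aggregation weights or as a flag for consistently low-scoring participants, the two uses the abstract mentions.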
