FRIDA: Free-Rider Detection using Privacy Attacks


Oct 07, 2024

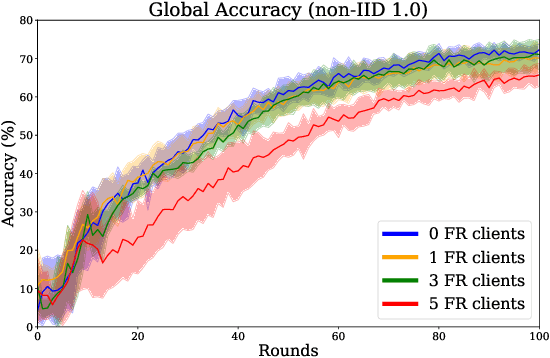

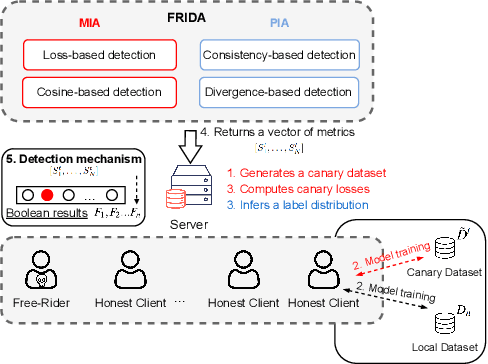

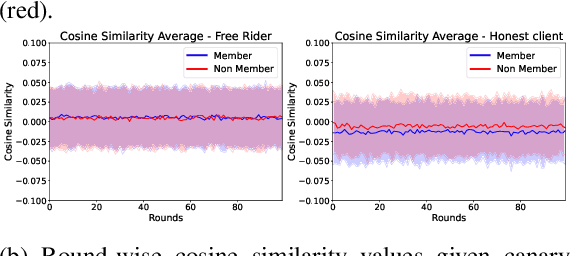

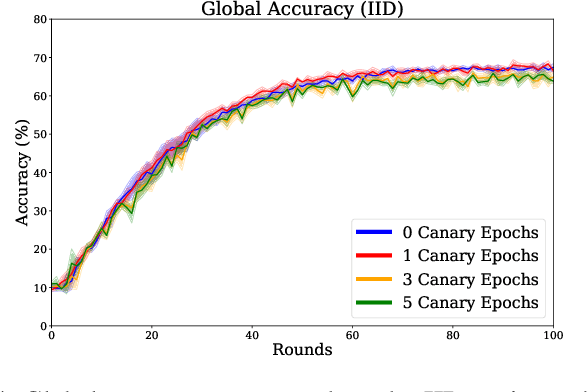

Federated learning is increasingly popular because it enables multiple parties with limited datasets and resources to collaboratively train a high-performing machine learning model. However, like other collaborative systems, federated learning is vulnerable to free-riders: participants who do not contribute to training but still benefit from the shared model. Free-riders not only compromise the integrity of the learning process but also slow the convergence of the global model, increasing costs for the honest participants. To address this challenge, we propose FRIDA (free-rider detection using privacy attacks), a framework that leverages inference attacks to detect free-riders. Unlike traditional methods, which capture only the implicit effects of free-riding, FRIDA directly infers details of the underlying training datasets, revealing characteristics that indicate free-rider behaviour. Through extensive experiments, we demonstrate that membership and property inference attacks are effective for this purpose. Our evaluation shows that FRIDA outperforms state-of-the-art methods, especially in non-IID settings.
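To make the intuition behind a membership-inference signal concrete, the following is a minimal sketch and not the FRIDA implementation. It assumes, purely for illustration, that per-sample losses of each client's submitted model are available on samples the client claims to have trained on and on samples it has not seen. A model that was actually trained tends to show lower loss on its members, while a free-rider's model would show little or no gap. The function names, the threshold, and the toy loss values are all hypothetical.

```python
from statistics import mean


def membership_gap(member_losses, non_member_losses):
    """Mean loss on non-members minus mean loss on claimed members.

    A large positive gap suggests the model has seen the claimed members.
    """
    return mean(non_member_losses) - mean(member_losses)


def flag_free_riders(client_losses, threshold=0.1):
    """Return sorted ids of clients whose membership gap is below threshold.

    `threshold` is an illustrative value, not one taken from the paper.
    """
    flagged = []
    for client_id, (member, non_member) in client_losses.items():
        if membership_gap(member, non_member) < threshold:
            flagged.append(client_id)
    return sorted(flagged)


# Hypothetical per-sample losses: (claimed members, non-members).
client_losses = {
    "honest_a": ([0.20, 0.25, 0.15], [0.90, 1.10, 1.00]),
    "honest_b": ([0.30, 0.35, 0.25], [0.80, 0.95, 0.95]),
    "suspect_c": ([1.00, 0.95, 1.05], [1.00, 1.02, 0.98]),
}

suspects = flag_free_riders(client_losses)
print(suspects)
```

In this toy setup only the client whose model shows no loss gap between claimed members and non-members is flagged. A real detector would need a calibrated threshold and a way to obtain such signals within the federated protocol, which this sketch does not address.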
