Bai Chen

for the Alzheimer's Disease Neuroimaging Initiative

Adaptive Critical Subgraph Mining for Cognitive Impairment Conversion Prediction with T1-MRI-based Brain Network

Mar 20, 2024

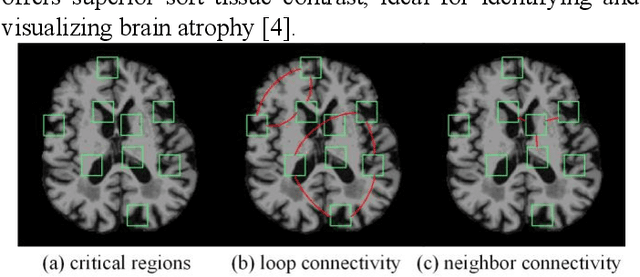

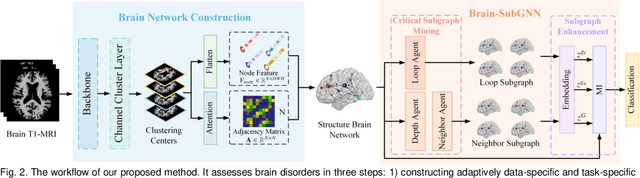

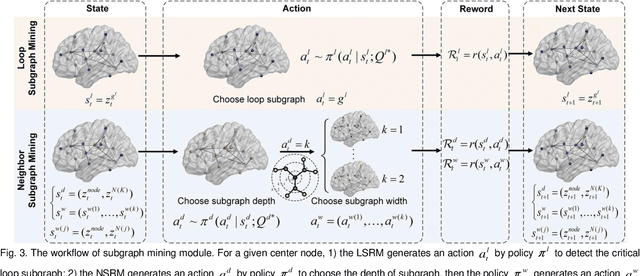

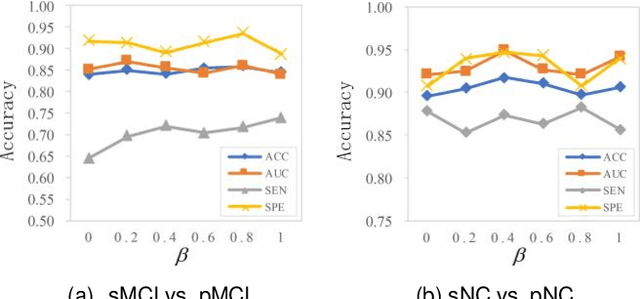

Abstract: Predicting the conversion to early-stage dementia is critical for mitigating its progression but remains challenging because the cognitive impairments and structural brain changes involved are subtle. Traditional T1-weighted magnetic resonance imaging (T1-MRI) research focuses on identifying regions of brain atrophy but often fails to address the intricate connectivity between them. This limitation underscores the need to focus on inter-regional connectivity for a comprehensive understanding of the brain's complex network. Moreover, there is a pressing demand for methods that adaptively preserve and extract critical information, particularly specialized subgraph mining techniques for brain networks. These are essential for developing high-quality feature representations that reveal the critical spatial impacts of structural brain changes and their topology. In this paper, we propose Brain-SubGNN, a novel graph representation network that mines and enhances critical subgraphs based on T1-MRI. The network provides subgraph-level interpretation, improving the interpretability of and insight gained from graph analysis. The process begins by extracting node features and a correlation matrix between nodes to construct a task-oriented brain network. Brain-SubGNN then adaptively identifies and enhances critical subgraphs, capturing both loop and neighbor subgraphs. This method reflects the loop topology and local changes, indicative of long-range connections, and maintains local and global brain attributes. Extensive experiments validate the effectiveness and advantages of Brain-SubGNN, demonstrating its potential as a powerful tool for understanding and diagnosing early-stage dementia. Source code is available at https://github.com/Leng-10/Brain-SubGNN.
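The graph-construction step described in the abstract (node features plus a correlation matrix, followed by neighbor subgraphs) can be illustrated with a minimal sketch. This is not the Brain-SubGNN implementation: the fixed correlation threshold, the toy feature vectors, and the function names `build_brain_graph` and `neighbor_subgraph` are all assumptions made for illustration, whereas the paper selects critical subgraphs adaptively.

```python
import math


def pearson(x, y):
    """Pearson correlation between two equal-length feature vectors."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    # Constant vectors have no defined correlation; treat them as uncorrelated.
    return cov / (sx * sy) if sx > 0 and sy > 0 else 0.0


def build_brain_graph(node_features, threshold=0.5):
    """Link two regions when the absolute correlation of their features
    exceeds `threshold` (a hypothetical fixed cutoff, not a learned one)."""
    n = len(node_features)
    adj = {i: set() for i in range(n)}
    for i in range(n):
        for j in range(i + 1, n):
            if abs(pearson(node_features[i], node_features[j])) > threshold:
                adj[i].add(j)
                adj[j].add(i)
    return adj


def neighbor_subgraph(adj, center, hops=1):
    """Return the set of nodes within `hops` edges of `center` (breadth-first)."""
    seen = {center}
    frontier = {center}
    for _ in range(hops):
        frontier = {v for u in frontier for v in adj[u]} - seen
        seen |= frontier
    return seen


# Toy features for four hypothetical brain regions.
features = [
    [1.0, 2.0, 3.0, 4.0],
    [2.0, 4.0, 6.0, 8.5],
    [4.0, 3.0, 2.0, 1.0],
    [1.0, -1.0, 1.0, -1.0],
]
graph = build_brain_graph(features, threshold=0.9)
sub = neighbor_subgraph(graph, center=0, hops=1)
```

In this toy example, regions 0, 1, and 2 are strongly correlated (positively or negatively) and form a closed loop, while region 3 stays isolated. A learned model would replace the hard threshold with a trainable selection mechanism.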

PointACL: Adversarial Contrastive Learning for Robust Point Clouds Representation under Adversarial Attack

Sep 14, 2022

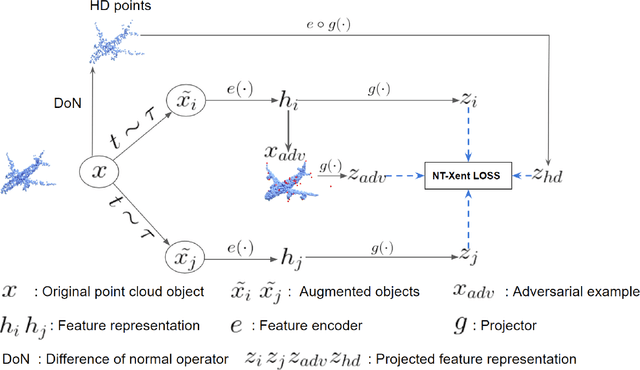

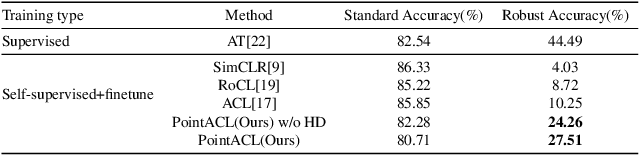

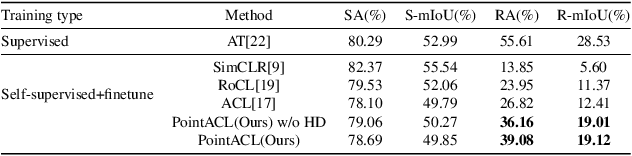

Abstract: Despite the recent success of self-supervised contrastive learning models for 3D point cloud representation, the adversarial robustness of such pre-trained models has raised concerns. Adversarial contrastive learning (ACL) is considered an effective way to improve the robustness of pre-trained models. In contrastive learning, the projector is considered an effective component for removing unnecessary feature information during contrastive pretraining, and most ACL works also use a contrastive loss with projected feature representations to generate adversarial examples in pretraining, while "unprojected" feature representations are used to generate adversarial inputs during inference. Because of the distribution gap between projected and "unprojected" features, these models are limited in their ability to obtain robust feature representations for downstream tasks. We introduce a new method to generate high-quality 3D adversarial examples for adversarial training by utilizing a virtual adversarial loss with "unprojected" feature representations in a contrastive learning framework. We present a robust-aware loss function to train the self-supervised contrastive learning framework adversarially. Furthermore, we find that selecting high-difference points with the Difference of Normal (DoN) operator as additional input for adversarial self-supervised contrastive learning can significantly improve the adversarial robustness of the pre-trained model. We validate our method, PointACL, on downstream tasks, including 3D classification and 3D segmentation, with multiple datasets. It obtains robust accuracy comparable to that of state-of-the-art contrastive adversarial learning methods.
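For readers unfamiliar with the contrastive loss that the abstract refers to, the following is a minimal sketch of a generic InfoNCE-style loss for a single anchor. It is not PointACL's robust-aware loss or its virtual adversarial loss; the function names, the temperature value, and the toy 2D feature vectors are assumptions chosen only to show how the loss rewards agreement between an anchor and its positive view.

```python
import math


def cosine(u, v):
    """Cosine similarity between two non-zero feature vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv)


def info_nce(anchor, positive, negatives, temperature=0.5):
    """Generic InfoNCE loss for one anchor: the negative log-probability of
    picking the positive among the positive and all negatives."""
    logits = [cosine(anchor, positive) / temperature]
    logits += [cosine(anchor, n) / temperature for n in negatives]
    # Log-sum-exp with max subtraction for numerical stability.
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(l - m) for l in logits))
    return log_sum - logits[0]


# Toy features: the loss is low when the positive matches the anchor
# and high when a negative matches it instead.
anchor = [1.0, 0.0]
loss_aligned = info_nce(anchor, [1.0, 0.0], [[0.0, 1.0]])
loss_misaligned = info_nce(anchor, [0.0, 1.0], [[1.0, 0.0]])
```

In an adversarial setting, a perturbation of the input would be chosen to increase a loss of this kind; which feature representation (projected or "unprojected") the loss is computed on is the design question the abstract raises.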
