Arnaud Woiselle

Self-Supervised Learning for Real-World Object Detection: a Survey

Oct 09, 2024

Abstract: Self-Supervised Learning (SSL) has emerged as a promising approach in computer vision, enabling networks to learn meaningful representations from large unlabeled datasets. SSL methods fall into two main categories: instance discrimination and Masked Image Modeling (MIM). While instance discrimination is fundamental to SSL, it was originally designed for classification and may be less effective for object detection, particularly for small objects. In this survey, we focus on SSL methods specifically tailored for real-world object detection, with an emphasis on detecting small objects in complex environments. Unlike previous surveys, we offer a detailed comparison of SSL strategies, including object-level instance discrimination and MIM methods, and assess their effectiveness for small object detection using both CNN and ViT-based architectures. Specifically, our benchmark is performed on the widely used COCO dataset, as well as on a specialized real-world dataset focused on vehicle detection in infrared remote sensing imagery. We also assess the impact of pre-training on custom domain-specific datasets, highlighting how certain SSL strategies are better suited for handling uncurated data. Our findings highlight that instance discrimination methods perform well with CNN-based encoders, while MIM methods are better suited for ViT-based architectures and custom dataset pre-training. This survey provides a practical guide for selecting optimal SSL strategies, taking into account factors such as backbone architecture, object size, and custom pre-training requirements. Ultimately, we show that choosing an appropriate SSL pre-training strategy, along with a suitable encoder, significantly enhances performance in real-world object detection, particularly for small object detection in frugal settings.

Robust infrared small target detection using self-supervised and a contrario paradigms

Oct 09, 2024

Abstract: Detecting small targets in infrared images poses significant challenges in defense applications due to the presence of complex backgrounds and the small size of the targets. Traditional object detection methods often struggle to balance high detection rates with low false alarm rates, especially when dealing with small objects. In this paper, we introduce a novel approach that combines an a contrario paradigm with Self-Supervised Learning (SSL) to improve Infrared Small Target Detection (IRSTD). On the one hand, the integration of an a contrario criterion into a YOLO detection head enhances feature map responses for small and unexpected objects while effectively controlling false alarms. On the other hand, we explore SSL techniques to overcome the challenges of limited annotated data, common in IRSTD tasks. Specifically, we benchmark several representative SSL strategies for their effectiveness in improving small object detection performance. Our findings show that instance discrimination methods outperform masked image modeling strategies when applied to YOLO-based small object detection. Moreover, the combination of the a contrario and SSL paradigms leads to significant performance improvements, narrowing the gap with state-of-the-art segmentation methods and even outperforming them in frugal settings. This two-pronged approach offers a robust solution for improving IRSTD performance, particularly under challenging conditions.

$\textit{A Contrario}$ Paradigm for YOLO-based Infrared Small Target Detection

Feb 03, 2024

Abstract: Detecting small to tiny targets in infrared images is a challenging task in computer vision, especially when it comes to differentiating these targets from noisy or textured backgrounds. Compared to segmentation neural networks, traditional object detection methods such as YOLO struggle to detect tiny objects, resulting in weaker performance on small targets. To reduce the number of false alarms while maintaining a high detection rate, we introduce an $\textit{a contrario}$ decision criterion into the training of a YOLO detector. The latter takes advantage of the $\textit{unexpectedness}$ of small targets to discriminate them from complex backgrounds. Adding this statistical criterion to a YOLOv7-tiny bridges the performance gap between state-of-the-art segmentation methods for infrared small target detection and object detection networks. It also significantly increases the robustness of YOLO in few-shot settings.

Deep learning-based deconvolution for interferometric radio transient reconstruction

Jun 24, 2023

Abstract: Radio astronomy is currently thriving with new large ground-based radio telescopes coming online in preparation for the upcoming Square Kilometre Array (SKA). Facilities like LOFAR, MeerKAT/SKA, ASKAP/SKA, and the future SKA-LOW bring tremendous sensitivity in time and frequency, improved angular resolution, and also high-rate data streams that need to be processed. They enable advanced studies of radio transients, volatile by nature, that can be detected or missed in the data. These transients are markers of high-energy accelerations of electrons and manifest in a wide range of temporal scales. Although they are usually studied with dynamic spectroscopy or time series analysis, there is a motivation to search for such sources in large interferometric datasets. This requires efficient and robust signal reconstruction algorithms. To correctly account for the temporal dependency of the data, we extend the classical image deconvolution inverse problem by adding the temporal dependency to the reconstruction problem. Then, we introduce two novel neural network architectures that can do both spatial and temporal modeling of the data and the instrumental response. Next, we simulate representative time-dependent image cubes of point source distributions and realistic telescope pointings of MeerKAT to generate toy models to build the training, validation, and test datasets. Finally, based on the test data, we evaluate the source profile reconstruction performance of the proposed methods and of the classical image deconvolution algorithm CLEAN applied frame-by-frame. In the presence of increasing noise levels in the data frames, the proposed methods display a high level of robustness compared to frame-by-frame imaging with CLEAN. The deconvolved image cubes bring a factor of 3 improvement in fidelity of the recovered temporal profiles and a factor of 2 improvement in background denoising.

Deep-NFA: a Deep $\textit{a contrario}$ Framework for Small Object Detection

Mar 02, 2023

Abstract: The detection of small objects is a challenging task in computer vision. Conventional object detection methods have difficulty balancing high detection rates with low false alarm rates. In the literature, some methods have addressed this issue by enhancing the feature map responses, but without guaranteeing robustness with respect to the number of false alarms induced by background elements. To tackle this problem, we introduce an $\textit{a contrario}$ decision criterion into the learning process to take into account the unexpectedness of small objects. This statistical criterion enhances the feature map responses while controlling the number of false alarms (NFA) and can be integrated into any semantic segmentation neural network. Our add-on NFA module not only allows us to obtain competitive results on both small target detection and crack detection tasks, but also leads to more robust and interpretable results.

Stable Long-Term Recurrent Video Super-Resolution

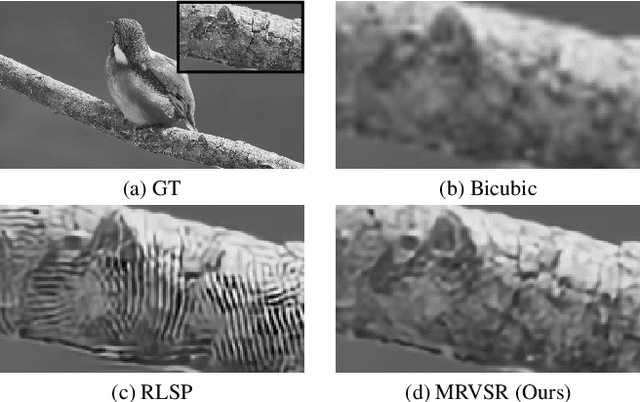

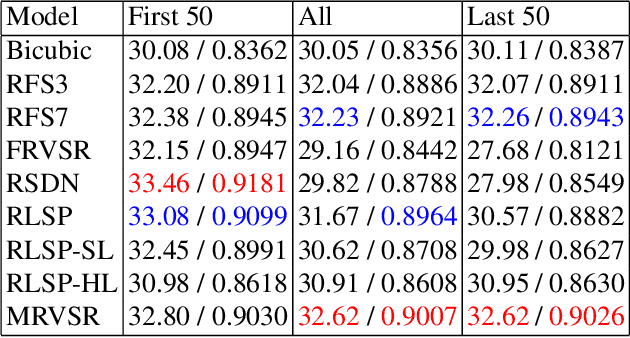

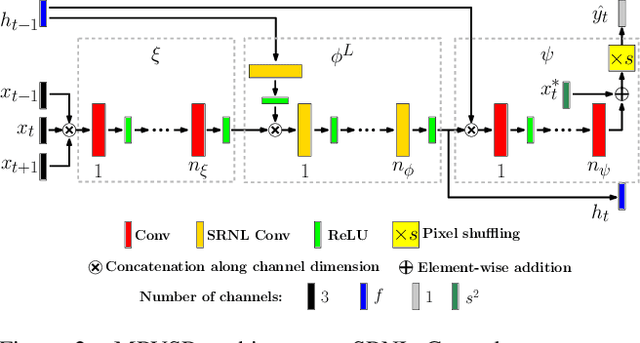

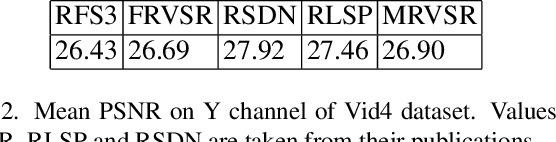

Dec 16, 2021

Abstract: Recurrent models have gained popularity in deep learning (DL) based video super-resolution (VSR), due to their increased computational efficiency, temporal receptive field and temporal consistency compared to sliding-window based models. However, when inferring on long video sequences presenting low motion (i.e., in which some parts of the scene barely move), recurrent models diverge through recurrent processing, generating high-frequency artifacts. To the best of our knowledge, no VSR study has pointed out this instability problem, which can be critical for some real-world applications. Video surveillance is a typical example where such artifacts would occur, as both the camera and the scene stay static for a long time. In this work, we expose instabilities of existing recurrent VSR networks on long sequences with low motion. We demonstrate them on a new long-sequence dataset that we created, the Quasi-Static Video Set. Finally, we introduce a new framework of recurrent VSR networks that is both stable and competitive, based on Lipschitz stability theory. We propose a new recurrent VSR network, coined Middle Recurrent Video Super-Resolution (MRVSR), based on this framework. We empirically show its competitive performance on long sequences with low motion.

Deep Unrolled Network for Video Super-Resolution

Feb 23, 2021

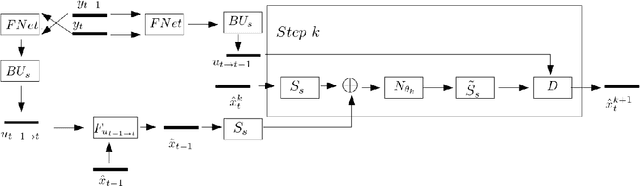

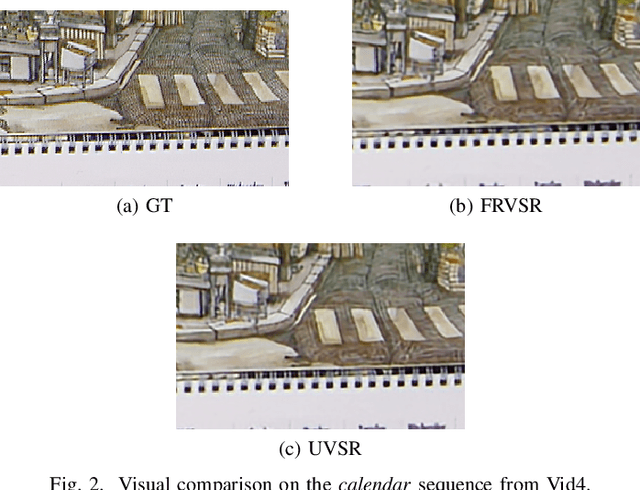

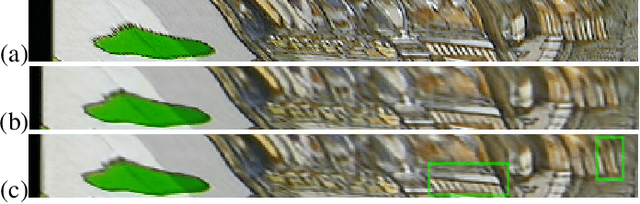

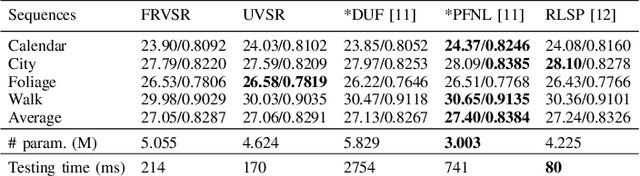

Abstract: Video super-resolution (VSR) aims to reconstruct a sequence of high-resolution (HR) images from their corresponding low-resolution (LR) versions. Traditionally, solving a VSR problem has been based on iterative algorithms that can exploit prior knowledge on image formation and assumptions on the motion. However, these classical methods struggle to incorporate complex statistics from natural images. More recently, VSR has benefited from the improvements brought by deep learning (DL) algorithms. These techniques can efficiently learn spatial patterns from large collections of images. Yet, they fail to incorporate some knowledge about the image formation model, which limits their flexibility. Unrolled optimization algorithms, developed for solving inverse problems, make it possible to include prior information in deep learning architectures. They have been used mainly for single image restoration tasks. Adapting an unrolled neural network structure can bring the following benefits. First, this may increase performance on the super-resolution task. Second, this gives neural networks better interpretability. Finally, this allows flexibility in learning a single model to non-blindly deal with multiple degradations. In this paper, we propose a new VSR neural network based on unrolled optimization techniques and discuss its performance.
