Argyris Mouzakis

Optimal Differentially Private Sampling of Unbounded Gaussians

Mar 03, 2025

Abstract: We provide the first $\widetilde{\mathcal{O}}\left(d\right)$-sample algorithm for sampling from unbounded Gaussian distributions under the constraint of $\left(\varepsilon, \delta\right)$-differential privacy. This is a quadratic improvement over previous results for the same problem, settling an open question of Ghazi, Hu, Kumar, and Manurangsi.

Private Mean Estimation with Person-Level Differential Privacy

May 30, 2024

Abstract: We study differentially private (DP) mean estimation in the case where each person holds multiple samples. Commonly referred to as the "user-level" setting, DP here requires the usual notion of distributional stability when all of a person's datapoints can be modified. Informally, if $n$ people each have $m$ samples from an unknown $d$-dimensional distribution with bounded $k$-th moments, we show that \[n = \tilde \Theta\left(\frac{d}{\alpha^2 m} + \frac{d}{\alpha m^{1/2} \varepsilon} + \frac{d}{\alpha^{k/(k-1)} m \varepsilon} + \frac{d}{\varepsilon}\right)\] people are necessary and sufficient to estimate the mean up to distance $\alpha$ in $\ell_2$-norm under $\varepsilon$-differential privacy (and its common relaxations). In the multivariate setting, we give computationally efficient algorithms under approximate DP (with slightly degraded sample complexity) and computationally inefficient algorithms under pure DP, and our nearly matching lower bounds hold for the most permissive case of approximate DP. Our computationally efficient estimators are based on the well-known noisy-clipped-mean approach, but the analysis for our setting requires new bounds on the tails of sums of independent, vector-valued, bounded-moment random variables, and a new argument for bounding the bias introduced by clipping.
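To get a feel for which term of the bound above dominates in a given regime, the four terms can be evaluated separately. The following is only a rough sketch: it drops all constants and logarithmic factors hidden by $\tilde \Theta$, and the function name and dictionary keys are illustrative labels, not terminology from the paper.

```python
import math


def person_level_terms(d, m, alpha, eps, k):
    """Evaluate the four terms of the person-level bound on n.

    Constants and log factors are ignored, so the values are only
    meaningful relative to each other. Keys are hypothetical labels.
    """
    if k <= 1:
        raise ValueError("k must exceed 1 so that k/(k-1) is defined")
    return {
        # d / (alpha^2 m): the term with no dependence on epsilon
        "statistical": d / (alpha ** 2 * m),
        # d / (alpha m^{1/2} epsilon)
        "private_sqrt_m": d / (alpha * math.sqrt(m) * eps),
        # d / (alpha^{k/(k-1)} m epsilon): depends on the moment order k
        "private_moment": d / (alpha ** (k / (k - 1)) * m * eps),
        # d / epsilon: does not shrink as m grows
        "private_floor": d / eps,
    }


terms = person_level_terms(d=100, m=4, alpha=0.5, eps=1.0, k=2)
dominant = max(terms, key=terms.get)
```

Increasing `m` shrinks the first three terms but leaves `private_floor` unchanged, which matches the form of the bound: more samples per person cannot push the required number of people below roughly $d/\varepsilon$.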

Not All Learnable Distribution Classes are Privately Learnable

Feb 05, 2024

Abstract: We give an example of a class of distributions that is learnable in total variation distance with a finite number of samples, but not learnable under $(\varepsilon, \delta)$-differential privacy. This refutes a conjecture of Ashtiani.

A Bias-Variance-Privacy Trilemma for Statistical Estimation

Jan 30, 2023

Abstract: The canonical algorithm for differentially private mean estimation is to first clip the samples to a bounded range and then add noise to their empirical mean. Clipping controls the sensitivity and, hence, the variance of the noise that we add for privacy. But clipping also introduces statistical bias. We prove that this tradeoff is inherent: no algorithm can simultaneously have low bias, low variance, and low privacy loss for arbitrary distributions. On the positive side, we show that unbiased mean estimation is possible under approximate differential privacy if we assume that the distribution is symmetric. Furthermore, we show that, even if we assume that the data is sampled from a Gaussian, unbiased mean estimation is impossible under pure or concentrated differential privacy.
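The clip-then-add-noise recipe described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the paper's algorithm: the data is one-dimensional, neighboring datasets differ by replacing one sample, the noise is Laplace (giving pure $\varepsilon$-DP), and the names `clip`, `noisy_clipped_mean`, and `radius` are chosen here for illustration.

```python
import random


def clip(x, radius):
    """Project x onto the interval [-radius, radius]."""
    return max(-radius, min(radius, x))


def noisy_clipped_mean(samples, radius, epsilon, rng=None):
    """Laplace-noised mean of clipped samples (illustrative sketch).

    Replacing one sample moves the clipped mean by at most 2*radius/n,
    so Laplace noise with scale 2*radius/(n*epsilon) gives epsilon-DP.
    """
    rng = rng or random.Random()
    n = len(samples)
    if n == 0:
        raise ValueError("need at least one sample")
    clipped_mean = sum(clip(x, radius) for x in samples) / n
    scale = 2.0 * radius / (n * epsilon)
    # The difference of two independent exponentials with mean `scale`
    # is a Laplace random variable with that scale.
    noise = rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)
    return clipped_mean + noise


# With a very large epsilon the noise is negligible, which isolates the
# bias from clipping: the true mean is 10/3, the clipped mean is 1/3.
estimate = noisy_clipped_mean([0.0, 0.0, 10.0], radius=1.0,
                              epsilon=1e9, rng=random.Random(0))
```

A smaller `radius` lowers the noise scale but clips more mass and so increases bias, while a larger `radius` does the opposite. This is the tension the abstract shows cannot be avoided for arbitrary distributions.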

New Lower Bounds for Private Estimation and a Generalized Fingerprinting Lemma

May 18, 2022

Abstract: We prove new lower bounds for statistical estimation tasks under the constraint of $(\varepsilon, \delta)$-differential privacy. First, we provide tight lower bounds for private covariance estimation of Gaussian distributions. We show that estimating the covariance matrix in Frobenius norm requires $\Omega(d^2)$ samples, and in spectral norm requires $\Omega(d^{3/2})$ samples, both matching upper bounds up to logarithmic factors. We prove these bounds via our main technical contribution, a broad generalization of the fingerprinting method to exponential families. Additionally, using the private Assouad method of Acharya, Sun, and Zhang, we show a tight $\Omega(d/(\alpha^2 \varepsilon))$ lower bound for estimating the mean of a distribution with bounded covariance to $\alpha$-error in $\ell_2$-distance. Prior known lower bounds for all these problems were either polynomially weaker or held under the stricter condition of $(\varepsilon,0)$-differential privacy.

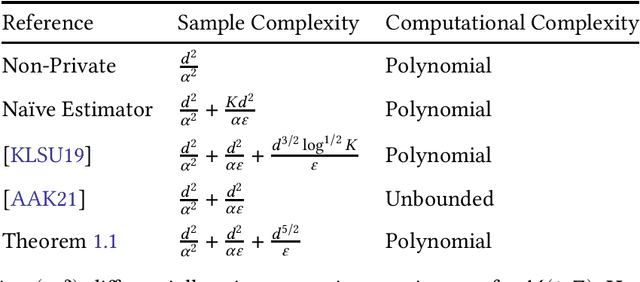

A Private and Computationally-Efficient Estimator for Unbounded Gaussians

Nov 08, 2021

Abstract: We give the first polynomial-time, polynomial-sample, differentially private estimator for the mean and covariance of an arbitrary Gaussian distribution $\mathcal{N}(\mu,\Sigma)$ in $\mathbb{R}^d$. All previous estimators are either nonconstructive, with unbounded running time, or require the user to specify a priori bounds on the parameters $\mu$ and $\Sigma$. The primary new technical tool in our algorithm is a new differentially private preconditioner that takes samples from an arbitrary Gaussian $\mathcal{N}(0,\Sigma)$ and returns a matrix $A$ such that $A \Sigma A^T$ has constant condition number.
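As a non-private illustration of what the preconditioning guarantee means (this is a standard linear-algebra observation, not the paper's construction; $\widehat{\Sigma}$ and $c$ are symbols introduced here), suppose some positive definite $\widehat{\Sigma}$ approximates $\Sigma$ spectrally within a factor $c \ge 1$ and set $A = \widehat{\Sigma}^{-1/2}$. Then \[\tfrac{1}{c}\,\Sigma \preceq \widehat{\Sigma} \preceq c\,\Sigma \quad\Longrightarrow\quad \tfrac{1}{c}\, I \preceq A \Sigma A^T \preceq c\, I \quad\Longrightarrow\quad \kappa\left(A \Sigma A^T\right) \le c^2,\] since conjugating $\Sigma \preceq c\,\widehat{\Sigma}$ and $\Sigma \succeq \tfrac{1}{c}\,\widehat{\Sigma}$ by $A$ turns $\widehat{\Sigma}$ into $A \widehat{\Sigma} A^T = I$. In the exact case $\widehat{\Sigma} = \Sigma$, this gives $A \Sigma A^T = I$ and condition number $1$.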
