Apu Shah

Beyond Human-Only: Evaluating Human-Machine Collaboration for Collecting High-Quality Translation Data

Oct 14, 2024

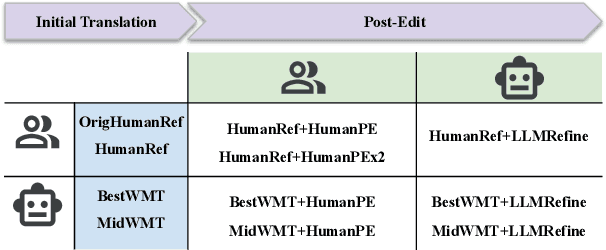

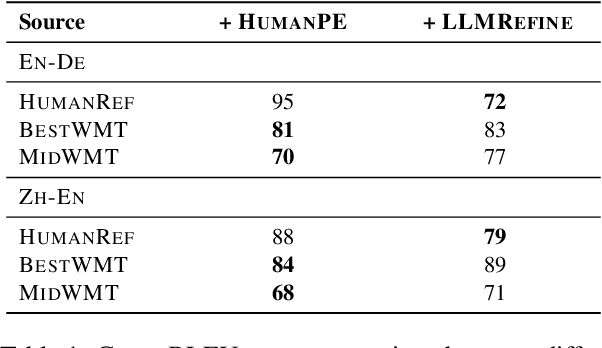

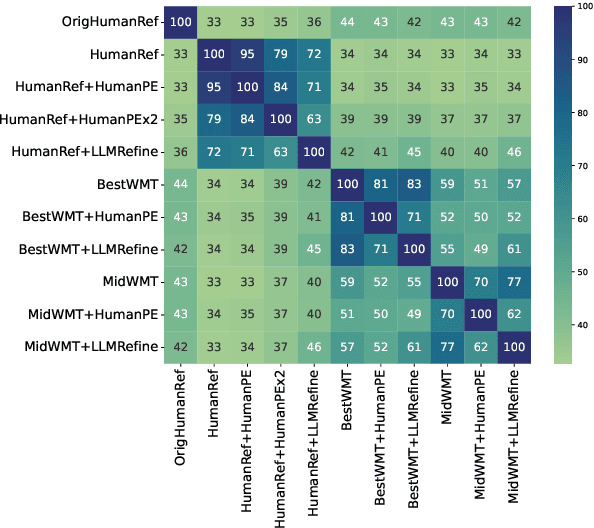

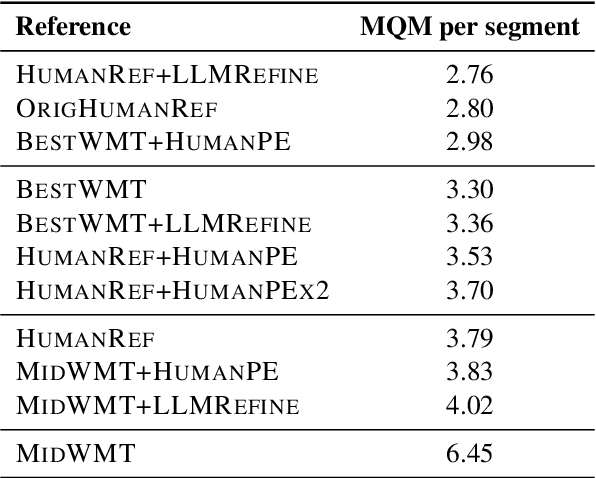

Abstract: Collecting high-quality translations is crucial for the development and evaluation of machine translation systems. However, traditional human-only approaches are costly and slow. This study presents a comprehensive investigation of 11 approaches for acquiring translation data, including human-only, machine-only, and hybrid approaches. Our findings demonstrate that human-machine collaboration can match or even exceed the quality of human-only translations while being more cost-efficient. Error analysis reveals the complementary strengths of human and machine contributions, highlighting the effectiveness of collaborative methods. Cost analysis further demonstrates the economic benefits of human-machine collaboration, with some approaches achieving top-tier quality at around 60% of the cost of traditional methods. We release a publicly available dataset containing nearly 18,000 segments of varying translation quality with corresponding human ratings to facilitate future research.

They, Them, Theirs: Rewriting with Gender-Neutral English

Feb 12, 2021

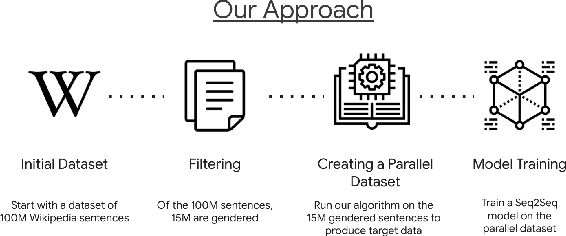

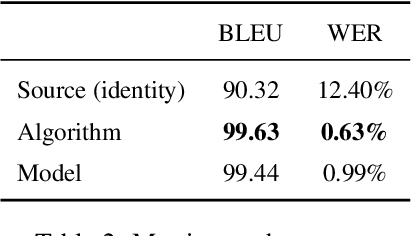

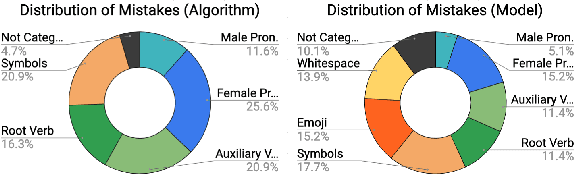

Abstract: Responsible development of technology involves applications being inclusive of the diverse set of users they hope to support. An important part of this is understanding the many ways to refer to a person and being able to fluently change between the different forms as needed. We perform a case study on the singular they, a common way to promote gender inclusion in English. We define a rewriting task, create an evaluation benchmark, and show how a model can be trained to produce gender-neutral English with less than 1% word error rate using no human-labeled data. We discuss the practical applications and ethical considerations of the task, providing direction for future work on inclusive natural language systems.
