Anu Jagannath

Bluetooth and WiFi Dataset for Real World RF Fingerprinting of Commercial Devices

Mar 15, 2023

Abstract: RF fingerprinting is emerging as a physical layer security scheme to identify illegitimate and/or unauthorized emitters sharing the RF spectrum. However, due to the lack of publicly accessible real-world datasets, most research focuses on generating synthetic waveforms with software-defined radios (SDRs), which are not suited for practical deployment settings. On the other hand, the limited datasets that are available focus only on chipsets that generate a single kind of waveform. Commercial off-the-shelf (COTS) combo chipsets that support two wireless standards (for example, WiFi and Bluetooth) over a shared dual-band antenna, such as those found in laptops, adapters, wireless chargers, and Raspberry Pis, among others, are becoming ubiquitous in the IoT realm. Hence, to keep up with the modern IoT environment, there is a pressing need for real-world open datasets capturing emissions from these combo chipsets transmitting heterogeneous communication protocols. To this end, we capture the first known emissions from COTS IoT chipsets transmitting WiFi and Bluetooth under two different time frames. The different time frames are essential to rigorously evaluate the generalization capability of the models. To ensure widespread use, each capture within the comprehensive 72 GB dataset is long enough (40 MSamples) to support diverse input tensor lengths and formats. Finally, the dataset also comprises emissions at varying signal powers to account for the feeble to strong emissions encountered in a real-world setting.

Generalization of Deep Reinforcement Learning for Jammer-Resilient Frequency and Power Allocation

Feb 04, 2023

Abstract: We tackle the problem of joint frequency and power allocation while emphasizing the generalization capability of a deep reinforcement learning model. Most existing methods solve reinforcement learning-based wireless problems for a specific, pre-determined wireless network scenario. The performance of a trained agent tends to be very specific to the network and deteriorates when used in a different network operating scenario (e.g., different in size, neighborhood, and mobility, among others). We demonstrate our approach to enhancing training so that the deployed model generalizes better during inference in a distributed multi-agent setting in a hostile jamming environment. With these enhancements, we show the improved training and inference performance of the proposed methods when tested on previously unseen simulated wireless networks of different sizes and architectures. More importantly, to prove practical impact, the end-to-end solution was implemented on an embedded software-defined radio and validated using over-the-air evaluation.

Embedding-Assisted Attentional Deep Learning for Real-World RF Fingerprinting of Bluetooth

Sep 22, 2022

Abstract: A scalable and computationally efficient framework is designed to fingerprint real-world Bluetooth devices. We propose an embedding-assisted attentional framework (Mbed-ATN) suitable for fingerprinting actual Bluetooth devices. Its generalization capability is analyzed in different settings, and the effect of sample length and anti-aliasing decimation is demonstrated. The embedding module serves as a dimensionality reduction unit that maps the high-dimensional 3D input tensor to a 1D feature vector for further processing by the ATN module. Furthermore, unlike prior research in this field, we closely evaluate the complexity of the model and test its fingerprinting capability with a real-world Bluetooth dataset collected under a different time frame and experimental setting from the one it was trained on. Our study reveals 7.3x and 65.2x lower memory usage with the Mbed-ATN architecture in contrast to Oracle at input sample lengths of M=10 kS and M=100 kS, respectively. Further, the proposed Mbed-ATN showcases 16.9x fewer FLOPs and 7.5x fewer trainable parameters when compared to Oracle. Finally, we show that when subject to anti-aliasing decimation and at greater input sample lengths of 1 MS, the proposed Mbed-ATN framework results in a 5.32x higher TPR, 37.9% fewer false alarms, and 6.74x higher accuracy under the challenging real-world setting.
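As a rough illustration of the anti-aliasing decimation mentioned in the abstract above, the sketch below low-pass filters complex I/Q samples and then keeps every `factor`-th output. It is a generic textbook construction, not the authors' pipeline: the function names, the Hamming-windowed sinc filter, and the default of 63 taps are all assumptions chosen for the example.

```python
import math

def lowpass_taps(num_taps, cutoff):
    """Hamming-windowed sinc low-pass FIR taps.

    cutoff is a fraction of the sample rate (0 < cutoff < 0.5).
    """
    mid = (num_taps - 1) / 2
    taps = []
    for n in range(num_taps):
        x = n - mid
        # Ideal low-pass impulse response (sinc), with the x == 0 limit handled.
        ideal = 2 * cutoff if x == 0 else math.sin(2 * math.pi * cutoff * x) / (math.pi * x)
        window = 0.54 - 0.46 * math.cos(2 * math.pi * n / (num_taps - 1))
        taps.append(ideal * window)
    total = sum(taps)
    return [t / total for t in taps]  # normalize to unity gain at DC

def decimate(samples, factor, num_taps=63):
    """Low-pass filter complex samples, then keep every factor-th output.

    The cutoff is placed at the new Nyquist frequency (0.5 / factor) so that
    content that would alias after downsampling is attenuated first.
    """
    taps = lowpass_taps(num_taps, 0.5 / factor)
    out = []
    # Only compute the outputs that survive downsampling.
    for start in range(0, len(samples) - num_taps + 1, factor):
        acc = 0j
        for k, tap in enumerate(taps):
            acc += tap * samples[start + k]
        out.append(acc)
    return out
```

Calling `decimate(iq, 4)` on a list of complex samples returns roughly a quarter as many samples. Skipping the filter and simply slicing `iq[::4]` would fold out-of-band energy back into the retained band.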

RF Fingerprinting Needs Attention: Multi-task Approach for Real-World WiFi and Bluetooth

Sep 07, 2022

Abstract: A novel cross-domain attentional multi-task architecture - xDom - for robust real-world wireless radio frequency (RF) fingerprinting is presented in this work. To the best of our knowledge, this is the first time such a comprehensive attention mechanism has been applied to the RF fingerprinting problem. In this paper, we resort to real-world IoT WiFi and Bluetooth (BT) emissions (instead of synthetic waveform generation) captured in an indoor experimental testbed with rich multipath and unavoidable interference. We show the impact of the time frame of capture by including waveforms collected over a span of months, and we demonstrate both same-time-frame and multiple-time-frame fingerprinting evaluations. The effectiveness of resorting to a multi-task architecture is also experimentally proven by conducting single-task and multi-task model analyses. Finally, we demonstrate the significant gain in performance achieved with the proposed xDom architecture by benchmarking against a well-known state-of-the-art model for fingerprinting. Specifically, we report performance improvements of up to 59.3% and 4.91x under single-task WiFi and BT fingerprinting, respectively, and up to a 50.5% increase in fingerprinting accuracy under the multi-task setting.

Distributed Transmission Control for Wireless Networks using Multi-Agent Reinforcement Learning

May 13, 2022

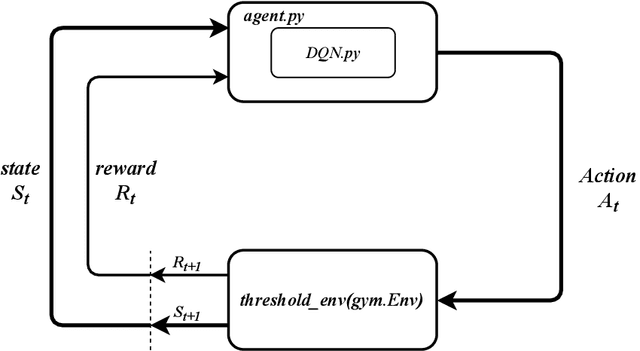

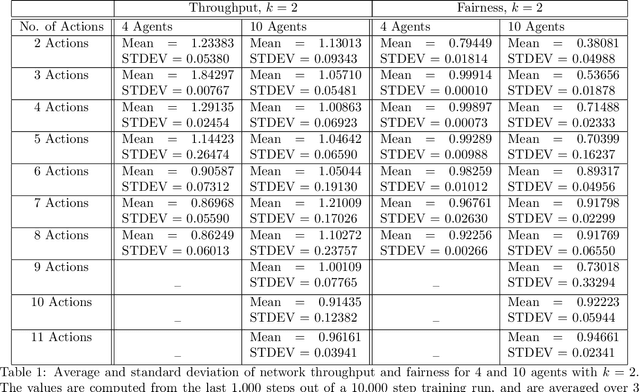

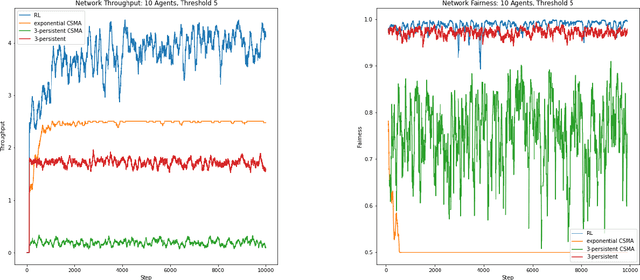

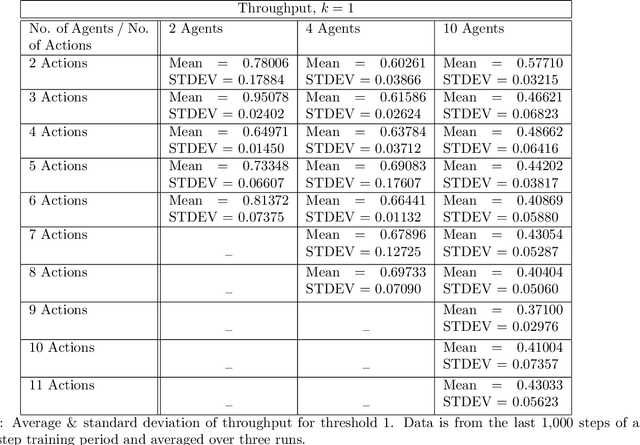

Abstract: We examine the problem of transmission control, i.e., when to transmit, in distributed wireless communications networks through the lens of multi-agent reinforcement learning. Most other works using reinforcement learning to control or schedule transmissions use some centralized control mechanism, whereas our approach is fully distributed. Each transmitter node is an independent reinforcement learning agent and does not have direct knowledge of the actions taken by other agents. We consider the case where only a subset of agents can successfully transmit at a time, so each agent must learn to act cooperatively with other agents. An agent may decide to transmit a certain number of steps into the future, but this decision is not communicated to the other agents, so it is the task of the individual agents to attempt to transmit at appropriate times. We achieve this collaborative behavior by studying the effects of different action spaces. We are agnostic to the physical layer, which makes our approach applicable to many types of networks. We submit that approaches similar to ours may be useful in other domains that use multi-agent reinforcement learning with independent agents.

Digital Twin Virtualization with Machine Learning for IoT and Beyond 5G Networks: Research Directions for Security and Optimal Control

Apr 10, 2022

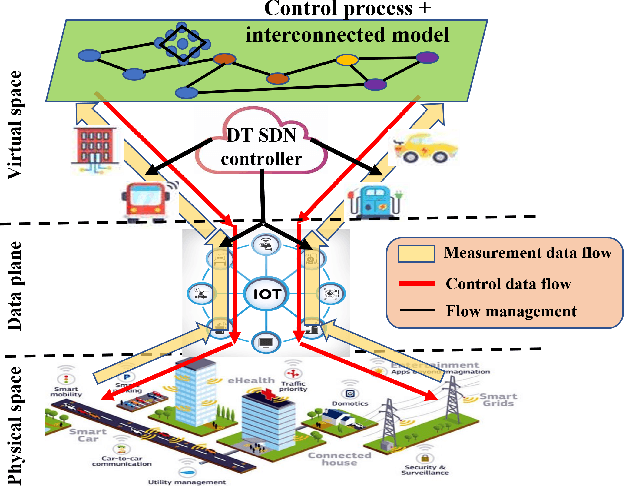

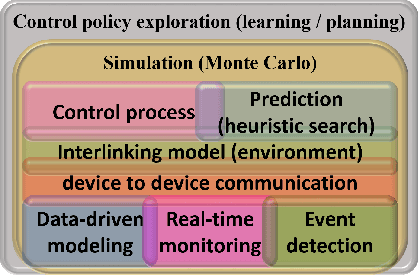

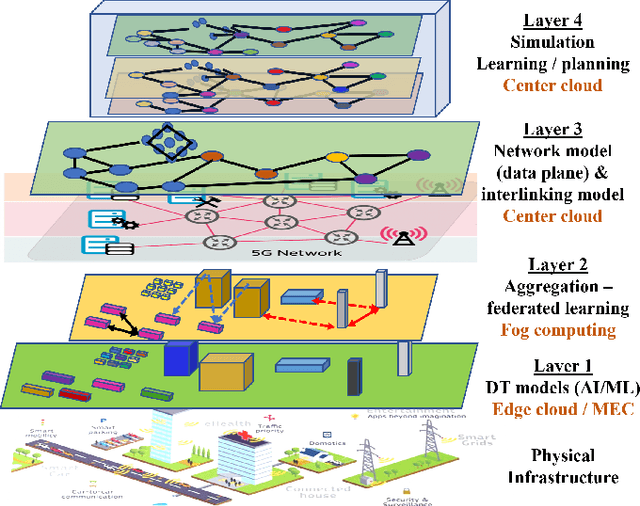

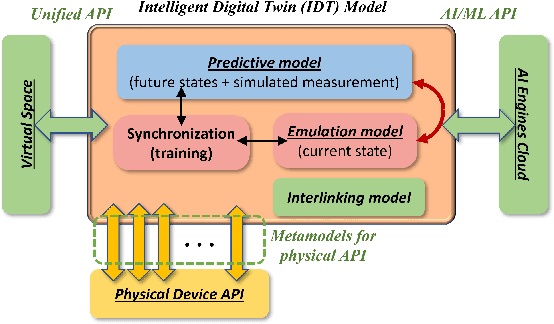

Abstract: Digital twin (DT) technologies have emerged as a solution for real-time data-driven modeling of cyber physical systems (CPS) using the vast amount of data made available by Internet of Things (IoT) networks. In this position paper, we elucidate unique characteristics and capabilities of a DT framework that enables realization of such promises as online learning of a physical environment, real-time monitoring of assets, Monte Carlo heuristic search for predictive prevention, and on-policy and off-policy reinforcement learning in real time. We establish a conceptual layered architecture for a DT framework with decentralized implementation on cloud computing, enabled by artificial intelligence (AI) services for modeling, event detection, and decision-making processes. The DT framework separates the control functions, deployed as a system of logically centralized processes, from the physical devices under control, much like software-defined networking (SDN) in fifth generation (5G) wireless networks. We discuss the moment of the DT framework in facilitating implementation of network-based control processes and its implications for critical infrastructure. To clarify the significance of DT in lowering the risk of development and deployment of innovative technologies on existing systems, we discuss the application of implementing zero trust architecture (ZTA) as a necessary security framework in future data-driven communication networks.

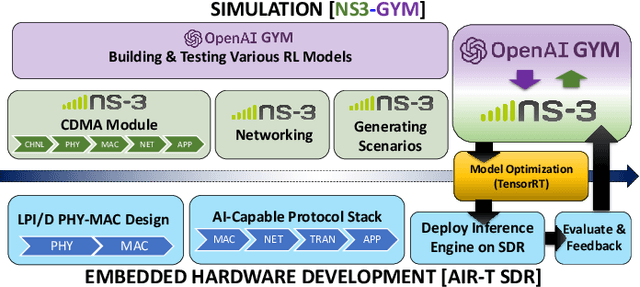

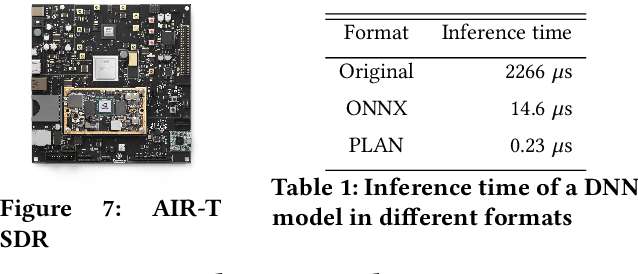

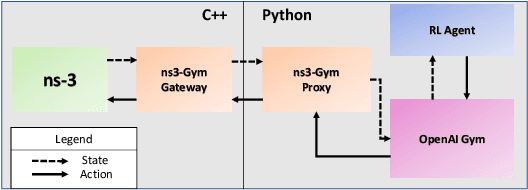

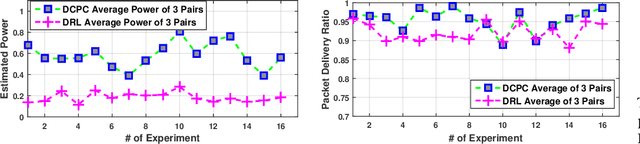

MR-iNet Gym: Framework for Edge Deployment of Deep Reinforcement Learning on Embedded Software Defined Radio

Apr 09, 2022

Abstract: Dynamic resource allocation plays a critical role in the next generation of intelligent wireless communication systems. Machine learning has been leveraged as a powerful tool to make strides in this domain. In most cases, the progress has been limited to simulations due to the challenging nature of hardware deployment of these solutions. In this paper, for the first time, we design and deploy deep reinforcement learning (DRL)-based power control agents on GPU-embedded software-defined radios (SDRs). To this end, we propose an end-to-end framework (MR-iNet Gym) where the simulation suite and the embedded SDR development work cohesively to overcome real-world implementation hurdles. To prove feasibility, we consider the problem of distributed power control for direct-sequence code-division multiple access (DS-CDMA)-based LPI/D transceivers. We first build a DS-CDMA ns3 module that interacts with the OpenAI Gym environment. Next, we train the power control DRL agents in this ns3-gym simulation environment in a scenario that replicates our hardware testbed. Then, for edge (embedded on-device) deployment, the trained models are optimized for real-time operation without loss of performance. Hardware-based evaluation verifies the efficiency of DRL agents over the traditional distributed constrained power control (DCPC) algorithm. More significantly, as the primary goal, this is the first work that has established the feasibility of deploying DRL to provide optimized distributed resource allocation for the next generation of GPU-embedded radios.
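For readers unfamiliar with the DCPC baseline named in the abstract above, its textbook form has each transmitter scale its power by the ratio of target SINR to measured SINR, capped at a maximum power. The sketch below illustrates that iteration under simplifying assumptions (a fixed link-gain matrix, a common target SINR, no DS-CDMA processing gain); the function name and parameters are hypothetical and this is not the implementation evaluated in the paper.

```python
def dcpc_step(powers, gains, noise, target_sinr, p_max):
    """One synchronous DCPC iteration for all links.

    powers[i]      current transmit power of link i
    gains[i][j]    gain from transmitter j to receiver i (assumed known/fixed)
    noise          receiver noise power (assumed equal for all links)
    target_sinr    common SINR target (linear scale, not dB)
    p_max          per-transmitter power cap
    """
    updated = []
    for i in range(len(powers)):
        # Interference plus noise seen at receiver i.
        interference = noise + sum(
            gains[i][j] * powers[j] for j in range(len(powers)) if j != i
        )
        # Equivalent to (target_sinr / measured_sinr) * powers[i], written so
        # that it stays well defined even when powers[i] is zero.
        wanted = target_sinr * interference / gains[i][i]
        updated.append(min(p_max, wanted))
    return updated

def run_dcpc(powers, gains, noise, target_sinr, p_max, iterations=50):
    """Repeat the DCPC update a fixed number of times."""
    for _ in range(iterations):
        powers = dcpc_step(powers, gains, noise, target_sinr, p_max)
    return powers
```

When the target is feasible, the iteration settles at the smallest powers that meet it; when it is not, the powers saturate at `p_max`. That saturation is the "constrained" part of the name.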

Deep neural network goes lighter: A case study of deep compression techniques on automatic RF modulation recognition for Beyond 5G networks

Apr 09, 2022

Abstract: Automatic RF modulation recognition is a primary signal intelligence (SIGINT) technique that serves as a physical layer authentication enabler and automated signal processing scheme for beyond 5G and military networks. Most existing works rely on adopting deep neural network architectures to enable RF modulation recognition. The application of deep compression for the wireless domain, especially automatic RF modulation classification, is still in its infancy. Lightweight neural networks are key to sustaining edge computation capability on resource-constrained platforms. In this letter, we provide an in-depth view of the state-of-the-art deep compression and acceleration techniques with an emphasis on edge deployment for beyond 5G networks. Finally, we present an extensive analysis of the representative acceleration approaches as a case study on automatic radar modulation classification and evaluate them in terms of the computational metrics.

Multi-task Learning Approach for Modulation and Wireless Signal Classification for 5G and Beyond: Edge Deployment via Model Compression

Feb 26, 2022

Abstract: Future communication networks must address the scarce spectrum to accommodate extensive growth of heterogeneous wireless devices. Wireless signal recognition is becoming increasingly significant for spectrum monitoring, spectrum management, and secure communications, among others. Consequently, comprehensive spectrum awareness on the edge has the potential to serve as a key enabler for the emerging beyond 5G networks. State-of-the-art studies in this domain have (i) focused on only a single task - modulation or signal (protocol) classification - which in many cases is insufficient information for a system to act on, (ii) considered either radar or communication waveforms (homogeneous waveform category), and (iii) not addressed edge deployment during the neural network design phase. In this work, for the first time in the wireless communication domain, we exploit the potential of a deep neural network-based multi-task learning (MTL) framework to simultaneously learn modulation and signal classification tasks while considering heterogeneous wireless signals such as radar and communication waveforms in the electromagnetic spectrum. The proposed MTL architecture benefits from the mutual relation between the two tasks in improving the classification accuracy as well as the learning efficiency with a lightweight neural network model. We additionally include experimental evaluations of the model with over-the-air collected samples and demonstrate first-hand insight on model compression along with a deep learning pipeline for deployment on resource-constrained edge devices. We demonstrate significant computational, memory, and accuracy improvement of the proposed model over two reference architectures. In addition to modeling a lightweight MTL model suitable for resource-constrained embedded radio platforms, we provide a comprehensive heterogeneous wireless signals dataset for public use.

A Comprehensive Survey on Radio Frequency (RF) Fingerprinting: Traditional Approaches, Deep Learning, and Open Challenges

Jan 03, 2022

Abstract: Fifth generation (5G) networks and beyond envision a massive Internet of Things (IoT) rollout to support disruptive applications such as extended reality (XR), augmented/virtual reality (AR/VR), industrial automation, autonomous driving, and smart everything, which brings together massive and diverse IoT devices occupying the radio frequency (RF) spectrum. Along with spectrum crunch and throughput challenges, such a massive scale of wireless devices exposes unprecedented threat surfaces. RF fingerprinting is heralded as a candidate technology that can be combined with cryptographic and zero-trust security measures to ensure data privacy, confidentiality, and integrity in wireless networks. Motivated by the relevance of this subject in future communication networks, in this work we present a comprehensive survey of RF fingerprinting approaches ranging from a traditional view to the most recent deep learning (DL) based algorithms. Existing surveys have mostly focused on a constrained presentation of the wireless fingerprinting approaches, leaving many aspects untold. In this work, we mitigate this by addressing every aspect - background on signal intelligence (SIGINT), applications, relevant DL algorithms, systematic literature review of RF fingerprinting techniques spanning the past two decades, discussion on datasets, and potential research avenues - necessary to elucidate this topic to the reader in an encyclopedic manner.
