Kian Hamedani

Cindy

MR-iNet Gym: Framework for Edge Deployment of Deep Reinforcement Learning on Embedded Software Defined Radio

Apr 09, 2022

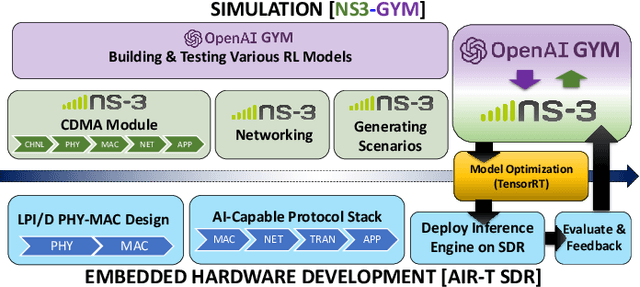

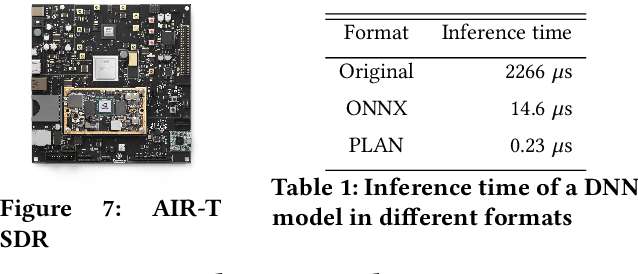

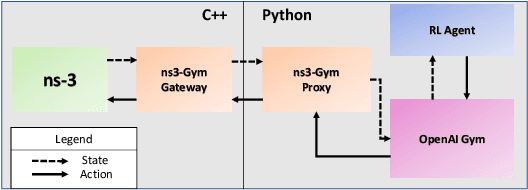

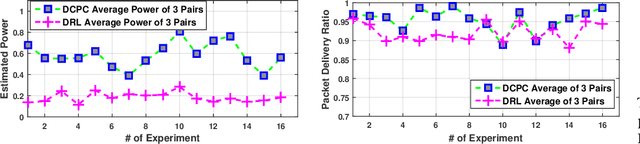

Abstract: Dynamic resource allocation plays a critical role in the next generation of intelligent wireless communication systems. Machine learning has been leveraged as a powerful tool to make strides in this domain. In most cases, progress has been limited to simulations because deploying these solutions on hardware is challenging. In this paper, for the first time, we design and deploy deep reinforcement learning (DRL)-based power control agents on GPU-embedded software defined radios (SDRs). To this end, we propose an end-to-end framework (MR-iNet Gym) in which the simulation suite and the embedded SDR development work cohesively to overcome real-world implementation hurdles. To prove feasibility, we consider the problem of distributed power control for direct-sequence code-division multiple access (DS-CDMA)-based LPI/D transceivers. We first build a DS-CDMA ns3 module that interacts with the OpenAI Gym environment. Next, we train the power control DRL agents in this ns3-gym simulation environment in a scenario that replicates our hardware testbed. Then, for edge (embedded on-device) deployment, the trained models are optimized for real-time operation without loss of performance. Hardware-based evaluation verifies the efficiency of the DRL agents over the traditional distributed constrained power control (DCPC) algorithm. More significantly, as the primary goal, this is the first work to establish the feasibility of deploying DRL to provide optimized distributed resource allocation for the next generation of GPU-embedded radios.
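For readers unfamiliar with the DCPC baseline named in the abstract, the following is a minimal sketch of the commonly cited DCPC update, in which each transmitter scales its power by the ratio of target SINR to measured SINR and caps the result at a maximum power. The abstract does not give the update rule or any system parameters, so the function names, the two-link gain matrix, the noise level, and the target SINR below are all illustrative assumptions, not values from the paper.

```python
def measure_sinr(powers, gains, noise):
    """SINR at each receiver; gains[i][j] is the assumed gain from transmitter j to receiver i."""
    n = len(powers)
    sinrs = []
    for i in range(n):
        interference = sum(gains[i][j] * powers[j] for j in range(n) if j != i)
        sinrs.append(gains[i][i] * powers[i] / (interference + noise))
    return sinrs


def dcpc_step(powers, sinrs, target_sinr, p_max):
    """One DCPC iteration: scale each power toward the target SINR, capped at p_max."""
    return [min(p_max, p * target_sinr / s) for p, s in zip(powers, sinrs)]


def run_dcpc(gains, noise, target_sinr, p_max, iterations=50):
    """Iterate the update from maximum power (hypothetical starting point)."""
    powers = [p_max] * len(gains)
    for _ in range(iterations):
        sinrs = measure_sinr(powers, gains, noise)
        powers = dcpc_step(powers, sinrs, target_sinr, p_max)
    return powers


# Hypothetical two-link scenario (not from the paper).
example_gains = [[1.0, 0.1], [0.1, 1.0]]
example_powers = run_dcpc(example_gains, noise=0.01, target_sinr=2.0, p_max=1.0)
example_sinrs = measure_sinr(example_powers, example_gains, noise=0.01)
```

Each node needs only its own measured SINR to update its power, which is what makes the scheme distributed. In this assumed scenario the target is feasible, so the powers settle well below the cap.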

Adversarial Classification of the Attacks on Smart Grids Using Game Theory and Deep Learning

Jun 06, 2021

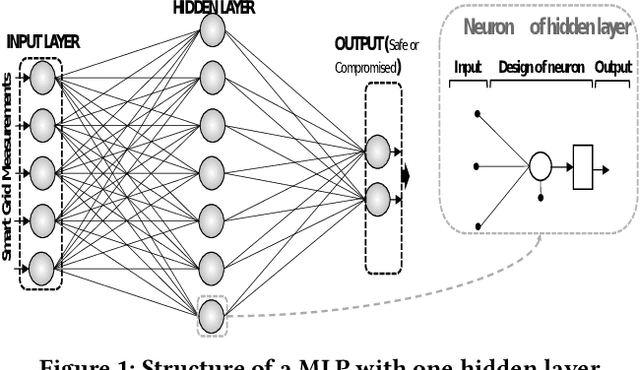

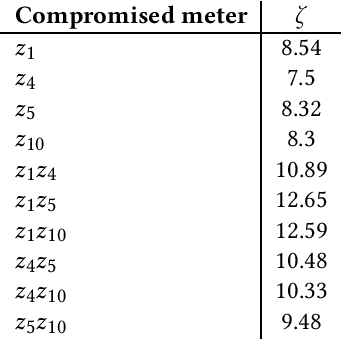

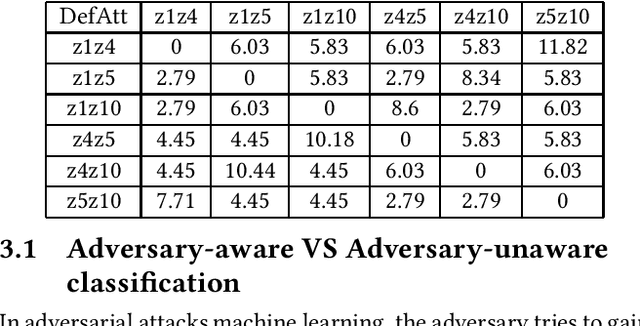

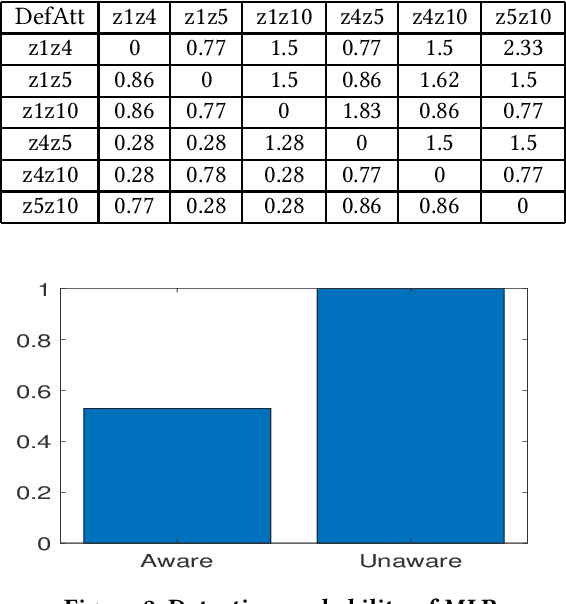

Abstract: Smart grids are vulnerable to cyber-attacks. This paper proposes a game-theoretic approach to evaluate the variations caused by an attacker on the power measurements. Adversaries can gain financial benefits by manipulating the meters of smart grids, while a defender tries to maintain the accuracy of the meters. A zero-sum game is used to model the interactions between the attacker and the defender. Two different defenders are studied: multi-layer perceptrons (MLPs) and traditional state estimators. The effectiveness of each defender is evaluated in different scenarios, and the utility of the defender is also investigated in adversary-aware and adversary-unaware situations. Our simulations suggest that the utility gained by the adversary drops significantly when the MLP is used as the defender. It will be shown that the utility of the defender varies across scenarios, depending on which defender is used. Finally, we show that this zero-sum game has no pure-strategy solution, and the mixed strategy of the game is calculated.
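As a small illustration of what calculating a mixed strategy means when a zero-sum game has no pure-strategy solution, the sketch below solves a 2x2 zero-sum game in closed form. The abstract does not give the paper's payoff matrix or the size of its strategy sets, so the 2x2 restriction, the function name, and the example payoffs are assumptions made purely for illustration.

```python
def solve_2x2_zero_sum(payoff):
    """Solve a 2x2 zero-sum game; payoff[r][c] is the row player's gain.

    Returns (p, q, value): p is the probability the row player picks row 0,
    q is the probability the column player picks column 0.
    """
    (a, b), (c, d) = payoff
    maximin = max(min(a, b), min(c, d))   # best guaranteed payoff for the row player
    minimax = min(max(a, c), max(b, d))   # best guaranteed cap for the column player
    if maximin == minimax:
        # A saddle point exists, so pure strategies suffice.
        p = 1.0 if min(a, b) >= min(c, d) else 0.0
        q = 1.0 if max(a, c) <= max(b, d) else 0.0
        return p, q, float(maximin)
    # No saddle point: each player mixes so the opponent is indifferent.
    denom = a - b - c + d
    p = (d - c) / denom
    q = (d - b) / denom
    value = (a * d - b * c) / denom
    return p, q, value


# Hypothetical attacker (row) versus defender (column) payoffs, not from the paper.
p, q, value = solve_2x2_zero_sum([[2, -1], [-1, 1]])
```

With these assumed payoffs neither player has a pure best choice, and the row player's expected payoff is the same against either column once the mixing probability is applied, which is the indifference condition that defines the mixed solution.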
