Antonis Karakottas

Geo-NVS-w: Geometry-Aware Novel View Synthesis In-the-Wild with an SDF Renderer

Jan 13, 2026

Abstract:We introduce Geo-NVS-w, a geometry-aware framework for high-fidelity novel view synthesis from unstructured, in-the-wild image collections. While existing in-the-wild methods already excel at novel view synthesis, they often lack geometric grounding on complex surfaces, sometimes producing inconsistent results. Geo-NVS-w addresses this limitation by leveraging an underlying geometric representation based on a Signed Distance Function (SDF) to guide the rendering process. This is complemented by a novel Geometry-Preservation Loss, which ensures that fine structural details are preserved. Our framework achieves competitive rendering performance while demonstrating a 4-5x reduction in energy consumption compared to similar methods. We demonstrate that Geo-NVS-w is a robust method for in-the-wild NVS, yielding photorealistic results with sharp, geometrically coherent details.

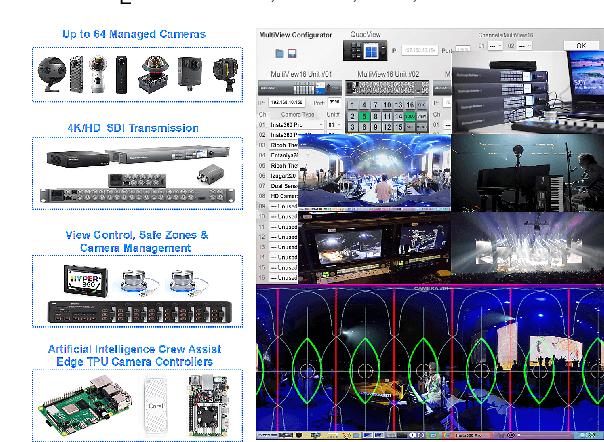

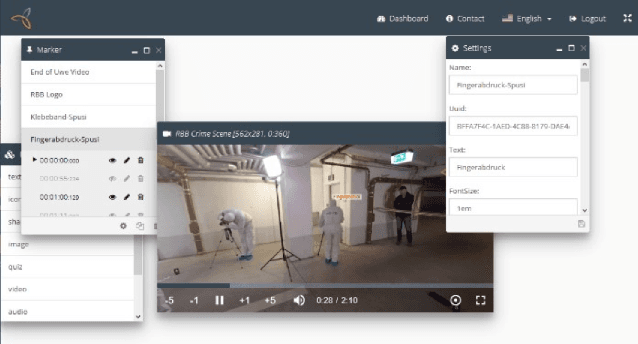

Hyper360 -- a Next Generation Toolset for Immersive Media

Aug 01, 2021

Abstract:Spherical 360° video is a novel media format that is rapidly being adopted in the production and consumption of immersive media. Due to its novelty, there is a lack of tools for producing highly engaging interactive 360° video for consumption on a multitude of platforms. In this work, we describe the work done so far in the Hyper360 project on tools for mixed 360° video and 3D content. Furthermore, we present the first pilots produced with the Hyper360 tools, along with the results of the audience assessment of those pilots.

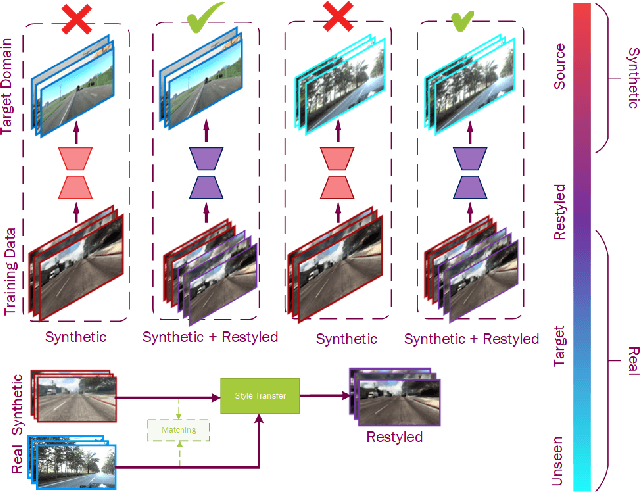

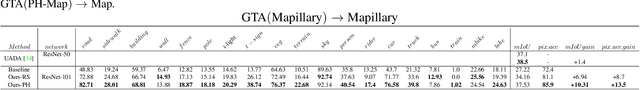

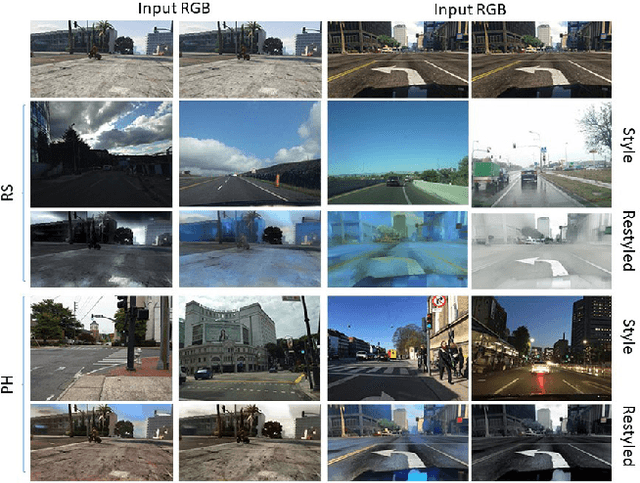

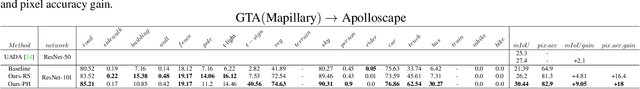

Restyling Data: Application to Unsupervised Domain Adaptation

Sep 24, 2019

Abstract:Machine learning is driven by data, yet while their availability is constantly increasing, training data require laborious, time-consuming and error-prone labelling or ground truth acquisition, which in some cases is very difficult or even impossible. Recent works have resorted to synthetic data generation, but the inferior performance of models trained on synthetic data when applied to the real world introduced the challenge of unsupervised domain adaptation. In this work we investigate an unsupervised domain adaptation technique that approaches the problem from another perspective, in order to avoid the complexity of adversarial training and cycle consistencies. We exploit the recent advances in photorealistic style transfer and take a fully data-driven approach. While this concept is already implicitly formulated within the intricate objectives of domain adaptation GANs, we take an explicit approach and apply it directly as data pre-processing. The resulting technique is scalable, efficient and easy to implement, offers performance competitive with the complex state-of-the-art alternatives, and can open up new pathways for domain adaptation.

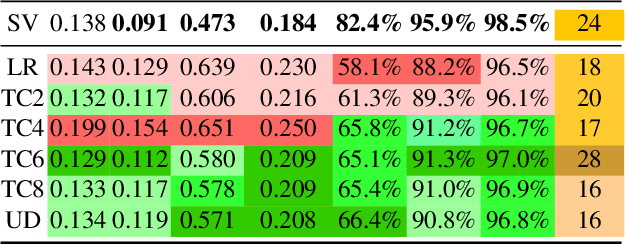

Spherical View Synthesis for Self-Supervised 360 Depth Estimation

Sep 17, 2019

Abstract:Learning-based approaches for depth perception are limited by the availability of clean training data. This has led to the utilization of view synthesis as an indirect objective for learning depth estimation using efficient data acquisition procedures. Nonetheless, most research focuses on pinhole-based monocular vision, with few works presenting results for omnidirectional input. In this work, we explore spherical view synthesis for learning monocular 360 depth in a self-supervised manner and demonstrate its feasibility. Under a purely geometrically derived formulation we present results for horizontal and vertical baselines, as well as for the trinocular case. Further, we show how to better exploit the expressiveness of traditional CNNs when applied to the equirectangular domain in an efficient manner. Finally, given the availability of ground truth depth data, our work is uniquely positioned to compare view synthesis against direct supervision in a consistent and fair manner. The results indicate that alternative research directions might be better suited to enable higher quality depth perception. Our data, models and code are publicly available at https://vcl3d.github.io/SphericalViewSynthesis/.

$360^\circ$ Surface Regression with a Hyper-Sphere Loss

Sep 16, 2019

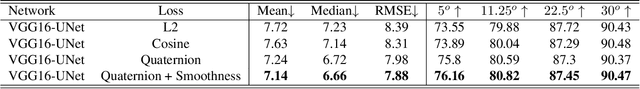

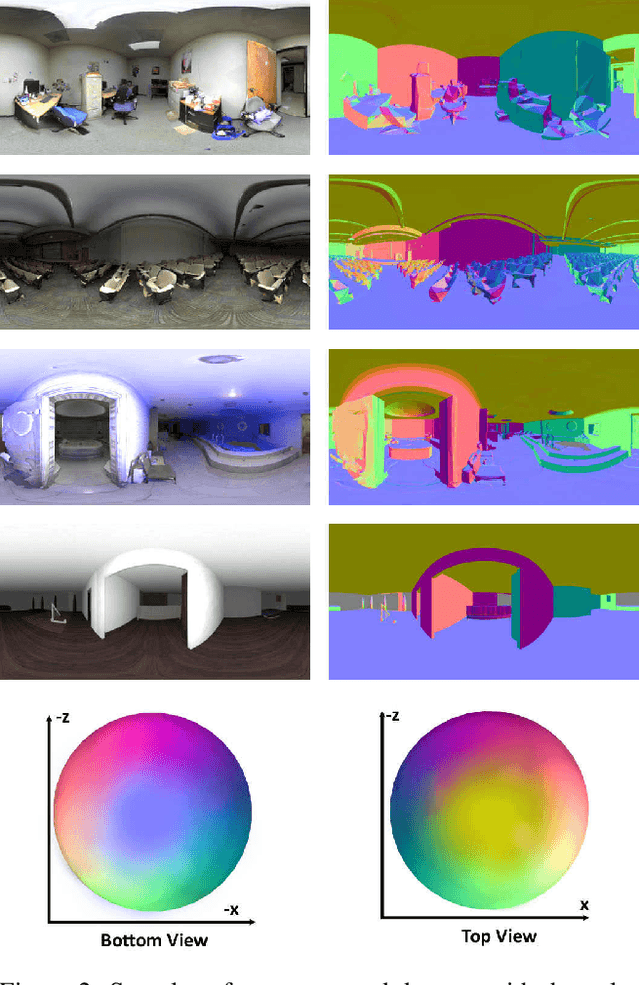

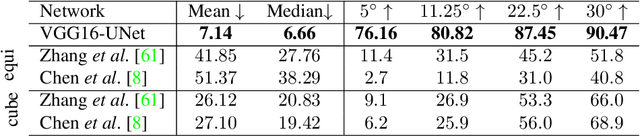

Abstract:Omnidirectional vision is becoming increasingly relevant as more efficient $360^\circ$ image acquisition is now possible. However, the lack of annotated $360^\circ$ datasets has hindered the application of deep learning techniques on spherical content. This is further exacerbated on tasks where ground truth acquisition is difficult, such as monocular surface estimation. While recent research approaches on the 2D domain overcome this challenge by generating normals from depth cues using RGB-D sensors, this is very difficult to apply on the spherical domain. In this work, we address the unavailability of sufficient $360^\circ$ ground truth normal data by leveraging existing 3D datasets and remodelling them via rendering. We present a dataset of $360^\circ$ images of indoor spaces with their corresponding ground truth surface normals, and train a deep convolutional neural network (CNN) on the task of monocular $360^\circ$ surface estimation. We achieve this by minimizing a novel angular loss function defined on the hyper-sphere using simple quaternion algebra. We take care to compare appropriately with other state-of-the-art methods trained on planar datasets. Finally, we present the practical applicability of our trained model on a spherical image re-lighting task using completely unseen data, qualitatively showing the promising generalization ability of our dataset and model. The dataset is available at: vcl3d.github.io/HyperSphereSurfaceRegression.
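
As a rough illustration of how an angular loss between surface normals can be written with quaternion algebra, the sketch below embeds each unit normal as a pure quaternion (zero scalar part) and reads the angle off the Hamilton product, whose scalar part is the negated dot product and whose vector part is the cross product. This is a minimal NumPy sketch under our own assumptions, not the paper's implementation: the function names `quat_mul` and `angular_loss`, the `(w, x, y, z)` layout, and the mean reduction are all hypothetical choices.

```python
import numpy as np

def quat_mul(p, q):
    """Hamilton product of quaternions stored as (..., 4) arrays in (w, x, y, z) order."""
    pw, pv = p[..., :1], p[..., 1:]
    qw, qv = q[..., :1], q[..., 1:]
    w = pw * qw - np.sum(pv * qv, axis=-1, keepdims=True)
    v = pw * qv + qw * pv + np.cross(pv, qv)
    return np.concatenate([w, v], axis=-1)

def angular_loss(pred, target, eps=1e-8):
    """Mean angle (radians) between predicted and target normals of shape (..., 3).

    Hypothetical formulation: for pure quaternions (0, a) and (0, b) the product
    is (-a.b, a x b), so the angle is atan2(|a x b|, a.b).
    """
    pred = pred / (np.linalg.norm(pred, axis=-1, keepdims=True) + eps)
    target = target / (np.linalg.norm(target, axis=-1, keepdims=True) + eps)
    zeros = np.zeros_like(pred[..., :1])
    p = np.concatenate([zeros, pred], axis=-1)    # pure quaternion (0, a)
    q = np.concatenate([zeros, target], axis=-1)  # pure quaternion (0, b)
    prod = quat_mul(p, q)
    cos_term = -prod[..., 0]                          # a . b
    sin_term = np.linalg.norm(prod[..., 1:], axis=-1) # |a x b|
    return float(np.mean(np.arctan2(sin_term, cos_term)))
```

Using `arctan2` on the sine and cosine terms, rather than `arccos` of the dot product alone, keeps the angle well conditioned when the two normals are nearly parallel or nearly opposite.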

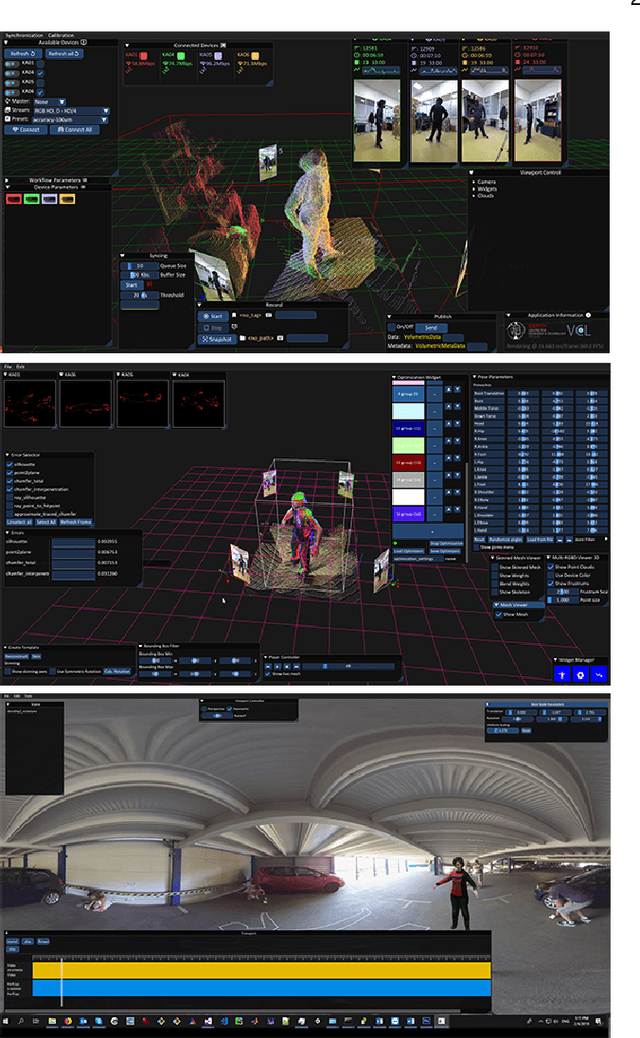

A Low-Cost, Flexible and Portable Volumetric Capturing System

Sep 03, 2019

Abstract:Multi-view capture systems are complex systems to engineer. They require technical knowledge to install and intricate processes to set up, related mainly to the sensors' spatial alignment (i.e. external calibration). However, with the ongoing developments in new production methods, we are now in a position where the production of high quality realistic 3D assets is possible even with commodity sensors. Nonetheless, the capturing systems developed with these methods are heavily intertwined with the methods themselves, relying on custom solutions, and are seldom, if ever, publicly available. In light of this, we design, develop and publicly offer a multi-view capture system based on the latest RGB-D sensor technology. For our system, we develop a portable and easy-to-use external calibration method that greatly reduces the effort and knowledge required and simplifies the overall process.

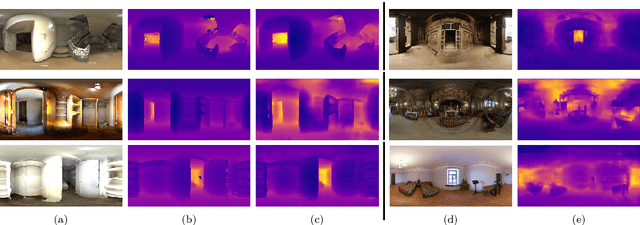

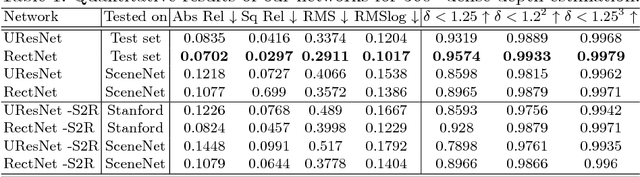

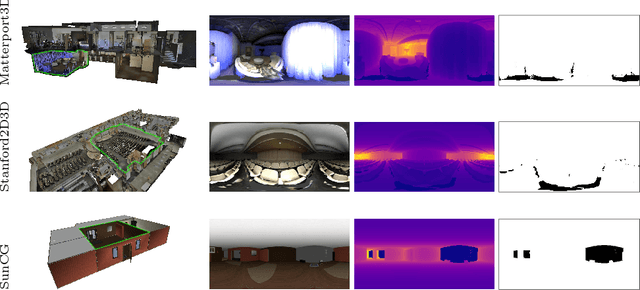

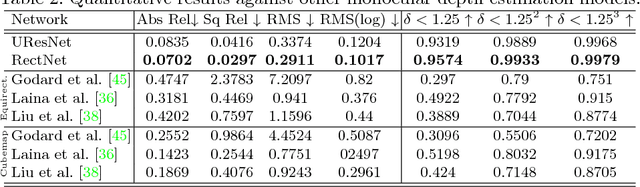

OmniDepth: Dense Depth Estimation for Indoors Spherical Panoramas

Jul 25, 2018

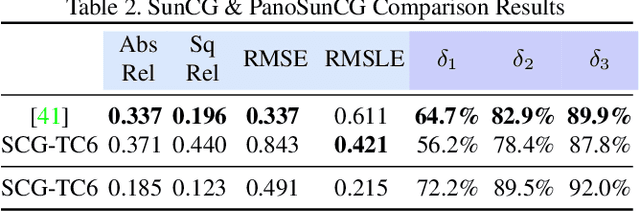

Abstract:Work on depth estimation has so far focused only on projective images, ignoring 360 content, which is now increasingly and more easily produced. We show that monocular depth estimation models trained on traditional images produce sub-optimal results on omnidirectional images, showcasing the need for training directly on 360 datasets, which, however, are hard to acquire. In this work, we circumvent the challenges associated with acquiring high quality 360 datasets with ground truth depth annotations by re-using recently released large scale 3D datasets and re-purposing them to 360 via rendering. This dataset, which is considerably larger than similar projective datasets, is publicly offered to the community to enable future research in this direction. We use this dataset to learn the task of depth estimation from 360 images in an end-to-end fashion. We show promising results on our synthesized data as well as on unseen realistic images.
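
To make the idea of rendering 3D scenes to 360 images more concrete, the sketch below computes one unit viewing direction per pixel of an equirectangular panorama; a renderer could cast a ray along each direction to obtain colour and depth. It is only an illustrative sketch, not the pipeline used for the dataset: the function name `equirect_directions` and the axis convention (y up, +z at the image centre, longitude increasing to the right) are assumptions made here.

```python
import numpy as np

def equirect_directions(width, height):
    """Unit ray directions for an equirectangular image, shape (height, width, 3).

    Assumed convention (hypothetical): columns span longitude [-pi, pi),
    rows span latitude from +pi/2 (top) to -pi/2 (bottom), y is up.
    """
    u = (np.arange(width) + 0.5) / width    # pixel centres in [0, 1)
    v = (np.arange(height) + 0.5) / height
    lon = (u - 0.5) * 2.0 * np.pi
    lat = (0.5 - v) * np.pi
    lon, lat = np.meshgrid(lon, lat)        # both (height, width)
    x = np.cos(lat) * np.sin(lon)
    y = np.sin(lat)
    z = np.cos(lat) * np.cos(lon)
    return np.stack([x, y, z], axis=-1)
```

For example, `equirect_directions(512, 256)` gives a `(256, 512, 3)` array of directions. Rows near the top and bottom cover much less solid angle than rows near the equator, which is one reason planar models transfer poorly to this format.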
