Anthony Hunter

Rule-Guided Feedback: Enhancing Reasoning by Enforcing Rule Adherence in Large Language Models

Mar 14, 2025

Abstract: In this paper, we introduce Rule-Guided Feedback (RGF), a framework designed to enhance Large Language Model (LLM) performance through structured rule adherence and strategic information seeking. RGF implements a teacher-student paradigm where rule-following is enforced through established guidelines. Our framework employs a Teacher model that rigorously evaluates each student output against task-specific rules, providing constructive guidance rather than direct answers when detecting deviations. This iterative feedback loop serves two crucial purposes: maintaining solutions within defined constraints and encouraging proactive information seeking to resolve uncertainties. We evaluate RGF on diverse tasks including Checkmate-in-One puzzles, Sonnet Writing, Penguins-In-a-Table classification, GSM8k, and StrategyQA. Our findings suggest that structured feedback mechanisms can significantly enhance LLMs' performance across various domains.

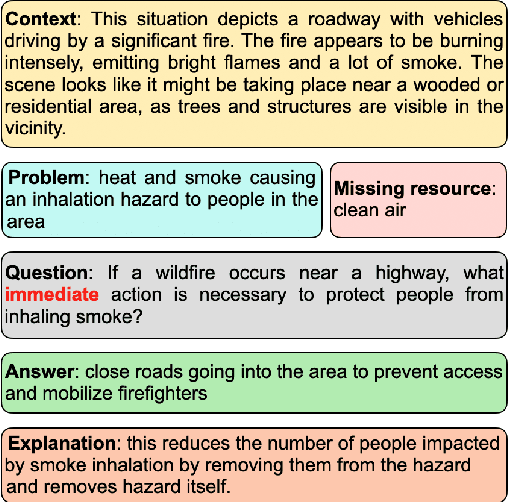

RESPONSE: Benchmarking the Ability of Language Models to Undertake Commonsense Reasoning in Crisis Situation

Mar 14, 2025

Abstract: An interesting class of commonsense reasoning problems arises when people are faced with natural disasters. To investigate this topic, we present RESPONSE, a human-curated dataset containing 1789 annotated instances featuring 6037 sets of questions designed to assess LLMs' commonsense reasoning in disaster situations across different time frames. The dataset includes problem descriptions, missing resources, time-sensitive solutions, and their justifications, with a subset validated by environmental engineers. Through both automatic metrics and human evaluation, we compare LLM-generated recommendations against human responses. Our findings show that even state-of-the-art models like GPT-4 achieve only 37% human-evaluated correctness for immediate response actions, highlighting significant room for improvement in LLMs' ability for commonsense reasoning in crises.

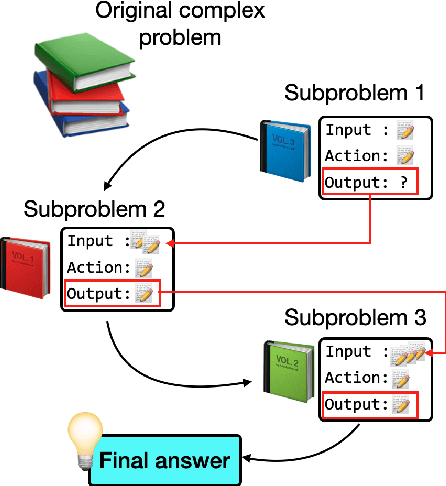

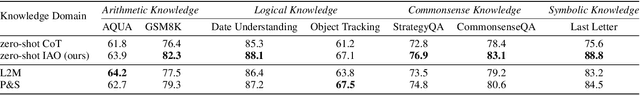

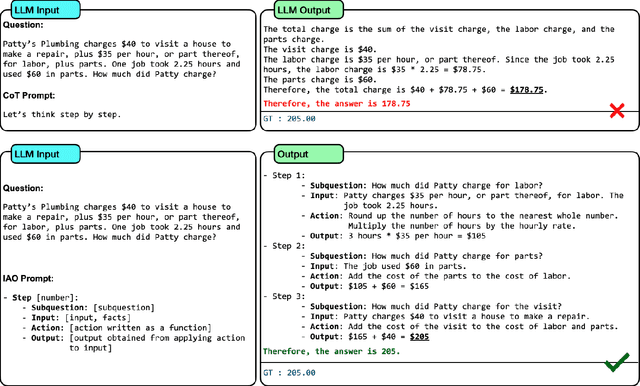

IAO Prompting: Making Knowledge Flow Explicit in LLMs through Structured Reasoning Templates

Feb 05, 2025

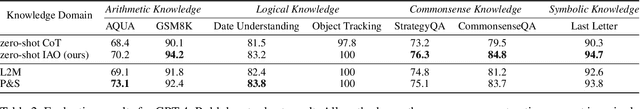

Abstract: While Large Language Models (LLMs) demonstrate impressive reasoning capabilities, understanding and validating their knowledge utilization remains challenging. Chain-of-thought (CoT) prompting partially addresses this by revealing intermediate reasoning steps, but the knowledge flow and application remain implicit. We introduce IAO (Input-Action-Output) prompting, a structured template-based method that explicitly models how LLMs access and apply their knowledge during complex reasoning tasks. IAO decomposes problems into sequential steps, each clearly identifying the input knowledge being used, the action being performed, and the resulting output. This structured decomposition enables us to trace knowledge flow, verify factual consistency, and identify potential knowledge gaps or misapplications. Through experiments across diverse reasoning tasks, we demonstrate that IAO not only improves zero-shot performance but also provides transparency in how LLMs leverage their stored knowledge. Human evaluation confirms that this structured approach enhances our ability to verify knowledge utilization and detect potential hallucinations or reasoning errors. Our findings provide insights into both knowledge representation within LLMs and methods for more reliable knowledge application.
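The Input-Action-Output decomposition described in this abstract can be pictured with a small data structure. The sketch below is an illustration only, not the paper's prompt template or code: the `IAOStep` class, the `untraced_inputs` helper, and the string encoding of facts are all hypothetical. It assumes that each step lists the knowledge it consumes, and it flags any input that is neither given in the question nor produced by an earlier step, which is one simple way to surface a possible knowledge gap.

```python
from dataclasses import dataclass


@dataclass
class IAOStep:
    """One reasoning step: the knowledge consumed, the operation, and the result."""
    inputs: list[str]
    action: str
    output: str


def untraced_inputs(given: set[str], steps: list[IAOStep]) -> list[tuple[int, str]]:
    """Return (step number, input) pairs whose input has no visible origin.

    An input is traceable if it was given up front or was the output of an
    earlier step. Anything else is reported as a potential knowledge gap.
    """
    known = set(given)
    gaps = []
    for number, step in enumerate(steps, start=1):
        for item in step.inputs:
            if item not in known:
                gaps.append((number, item))
        # The output of this step becomes available to later steps.
        known.add(step.output)
    return gaps


# Hypothetical trace for a toy arithmetic question.
given_facts = {"apples=3", "pears=2"}
trace = [
    IAOStep(inputs=["apples=3", "pears=2"], action="add", output="fruit=5"),
    IAOStep(inputs=["fruit=5", "price=2"], action="multiply", output="cost=10"),
]
gaps = untraced_inputs(given_facts, trace)
print(gaps)
```

Here the second step relies on `price=2`, which appears in neither the given facts nor an earlier output, so it is the only item reported.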

An Axiomatic Study of the Evaluation of Enthymeme Decoding in Weighted Structured Argumentation

Nov 07, 2024

Abstract: An argument can be seen as a pair consisting of a set of premises and a claim supported by them. Arguments used by humans are often enthymemes, i.e., some premises are implicit. To better understand, evaluate, and compare enthymemes, it is essential to decode them, i.e., to find the missing premises. Many enthymeme decodings are possible. We need to distinguish between reasonable decodings and unreasonable ones. However, there is currently no research in the literature on "How to evaluate decodings?". To pave the way and achieve this goal, we introduce seven criteria related to decoding, based on different research areas. Then, we introduce the notion of criterion measure, the objective of which is to evaluate a decoding with regard to a certain criterion. Since such measures need to be validated, we introduce several desirable properties for them, called axioms. Another main contribution of the paper is the construction of certain criterion measures that are validated by our axioms. Such measures can be used to identify the best enthymeme decodings.

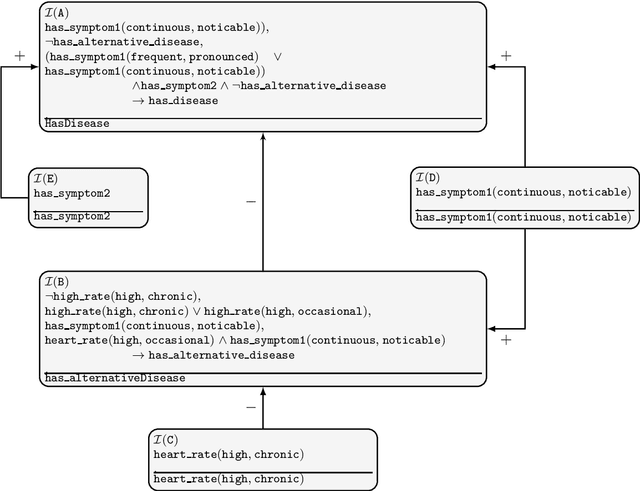

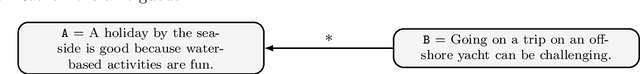

Understanding Enthymemes in Argument Maps: Bridging Argument Mining and Logic-based Argumentation

Aug 16, 2024

Abstract: Argument mining is a natural language processing technology aimed at identifying arguments in text. Furthermore, the approach is being developed to identify the premises and claims of those arguments, and to identify the relationships between arguments including support and attack relationships. In this paper, we assume that an argument map contains the premises and claims of arguments, and support and attack relationships between them, that have been identified by argument mining. So from a piece of text, we assume an argument map is obtained automatically by natural language processing. However, to understand and to automatically analyse that argument map, it would be desirable to instantiate that argument map with logical arguments. Once we have the logical representation of the arguments in an argument map, we can use automated reasoning to analyse the argumentation (e.g. check consistency of premises, check validity of claims, and check that the labelling on each arc corresponds with the logical arguments). We address this need by using classical logic for representing the explicit information in the text, and using default logic for representing the implicit information in the text. In order to investigate our proposal, we consider some specific options for instantiation.

Identification of Entailment and Contradiction Relations between Natural Language Sentences: A Neurosymbolic Approach

May 02, 2024

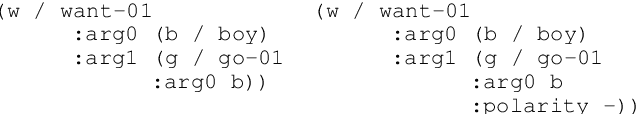

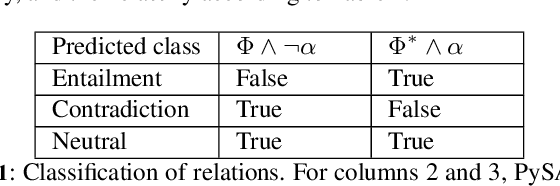

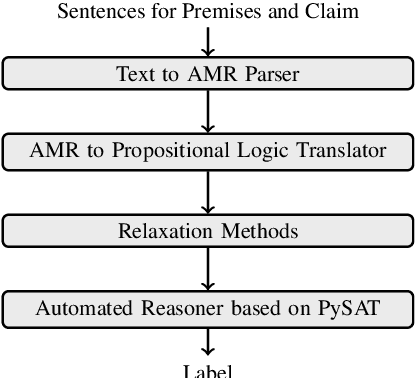

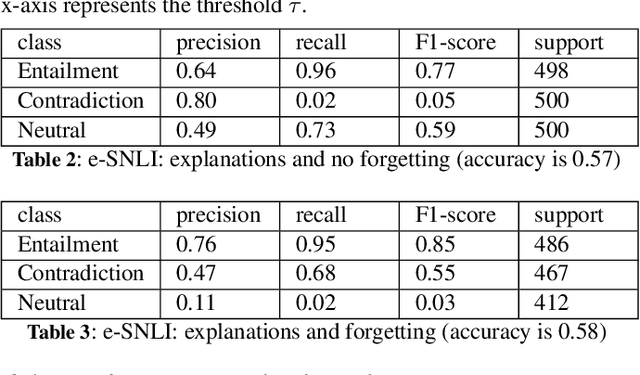

Abstract: Natural language inference (NLI), also known as Recognizing Textual Entailment (RTE), is an important aspect of natural language understanding. Most research now uses machine learning and deep learning to perform this task on specific datasets, meaning their solutions are neither explainable nor explicit. To address the need for an explainable approach to RTE, we propose a novel pipeline that is based on translating text into an Abstract Meaning Representation (AMR) graph. For this we use a pre-trained AMR parser. We then translate the AMR graph into propositional logic and use a SAT solver for automated reasoning. In text, often commonsense suggests that an entailment (or contradiction) relationship holds between a premise and a claim, but because different wordings are used, this is not identified from their logical representations. To address this, we introduce relaxation methods to allow replacement or forgetting of some propositions. Our experimental results show this pipeline performs well on four RTE datasets.
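The final reasoning step of such a pipeline rests on a standard fact of propositional logic: a premise entails a claim exactly when "premise and not claim" is unsatisfiable, and the two contradict when "premise and claim" is unsatisfiable. The sketch below illustrates only that step. It is not the paper's implementation: it swaps the SAT solver for a brute-force truth-table search, it skips the AMR parsing and the relaxation methods entirely, and the atoms `rain` and `wet` and all function names are made up for the example.

```python
from itertools import product


def satisfiable(formula, atoms):
    """Brute-force check: does any truth assignment make the formula true?"""
    return any(
        formula(dict(zip(atoms, values)))
        for values in product([False, True], repeat=len(atoms))
    )


def entails(premise, claim, atoms):
    """premise |= claim  iff  (premise AND NOT claim) is unsatisfiable."""
    return not satisfiable(lambda v: premise(v) and not claim(v), atoms)


def contradicts(premise, claim, atoms):
    """premise and claim contradict  iff  (premise AND claim) is unsatisfiable."""
    return not satisfiable(lambda v: premise(v) and claim(v), atoms)


# Hypothetical propositional encoding of a premise and two candidate claims.
atoms = ["rain", "wet"]
premise = lambda v: v["rain"] and ((not v["rain"]) or v["wet"])  # rain, and rain -> wet
claim_wet = lambda v: v["wet"]
claim_dry = lambda v: not v["wet"]

print(entails(premise, claim_wet, atoms))      # premise forces "wet"
print(contradicts(premise, claim_dry, atoms))  # premise rules out "not wet"
```

Enumerating all assignments is exponential in the number of atoms, so this only suits toy inputs. A real SAT solver answers the same satisfiability question at scale.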

Unsupervised Learning of Graph from Recipes

Jan 22, 2024

Abstract: Cooking recipes are one of the most readily available kinds of procedural text. They consist of natural language instructions that can be challenging to interpret. In this paper, we propose a model to identify relevant information from recipes and generate a graph to represent the sequence of actions in the recipe. In contrast with other approaches, we use an unsupervised approach. We iteratively learn the graph structure and the parameters of a GNN encoding the texts (text-to-graph) one sequence at a time while providing the supervision by decoding the graph into text (graph-to-text) and comparing the generated text to the input. We evaluate the approach by comparing the identified entities with annotated datasets, comparing the difference between the input and output texts, and comparing our generated graphs with those generated by state-of-the-art methods.

PizzaCommonSense: Learning to Model Commonsense Reasoning about Intermediate Steps in Cooking Recipes

Jan 12, 2024

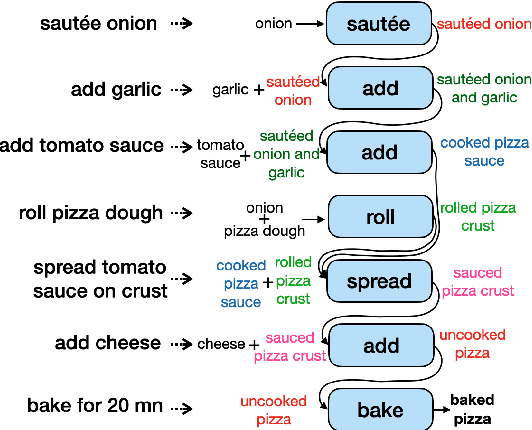

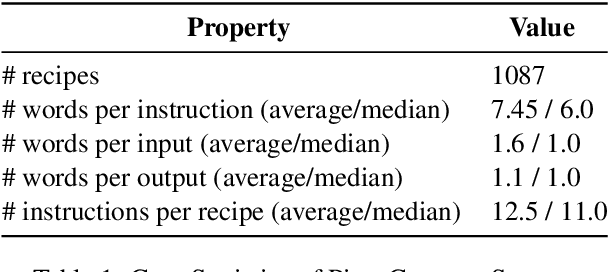

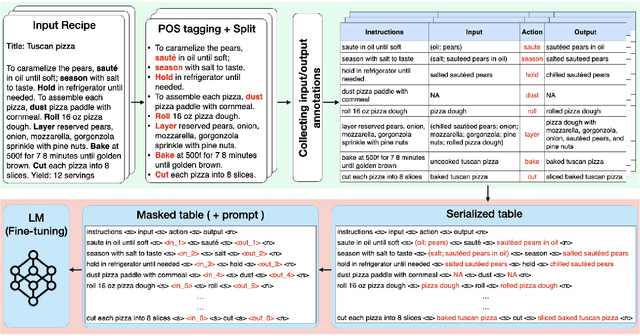

Abstract: Decoding the core of procedural texts, exemplified by cooking recipes, is crucial for intelligent reasoning and instruction automation. Procedural texts can be comprehensively defined as a sequential chain of steps to accomplish a task employing resources. From a cooking perspective, these instructions can be interpreted as a series of modifications to a food preparation, which initially comprises a set of ingredients. These changes involve transformations of comestible resources. For a model to effectively reason about cooking recipes, it must accurately discern and understand the inputs and outputs of intermediate steps within the recipe. Aiming to address this, we present a new corpus of cooking recipes enriched with descriptions of intermediate steps of the recipes that explicate the input and output for each step. We discuss the data collection process, investigate and provide baseline models based on T5 and GPT-3.5. This work presents a challenging task and insight into commonsense reasoning and procedural text generation.

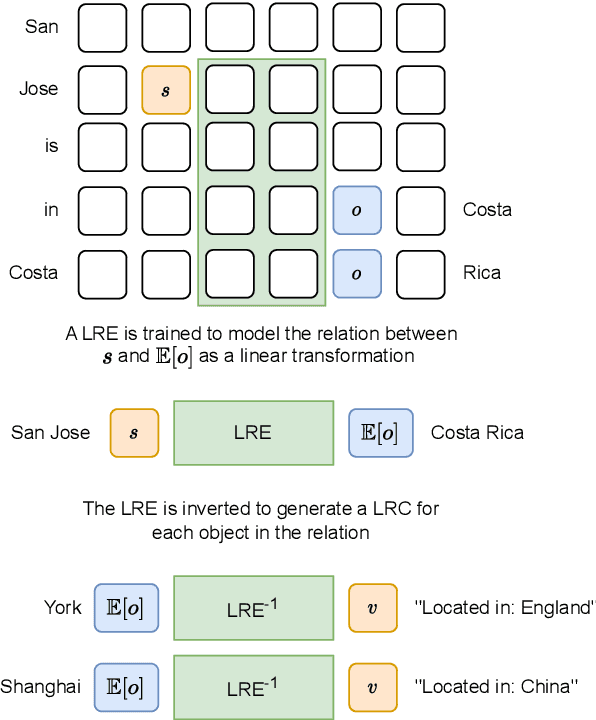

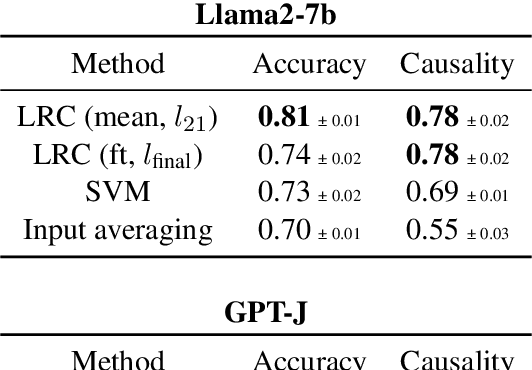

Identifying Linear Relational Concepts in Large Language Models

Nov 15, 2023

Abstract: Transformer language models (LMs) have been shown to represent concepts as directions in the latent space of hidden activations. However, for any given human-interpretable concept, how can we find its direction in the latent space? We present a technique called linear relational concepts (LRC) for finding concept directions corresponding to human-interpretable concepts at a given hidden layer in a transformer LM by first modeling the relation between subject and object as a linear relational embedding (LRE). While the LRE work was mainly presented as an exercise in understanding model representations, we find that inverting the LRE while using earlier object layers results in a powerful technique to find concept directions that both work well as a classifier and causally influence model outputs.

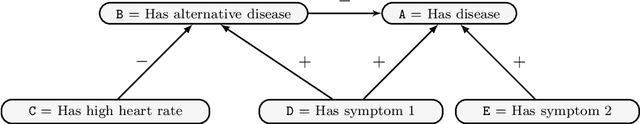

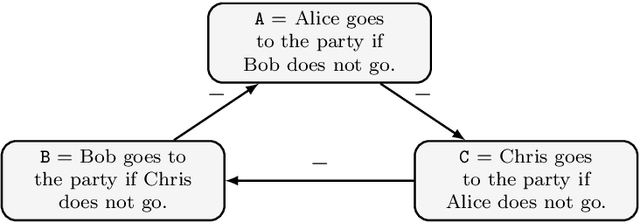

Some Options for Instantiation of Bipolar Argument Graphs with Deductive Arguments

Aug 08, 2023

Abstract: Argument graphs provide an abstract representation of an argumentative situation. A bipolar argument graph is a directed graph where each node denotes an argument, and each arc denotes the influence of one argument on another. Here we assume that the influence is supporting, attacking, or ambiguous. In a bipolar argument graph, each argument is atomic and so it has no internal structure. Yet to better understand the nature of the individual arguments, and how they interact, it is important to consider their internal structure. To address this need, this paper presents a framework based on the use of logical arguments to instantiate bipolar argument graphs, and a set of possible constraints on instantiating arguments that take into account the internal structure of the arguments, and the types of relationship between arguments.
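As a concrete picture of the abstract structure described above, a bipolar argument graph can be held as a set of atomic argument labels plus a mapping from directed arcs to one of the three influence types. This is a minimal sketch under that reading, not the paper's formalism: the class names, method names, and the argument labels `A`, `B`, `C` are all hypothetical, and the instantiation with logical arguments is not modelled.

```python
from dataclasses import dataclass, field
from enum import Enum


class Influence(Enum):
    SUPPORT = "support"
    ATTACK = "attack"
    AMBIGUOUS = "ambiguous"


@dataclass
class BipolarArgumentGraph:
    """Directed graph whose nodes are atomic arguments and whose arcs carry an influence label."""
    arguments: set[str] = field(default_factory=set)
    arcs: dict[tuple[str, str], Influence] = field(default_factory=dict)

    def add_arc(self, source: str, target: str, influence: Influence) -> None:
        # Adding an arc also registers both endpoints as arguments.
        self.arguments.update((source, target))
        self.arcs[(source, target)] = influence

    def influencers(self, target: str, influence: Influence) -> list[str]:
        """Arguments with an arc of the given type into `target`, sorted by label."""
        return sorted(
            source
            for (source, arc_target), kind in self.arcs.items()
            if arc_target == target and kind is influence
        )


graph = BipolarArgumentGraph()
graph.add_arc("A", "C", Influence.SUPPORT)
graph.add_arc("B", "C", Influence.ATTACK)
print(graph.influencers("C", Influence.ATTACK))
```

Because the arguments here are plain labels, the sketch reflects the atomic view only. Giving each node premises and a claim is the instantiation step the abstract goes on to motivate.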
