Anthony Bourached

GAUCHE: A Library for Gaussian Processes in Chemistry

Dec 06, 2022

Abstract:We introduce GAUCHE, a library for GAUssian processes in CHEmistry. Gaussian processes have long been a cornerstone of probabilistic machine learning, affording particular advantages for uncertainty quantification and Bayesian optimisation. Extending Gaussian processes to chemical representations, however, is nontrivial, necessitating kernels defined over structured inputs such as graphs, strings and bit vectors. By defining such kernels in GAUCHE, we seek to open the door to powerful tools for uncertainty quantification and Bayesian optimisation in chemistry. Motivated by scenarios frequently encountered in experimental chemistry, we showcase applications for GAUCHE in molecular discovery and chemical reaction optimisation. The codebase is made available at https://github.com/leojklarner/gauche
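As an illustration of what a kernel over bit vectors can look like, here is a minimal sketch of the Tanimoto similarity, a common choice for binary molecular fingerprints. It is written in plain Python and does not use or reproduce GAUCHE's API; the function names `tanimoto` and `tanimoto_gram` and the toy fingerprints are assumptions made for this example.

```python
def tanimoto(x, y):
    """Tanimoto similarity between two equal-length binary fingerprints."""
    if len(x) != len(y):
        raise ValueError("fingerprints must have the same length")
    both = sum(a & b for a, b in zip(x, y))    # bits set in both vectors
    either = sum(a | b for a, b in zip(x, y))  # bits set in at least one
    # Convention assumed here: two all-zero fingerprints count as identical.
    return 1.0 if either == 0 else both / either


def tanimoto_gram(fingerprints):
    """Pairwise similarity matrix, usable as a kernel (Gram) matrix."""
    return [[tanimoto(a, b) for b in fingerprints] for a in fingerprints]


# Toy 6-bit fingerprints (hypothetical, not real molecules).
fps = [
    [1, 1, 0, 1, 0, 0],
    [1, 0, 0, 1, 1, 0],
    [0, 0, 1, 0, 0, 1],
]
K = tanimoto_gram(fps)
for row in K:
    print(row)
```

The resulting matrix is symmetric with ones on the diagonal, which is the shape a Gaussian process expects from a covariance function evaluated on a set of inputs.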

Extracting associations and meanings of objects depicted in artworks through bi-modal deep networks

Mar 16, 2022

Abstract:We present a novel bi-modal system based on deep networks to address the problem of learning associations and simple meanings of objects depicted in "authored" images, such as fine art paintings and drawings. Our overall system processes both the images and associated texts in order to learn associations between images of individual objects, their identities and the abstract meanings they signify. Unlike past deep nets that describe depicted objects and infer predicates, our system identifies meaning-bearing objects ("signifiers") and their associations ("signifieds") as well as basic overall meanings for target artworks. Our system had precision of 48% and recall of 78% with an F1 metric of 0.6 on a curated set of Dutch vanitas paintings, a genre celebrated for its concentration on conveying a meaning of great import at the time of their execution. We developed and tested our system on fine art paintings but our general methods can be applied to other authored images.
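The reported F1 value can be checked from the stated precision and recall, since F1 is their harmonic mean. The short sketch below only redoes that arithmetic; the helper name `f1_score` is an assumption for this example.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


f1 = f1_score(0.48, 0.78)
print(round(f1, 3))  # 0.594, consistent with the reported 0.6 after rounding
```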

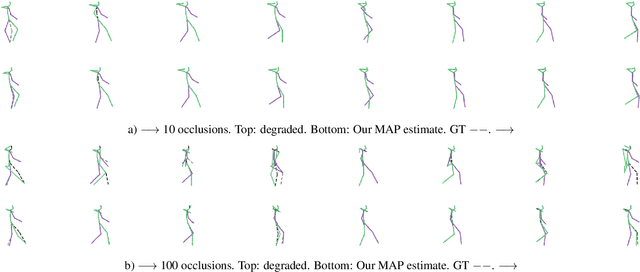

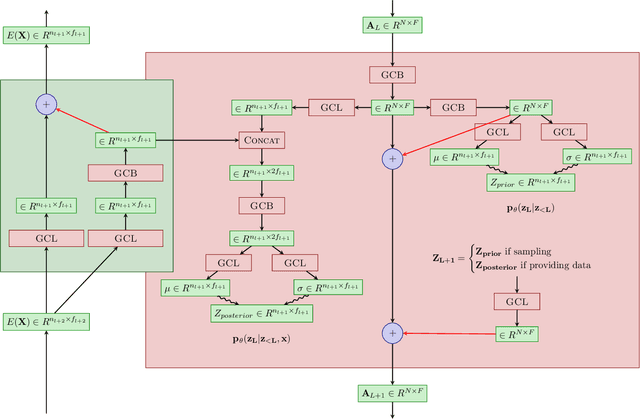

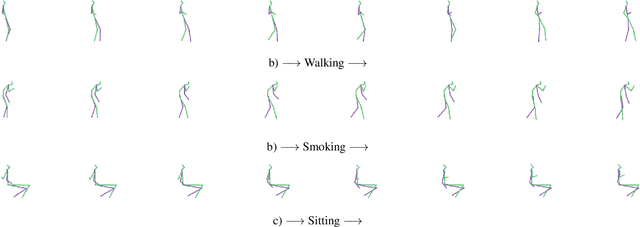

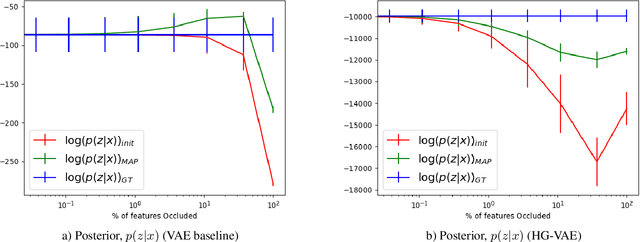

Hierarchical Graph-Convolutional Variational AutoEncoding for Generative Modelling of Human Motion

Nov 29, 2021

Abstract:Models of human motion commonly focus either on trajectory prediction or action classification but rarely both. The marked heterogeneity and intricate compositionality of human motion render each task vulnerable to the data degradation and distributional shift common to real-world scenarios. A sufficiently expressive generative model of action could in theory enable data conditioning and distributional resilience within a unified framework applicable to both tasks. Here we propose a novel architecture based on hierarchical variational autoencoders and deep graph convolutional neural networks for generating a holistic model of action over multiple time-scales. We show this Hierarchical Graph-convolutional Variational Autoencoder (HG-VAE) to be capable of generating coherent actions, detecting out-of-distribution data, and imputing missing data by gradient ascent on the model's posterior. Trained and evaluated on H3.6M and the largest collection of open source human motion data, AMASS, we show HG-VAE can facilitate downstream discriminative learning better than baseline models.

Computational identification of significant actors in paintings through symbols and attributes

Feb 04, 2021

Abstract:The automatic analysis of fine art paintings presents a number of novel technical challenges to artificial intelligence, computer vision, machine learning, and knowledge representation quite distinct from those arising in the analysis of traditional photographs. The most important difference is that many realist paintings depict stories or episodes in order to convey a lesson, moral, or meaning. One early step in automatic interpretation and extraction of meaning in artworks is the identification of figures (actors). In Christian art, specifically, one must identify the actors in order to identify the Biblical episode or story depicted, an important step in understanding the artwork. We designed an automatic system based on deep convolutional neural networks and a simple knowledge database to identify saints throughout six centuries of Christian art based in large part upon saints' symbols or attributes. Our work represents initial steps in the broad task of automatic semantic interpretation of messages and meaning in fine art.

Resolution enhancement in the recovery of underdrawings via style transfer by generative adversarial deep neural networks

Jan 30, 2021

Abstract:We apply generative adversarial convolutional neural networks to the problem of style transfer to underdrawings and ghost-images in x-rays of fine art paintings with a special focus on enhancing their spatial resolution. We build upon a neural architecture developed for the related problem of synthesizing high-resolution photo-realistic images from semantic label maps. Our neural architecture achieves high resolution through a hierarchy of generator and discriminator sub-networks, working throughout a range of spatial resolutions. This coarse-to-fine generator architecture can increase the effective resolution by a factor of eight in each spatial direction, or an overall increase in number of pixels by a factor of 64. We also show that even just a few examples of human-generated image segmentations can greatly improve -- qualitatively and quantitatively -- the generated images. We demonstrate our method on works such as Leonardo's Madonna of the Carnation and the underdrawing in his Virgin of the Rocks, which pose several special problems in style transfer, including the paucity of representative works from which to learn and transfer style information.
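The relationship between the per-axis factor of eight and the overall factor of 64 in pixel count can be checked with a toy example. The sketch below uses plain nearest-neighbour upsampling purely to count pixels; it is not the paper's generator, and `upsample_nearest` and the 2x3 toy image are assumptions made for this example.

```python
def upsample_nearest(image, factor):
    """Repeat every pixel `factor` times along both axes."""
    return [
        [px for px in row for _ in range(factor)]
        for row in image
        for _ in range(factor)
    ]


small = [[0, 1, 2],
         [3, 4, 5]]           # 2 x 3 toy image
large = upsample_nearest(small, 8)

pixels_before = len(small) * len(small[0])
pixels_after = len(large) * len(large[0])
print(len(large), len(large[0]))      # 16 24
print(pixels_after // pixels_before)  # 64
```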

Recovery of underdrawings and ghost-paintings via style transfer by deep convolutional neural networks: A digital tool for art scholars

Jan 04, 2021

Abstract:We describe the application of convolutional neural network style transfer to the problem of improved visualization of underdrawings and ghost-paintings in fine art oil paintings. Such underdrawings and hidden paintings are typically revealed by x-ray or infrared techniques which yield images that are grayscale, and thus devoid of color and full style information. Past methods for inferring color in underdrawings have been based on physical x-ray fluorescence spectral imaging of pigments in ghost-paintings and are thus expensive, time-consuming, and require equipment not available in most conservation studios. Our algorithmic methods do not need such expensive physical imaging devices. Our proof-of-concept system, applied to works by Pablo Picasso and Leonardo, reveals colors and designs that respect the natural segmentation in the ghost-painting. We believe the computed images provide insight into the artist and associated oeuvre not available by other means. Our results strongly suggest that future applications based on larger corpora of paintings for training will display color schemes and designs that even more closely resemble works of the artist. For these reasons refinements to our methods should find wide use in art conservation, connoisseurship, and art analysis.

Generative Model-Enhanced Human Motion Prediction

Oct 05, 2020

Abstract:The task of predicting human motion is complicated by the natural heterogeneity and compositionality of actions, necessitating robustness to distributional shifts up to and including out-of-distribution (OoD) data. Here we formulate a new OoD benchmark based on the Human3.6M and CMU motion capture datasets, and introduce a hybrid framework for hardening discriminative architectures to OoD failure by augmenting them with a generative model. When applied to current state-of-the-art discriminative models, we show that the proposed approach improves OoD robustness without sacrificing in-distribution performance, and can facilitate model interpretability. We suggest human motion predictors ought to be constructed with OoD challenges in mind, and provide an extensible general framework for hardening diverse discriminative architectures to extreme distributional shift. The code is available at https://github.com/bouracha/OoDMotion.

Unsupervised Videographic Analysis of Rodent Behaviour

Oct 26, 2019

Abstract:Animal behaviour is complex and the amount of data in the form of video, if extracted, is copious. Manual analysis of behaviour is massively limited by two insurmountable obstacles: the complexity of the behavioural patterns and human bias. Automated visual analysis has the potential to eliminate both of these issues and also enable continuous analysis, allowing a much higher bandwidth of data collection, which is vital to capture complex behaviour at many different time scales. Behaviour is not confined to a finite set of modules and thus we can only model it by inferring the generative distribution. In this way unpredictable, anomalous behaviour may be considered. Here we present a method of unsupervised behavioural analysis from nothing but high definition video recordings taken from a single, fixed perspective. We demonstrate that stereotyped rodent behaviour can be identified in this way.

Raiders of the Lost Art

Sep 10, 2019

Abstract:Neural style transfer, first proposed by Gatys et al. (2015), can be used to create novel artistic work through rendering a content image in the form of a style image. We present a novel method of reconstructing lost artwork by applying neural style transfer to x-radiographs of artwork with secondary interior artwork beneath a primary exterior. Finally, we reflect on AI art exhibitions and discuss the social, cultural, ethical, and philosophical impact of these technical innovations.
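In the style-transfer formulation of Gatys et al., the style of an image is summarised by Gram matrices of feature maps, and a style loss compares the Gram matrices of the generated and style images. The sketch below illustrates that idea in plain Python on toy feature maps. It is not the authors' implementation: the normalisation constants are simplified, there is no neural network, and the names `gram_matrix` and `style_loss` are assumptions made for this example.

```python
def gram_matrix(features):
    """Channel-by-channel correlations of a feature map.

    `features` is a list of C channels, each a flat list of N activations.
    Dividing by N is a simplified normalisation assumed for this sketch.
    """
    n = len(features[0])
    return [
        [sum(a * b for a, b in zip(fi, fj)) / n for fj in features]
        for fi in features
    ]


def style_loss(generated, style):
    """Mean squared difference between the two Gram matrices."""
    g, s = gram_matrix(generated), gram_matrix(style)
    c = len(g)
    return sum((g[i][j] - s[i][j]) ** 2
               for i in range(c) for j in range(c)) / (c * c)


# Toy feature maps: 2 channels, 2 spatial positions each (hypothetical values).
style_feats = [[1.0, 2.0], [3.0, 4.0]]
other_feats = [[2.0, 1.0], [0.0, 1.0]]

print(gram_matrix(style_feats))              # [[2.5, 5.5], [5.5, 12.5]]
print(style_loss(style_feats, style_feats))  # 0.0
print(style_loss(other_feats, style_feats) > 0)
```

In a full pipeline the feature maps would come from layers of a pretrained convolutional network, and the loss would be minimised with respect to the generated image alongside a content loss.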
