Anna Schmitz

Fraunhofer Institute for Intelligent Analysis and Information Systems IAIS Sankt Augustin, Germany

Textual Data Bias Detection and Mitigation -- An Extensible Pipeline with Experimental Evaluation

Dec 12, 2025Abstract:Textual data used to train large language models (LLMs) exhibits multifaceted bias manifestations encompassing harmful language and skewed demographic distributions. Regulations such as the European AI Act require identifying and mitigating biases against protected groups in data, with the ultimate goal of preventing unfair model outputs. However, practical guidance and operationalization are lacking. We propose a comprehensive data bias detection and mitigation pipeline comprising four components that address two data bias types, namely representation bias and (explicit) stereotypes for a configurable sensitive attribute. First, we leverage LLM-generated word lists created based on quality criteria to detect relevant group labels. Second, representation bias is quantified using the Demographic Representation Score. Third, we detect and mitigate stereotypes using sociolinguistically informed filtering. Finally, we compensate representation bias through Grammar- and Context-Aware Counterfactual Data Augmentation. We conduct a two-fold evaluation using the examples of gender, religion and age. First, the effectiveness of each individual component on data debiasing is evaluated through human validation and baseline comparison. The findings demonstrate that we successfully reduce representation bias and (explicit) stereotypes in a text dataset. Second, the effect of data debiasing on model bias reduction is evaluated by bias benchmarking of several models (0.6B-8B parameters), fine-tuned on the debiased text dataset. This evaluation reveals that LLMs fine-tuned on debiased data do not consistently show improved performance on bias benchmarks, exposing critical gaps in current evaluation methodologies and highlighting the need for targeted data manipulation to address manifested model bias.

Developing trustworthy AI applications with foundation models

May 08, 2024

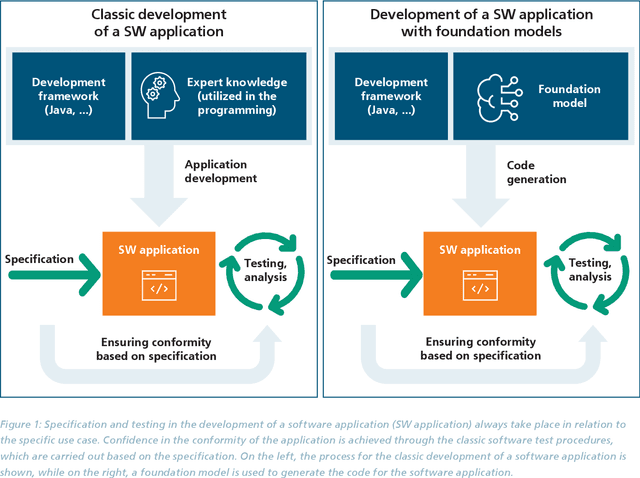

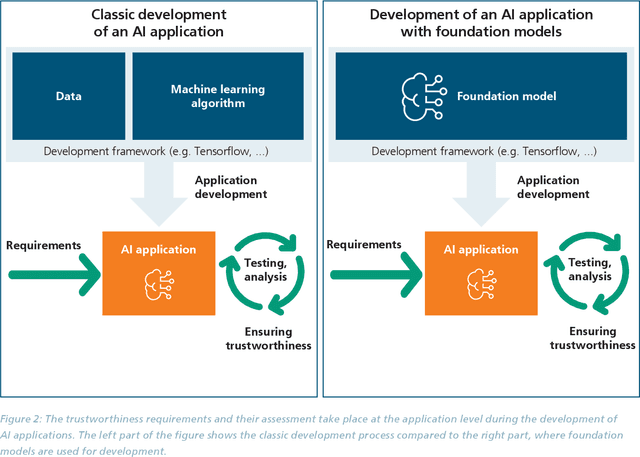

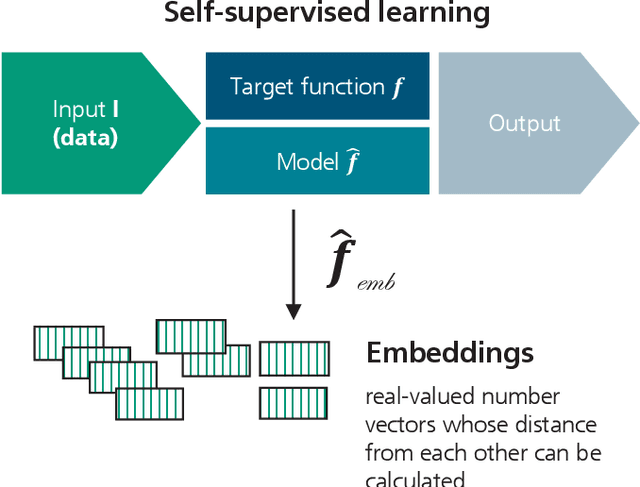

Abstract:The trustworthiness of AI applications has been the subject of recent research and is also addressed in the EU's recently adopted AI Regulation. The currently emerging foundation models in the field of text, speech and image processing offer completely new possibilities for developing AI applications. This whitepaper shows how the trustworthiness of an AI application developed with foundation models can be evaluated and ensured. For this purpose, the application-specific, risk-based approach for testing and ensuring the trustworthiness of AI applications, as developed in the 'AI Assessment Catalog - Guideline for Trustworthy Artificial Intelligence' by Fraunhofer IAIS, is transferred to the context of foundation models. Special consideration is given to the fact that specific risks of foundation models can have an impact on the AI application and must also be taken into account when checking trustworthiness. Chapter 1 of the white paper explains the fundamental relationship between foundation models and AI applications based on them in terms of trustworthiness. Chapter 2 provides an introduction to the technical construction of foundation models and Chapter 3 shows how AI applications can be developed based on them. Chapter 4 provides an overview of the resulting risks regarding trustworthiness. Chapter 5 shows which requirements for AI applications and foundation models are to be expected according to the draft of the European Union's AI Regulation and Chapter 6 finally shows the system and procedure for meeting trustworthiness requirements.

Guideline for Trustworthy Artificial Intelligence -- AI Assessment Catalog

Jun 20, 2023Abstract:Artificial Intelligence (AI) has made impressive progress in recent years and represents a key technology that has a crucial impact on the economy and society. However, it is clear that AI and business models based on it can only reach their full potential if AI applications are developed according to high quality standards and are effectively protected against new AI risks. For instance, AI bears the risk of unfair treatment of individuals when processing personal data e.g., to support credit lending or staff recruitment decisions. The emergence of these new risks is closely linked to the fact that the behavior of AI applications, particularly those based on Machine Learning (ML), is essentially learned from large volumes of data and is not predetermined by fixed programmed rules. Thus, the issue of the trustworthiness of AI applications is crucial and is the subject of numerous major publications by stakeholders in politics, business and society. In addition, there is mutual agreement that the requirements for trustworthy AI, which are often described in an abstract way, must now be made clear and tangible. One challenge to overcome here relates to the fact that the specific quality criteria for an AI application depend heavily on the application context and possible measures to fulfill them in turn depend heavily on the AI technology used. Lastly, practical assessment procedures are needed to evaluate whether specific AI applications have been developed according to adequate quality standards. This AI assessment catalog addresses exactly this point and is intended for two target groups: Firstly, it provides developers with a guideline for systematically making their AI applications trustworthy. Secondly, it guides assessors and auditors on how to examine AI applications for trustworthiness in a structured way.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge