Anna Hilsmann

Computer Vision & Graphics, Vision & Imaging Technologies, Fraunhofer Heinrich-Hertz-Institute HHI, Berlin

CGS-GAN: 3D Consistent Gaussian Splatting GANs for High Resolution Human Head Synthesis

May 23, 2025

Abstract:Recently, 3D GANs based on 3D Gaussian splatting have been proposed for high quality synthesis of human heads. However, existing methods stabilize training and enhance rendering quality from steep viewpoints by conditioning the random latent vector on the current camera position. This compromises 3D consistency, as we observe significant identity changes when re-synthesizing the 3D head with each camera shift. Conversely, fixing the camera to a single viewpoint yields high-quality renderings for that perspective but results in poor performance for novel views. Removing view-conditioning typically destabilizes GAN training, often causing the training to collapse. In response to these challenges, we introduce CGS-GAN, a novel 3D Gaussian Splatting GAN framework that enables stable training and high-quality 3D-consistent synthesis of human heads without relying on view-conditioning. To ensure training stability, we introduce a multi-view regularization technique that enhances generator convergence with minimal computational overhead. Additionally, we adapt the conditional loss used in existing 3D Gaussian splatting GANs and propose a generator architecture designed to not only stabilize training but also facilitate efficient rendering and straightforward scaling, enabling output resolutions up to $2048^2$. To evaluate the capabilities of CGS-GAN, we curate a new dataset derived from FFHQ. This dataset enables very high resolutions, focuses on larger portions of the human head, reduces view-dependent artifacts for improved 3D consistency, and excludes images where subjects are obscured by hands or other objects. As a result, our approach achieves very high rendering quality, supported by competitive FID scores, while ensuring consistent 3D scene generation. Check out our project page here: https://fraunhoferhhi.github.io/cgs-gan/

AppleGrowthVision: A large-scale stereo dataset for phenological analysis, fruit detection, and 3D reconstruction in apple orchards

May 20, 2025

Abstract:Deep learning has transformed computer vision for precision agriculture, yet apple orchard monitoring remains limited by dataset constraints: the lack of diverse, realistic datasets and the difficulty of annotating dense, heterogeneous scenes. Existing datasets overlook different growth stages and stereo imagery, both essential for realistic 3D modeling of orchards and tasks like fruit localization, yield estimation, and structural analysis. To address these gaps, we present AppleGrowthVision, a large-scale dataset comprising two subsets. The first includes 9,317 high-resolution stereo images collected from a farm in Brandenburg (Germany), covering six agriculturally validated growth stages over a full growth cycle. The second subset consists of 1,125 densely annotated images from the same farm in Brandenburg and one in Pillnitz (Germany), containing a total of 31,084 apple labels. AppleGrowthVision provides stereo-image data with agriculturally validated growth stages, enabling precise phenological analysis and 3D reconstructions. Extending MinneApple with our data improves YOLOv8 performance by 7.69 % in terms of F1-score, while adding it to MinneApple and MAD boosts Faster R-CNN F1-score by 31.06 %. Additionally, six BBCH stages were predicted with over 95 % accuracy using VGG16, ResNet152, DenseNet201, and MobileNetv2. AppleGrowthVision bridges the gap between agricultural science and computer vision by enabling the development of robust models for fruit detection, growth modeling, and 3D analysis in precision agriculture. Future work includes improving annotation, enhancing 3D reconstruction, and extending multimodal analysis across all growth stages.

Intraoperative perfusion assessment by continuous, low-latency hyperspectral light-field imaging: development, methodology, and clinical application

Apr 15, 2025

Abstract:Accurate assessment of tissue perfusion is crucial in visceral surgery, especially during anastomosis. Currently, subjective visual judgment is commonly employed in clinical settings. Hyperspectral imaging (HSI) offers a non-invasive, quantitative alternative. However, HSI lacks continuous integration into the clinical workflow. This study presents a hyperspectral light field system for intraoperative tissue oxygen saturation (SO2) analysis and visualization. We present a correlation method for determining SO2 with low computational demands. We demonstrate clinical application, with our results aligning with the perfusion boundaries determined by the surgeon. We perform and compare continuous perfusion analysis using two hyperspectral cameras (Cubert S5, Cubert X20), achieving processing times of < 170 ms and < 400 ms, respectively. We discuss camera characteristics, system parameters, and the suitability for clinical use and real-time applications.

* 6 pages, 4 figures

Improving Adaptive Density Control for 3D Gaussian Splatting

Mar 18, 2025

Abstract:3D Gaussian Splatting (3DGS) has become one of the most influential works in the past year. Due to its efficient and high-quality novel view synthesis capabilities, it has been widely adopted in many research fields and applications. Nevertheless, 3DGS still faces challenges in properly managing the number of Gaussian primitives that are used during scene reconstruction. Following the adaptive density control (ADC) mechanism of 3D Gaussian Splatting, new Gaussians in under-reconstructed regions are created, while Gaussians that do not contribute to the rendering quality are pruned. We observe that those criteria for densifying and pruning Gaussians can sometimes lead to worse rendering by introducing artifacts. We especially observe under-reconstructed background or overfitted foreground regions. To counter both problems, we propose three new improvements to the adaptive density control mechanism. Those include a correction for the scene extent calculation that does not only rely on camera positions, an exponentially ascending gradient threshold to improve training convergence, and a significance-aware pruning strategy to avoid background artifacts. With these adaptations, we show that the rendering quality improves while using the same number of Gaussian primitives. Furthermore, with our improvements, the training converges considerably faster, allowing for more than twice as fast training times while yielding better quality than 3DGS. Finally, our contributions are easily compatible with most existing derivative works of 3DGS, making them relevant for future works.
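
As a rough illustration of what an "exponentially ascending gradient threshold" could look like, the sketch below interpolates geometrically between a start and an end value over the course of training. The function name, the parameter names, and the default values are assumptions chosen for illustration; the abstract does not state the schedule or the values the authors actually use.

```python
def exponential_threshold(step, total_steps, start=2e-4, end=4e-4):
    """Hypothetical densification threshold that rises exponentially.

    Interpolates geometrically from `start` (at step 0) to `end`
    (at `total_steps`). `start` and `end` are placeholder values,
    not taken from the paper.
    """
    # Clamp training progress to [0, 1] so out-of-range steps are safe.
    progress = min(max(step / total_steps, 0.0), 1.0)
    # Geometric interpolation: start * (end / start) ** progress.
    return start * (end / start) ** progress


if __name__ == "__main__":
    for step in (0, 5000, 10000, 15000):
        print(step, exponential_threshold(step, 15000))
```

With a low threshold early on, more Gaussians would qualify for densification at the start of training and fewer later; whether this matches the authors' exact formulation is not confirmed by the abstract.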

Improving Geometric Consistency for 360-Degree Neural Radiance Fields in Indoor Scenarios

Mar 17, 2025

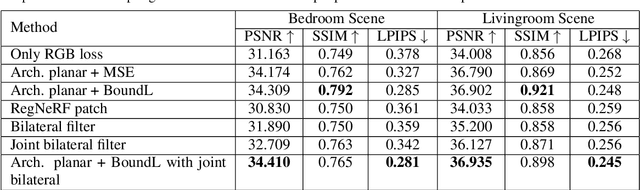

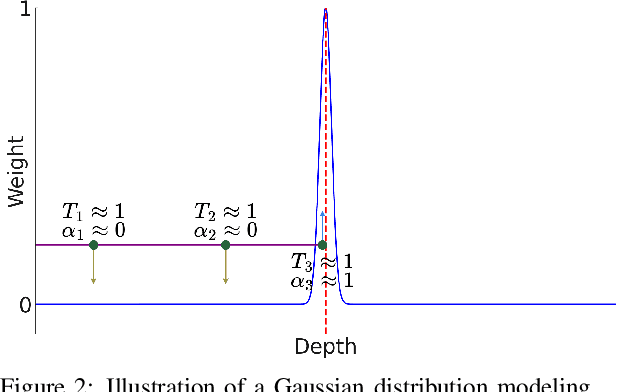

Abstract:Photo-realistic rendering and novel view synthesis play a crucial role in human-computer interaction tasks, from gaming to path planning. Neural Radiance Fields (NeRFs) model scenes as continuous volumetric functions and achieve remarkable rendering quality. However, NeRFs often struggle in large, low-textured areas, producing cloudy artifacts known as "floaters" that reduce scene realism, especially in indoor environments with featureless architectural surfaces like walls, ceilings, and floors. To overcome this limitation, prior work has integrated geometric constraints into the NeRF pipeline, typically leveraging depth information derived from Structure from Motion or Multi-View Stereo. Yet, conventional RGB-feature correspondence methods face challenges in accurately estimating depth in textureless regions, leading to unreliable constraints. This challenge is further complicated in 360-degree "inside-out" views, where sparse visual overlap between adjacent images further hinders depth estimation. In order to address these issues, we propose an efficient and robust method for computing dense depth priors, specifically tailored for large low-textured architectural surfaces in indoor environments. We introduce a novel depth loss function to enhance rendering quality in these challenging, low-feature regions, while complementary depth-patch regularization further refines depth consistency across other areas. Experiments with Instant-NGP on two synthetic 360-degree indoor scenes demonstrate improved visual fidelity with our method compared to standard photometric loss and Mean Squared Error depth supervision.

Automatic Drywall Analysis for Progress Tracking and Quality Control in Construction

Mar 05, 2025

Abstract:Digitalization in the construction industry has become essential, enabling centralized, easy access to all relevant information of a building. Automated systems can facilitate the timely and resource-efficient documentation of changes, which is crucial for key processes such as progress tracking and quality control. This paper presents a method for image-based automated drywall analysis enabling construction progress and quality assessment through on-site camera systems. Our proposed solution integrates a deep learning-based instance segmentation model to detect and classify various drywall elements with an analysis module to cluster individual wall segments, estimate camera perspective distortions, and apply the corresponding corrections. This system extracts valuable information from images, enabling more accurate progress tracking and quality assessment on construction sites. Our main contributions include a fully automated pipeline for drywall analysis, improving instance segmentation accuracy through architecture modifications and targeted data augmentation, and a novel algorithm to extract important information from the segmentation results. Our modified model, enhanced with data augmentation, achieves significantly higher accuracy compared to other architectures, offering more detailed and precise information than existing approaches. Combined with the proposed drywall analysis steps, it enables the reliable automation of construction progress and quality assessment.

Automatic Tissue Differentiation in Parotidectomy using Hyperspectral Imaging

Dec 06, 2024

Abstract:In head and neck surgery, continuous intraoperative tissue differentiation is of great importance to avoid injury to sensitive structures such as nerves and vessels. Hyperspectral imaging (HSI) with neural network analysis could support the surgeon in tissue differentiation. A 3D Convolutional Neural Network with hyperspectral data in the range of $400$–$1000$ nm is used in this work. The acquisition system consisted of two multispectral snapshot cameras creating a stereo-HSI-system. For the analysis, 27 images with annotations of glandular tissue, nerve, muscle, skin and vein in 18 patients undergoing parotidectomy are included. Three patients are removed for evaluation following the leave-one-subject-out principle. The remaining images are used for training, with the data randomly divided into a training group and a validation group. In the validation, an overall accuracy of $98.7\%$ is achieved, indicating robust training. In the evaluation on the excluded patients, an overall accuracy of $83.4\%$ is achieved, showing good detection and identification abilities. The results clearly show that it is possible to achieve robust intraoperative tissue differentiation using hyperspectral imaging. Especially the high sensitivity in parotid or nerve tissue is of clinical importance. It is interesting to note that vein was often confused with muscle. This requires further analysis and shows that a very good and comprehensive data basis is essential. This is a major challenge, especially in surgery.
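
The subject-wise evaluation described above can be sketched as follows: every sample from the held-out patients goes to the evaluation set, and the remaining samples are randomly divided into training and validation groups. This is a minimal sketch under stated assumptions; the function name, the `(patient_id, sample)` data layout, and the 20 % validation fraction are hypothetical and not taken from the paper, which does not specify the granularity or ratio of the random split.

```python
import random


def split_by_patient(samples, held_out_patients, val_fraction=0.2, seed=0):
    """Hypothetical subject-wise split.

    `samples` is a list of (patient_id, sample) pairs. All samples of
    the held-out patients form the evaluation set; the rest are
    shuffled and divided into training and validation groups.
    """
    held_out = set(held_out_patients)
    evaluation = [s for s in samples if s[0] in held_out]
    remaining = [s for s in samples if s[0] not in held_out]

    # Seeded shuffle so the train/validation division is reproducible.
    rng = random.Random(seed)
    rng.shuffle(remaining)

    n_val = int(len(remaining) * val_fraction)
    validation, training = remaining[:n_val], remaining[n_val:]
    return training, validation, evaluation


if __name__ == "__main__":
    data = [(pid, f"image_{pid}_{i}") for pid in range(6) for i in range(2)]
    train, val, test = split_by_patient(data, held_out_patients=[4, 5])
    print(len(train), len(val), len(test))
```

Because the held-out patients never appear in training or validation, the evaluation accuracy measures generalization to unseen subjects, which is consistent with the gap between the validation and evaluation accuracies reported in the abstract.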

Multi-Resolution Generative Modeling of Human Motion from Limited Data

Nov 25, 2024

Abstract:We present a generative model that learns to synthesize human motion from limited training sequences. Our framework provides conditional generation and blending across multiple temporal resolutions. The model adeptly captures human motion patterns by integrating skeletal convolution layers and a multi-scale architecture. Our model contains a set of generative and adversarial networks, along with embedding modules, each tailored for generating motions at specific frame rates while exerting control over their content and details. Notably, our approach also extends to the synthesis of co-speech gestures, demonstrating its ability to generate synchronized gestures from speech inputs, even with limited paired data. Through direct synthesis of SMPL pose parameters, our approach avoids test-time adjustments to fit human body meshes. Experimental results showcase our model's ability to achieve extensive coverage of training examples, while generating diverse motions, as indicated by local and global diversity metrics.

Adaptive and Temporally Consistent Gaussian Surfels for Multi-view Dynamic Reconstruction

Nov 10, 2024

Abstract:3D Gaussian Splatting has recently achieved notable success in novel view synthesis for dynamic scenes and geometry reconstruction in static scenes. Building on these advancements, early methods have been developed for dynamic surface reconstruction by globally optimizing entire sequences. However, reconstructing dynamic scenes with significant topology changes, emerging or disappearing objects, and rapid movements remains a substantial challenge, particularly for long sequences. To address these issues, we propose AT-GS, a novel method for reconstructing high-quality dynamic surfaces from multi-view videos through per-frame incremental optimization. To avoid local minima across frames, we introduce a unified and adaptive gradient-aware densification strategy that integrates the strengths of conventional cloning and splitting techniques. Additionally, we reduce temporal jittering in dynamic surfaces by ensuring consistency in curvature maps across consecutive frames. Our method achieves superior accuracy and temporal coherence in dynamic surface reconstruction, delivering high-fidelity space-time novel view synthesis, even in complex and challenging scenes. Extensive experiments on diverse multi-view video datasets demonstrate the effectiveness of our approach, showing clear advantages over baseline methods. Project page: https://fraunhoferhhi.github.io/AT-GS

EEG-Features for Generalized Deepfake Detection

May 14, 2024

Abstract:Since the advent of Deepfakes in digital media, the development of robust and reliable detection mechanisms is urgently called for. In this study, we explore a novel approach to Deepfake detection by utilizing electroencephalography (EEG) measured from the neural processing of a human participant who viewed and categorized Deepfake stimuli from the FaceForensics++ dataset. These measurements serve as input features to a binary support vector classifier, trained to discriminate between real and manipulated facial images. We examine whether EEG data can inform Deepfake detection and also if it can provide a generalized representation capable of identifying Deepfakes beyond the training domain. Our preliminary results indicate that human neural processing signals can be successfully integrated into Deepfake detection frameworks and hint at the potential for a generalized neural representation of artifacts in computer generated faces. Moreover, our study provides next steps towards the understanding of how digital realism is embedded in the human cognitive system, possibly enabling the development of more realistic digital avatars in the future.
