Andreas Wallin

Cirrus: A Long-range Bi-pattern LiDAR Dataset

Dec 05, 2020

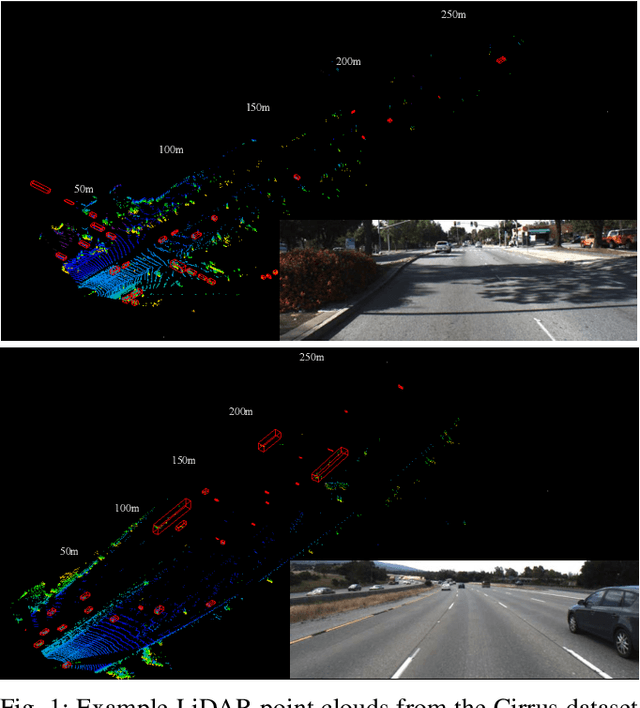

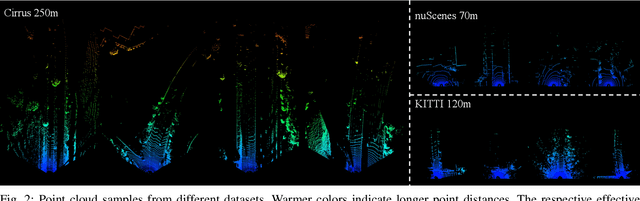

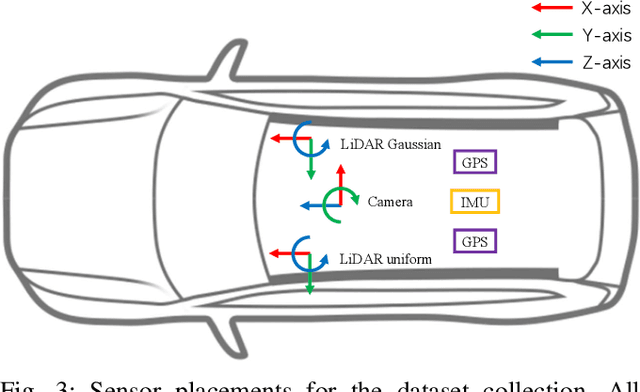

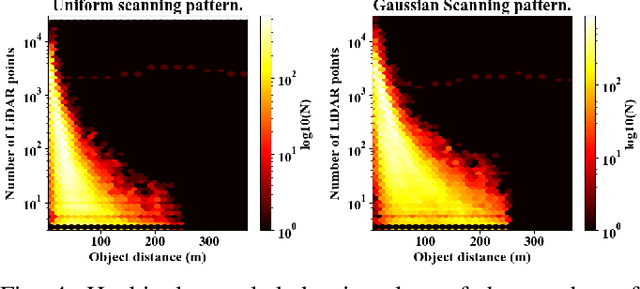

Abstract: In this paper, we introduce Cirrus, a new public long-range bi-pattern LiDAR dataset for autonomous driving tasks such as 3D object detection, which is critical to highway driving and timely decision making. Our platform is equipped with a high-resolution video camera and a pair of LiDAR sensors with a 250-meter effective range, which is significantly longer than that of existing public datasets. We record paired point clouds simultaneously using both Gaussian and uniform scanning patterns. Point density varies significantly across such a long range, and different scanning patterns further diversify object representation in LiDAR. In Cirrus, eight categories of objects are exhaustively annotated in the LiDAR point clouds for the entire effective range. To illustrate the kind of studies supported by this new dataset, we introduce LiDAR model adaptation across different ranges, scanning patterns, and sensor devices. Promising results show the great potential of this new dataset for the robotics and computer vision communities.

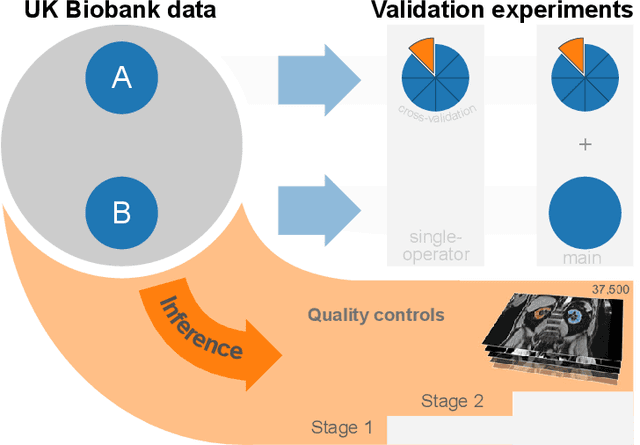

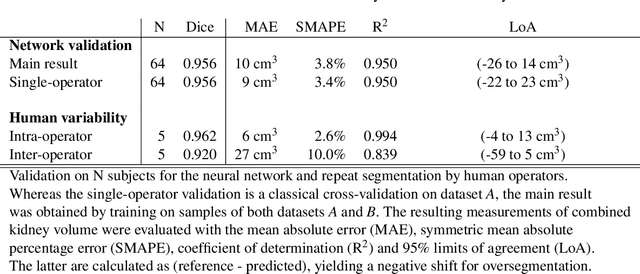

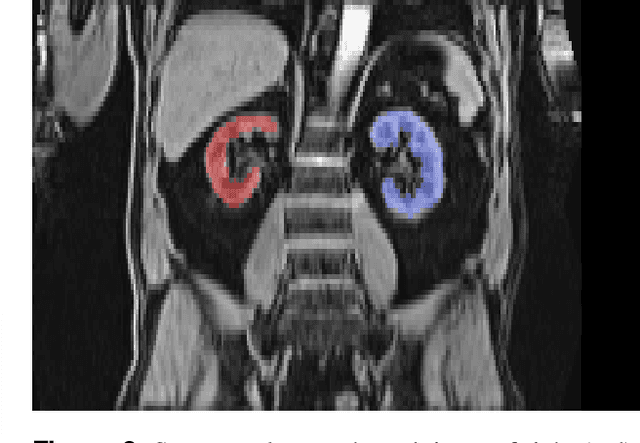

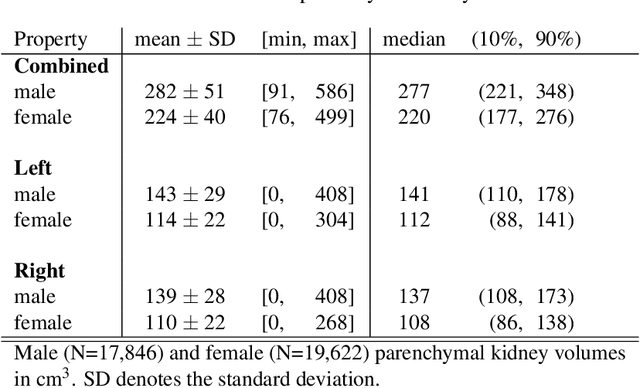

Kidney segmentation in neck-to-knee body MRI of 40,000 UK Biobank participants

Jun 12, 2020

Abstract: The UK Biobank is collecting extensive data on health-related characteristics of over half a million volunteers. The biological samples of blood and urine can provide valuable insight into kidney function, with important links to cardiovascular and metabolic health. Further information on kidney anatomy could be obtained by medical imaging. In contrast to the brain, heart, liver, and pancreas, no dedicated Magnetic Resonance Imaging (MRI) is planned for the kidneys. An image-based assessment is nonetheless feasible in the neck-to-knee body MRI intended for abdominal body composition analysis, which also covers the kidneys. In this work, a pipeline for automated segmentation of parenchymal kidney volume in UK Biobank neck-to-knee body MRI is proposed. The underlying neural network reaches a relative error of 3.8% with a Dice score of 0.956 in validation on 64 subjects, close to the relative error of 2.6% and Dice score of 0.962 for repeated segmentation by one human operator. The released MRI of about 40,000 subjects can be processed within two days, yielding volume measurements of the left and right kidneys. Algorithmic quality ratings enabled the exclusion of outliers and potential failure cases. The resulting measurements can be studied and shared for large-scale investigation of associations and longitudinal changes in parenchymal kidney volume.
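The two metrics quoted in this abstract can be illustrated with a minimal sketch. It assumes binary segmentation masks and takes "relative error" to mean the absolute difference in segmented volume divided by the reference volume; the abstract does not give the exact definition, so that reading is an assumption. The function names `dice_score` and `relative_volume_error` and the toy masks are hypothetical and are not taken from the paper's pipeline.

```python
import numpy as np


def dice_score(pred, ref):
    """Dice overlap 2|A ∩ B| / (|A| + |B|) between two binary masks."""
    pred = np.asarray(pred, dtype=bool)
    ref = np.asarray(ref, dtype=bool)
    total = pred.sum() + ref.sum()
    if total == 0:
        # Both masks empty: treat as perfect agreement (a convention, not a fact from the paper).
        return 1.0
    return 2.0 * np.logical_and(pred, ref).sum() / total


def relative_volume_error(pred, ref, voxel_volume_ml=1.0):
    """Absolute volume difference relative to the reference volume (assumed definition)."""
    v_pred = np.asarray(pred, dtype=bool).sum() * voxel_volume_ml
    v_ref = np.asarray(ref, dtype=bool).sum() * voxel_volume_ml
    return abs(v_pred - v_ref) / v_ref


# Toy example: an 8-voxel reference mask and a prediction that misses one voxel.
ref = np.zeros((4, 4, 4), dtype=bool)
ref[1:3, 1:3, 1:3] = True
pred = ref.copy()
pred[1, 1, 1] = False

print(dice_score(pred, ref))             # 2*7 / (7 + 8)
print(relative_volume_error(pred, ref))  # 1 / 8
```

The two numbers measure different things: a prediction can match the reference volume exactly while overlapping it poorly, which is presumably why the abstract reports both.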

Range Adaptation for 3D Object Detection in LiDAR

Sep 26, 2019

Abstract: LiDAR-based 3D object detection plays a crucial role in modern autonomous driving systems. LiDAR data often exhibit severe changes in properties across different observation ranges. In this paper, we explore cross-range adaptation for 3D object detection using LiDAR, i.e., far-range observations are adapted to near-range. This way, far-range detection is optimized to perform similarly to near-range detection. We adopt a bird's-eye view (BEV) detection framework to perform the proposed model adaptation. Our model adaptation consists of an adversarial global adaptation and a fine-grained local adaptation. The proposed cross-range adaptation framework is validated on three state-of-the-art LiDAR-based object detection networks, and we consistently observe performance improvement on far-range objects, without adding any auxiliary parameters to the model. To the best of our knowledge, this paper is the first attempt to study cross-range LiDAR adaptation for object detection in point clouds. To demonstrate the generality of the proposed adaptation framework, experiments on more challenging cross-device adaptation are further conducted, and a new LiDAR dataset with high-quality annotated point clouds is released to promote future research.

Towards Recovery of Conditional Vectors from Conditional Generative Adversarial Networks

Dec 06, 2017

Abstract: A conditional Generative Adversarial Network allows for generating samples conditioned on certain external information. Being able to recover latent and conditional vectors from a conditional GAN can be potentially valuable in various applications, ranging from image manipulation for entertainment purposes to diagnosis of neural networks for security purposes. In this work, we show that it is possible to recover both latent and conditional vectors from generated images given the generator of a conditional generative adversarial network. Such a recovery is not trivial due to the often multi-layered non-linearity of deep neural networks. Furthermore, the effect of such recovery applied to real natural images is investigated. We discovered that there exists a gap between the recovery performance on generated and real images, which we believe comes from the difference between the generated data distribution and the real data distribution. Experiments are conducted to evaluate the recovered conditional vectors and the images reconstructed from these recovered vectors, both quantitatively and qualitatively, showing promising results.
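The abstract does not say how the vectors are recovered. One common way to invert a known generator is gradient descent on the reconstruction error, sketched below on a toy problem. Everything here is an assumption made for illustration: the single-layer `tanh` generator, the dimensions, the continuous conditional vector, the step size, and the names `generator` and `recover` are all hypothetical and are not the paper's model or procedure.

```python
import numpy as np

rng = np.random.default_rng(0)

Z_DIM, C_DIM, X_DIM = 4, 2, 16
# Toy stand-in for a trained conditional generator: x = tanh(W [z; c]).
W = rng.normal(size=(X_DIM, Z_DIM + C_DIM)) * 0.5


def generator(u):
    """Map the concatenated vector u = [z; c] to a flat 'image'."""
    return np.tanh(W @ u)


def loss_and_grad(u, target):
    """Squared reconstruction error and its gradient with respect to u."""
    y = generator(u)
    r = y - target
    # Chain rule through tanh: d tanh(h) / dh = 1 - tanh(h)^2.
    grad = W.T @ (2.0 * r * (1.0 - y ** 2))
    return float(r @ r), grad


def recover(target, steps=5000, lr=0.01):
    """Plain gradient descent on u, starting from the zero vector."""
    u = np.zeros(Z_DIM + C_DIM)
    for _ in range(steps):
        _, grad = loss_and_grad(u, target)
        u -= lr * grad
    return u


# Generate an image from known vectors, then try to recover them.
true_u = np.concatenate([rng.normal(size=Z_DIM), rng.normal(size=C_DIM)])
target = generator(true_u)

initial_loss, _ = loss_and_grad(np.zeros(Z_DIM + C_DIM), target)
recovered_u = recover(target)
final_loss, _ = loss_and_grad(recovered_u, target)

print("initial loss:", initial_loss)
print("final loss:", final_loss)
z_hat, c_hat = recovered_u[:Z_DIM], recovered_u[Z_DIM:]
```

Even in this toy setting, a low reconstruction error does not guarantee that `z_hat` and `c_hat` match the true vectors. With a deep, multi-layered generator the objective is non-convex, which is consistent with the abstract's remark that the recovery is not trivial.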
