Anab Maulana Barik

Crowdsource, Crawl, or Generate? Creating SEA-VL, a Multicultural Vision-Language Dataset for Southeast Asia

Mar 10, 2025

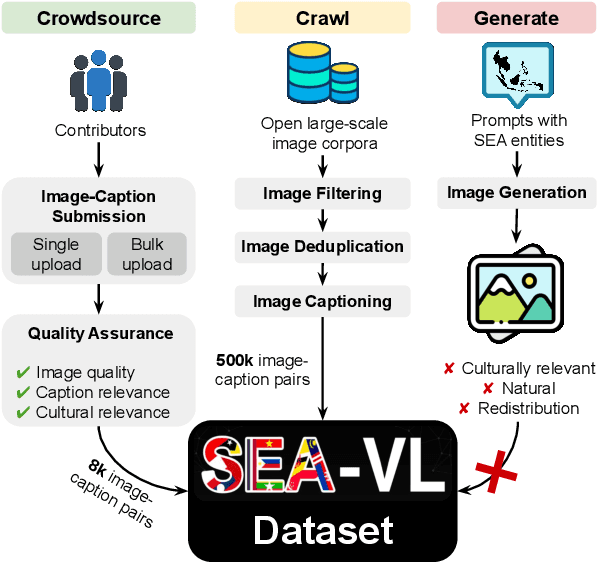

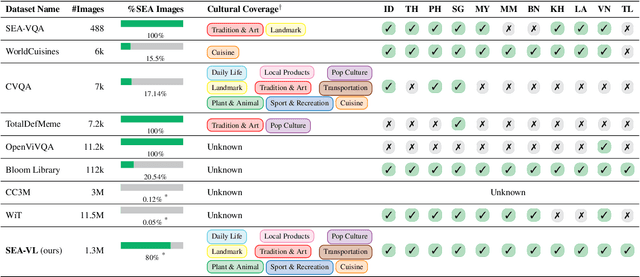

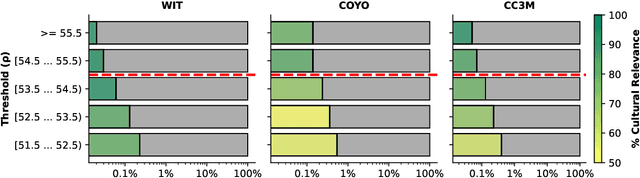

Abstract: Southeast Asia (SEA) is a region of extraordinary linguistic and cultural diversity, yet it remains significantly underrepresented in vision-language (VL) research. This often results in artificial intelligence (AI) models that fail to capture SEA cultural nuances. To fill this gap, we present SEA-VL, an open-source initiative dedicated to developing high-quality, culturally relevant data for SEA languages. By involving contributors from SEA countries, SEA-VL aims to ensure better cultural relevance and diversity, fostering greater inclusivity of underrepresented languages in VL research. Beyond crowdsourcing, our initiative goes one step further by exploring the automatic collection of culturally relevant images through crawling and image generation. First, we find that image crawling achieves approximately 85% cultural relevance while being more cost- and time-efficient than crowdsourcing. Second, despite the substantial progress in generative vision models, synthetic images remain unreliable in accurately reflecting SEA cultures. The generated images often fail to reflect the nuanced traditions and cultural contexts of the region. Collectively, we gather 1.28M SEA culturally relevant images, more than 50 times larger than other existing datasets. Through SEA-VL, we aim to bridge the representation gap in SEA, fostering the development of more inclusive AI systems that authentically represent diverse cultures across SEA.

ChronoFact: Timeline-based Temporal Fact Verification

Oct 19, 2024

Abstract: Automated fact verification plays an essential role in fostering trust in the digital space. Despite the growing interest, the verification of temporal facts has not received much attention in the community. Temporal fact verification brings new challenges: cues of temporal information need to be extracted, and temporal reasoning involving various temporal aspects of the text must be applied. In this work, we propose an end-to-end solution for temporal fact verification that considers the temporal information in claims to obtain relevant evidence sentences and harnesses the power of large language models for temporal reasoning. Recognizing that temporal facts often involve events, we model these events in the claim and evidence sentences. We curate two temporal fact datasets to learn time-sensitive representations that encapsulate not only the semantic relationships among the events, but also their chronological proximity. This allows us to retrieve the top-k relevant evidence sentences and provide the context for a large language model to perform temporal reasoning and output whether a claim is supported or refuted by the retrieved evidence sentences. Experimental results demonstrate that the proposed approach significantly enhances the accuracy of temporal claim verification, thereby advancing the current state of the art in automated fact verification.

Evidence-Based Temporal Fact Verification

Jul 21, 2024

Abstract: Automated fact verification plays an essential role in fostering trust in the digital space. Despite the growing interest, the verification of temporal facts has not received much attention in the community. Temporal fact verification brings new challenges: cues of temporal information need to be extracted, and temporal reasoning involving various temporal aspects of the text must be applied. In this work, we propose an end-to-end solution for temporal fact verification that considers the temporal information in claims to obtain relevant evidence sentences and harnesses the power of large language models for temporal reasoning. Recognizing that temporal facts often involve events, we model these events in the claim and evidence sentences. We curate two temporal fact datasets to learn time-sensitive representations that encapsulate not only the semantic relationships among the events, but also their chronological proximity. This allows us to retrieve the top-k relevant evidence sentences and provide the context for a large language model to perform temporal reasoning and output whether a claim is supported or refuted by the retrieved evidence sentences. Experimental results demonstrate that the proposed approach significantly enhances the accuracy of temporal claim verification, thereby advancing the current state of the art in automated fact verification.
