Alexandre Mendes

Diffusion in Zero-Shot Learning for Environmental Audio

Dec 04, 2024

Abstract: Zero-shot learning enables models to generalize to unseen classes by leveraging semantic information, bridging the gap between training and testing sets with non-overlapping classes. While much research has focused on zero-shot learning in computer vision, the application of these methods to environmental audio remains underexplored, with poor performance in existing studies. Generative methods, which have demonstrated success in computer vision, are notably absent from environmental audio zero-shot learning, where classification-based approaches dominate. To address this gap, this work investigates generative methods for zero-shot learning in environmental audio. Two successful generative models from computer vision are adapted: a cross-aligned and distribution-aligned variational autoencoder (CADA-VAE) and a leveraging invariant side generative adversarial network (LisGAN). Additionally, a novel diffusion model conditioned on class auxiliary data is introduced. The diffusion model generates synthetic data for unseen classes, which is combined with seen-class data to train a classifier. Experiments are conducted on two environmental audio datasets, ESC-50 and FSC22. Results show that the diffusion model significantly outperforms all baseline methods, achieving more than 25% higher accuracy on the ESC-50 test partition. This work establishes the diffusion model as a promising generative approach for zero-shot learning and introduces the first benchmark of generative methods for environmental audio zero-shot learning, providing a foundation for future research in the field. Code is provided at https://github.com/ysims/ZeroDiffusion for the novel ZeroDiffusion method.

Comparing Computing Platforms for Deep Learning on a Humanoid Robot

Jan 20, 2019

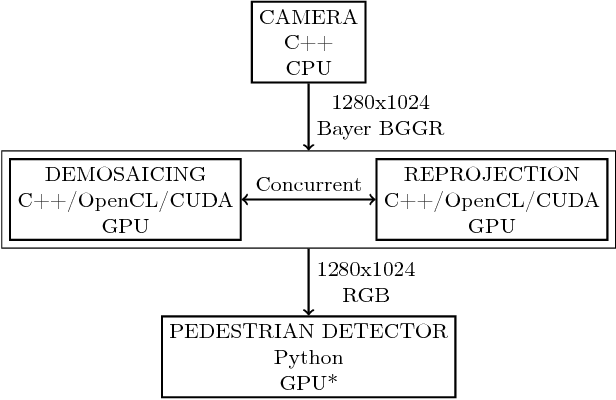

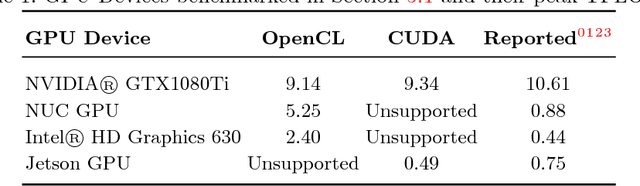

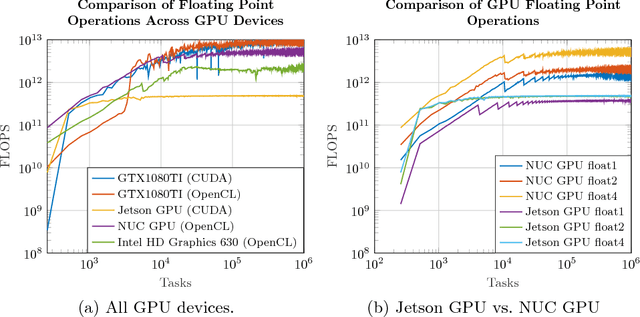

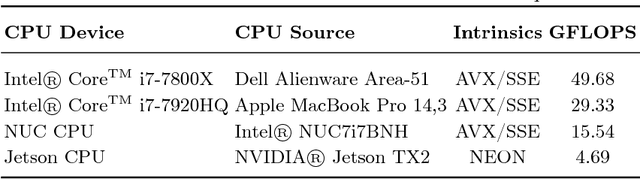

Abstract: The goal of this study is to test two different computing platforms with respect to their suitability for running deep networks as part of a humanoid robot software system. One of the platforms is the CPU-centered Intel NUC7i7BNH and the other is an NVIDIA Jetson TX2 system that puts more emphasis on GPU processing. The experiments addressed a number of benchmarking tasks including pedestrian detection using deep neural networks. Some of the results were unexpected but demonstrate that platforms exhibit both advantages and disadvantages when taking computational performance and electrical power requirements of such a system into account.

* 12 pages, 5 figures

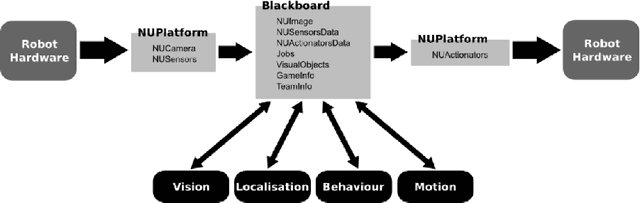

The NUbots Team Description Paper 2015

Feb 11, 2015

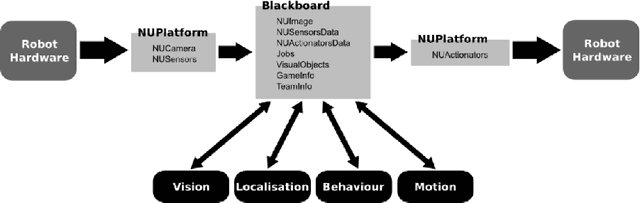

Abstract: The NUbots are an interdisciplinary RoboCup team from The University of Newcastle, Australia. The team has a history of strong contributions in the areas of machine learning and computer vision. The NUbots have participated in RoboCup leagues since 2002, placing first several times in the past. In 2014 the NUbots also partnered with the University of Newcastle Mechatronics Laboratory to participate in the RobotX Marine Robotics Challenge, which resulted in several new ideas and improvements to the NUbots vision system for RoboCup. This paper summarizes the history of the NUbots team, describes the roles and research of the team members, gives an overview of the NUbots' robots, their software system, and several associated research projects.

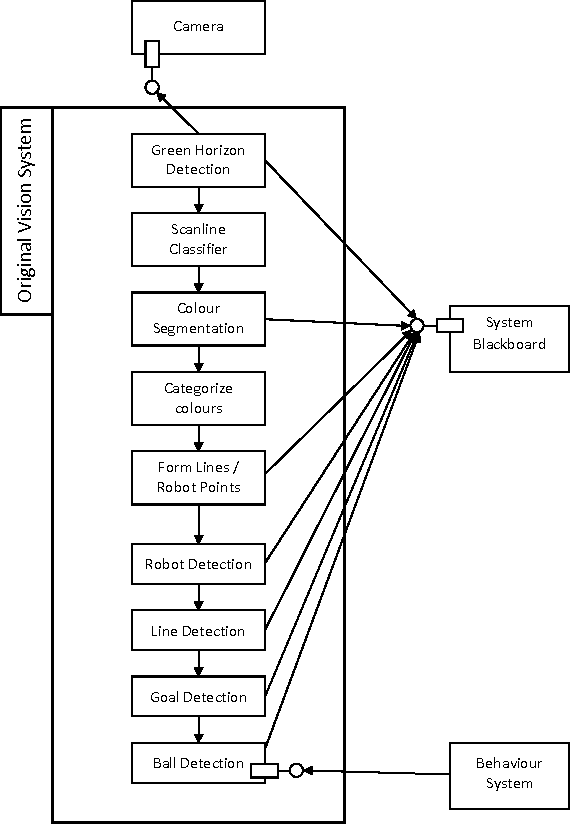

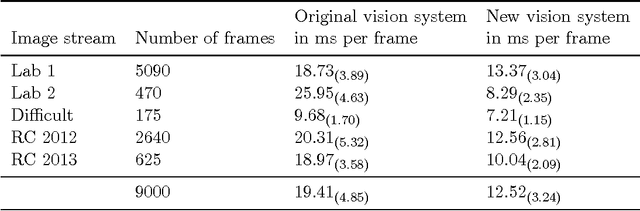

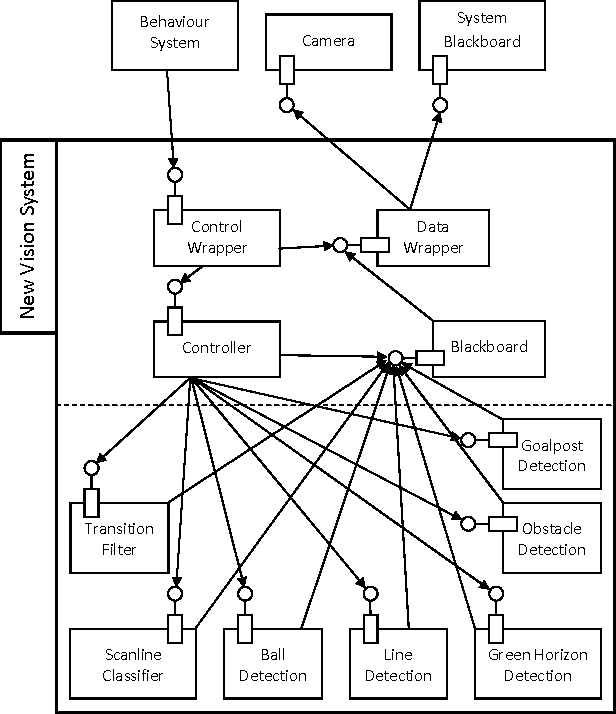

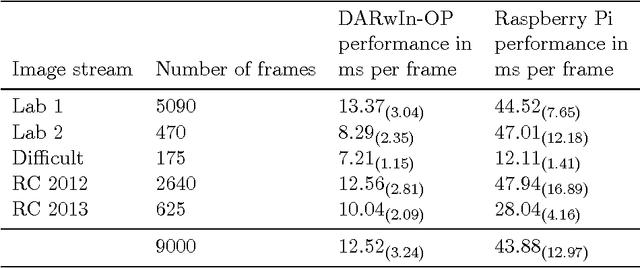

Addressing the non-functional requirements of computer vision systems: A case study

Oct 31, 2014

Abstract: Computer vision plays a major role in the robotics industry, where vision data is frequently used for navigation and high-level decision making. Although there is significant research in algorithms and functional requirements, there is a comparative lack of emphasis on how best to map these abstract concepts onto an appropriate software architecture. In this study, we distinguish between the functional and non-functional requirements of a computer vision system. Using a RoboCup humanoid robot system as a case study, we propose and develop a software architecture that fulfills the latter criteria. The modifiability of the proposed architecture is demonstrated by detailing a number of feature detection algorithms and emphasizing which aspects of the underlying framework were modified to support their integration. To demonstrate portability, we port our vision system (designed for an application-specific DARwIn-OP humanoid robot) to a general-purpose Raspberry Pi computer. We evaluate performance on both platforms and compare them to a vision system optimised for functional requirements only. The architecture and implementation presented in this study provide a highly generalisable framework for computer vision system design that is of particular benefit in research and development, competition and other environments in which rapid system evolution is necessary.

The NUbots Team Description Paper 2014

Mar 27, 2014

Abstract: The NUbots team, from The University of Newcastle, Australia, has had a strong record of success in the RoboCup Standard Platform League since first entering in 2002. The team has also competed within the RoboCup Humanoid Kid-Size League since 2012. The 2014 team brings a renewed focus on software architecture, modularity, and the ability to easily share code. This paper summarizes the history of the NUbots team, describes the roles and research of the team members, gives an overview of the NUbots' robots and software system, and addresses relevant research projects within the Newcastle Robotics Laboratory.
