Alexander Kister

Robust Entropy Search for Safe Efficient Bayesian Optimization

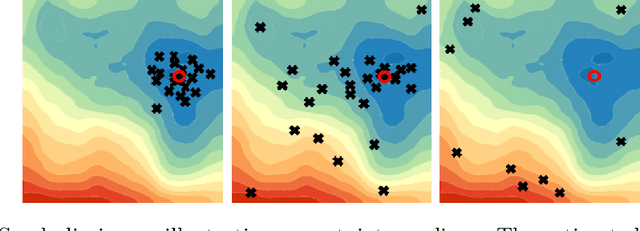

May 29, 2024

Abstract:The practical use of Bayesian Optimization (BO) in engineering applications imposes special requirements: high sampling efficiency on the one hand and finding a robust solution on the other hand. We address the case of adversarial robustness, where all parameters are controllable during the optimization process, but a subset of them is uncontrollable or even adversely perturbed at the time of application. To this end, we develop an efficient information-based acquisition function that we call Robust Entropy Search (RES). We empirically demonstrate its benefits in experiments on synthetic and real-life data. The results show that RES reliably finds robust optima, outperforming state-of-the-art algorithms.

Robustness in Fatigue Strength Estimation

Dec 02, 2022

Abstract:Fatigue strength estimation is a costly manual material characterization process in which state-of-the-art approaches follow a standardized experiment and analysis procedure. In this paper, we examine a modular, Machine Learning-based approach for fatigue strength estimation that is likely to reduce the number of experiments and, thus, the overall experimental costs. Despite its high potential, deployment of a new approach in a real-life lab requires more than the theoretical definition and simulation. Therefore, we study the robustness of the approach against misspecification of the prior and discretization of the specified loads. We identify its applicability and its advantageous behavior over the state-of-the-art methods, potentially reducing the number of costly experiments.

Multi-Agent Neural Rewriter for Vehicle Routing with Limited Disclosure of Costs

Jun 13, 2022

Abstract:We interpret solving the multi-vehicle routing problem as a team Markov game with partially observable costs. For a given set of customers to serve, the playing agents (vehicles) share the common goal of determining the team-optimal agent routes with minimal total cost. Each agent observes only its own cost. Our multi-agent reinforcement learning approach, the so-called multi-agent Neural Rewriter, builds on the single-agent Neural Rewriter to solve the problem by iteratively rewriting solutions. Parallel agent action execution and partial observability require new rewriting rules for the game. We propose introducing a so-called pool into the system, which serves as a collection point for unvisited nodes. It enables agents to act simultaneously and exchange nodes in a conflict-free manner. We realize limited disclosure of agent-specific costs by sharing them only during learning. During inference, each agent acts in a decentralized manner, solely based on its own cost. First empirical results on small problem sizes demonstrate that we reach a performance close to the employed OR-Tools benchmark, which operates in the perfect cost information setting.

Bayesian Optimization for Min Max Optimization

Jul 29, 2021

Abstract:A solution that is only reliable under favourable conditions is hardly a safe solution. Min Max Optimization is an approach that returns optima that are robust against worst-case conditions. We propose algorithms that perform Min Max Optimization in a setting where the function to be optimized is not known a priori and hence has to be learned by experiments. To this end, we extend the Bayesian Optimization setting, which is tailored to maximization problems, to Min Max Optimization problems. While related work extends the two acquisition functions Expected Improvement and Gaussian Process Upper Confidence Bound, we extend the two acquisition functions Entropy Search and Knowledge Gradient. These acquisition functions are able to gain knowledge about the optimum instead of just looking for points that are supposed to be optimal. In our evaluation, we show that these acquisition functions yield better solutions, converging faster to the optimum than the benchmark settings.
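
To make the notion of a worst-case robust optimum concrete, the following minimal sketch evaluates a known toy function on a grid and picks the controllable input whose worst outcome over the perturbation is best. It is not the method of the paper, which learns the function from experiments with a Gaussian process and acquisition functions. The orientation (maximize over `x`, minimize over `theta`), the helper `robust_minmax`, and the objective `toy` are assumptions made for illustration only.

```python
import numpy as np

def robust_minmax(f, xs, thetas):
    """Grid search for argmax over x of (min over theta of f(x, theta)).

    Hypothetical helper: assumes f is cheap and known, unlike the
    Bayesian Optimization setting where f must be learned from data.
    """
    # values[i, j] = f(xs[i], thetas[j])
    values = np.array([[f(x, t) for t in thetas] for x in xs])
    worst_case = values.min(axis=1)       # worst outcome for each x
    best = int(worst_case.argmax())       # x with the best worst case
    return float(xs[best]), float(worst_case[best])

def toy(x, theta):
    # Illustrative objective: the payoff drops as x moves away from theta.
    return -(x - theta) ** 2

xs = np.linspace(0.0, 1.0, 11)       # controllable parameter grid
thetas = np.linspace(0.0, 1.0, 11)   # adversarial parameter grid
x_star, value_star = robust_minmax(toy, xs, thetas)
print(x_star, value_star)
```

For any fixed `theta` the best `x` equals `theta`, but the robust choice hedges against every `theta` in the grid and therefore lands in the middle of the interval.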

Monte Carlo Tree Search for high precision manufacturing

Jul 28, 2021

Abstract:Monte Carlo Tree Search (MCTS) has shown its strength on many deterministic and stochastic problems, but the literature lacks reports of applications to real-world industrial processes. Common reasons are that no efficient simulator of the process is available or that applying MCTS to the complex rules of the process is problematic. In this paper, we apply MCTS to optimize a high-precision manufacturing process that has stochastic and partially observable outcomes. We make use of an expert-knowledge-based simulator and adapt the MCTS default policy to deal with the manufacturing process.

Approaching Neural Network Uncertainty Realism

Jan 08, 2021

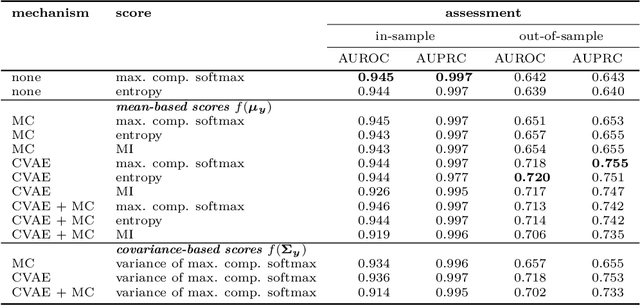

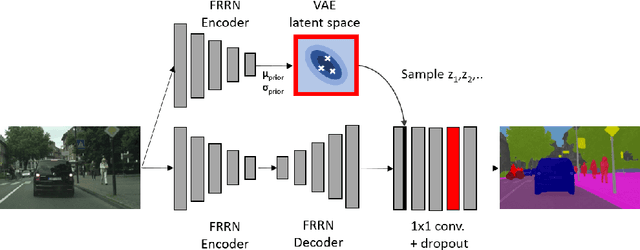

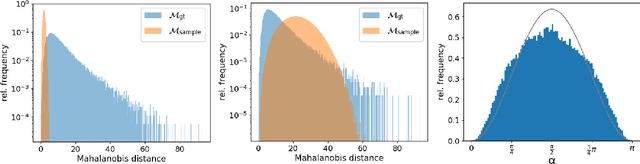

Abstract:Statistical models are inherently uncertain. Quantifying or at least upper-bounding their uncertainties is vital for safety-critical systems such as autonomous vehicles. While standard neural networks do not report this information, several approaches exist to integrate uncertainty estimates into them. Assessing the quality of these uncertainty estimates is not straightforward, as no direct ground truth labels are available. Instead, implicit statistical assessments are required. For regression, we propose to evaluate uncertainty realism (a strict quality criterion) with a Mahalanobis distance-based statistical test. An empirical evaluation reveals the need for uncertainty measures that are appropriate to upper-bound heavy-tailed empirical errors. In addition, we transfer the variational U-Net classification architecture to standard supervised image-to-image tasks. We adapt it to the automotive domain and show that it significantly improves uncertainty realism compared to a plain encoder-decoder model.
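
The idea behind a Mahalanobis distance-based assessment can be sketched as follows. If the prediction errors are Gaussian and the predicted covariances are realistic, the squared Mahalanobis distance of each error follows a chi-squared distribution whose degrees of freedom equal the output dimension. The sketch below is a simple coverage check for two-dimensional outputs, not the statistical test proposed in the paper. The helper names, the synthetic data, and the restriction to two dimensions (where the chi-squared CDF has the closed form 1 - exp(-x / 2)) are assumptions made for illustration.

```python
import numpy as np

def squared_mahalanobis(errors, covs):
    """Squared Mahalanobis distance of each error under its predicted covariance.

    errors: array of shape (n, d); covs: array of shape (n, d, d).
    """
    solved = np.linalg.solve(covs, errors[..., None])[..., 0]
    return np.einsum("nd,nd->n", errors, solved)

def coverage_2d(d2, level=0.95):
    """Fraction of 2-D samples inside the nominal confidence ellipse.

    For d = 2 the chi-squared CDF is 1 - exp(-x / 2), so the
    threshold at `level` is -2 * log(1 - level).
    """
    threshold = -2.0 * np.log(1.0 - level)
    return float(np.mean(d2 <= threshold))

# Synthetic errors with a known covariance (illustrative data only).
rng = np.random.default_rng(0)
n = 2000
true_cov = np.array([[1.0, 0.3], [0.3, 0.5]])
errors = rng.multivariate_normal(np.zeros(2), true_cov, size=n)

# One model reports the true covariance, the other is overconfident.
realistic = np.broadcast_to(true_cov, (n, 2, 2))
overconfident = np.broadcast_to(true_cov / 4.0, (n, 2, 2))

cov_realistic = coverage_2d(squared_mahalanobis(errors, realistic))
cov_overconfident = coverage_2d(squared_mahalanobis(errors, overconfident))
print(cov_realistic, cov_overconfident)
```

With realistic covariances the empirical coverage should sit near the nominal 95 percent, while the overconfident model covers far fewer errors than it claims.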
