Alex Yang

OmniSage: Large Scale, Multi-Entity Heterogeneous Graph Representation Learning

May 01, 2025

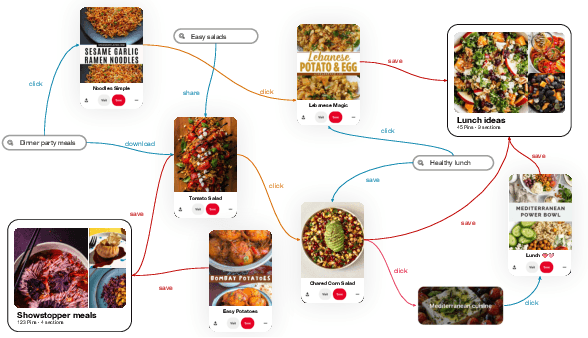

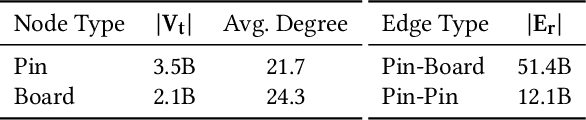

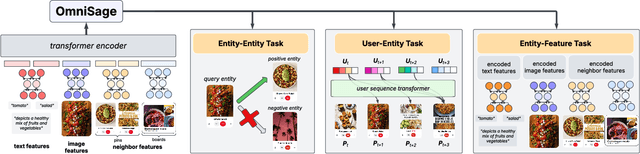

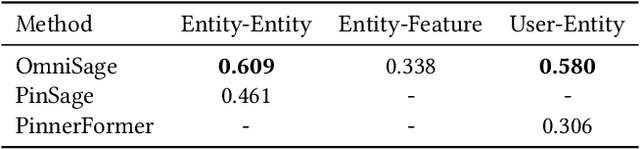

Abstract: Representation learning, the task of learning latent vectors that represent entities, is key to improving search and recommender systems in web applications. Various representation learning methods have been developed, including graph-based approaches for modeling relationships among entities, sequence-based methods for capturing the temporal evolution of user activities, and content-based models for leveraging text and visual content. However, developing a unifying framework that integrates these diverse techniques to support multiple applications remains a significant challenge. This paper presents OmniSage, a large-scale representation framework that learns universal representations for a variety of applications at Pinterest. OmniSage integrates graph neural networks with content-based models and user sequence models by employing multiple contrastive learning tasks to effectively process graph data, user sequence data, and content signals. To support the training and inference of OmniSage, we developed an efficient infrastructure capable of supporting Pinterest graphs with billions of nodes. The universal representations generated by OmniSage have significantly enhanced user experiences on Pinterest, leading to an approximate 2.5% increase in sitewide repins (saves) across five applications. This paper highlights the impact of unifying representation learning methods, and we will open source the OmniSage code by the time of publication.
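
The abstract does not say which contrastive objective OmniSage uses. As a rough illustration of what a single contrastive task can look like, the sketch below computes an InfoNCE-style loss for one anchor embedding; the choice of InfoNCE, the function name `info_nce`, and the `temperature` parameter are assumptions made here for illustration, not details taken from the paper.

```python
import math


def dot(a, b):
    """Dot product of two equal-length vectors given as lists of floats."""
    return sum(x * y for x, y in zip(a, b))


def info_nce(anchor, positive, negatives, temperature=0.1):
    """InfoNCE-style loss for one anchor (hypothetical helper, not from the paper).

    The loss is the negative log-probability of picking the positive out of
    the set {positive} + negatives, with similarities scaled by temperature.
    """
    logits = [dot(anchor, positive) / temperature]
    logits += [dot(anchor, n) / temperature for n in negatives]
    # Log-sum-exp with max subtraction for numerical stability.
    m = max(logits)
    log_denominator = m + math.log(sum(math.exp(l - m) for l in logits))
    return log_denominator - logits[0]


# Toy embeddings: the loss is small when the anchor is closer to the
# positive than to the negative, and large in the opposite case.
aligned = info_nce([1.0, 0.0], [1.0, 0.0], [[0.0, 1.0]])
misaligned = info_nce([1.0, 0.0], [0.0, 1.0], [[1.0, 0.0]])
```

In a multi-task setup like the one the abstract describes, one such loss per signal (graph, user sequence, content) could be summed, but how OmniSage combines its tasks is not stated here.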

Recognizing Image Objects by Relational Analysis Using Heterogeneous Superpixels and Deep Convolutional Features

Aug 02, 2019

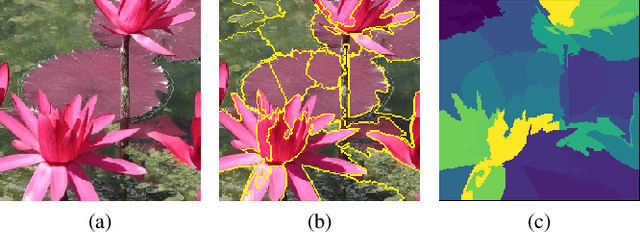

Abstract: Superpixel-based methodologies have become increasingly popular in computer vision, especially when the computation is too expensive in time or memory to perform with a large number of pixels or features. However, rarely is superpixel segmentation examined within the context of deep convolutional neural network architectures. This paper presents a novel neural architecture that exploits the superpixel feature space. The visual feature space is organized using superpixels to provide the neural network with a substructure of the images. As the superpixels associate the visual feature space with parts of the objects in an image, the visual feature space is transformed into a structured vector representation per superpixel. It is shown that it is feasible to learn superpixel features using capsules and it is potentially beneficial to perform image analysis in such a structured manner. This novel deep learning architecture is examined in the context of an image classification task, highlighting explicit interpretability (explainability) of the network's decision making. The results are compared against a baseline deep neural model, as well as among superpixel capsule networks with a variety of hyperparameter settings.

Addressing Complex and Subjective Product-Related Queries with Customer Reviews

Dec 21, 2015

Abstract: Online reviews are often our first port of call when considering products and purchases online. When evaluating a potential purchase, we may have a specific query in mind, e.g. "will this baby seat fit in the overhead compartment of a 747?" or "will I like this album if I liked Taylor Swift's 1989?". To answer such questions we must either wade through huge volumes of consumer reviews hoping to find one that is relevant, or otherwise pose our question directly to the community via a Q/A system. In this paper we hope to fuse these two paradigms: given a large volume of previously answered queries about products, we hope to automatically learn whether a review of a product is relevant to a given query. We formulate this as a machine learning problem using a mixture-of-experts-type framework: each review is an "expert" that gets to vote on the response to a particular query; simultaneously we learn a relevance function such that "relevant" reviews are those that vote correctly. At test time this learned relevance function allows us to surface reviews that are relevant to new queries on demand. We evaluate our system, Moqa, on a novel corpus of 1.4 million questions (and answers) and 13 million reviews. We show quantitatively that it is effective at addressing both binary and open-ended queries, and qualitatively that it surfaces reviews that human evaluators consider to be relevant.
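
The mixture-of-experts idea in this abstract can be sketched for the binary (yes/no) case as follows. This is a minimal illustration under stated assumptions, not Moqa's actual model: the softmax over relevance scores, the sigmoid on each review's vote, and the names `predict_yes` and `top_reviews` are all hypothetical, and the scores are taken as given rather than learned.

```python
import math


def softmax(scores):
    """Turn raw relevance scores into weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def sigmoid(x):
    """Map a raw vote score to a probability of answering 'yes'."""
    return 1.0 / (1.0 + math.exp(-x))


def predict_yes(relevance_scores, vote_scores):
    """Probability of 'yes' as a relevance-weighted average of review votes.

    Each review is one expert: its vote score says which answer it supports,
    and its relevance score says how much that vote should count.
    """
    weights = softmax(relevance_scores)
    return sum(w * sigmoid(v) for w, v in zip(weights, vote_scores))


def top_reviews(reviews, relevance_scores, k=1):
    """Surface the k reviews with the highest relevance for a query."""
    ranked = sorted(zip(relevance_scores, reviews), key=lambda p: p[0], reverse=True)
    return [review for _, review in ranked[:k]]


# Toy query with three reviews; the scores are made up for illustration.
reviews = ["fits under the seat", "great colour", "too wide for carry-on"]
p_yes = predict_yes([2.0, -3.0, 0.5], [4.0, 0.0, -4.0])
best = top_reviews(reviews, [2.0, -3.0, 0.5], k=2)
```

Because the prediction is only correct when highly weighted reviews vote correctly, training such a model end to end pushes relevance toward reviews that actually help answer the query, which is the coupling the abstract describes.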
