Akhil S Anand

CORL: Reinforcement Learning of MILP Policies Solved via Branch and Bound

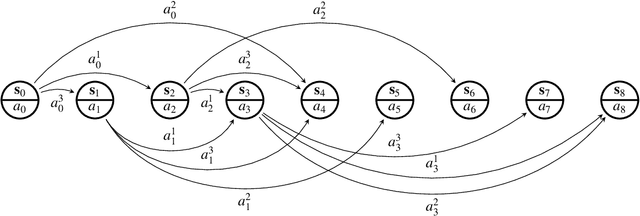

Dec 11, 2025

Abstract:Combinatorial sequential decision-making problems are typically modeled as mixed integer linear programs (MILPs) and solved via branch and bound (B&B) algorithms. The inherent difficulty of modeling MILPs that accurately represent stochastic real-world problems leads to suboptimal performance in the real world. Recently, machine learning methods have been applied to build MILP models for decision quality rather than how accurately they model the real-world problem. However, these approaches typically rely on supervised learning, assume access to true optimal decisions, and use surrogates for the MILP gradients. In this work, we introduce a proof-of-concept CORL framework that fine-tunes an MILP scheme end to end using reinforcement learning (RL) on real-world data to maximize its operational performance. We enable this by casting an MILP solved by B&B as a differentiable stochastic policy compatible with RL. We validate the CORL method in a simple illustrative combinatorial sequential decision-making example.
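For context on why a differentiable stochastic policy matters for RL, the standard policy gradient identity is reproduced here. This is the generic textbook result, not a formula taken from the paper; the symbols $\theta$ (policy parameters, here assumed to be the MILP parameters), $\pi_\theta$, $s$, $a$, and $Q^{\pi_\theta}$ are assumptions introduced for illustration, and the abstract does not say which gradient estimator CORL uses.

$$
\nabla_\theta J(\theta) = \mathbb{E}_{s,\,a \sim \pi_\theta}\left[ \nabla_\theta \log \pi_\theta(a \mid s)\, Q^{\pi_\theta}(s, a) \right]
$$

The identity requires $\log \pi_\theta(a \mid s)$ to be differentiable in $\theta$, which a deterministic solver output does not provide on its own.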

All AI Models are Wrong, but Some are Optimal

Jan 10, 2025

Abstract:AI models that predict the future behavior of a system (a.k.a. predictive AI models) are central to intelligent decision-making. However, decision-making using predictive AI models often results in suboptimal performance. This is primarily because AI models are typically constructed to best fit the data, and hence to predict the most likely future rather than to enable high-performance decision-making. The hope that such prediction enables high-performance decisions is neither guaranteed in theory nor established in practice. In fact, there is increasing empirical evidence that predictive models must be tailored to decision-making objectives for performance. In this paper, we establish formal (necessary and sufficient) conditions that a predictive model (AI-based or not) must satisfy for a decision-making policy established using that model to be optimal. We then discuss their implications for building predictive AI models for sequential decision-making.
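The gap between best-fit prediction and decision quality can be illustrated with a toy ordering problem. This is a minimal sketch and not an example from the paper: the demand samples, the cost weights, and the function names `cost` and `expected_cost` are all hypothetical. It compares ordering the mean demand (the squared-error best fit) with the order that minimizes expected cost.

```python
# Toy illustration (hypothetical data): the best-fit point prediction
# is not necessarily the best input for a decision.

def cost(order, demand, under=4.0, over=1.0):
    """Asymmetric cost: shortages cost more than leftovers (assumed weights)."""
    return under * max(demand - order, 0) + over * max(order - demand, 0)

def expected_cost(order, demands):
    """Average cost of a fixed order over the observed demand samples."""
    return sum(cost(order, d) for d in demands) / len(demands)

demands = [2, 4, 4, 10]                     # hypothetical observations
mean_demand = sum(demands) / len(demands)   # minimizes squared prediction error

# Decision made by trusting the best-fit prediction.
mean_cost = expected_cost(mean_demand, demands)

# Decision chosen directly for performance over a small grid of orders.
best_order = min(range(0, 11), key=lambda q: expected_cost(q, demands))
best_cost = expected_cost(best_order, demands)

print(mean_demand, mean_cost)   # order placed at the mean, and its cost
print(best_order, best_cost)    # cost-minimizing order, and its cost
```

With these numbers the cost-minimizing order differs from the mean demand and has a lower expected cost, even though the mean is the better prediction in the squared-error sense.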

Application of Soft Actor-Critic Algorithms in Optimizing Wastewater Treatment with Time Delays Integration

Nov 27, 2024

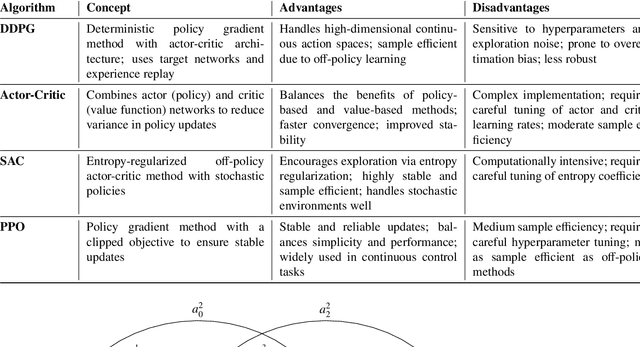

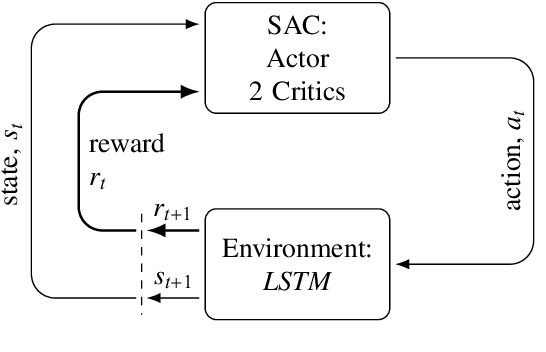

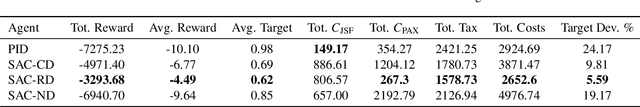

Abstract:Wastewater treatment plants face unique challenges for process control due to their complex dynamics, slow time constants, and stochastic delays in observations and actions. These characteristics make conventional control methods, such as Proportional-Integral-Derivative controllers, suboptimal for achieving efficient phosphorus removal, a critical component of wastewater treatment to ensure environmental sustainability. This study addresses these challenges using a novel deep reinforcement learning approach based on the Soft Actor-Critic algorithm, integrated with a custom simulator designed to model the delayed feedback inherent in wastewater treatment plants. The simulator incorporates Long Short-Term Memory networks for accurate multi-step state predictions, enabling realistic training scenarios. To account for the stochastic nature of delays, agents were trained under three delay scenarios: no delay, constant delay, and random delay. The results demonstrate that incorporating random delays into the reinforcement learning framework significantly improves phosphorus removal efficiency while reducing operational costs. Specifically, the delay-aware agent achieved a 36% reduction in phosphorus emissions, 55% higher reward, 77% lower target deviation from the regulatory limit, and 9% lower total costs than traditional control methods in the simulated environment. These findings underscore the potential of reinforcement learning to overcome the limitations of conventional control strategies in wastewater treatment, providing an adaptive and cost-effective solution for phosphorus removal.
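The three delay scenarios named in the abstract can be sketched as a small observation buffer. This is an illustrative sketch only, not the authors' simulator (which uses LSTM-based state prediction): the class name `DelayedObservation`, its parameters, and the uniform random-delay distribution are assumptions, and only observation delay is modeled, not action delay.

```python
import random
from collections import deque

class DelayedObservation:
    """Hypothetical helper: returns observations that are some steps old."""

    def __init__(self, mode="none", constant_delay=3, max_delay=5, seed=0):
        if mode not in ("none", "constant", "random"):
            raise ValueError(f"unknown delay mode: {mode}")
        self.mode = mode
        self.constant_delay = constant_delay
        self.max_delay = max_delay
        self.rng = random.Random(seed)
        # Keep just enough history to serve the largest possible delay.
        self.history = deque(maxlen=max(constant_delay, max_delay) + 1)

    def push(self, obs):
        """Store the newest observation and return the delayed one."""
        self.history.append(obs)
        if self.mode == "none":
            delay = 0
        elif self.mode == "constant":
            delay = self.constant_delay
        else:
            # Assumed distribution: uniform over 0..max_delay steps.
            delay = self.rng.randint(0, self.max_delay)
        # Early in an episode there is less history than the delay asks for.
        delay = min(delay, len(self.history) - 1)
        return self.history[-1 - delay]

no_delay = DelayedObservation(mode="none")
const = DelayedObservation(mode="constant", constant_delay=2)
rand = DelayedObservation(mode="random", max_delay=3, seed=1)

no_delay_out = [no_delay.push(t) for t in range(4)]
const_out = [const.push(t) for t in range(4)]
rand_out = [rand.push(t) for t in range(10)]
```

An agent trained against the `"random"` mode sees observations of varying age, which is the setting the abstract reports as most beneficial.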

Learning-based MPC from Big Data Using Reinforcement Learning

Jan 04, 2023

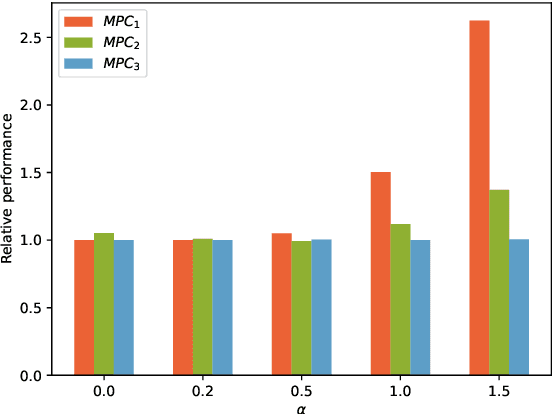

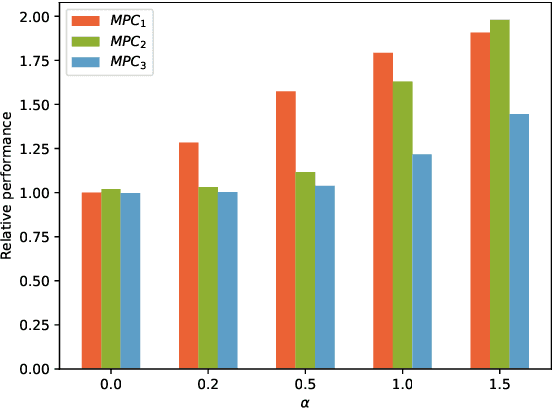

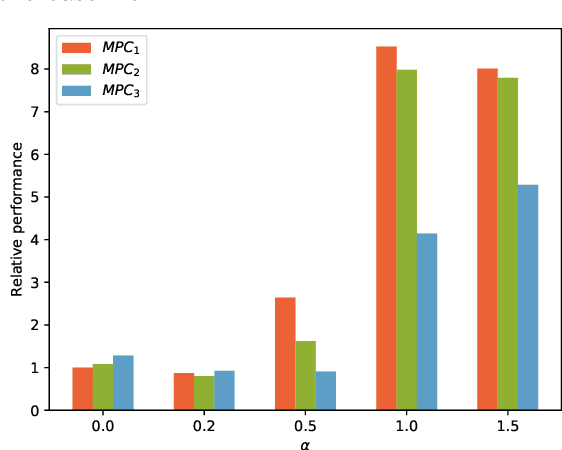

Abstract:This paper presents an approach for learning Model Predictive Control (MPC) schemes directly from data using Reinforcement Learning (RL) methods. The state-of-the-art learning methods use RL to improve the performance of parameterized MPC schemes. However, these learning algorithms are often gradient-based methods that require frequent evaluations of computationally expensive MPC schemes, thereby restricting their use on big datasets. We propose to tackle this issue by using tools from RL to learn a parameterized MPC scheme directly from data in an offline fashion. Our approach derives an MPC scheme without having to solve it over the collected dataset, thereby eliminating the computational complexity of existing techniques for big data. We evaluate the proposed method on three simulated experiments of varying complexity.

Deep Model Predictive Variable Impedance Control

Sep 20, 2022

Abstract:The capability to adapt compliance by varying muscle stiffness is crucial for dexterous manipulation skills in humans. Incorporating compliance in robot motor control is crucial to performing real-world force interaction tasks with human-level dexterity. This work presents a Deep Model Predictive Variable Impedance Controller for compliant robotic manipulation which combines Variable Impedance Control with Model Predictive Control (MPC). A generalized Cartesian impedance model of a robot manipulator is learned using an exploration strategy maximizing the information gain. This model is used within an MPC framework to adapt the impedance parameters of a low-level variable impedance controller to achieve the desired compliance behavior for different manipulation tasks without any retraining or finetuning. The deep Model Predictive Variable Impedance Control approach is evaluated using a Franka Emika Panda robotic manipulator operating on different manipulation tasks in simulations and real experiments. The proposed approach was compared with model-free and model-based reinforcement approaches in variable impedance control for transferability between tasks and performance.
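For readers unfamiliar with impedance control, a common textbook form of a Cartesian impedance law is shown below. It is given as background only: the abstract does not state the paper's generalized impedance model, and the symbols $F$ (commanded Cartesian force), $K$ and $D$ (stiffness and damping matrices), $x$ and $x_d$ (actual and desired end-effector pose) are assumptions introduced here.

$$
F = K\,(x_d - x) + D\,(\dot{x}_d - \dot{x})
$$

In a variable impedance controller, $K$ and $D$ are adjusted online rather than fixed; in the approach described above, that adjustment is made by the MPC layer.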
