Aviaja Anna Hansen

Application of Soft Actor-Critic Algorithms in Optimizing Wastewater Treatment with Time Delays Integration

Nov 27, 2024

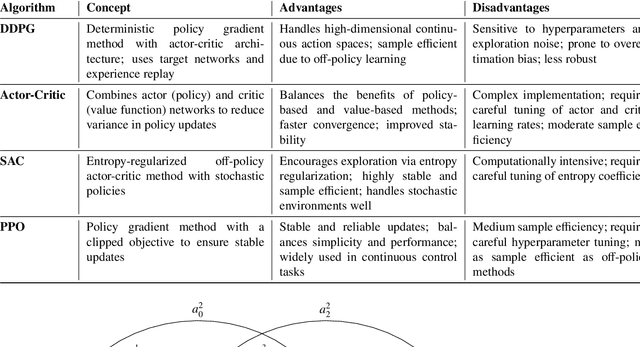

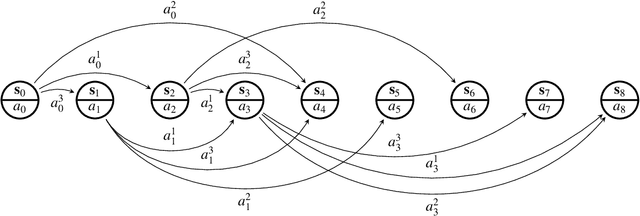

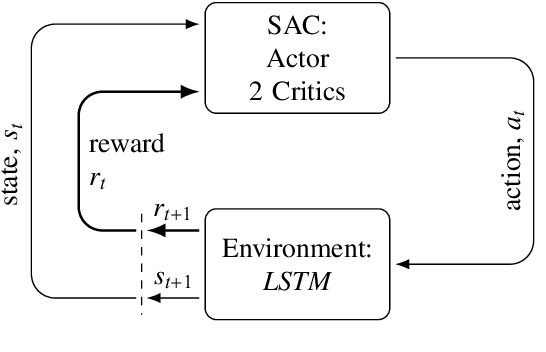

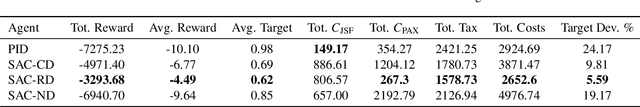

Abstract:Wastewater treatment plants face unique challenges for process control due to their complex dynamics, slow time constants, and stochastic delays in observations and actions. These characteristics make conventional control methods, such as Proportional-Integral-Derivative controllers, suboptimal for achieving efficient phosphorus removal, a critical component of wastewater treatment to ensure environmental sustainability. This study addresses these challenges using a novel deep reinforcement learning approach based on the Soft Actor-Critic algorithm, integrated with a custom simulator designed to model the delayed feedback inherent in wastewater treatment plants. The simulator incorporates Long Short-Term Memory networks for accurate multi-step state predictions, enabling realistic training scenarios. To account for the stochastic nature of delays, agents were trained under three delay scenarios: no delay, constant delay, and random delay. The results demonstrate that incorporating random delays into the reinforcement learning framework significantly improves phosphorus removal efficiency while reducing operational costs. Specifically, the delay-aware agent achieved 36% reduction in phosphorus emissions, 55% higher reward, 77% lower target deviation from the regulatory limit, and 9% lower total costs than traditional control methods in the simulated environment. These findings underscore the potential of reinforcement learning to overcome the limitations of conventional control strategies in wastewater treatment, providing an adaptive and cost-effective solution for phosphorus removal.

Improved Long Short-Term Memory-based Wastewater Treatment Simulators for Deep Reinforcement Learning

Mar 22, 2024Abstract:Even though Deep Reinforcement Learning (DRL) showed outstanding results in the fields of Robotics and Games, it is still challenging to implement it in the optimization of industrial processes like wastewater treatment. One of the challenges is the lack of a simulation environment that will represent the actual plant as accurately as possible to train DRL policies. Stochasticity and non-linearity of wastewater treatment data lead to unstable and incorrect predictions of models over long time horizons. One possible reason for the models' incorrect simulation behavior can be related to the issue of compounding error, which is the accumulation of errors throughout the simulation. The compounding error occurs because the model utilizes its predictions as inputs at each time step. The error between the actual data and the prediction accumulates as the simulation continues. We implemented two methods to improve the trained models for wastewater treatment data, which resulted in more accurate simulators: 1- Using the model's prediction data as input in the training step as a tool of correction, and 2- Change in the loss function to consider the long-term predicted shape (dynamics). The experimental results showed that implementing these methods can improve the behavior of simulators in terms of Dynamic Time Warping throughout a year up to 98% compared to the base model. These improvements demonstrate significant promise in creating simulators for biological processes that do not need pre-existing knowledge of the process but instead depend exclusively on time series data obtained from the system.

Deep Learning Based Simulators for the Phosphorus Removal Process Control in Wastewater Treatment via Deep Reinforcement Learning Algorithms

Jan 23, 2024Abstract:Phosphorus removal is vital in wastewater treatment to reduce reliance on limited resources. Deep reinforcement learning (DRL) is a machine learning technique that can optimize complex and nonlinear systems, including the processes in wastewater treatment plants, by learning control policies through trial and error. However, applying DRL to chemical and biological processes is challenging due to the need for accurate simulators. This study trained six models to identify the phosphorus removal process and used them to create a simulator for the DRL environment. Although the models achieved high accuracy (>97%), uncertainty and incorrect prediction behavior limited their performance as simulators over longer horizons. Compounding errors in the models' predictions were identified as one of the causes of this problem. This approach for improving process control involves creating simulation environments for DRL algorithms, using data from supervisory control and data acquisition (SCADA) systems with a sufficient historical horizon without complex system modeling or parameter estimation.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge