Ajay K. Pandey

Surgery Scene Restoration for Robot Assisted Minimally Invasive Surgery

Sep 06, 2021

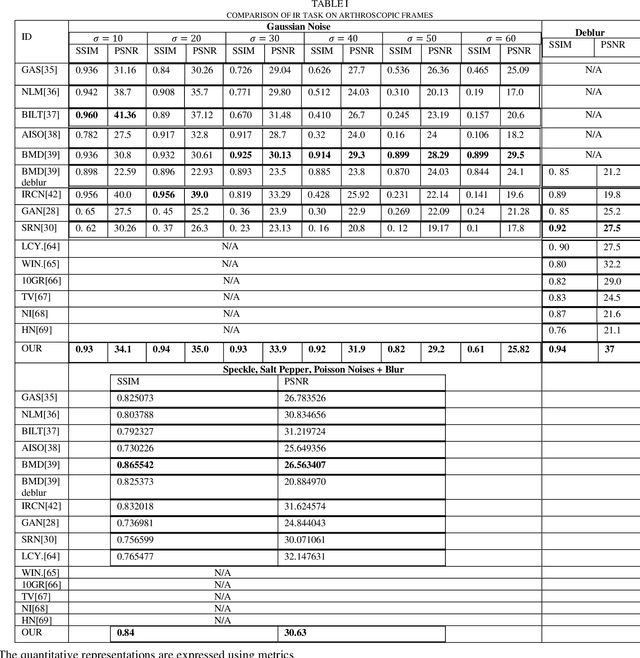

Abstract: Minimally invasive surgery (MIS) offers several advantages, including minimal tissue injury, reduced blood loss, and quick recovery; however, it also limits what the surgeon can do. Alongside factors such as the lack of tactile or haptic feedback, poor visualization of the surgical site is one of the most widely acknowledged limitations and leads to several surgical drawbacks, including unintentional tissue damage. In robot-assisted surgery, the lack of contextual detail in each frame makes vision tasks challenging, in particular tracking tissue and tools, segmenting the scene, and estimating pose and depth. In MIS, the acquired frames are corrupted by various kinds of noise and blurred by motion from different sources. Moreover, in underwater settings such as knee arthroscopy, most of the visible noise and blur originates from the environment and from poor control over illumination and imaging conditions. Additionally, procedures such as automatic white balancing and conversion from raw color information to the standard RGB color space are often absent in MIS because of hardware miniaturization. There is high demand for an online preprocessing framework that can circumvent these drawbacks. Our proposed method restores a latent clean and sharp image in the standard RGB color space from its noisy, blurred, raw observation in a single preprocessing stage.

3D Semantic Mapping from Arthroscopy using Out-of-distribution Pose and Depth and In-distribution Segmentation Training

Jun 10, 2021

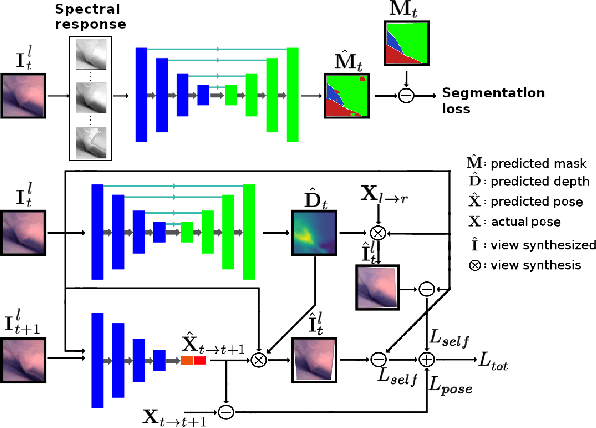

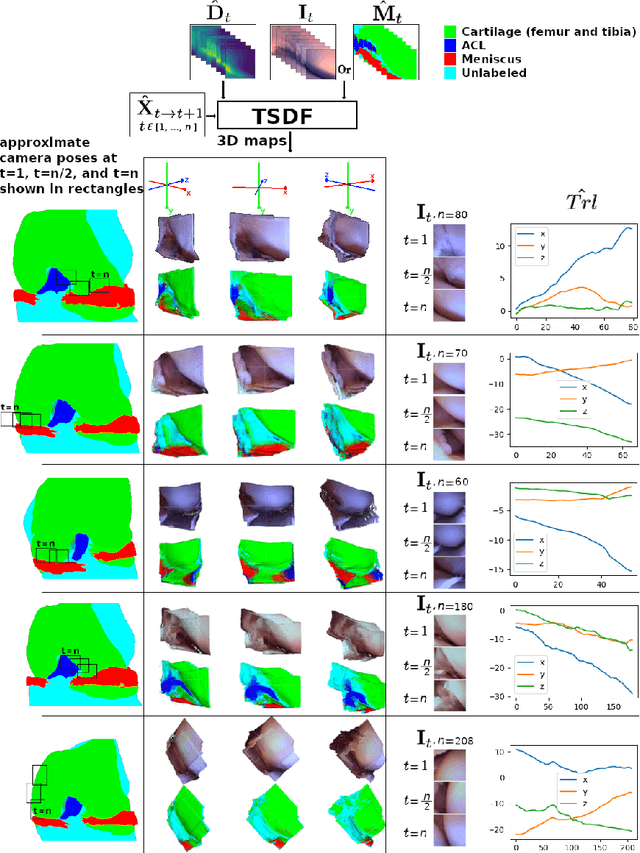

Abstract: Minimally invasive surgery (MIS) has many documented advantages, but the surgeon's limited visual contact with the scene can be problematic. Systems that help surgeons navigate, such as a method that produces a 3D semantic map, can compensate for this limitation. In theory, we can borrow 3D semantic mapping techniques developed for robotics, but this requires solving the following challenges in MIS: 1) semantic segmentation, 2) depth estimation, and 3) pose estimation. In this paper, we propose the first 3D semantic mapping system from knee arthroscopy that solves the three challenges above. Using out-of-distribution non-human datasets, where pose could be labeled, we jointly train depth+pose estimators using self-supervised and supervised losses. Using an in-distribution human knee dataset, we train a fully-supervised semantic segmentation system to label arthroscopic image pixels as femur, ACL, or meniscus. Taking test images from human knees, we combine the results from these two systems to automatically create 3D semantic maps of the human knee. The result of this work opens the pathway to intraoperative 3D semantic mapping, registration with pre-operative data, and robotic-assisted arthroscopy.
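To make the combination step concrete, here is a minimal sketch of how a per-pixel depth map, a per-pixel label map, and a camera pose could be fused into labelled 3D points. It assumes a pinhole camera with intrinsics `K` and a 4x4 camera-to-world transform `T_world_cam`; the function name, the integer class ids, and the zero-depth-means-invalid convention are illustrative assumptions, not details taken from the paper.

```python
import numpy as np

def backproject_labelled_depth(depth, labels, K, T_world_cam):
    """Lift an HxW depth map and HxW class labels to labelled 3D world points.

    Assumptions (hypothetical, for illustration): pinhole intrinsics K (3x3),
    camera-to-world pose T_world_cam (4x4), depth <= 0 marks invalid pixels.
    """
    h, w = depth.shape
    # Pixel grid: u indexes columns, v indexes rows.
    u, v = np.meshgrid(np.arange(w), np.arange(h))
    pix = np.stack([u.ravel(), v.ravel(), np.ones(h * w)], axis=0)  # 3 x N
    # Viewing rays in the camera frame, scaled by the predicted depth.
    pts_cam = (np.linalg.inv(K) @ pix) * depth.ravel()
    # Move the points into the world frame with the estimated pose.
    pts_h = np.vstack([pts_cam, np.ones((1, h * w))])
    pts_world = (T_world_cam @ pts_h)[:3].T  # N x 3
    valid = depth.ravel() > 0
    return pts_world[valid], labels.ravel()[valid]
```

Calling this once per frame and concatenating the outputs would give a labelled point cloud; a practical system would also need to fuse overlapping observations, which this sketch leaves out.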

Self-supervised Depth Estimation to Regularise Semantic Segmentation in Knee Arthroscopy

Jul 05, 2020

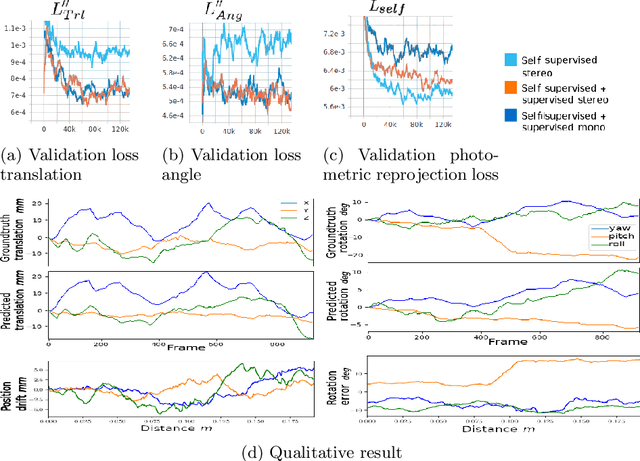

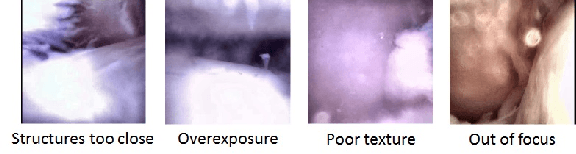

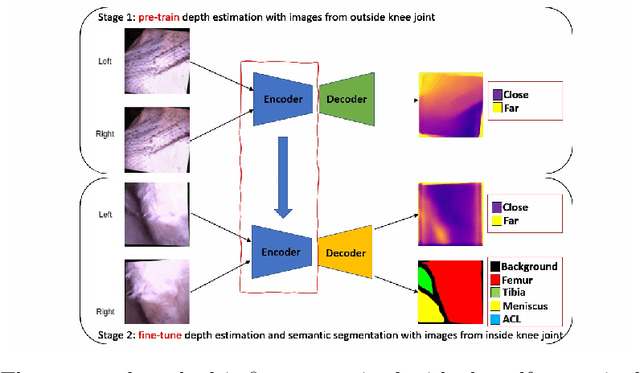

Abstract: Intra-operative automatic semantic segmentation of knee joint structures can improve surgeons' situational awareness during knee arthroscopy. However, poor imaging conditions (e.g., low texture and overexposure) make automatic semantic segmentation challenging, which explains the scarce literature on this topic. In this paper, we propose a novel self-supervised monocular depth estimation method to regularise the training of semantic segmentation in knee arthroscopy. To further regularise the depth estimation, we propose pre-training the model on clean images of routine objects captured by the stereo arthroscope (rich in texture and free of the poor imaging conditions). We fine-tune this model to produce both the semantic segmentation and the self-supervised monocular depth using stereo arthroscopic images taken from inside the knee. Using a data set containing 3868 arthroscopic images captured during cadaveric knee arthroscopy with semantic segmentation annotations, 2000 stereo image pairs of cadaveric knee arthroscopy, and 2150 stereo image pairs of routine objects, we show that our semantic segmentation regularised by self-supervised depth estimation is more accurate than a state-of-the-art semantic segmentation approach trained exclusively with semantic segmentation annotations.
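One common way to use depth as a regulariser is to add a self-supervised stereo reconstruction term to the segmentation loss. The sketch below assumes that form: pixel-wise cross-entropy plus a weighted L1 photometric error between the left image and the right image resampled with the predicted disparity. The abstract does not give the actual loss, so the function names, the grayscale images, and the `depth_weight` value are all hypothetical.

```python
import numpy as np

def cross_entropy(logits, target):
    """Mean pixel-wise cross-entropy; logits is HxWxC, target is HxW integer labels."""
    shifted = logits - logits.max(axis=-1, keepdims=True)  # for numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))
    h, w = target.shape
    picked = log_probs[np.arange(h)[:, None], np.arange(w)[None, :], target]
    return -picked.mean()

def warp_right_to_left(right, disparity):
    """Resample the right image at x - d(x) to synthesise the left view (grayscale HxW)."""
    h, w = right.shape
    xs = np.arange(w, dtype=float)
    # np.interp clamps samples that fall outside the image to the border value.
    return np.stack([np.interp(xs - disparity[r], xs, right[r]) for r in range(h)])

def joint_loss(logits, target, left, right, disparity, depth_weight=0.5):
    """Segmentation loss plus a weighted stereo photometric term (assumed form)."""
    photometric = np.abs(left - warp_right_to_left(right, disparity)).mean()
    return cross_entropy(logits, target) + depth_weight * photometric
```

The photometric term needs no depth labels, only stereo pairs, which is what makes it self-supervised; published methods typically add smoothness and occlusion handling that are omitted here.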

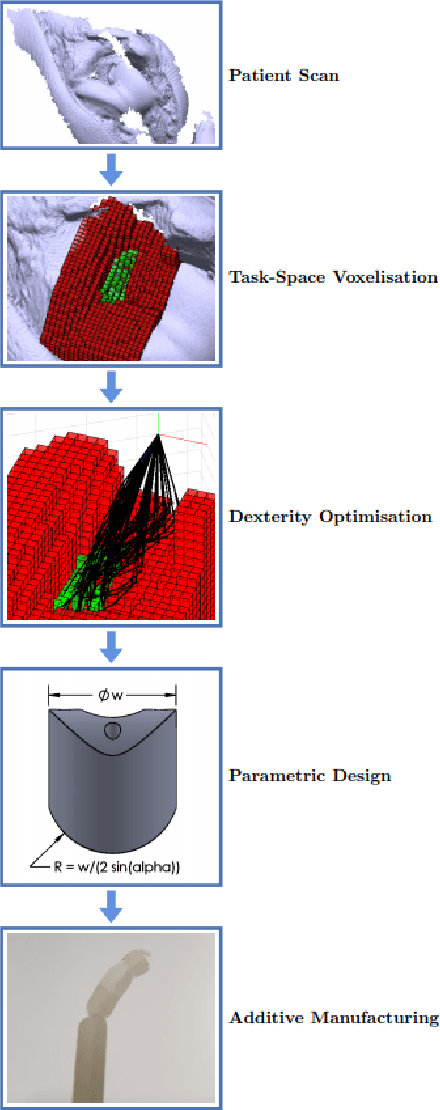

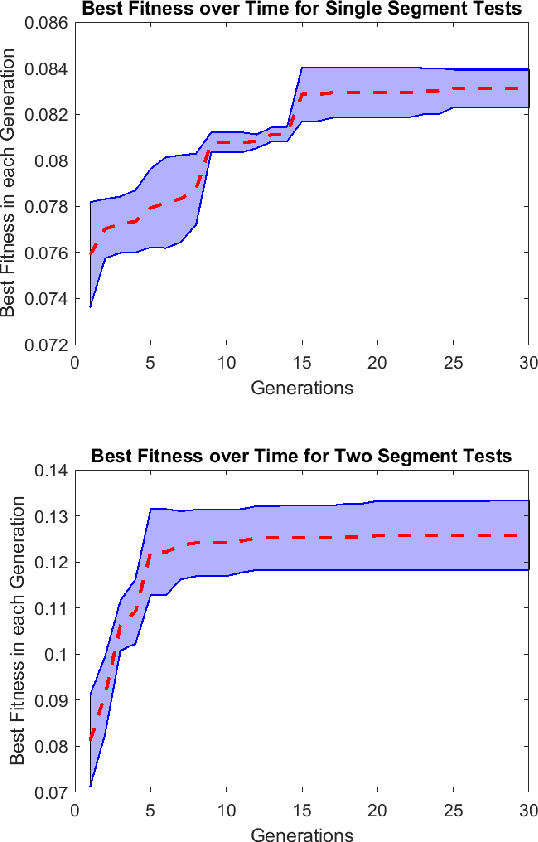

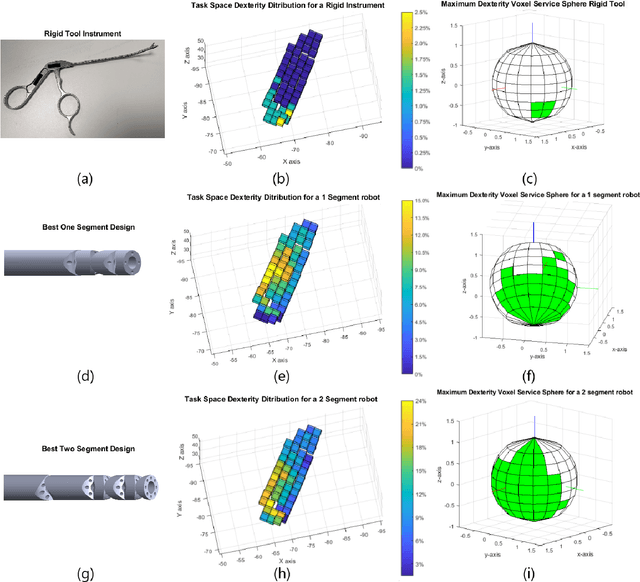

Optimal Dexterity for a Snake-like Surgical Manipulator using Patient-specific Task-space Constraints in a Computational Design Algorithm

Mar 06, 2019

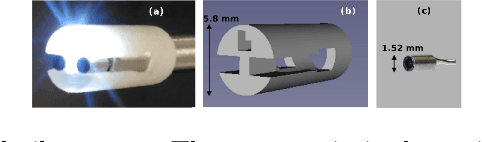

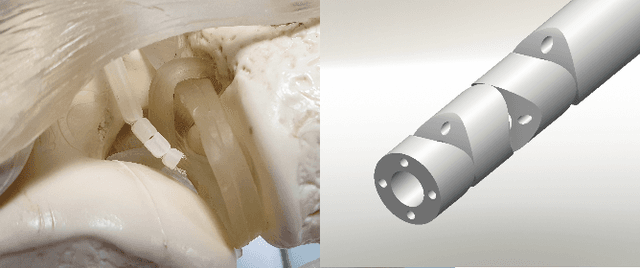

Abstract: Tendon-driven snake-like arms have been used to create highly dexterous continuum robots that can bend around anatomical obstacles to access clinical targets. In this paper, we propose a design algorithm for developing patient-specific surgical continuum manipulators optimized for orientational dexterity constrained by task-space obstacles. The algorithm uses a sampling-based approach to find the dexterity distribution in a workspace discretized into voxels. The orientational dexterity measured in the region of interest in the task space forms a fitness function that is optimized through differential evolution. This was implemented in the design of a tendon-driven manipulator for knee arthroscopy. The results showed a feasible design that achieves significantly better dexterity than a rigid tool. This highlights the potential of the proposed method for designing dexterous surgical manipulators in the field.
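The loop described above (sample configurations, bin the reached tip poses into voxels, score the region of interest, optimize with differential evolution) can be sketched on a toy problem. Everything below is an illustrative assumption rather than the paper's model: a planar chain of constant-curvature segments, segment lengths as the only design variables, a 2D grid instead of 3D voxels, no obstacle checking, and a basic DE/rand/1/bin loop.

```python
import numpy as np

def tip_pose(lengths, bends):
    """Planar constant-curvature chain: return tip x, y and heading angle."""
    x = y = theta = 0.0
    for L, phi in zip(lengths, bends):
        if abs(phi) < 1e-9:          # straight segment
            dx, dy = L, 0.0
        else:                        # circular arc of radius L / phi
            r = L / phi
            dx, dy = r * np.sin(phi), r * (1.0 - np.cos(phi))
        x += np.cos(theta) * dx - np.sin(theta) * dy
        y += np.sin(theta) * dx + np.cos(theta) * dy
        theta += phi
    return x, y, theta

def dexterity(lengths, roi, samples=500, cell=0.01, bins=8, max_bend=np.pi / 2, seed=0):
    """Fraction of (cell, orientation-bin) pairs in the ROI reached by sampled poses."""
    x0, y0, nx, ny = roi             # ROI origin and size in cells (hypothetical layout)
    rng = np.random.default_rng(seed)  # same samples for every design
    reached = set()
    for bends in rng.uniform(-max_bend, max_bend, size=(samples, len(lengths))):
        x, y, theta = tip_pose(lengths, bends)
        ix, iy = int(np.floor((x - x0) / cell)), int(np.floor((y - y0) / cell))
        if 0 <= ix < nx and 0 <= iy < ny:
            ib = int((theta % (2 * np.pi)) / (2 * np.pi) * bins) % bins
            reached.add((ix, iy, ib))
    return len(reached) / (nx * ny * bins)

def differential_evolution(fitness, bounds, rng, pop_size=10, generations=5, F=0.7, CR=0.9):
    """Maximise fitness with a basic DE/rand/1/bin loop."""
    lo, hi = np.array(bounds, dtype=float).T
    pop = rng.uniform(lo, hi, size=(pop_size, len(lo)))
    scores = np.array([fitness(p) for p in pop])
    for _ in range(generations):
        for i in range(pop_size):
            others = [j for j in range(pop_size) if j != i]
            a, b, c = pop[rng.choice(others, size=3, replace=False)]
            mutant = np.clip(a + F * (b - c), lo, hi)
            cross = rng.random(len(lo)) < CR
            cross[rng.integers(len(lo))] = True   # keep at least one mutant gene
            trial = np.where(cross, mutant, pop[i])
            s = fitness(trial)
            if s >= scores[i]:                    # greedy selection
                pop[i], scores[i] = trial, s
    best = int(np.argmax(scores))
    return pop[best], scores[best]

# Toy run: two segments, each 2 to 6 cm long, ROI of 4x4 one-centimetre cells.
roi = (0.05, -0.02, 4, 4)
best, score = differential_evolution(
    lambda p: dexterity(p, roi), [(0.02, 0.06)] * 2, np.random.default_rng(1)
)
```

Reusing the same random samples for every candidate design keeps the fitness deterministic, so differences between designs are not masked by sampling noise.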
