Ahmed Shoyeb Raihan

Confidence Adjusted Surprise Measure for Active Resourceful Trials (CA-SMART): A Data-driven Active Learning Framework for Accelerating Material Discovery under Resource Constraints

Mar 27, 2025

Abstract:Accelerating the discovery and manufacturing of advanced materials with specific properties is a critical yet formidable challenge due to the vast search space, the high cost of experiments, and the time-intensive nature of material characterization. In recent years, active learning, where a surrogate machine learning (ML) model mimics the scientific discovery process of a human scientist, has emerged as a promising approach to address these challenges by guiding experimentation toward high-value outcomes with a limited budget. Among the diverse active learning philosophies, the concept of surprise (capturing the divergence between expected and observed outcomes) has demonstrated significant potential to drive experimental trials and refine predictive models. Scientific discovery often stems from surprise, making it a natural driver of the search process. Despite its promise, prior studies leveraging surprise metrics such as Shannon and Bayesian surprise lack mechanisms to account for prior confidence, leading to excessive exploration of uncertain regions that may not yield useful information. To address this, we propose the Confidence-Adjusted Surprise Measure for Active Resourceful Trials (CA-SMART), a novel Bayesian active learning framework tailored for optimizing data-driven experimentation. At a high level, CA-SMART incorporates Confidence-Adjusted Surprise (CAS) to dynamically balance exploration and exploitation by amplifying surprises in regions where the model is more certain while discounting them in highly uncertain areas. We evaluated CA-SMART on two benchmark functions (Six-Hump Camelback and Griewank) and in predicting the fatigue strength of steel. The results demonstrate superior accuracy and efficiency compared to traditional surprise metrics, standard Bayesian Optimization (BO) acquisition functions, and conventional ML methods.
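
For readers unfamiliar with the baseline metrics named above, the sketch below computes Shannon surprise, the negative log-likelihood of an observation, under an assumed Gaussian predictive distribution. It illustrates only the baseline, not the CAS measure, whose formula is not given in the abstract; the function name and the Gaussian assumption are illustrative choices.

```python
import math


def shannon_surprise(observed, predicted_mean, predicted_std):
    """Negative log-likelihood of `observed` under N(predicted_mean, predicted_std^2).

    Assumption: the surrogate model's prediction is Gaussian.
    """
    variance = predicted_std ** 2
    squared_error = (observed - predicted_mean) ** 2
    return 0.5 * math.log(2.0 * math.pi * variance) + squared_error / (2.0 * variance)


# The same prediction error of 1.0 under a confident and an uncertain model.
surprise_confident = shannon_surprise(1.0, 0.0, 0.5)
surprise_uncertain = shannon_surprise(1.0, 0.0, 2.0)
```

In this example the same error is more surprising when the predictive standard deviation is small, which is the kind of confidence dependence the abstract discusses.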

In-Situ Melt Pool Characterization via Thermal Imaging for Defect Detection in Directed Energy Deposition Using Vision Transformers

Nov 18, 2024

Abstract:Directed Energy Deposition (DED) offers significant potential for manufacturing complex and multi-material parts. However, internal defects such as porosity and cracks can compromise mechanical properties and overall performance. This study focuses on in-situ monitoring and characterization of melt pools associated with porosity, aiming to improve defect detection and quality control in DED-printed parts. Traditional machine learning approaches for defect identification rely on extensive labeled datasets, often scarce and expensive to generate in real-world manufacturing. To address this, our framework employs self-supervised learning on unlabeled melt pool data using a Vision Transformer-based Masked Autoencoder (MAE) to produce highly representative embeddings. These fine-tuned embeddings are leveraged via transfer learning to train classifiers on a limited labeled dataset, enabling the effective identification of melt pool anomalies. We evaluate two classifiers: (1) a Vision Transformer (ViT) classifier utilizing the fine-tuned MAE Encoder's parameters and (2) the fine-tuned MAE Encoder combined with an MLP classifier head. Our framework achieves overall accuracy ranging from 95.44% to 99.17% and an average F1 score exceeding 80%, with the ViT Classifier slightly outperforming the MAE Encoder Classifier. This demonstrates the scalability and cost-effectiveness of our approach for automated quality control in DED, effectively detecting defects with minimal labeled data.

A Data-Efficient Sequential Learning Framework for Melt Pool Defect Classification in Laser Powder Bed Fusion

Nov 16, 2024

Abstract:Ensuring the quality and reliability of Metal Additive Manufacturing (MAM) components is crucial, especially in the Laser Powder Bed Fusion (L-PBF) process, where melt pool defects such as keyhole, balling, and lack of fusion can significantly compromise structural integrity. This study presents SL-RF+ (Sequentially Learned Random Forest with Enhanced Sampling), a novel Sequential Learning (SL) framework for melt pool defect classification designed to maximize data efficiency and model accuracy in data-scarce environments. SL-RF+ uses a Random Forest (RF) classifier combined with Least Confidence Sampling (LCS) and Sobol sequence-based synthetic sampling to iteratively select the most informative samples to learn from, thereby refining the model's decision boundaries with minimal labeled data. Results show that SL-RF+ outperformed traditional machine learning models across key performance metrics, including accuracy, precision, recall, and F1 score, demonstrating significant robustness in identifying melt pool defects with limited data. This framework efficiently captures complex defect patterns by focusing on high-uncertainty regions in the process parameter space, ultimately achieving superior classification performance without the need for extensive labeled datasets. While this study utilizes pre-existing experimental data, SL-RF+ shows strong potential for real-world applications in pure sequential learning settings, where data is acquired and labeled incrementally, mitigating the high costs and time constraints of sample acquisition.
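
Least Confidence Sampling, as commonly defined, scores each unlabeled sample by one minus its highest predicted class probability and queries the highest-scoring samples. The sketch below shows that selection step only; the function names and the probability values are hypothetical, and it does not reproduce the Sobol-based sampling or any other part of SL-RF+.

```python
def least_confidence_scores(class_probabilities):
    """Return 1 - max(p) for each row of predicted class probabilities."""
    return [1.0 - max(row) for row in class_probabilities]


def select_most_uncertain(class_probabilities, batch_size=1):
    """Return indices of the `batch_size` samples with the highest score."""
    scores = least_confidence_scores(class_probabilities)
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return ranked[:batch_size]


# Made-up class probabilities for four unlabeled samples and three defect classes.
pool_probabilities = [
    [0.90, 0.05, 0.05],
    [0.40, 0.35, 0.25],
    [0.60, 0.30, 0.10],
    [0.34, 0.33, 0.33],
]
selected = select_most_uncertain(pool_probabilities, batch_size=2)
```

In a sequential loop, the selected samples would be labeled, added to the training set, and the classifier refit before the next round.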

A Comprehensive Approach to Carbon Dioxide Emission Analysis in High Human Development Index Countries using Statistical and Machine Learning Techniques

May 01, 2024

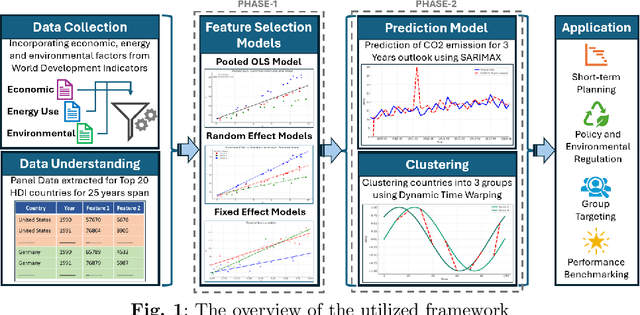

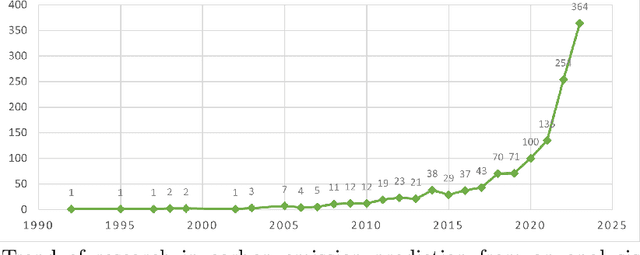

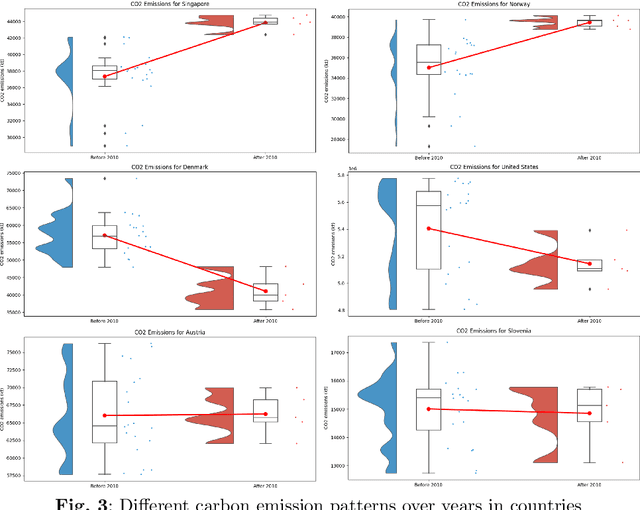

Abstract:Reducing carbon dioxide (CO2) emissions is vital at both global and national levels, given their significant role in exacerbating climate change. CO2 emissions, stemming from a variety of industrial and economic activities, are major contributors to the greenhouse effect and global warming, posing substantial obstacles in addressing climate issues. It is imperative to forecast CO2 emission trends and classify countries based on their emission patterns to effectively mitigate worldwide carbon emissions. This paper presents an in-depth comparative study on the determinants of CO2 emissions in twenty countries with a high Human Development Index (HDI), exploring factors related to economy, environment, energy use, and renewable resources over a span of 25 years. The study unfolds in two distinct phases: initially, statistical techniques such as Ordinary Least Squares (OLS), fixed effects, and random effects models are applied to pinpoint significant determinants of CO2 emissions. Following this, the study leverages supervised and unsupervised machine learning (ML) methods to further scrutinize and understand the factors influencing CO2 emissions. Seasonal AutoRegressive Integrated Moving Average with eXogenous variables (SARIMAX), a supervised ML model, is first used to predict emission trends from historical data, offering practical insights for policy formulation. Subsequently, Dynamic Time Warping (DTW), an unsupervised learning approach, is used to group countries by similar emission patterns. The dual-phase approach utilized in this study significantly improves the accuracy of CO2 emission predictions while also providing a deeper insight into global emission trends. By adopting this thorough analytical framework, nations can develop more focused and effective carbon reduction policies, playing a vital role in the global initiative to combat climate change.
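
Dynamic Time Warping measures how similar two series are while allowing them to be stretched or compressed in time. The sketch below is the classic dynamic-programming form with an absolute-difference cost; the function name and the example series are made up for illustration, and the abstract does not say which DTW variant or clustering step the study used.

```python
def dtw_distance(series_a, series_b):
    """Classic DTW distance between two numeric sequences."""
    n, m = len(series_a), len(series_b)
    inf = float("inf")
    # cost[i][j] = minimal cumulative cost of aligning the first i and j points.
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            step = abs(series_a[i - 1] - series_b[j - 1])
            cost[i][j] = step + min(
                cost[i - 1][j],      # repeat a point of series_b
                cost[i][j - 1],      # repeat a point of series_a
                cost[i - 1][j - 1],  # advance both series
            )
    return cost[n][m]


# Made-up series: the second repeats one value, the third is shifted upward.
same_shape = dtw_distance([1, 2, 3], [1, 2, 2, 3])
shifted = dtw_distance([1, 2, 3], [2, 3, 4])
```

Pairwise distances of this kind can then be passed to a clustering method to group series with similar shapes.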

An Augmented Surprise-guided Sequential Learning Framework for Predicting the Melt Pool Geometry

Jan 10, 2024

Abstract:Metal Additive Manufacturing (MAM) has reshaped the manufacturing industry, offering benefits like intricate design, minimal waste, rapid prototyping, material versatility, and customized solutions. However, its full industry adoption faces hurdles, particularly in achieving consistent product quality. A crucial aspect for MAM's success is understanding the relationship between process parameters and melt pool characteristics. Integrating Artificial Intelligence (AI) into MAM is essential. Traditional machine learning (ML) methods, while effective, depend on large datasets to capture complex relationships, a significant challenge in MAM due to the extensive time and resources required for dataset creation. Our study introduces a novel surprise-guided sequential learning framework, SurpriseAF-BO, signaling a significant shift in MAM. This framework uses an iterative, adaptive learning process, modeling the dynamics between process parameters and melt pool characteristics with limited data, a key benefit in MAM's cyber manufacturing context. Compared to traditional ML models, our sequential learning method shows enhanced predictive accuracy for melt pool dimensions. Further improving our approach, we integrated a Conditional Tabular Generative Adversarial Network (CTGAN) into our framework, forming the CT-SurpriseAF-BO. This produces synthetic data resembling real experimental data, improving learning effectiveness. This enhancement boosts predictive precision without requiring additional physical experiments. Our study demonstrates the power of advanced data-driven techniques in cyber manufacturing and the substantial impact of sequential AI and ML, particularly in overcoming MAM's traditional challenges.

Accelerating material discovery with a threshold-driven hybrid acquisition policy-based Bayesian optimization

Nov 16, 2023

Abstract:Advancements in materials play a crucial role in technological progress. However, the process of discovering and developing materials with desired properties is often impeded by substantial experimental costs, extensive resource utilization, and lengthy development periods. To address these challenges, modern approaches often employ machine learning (ML) techniques such as Bayesian Optimization (BO), which streamline the search for optimal materials by iteratively selecting experiments that are most likely to yield beneficial results. However, traditional BO methods, while beneficial, often struggle with balancing the trade-off between exploration and exploitation, leading to sub-optimal performance in material discovery processes. This paper introduces a novel Threshold-Driven UCB-EI Bayesian Optimization (TDUE-BO) method, which dynamically integrates the strengths of Upper Confidence Bound (UCB) and Expected Improvement (EI) acquisition functions to optimize the material discovery process. Unlike classical BO, our method focuses on efficiently navigating the high-dimensional material design space (MDS). TDUE-BO begins with an exploration-focused UCB approach, ensuring a comprehensive initial sweep of the MDS. As the model gains confidence, indicated by reduced uncertainty, it transitions to the more exploitative EI method, focusing on promising areas identified earlier. The UCB-to-EI switching policy, guided by continuous monitoring of the model uncertainty during each step of sequential sampling, navigates the MDS more efficiently while ensuring rapid convergence. The effectiveness of TDUE-BO is demonstrated through its application on three different material datasets, showing significantly better approximation and optimization performance over the EI and UCB-based BO methods in terms of the RMSE scores and convergence efficiency, respectively.
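
The switching idea described above can be sketched with the standard UCB and EI formulas for a maximization problem. The switching rule used here (compare the mean predictive standard deviation with a fixed threshold) is an assumption made for illustration, as are the function names and the `kappa` default; the abstract does not state the exact criterion TDUE-BO uses.

```python
import math


def upper_confidence_bound(mean, std, kappa=2.0):
    """UCB acquisition value: predicted mean plus an exploration bonus."""
    return mean + kappa * std


def expected_improvement(mean, std, best_so_far):
    """Closed-form EI for a Gaussian prediction (maximization)."""
    if std <= 0.0:
        return max(mean - best_so_far, 0.0)
    z = (mean - best_so_far) / std
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))
    return (mean - best_so_far) * cdf + std * pdf


def choose_next_candidate(means, stds, best_so_far, uncertainty_threshold):
    """Pick a candidate index, using UCB while uncertainty is high and EI otherwise.

    Assumed rule: switch on the mean predictive standard deviation.
    """
    average_std = sum(stds) / len(stds)
    if average_std > uncertainty_threshold:
        policy = "UCB"
        scores = [upper_confidence_bound(m, s) for m, s in zip(means, stds)]
    else:
        policy = "EI"
        scores = [expected_improvement(m, s, best_so_far) for m, s in zip(means, stds)]
    best_index = max(range(len(scores)), key=scores.__getitem__)
    return policy, best_index


# Made-up surrogate predictions for three candidate materials.
early = choose_next_candidate([0.2, 0.5, 0.4], [0.5, 0.1, 0.3], 0.45, 0.2)
late = choose_next_candidate([0.2, 0.5, 0.4], [0.05, 0.05, 0.05], 0.45, 0.2)
```

With high uncertainty the sketch favors the candidate with the largest exploration bonus; once uncertainty drops, it favors the candidate most likely to beat the best observed value.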

Strategic Data Augmentation with CTGAN for Smart Manufacturing: Enhancing Machine Learning Predictions of Paper Breaks in Pulp-and-Paper Production

Nov 15, 2023

Abstract:A significant challenge for predictive maintenance in the pulp-and-paper industry is the infrequency of paper breaks during the production process. In this article, operational data is analyzed from a paper manufacturing machine in which paper breaks are relatively rare but have a high economic impact. Utilizing a dataset comprising 18,398 instances derived from a quality assurance protocol, we address the scarcity of break events (124 cases) that poses a challenge for machine learning predictive models. With the help of Conditional Tabular Generative Adversarial Networks (CTGAN) and Synthetic Minority Oversampling Technique (SMOTE), we implement a novel data augmentation framework. This method not only ensures that the synthetic data mirrors the distribution of the real operational data but also seeks to enhance the performance metrics of predictive modeling. Before and after the data augmentation, we evaluate three different machine learning algorithms: Decision Trees (DT), Random Forest (RF), and Logistic Regression (LR). Utilizing the CTGAN-enhanced dataset, our study achieved significant improvements in predictive maintenance performance metrics. The efficacy of CTGAN in addressing data scarcity was evident, with the models' detection of machine breaks (Class 1) improving by over 30% for Decision Trees, 20% for Random Forest, and nearly 90% for Logistic Regression. With this methodological advancement, this study contributes to industrial quality control and maintenance scheduling by addressing rare event prediction in manufacturing processes.

A Data Driven Sequential Learning Framework to Accelerate and Optimize Multi-Objective Manufacturing Decisions

Apr 18, 2023

Abstract:Manufacturing advanced materials and products with a specific property or combination of properties is often warranted. To achieve this, it is crucial to find the optimal recipe or processing conditions that can generate the ideal combination of these properties. Most of the time, a sufficient number of experiments is needed to generate a Pareto front. However, manufacturing experiments are usually costly, and even conducting a single experiment can be a time-consuming process. It is therefore critical to determine the optimal location for data collection to gain the most comprehensive understanding of the process. Sequential learning is a promising approach to actively learn from the ongoing experiments, iteratively update the underlying optimization routine, and adapt the data collection process on the go. This paper presents a novel data-driven Bayesian optimization framework that utilizes sequential learning to efficiently optimize complex systems with multiple conflicting objectives. Additionally, this paper proposes a novel metric for evaluating multi-objective data-driven optimization approaches. This metric considers both the quality of the Pareto front and the amount of data used to generate it. The proposed framework is particularly beneficial in practical applications where acquiring data can be expensive and resource intensive. To demonstrate the effectiveness of the proposed algorithm and metric, the algorithm is evaluated on a manufacturing dataset. The results indicate that the proposed algorithm can achieve the actual Pareto front while processing significantly less data. This implies that the proposed data-driven framework can lead to similar manufacturing decisions with reduced costs and time.
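
A Pareto front is the set of outcomes that no other outcome beats in every objective at once. The sketch below extracts that set from a list of measured points, assuming every objective is to be minimized; the function names and the example points are hypothetical, and this is not the paper's optimization framework or its proposed metric.

```python
def dominates(a, b):
    """True if `a` is no worse than `b` in every objective and better in at least one.

    Assumption: all objectives are minimized.
    """
    no_worse = all(x <= y for x, y in zip(a, b))
    strictly_better = any(x < y for x, y in zip(a, b))
    return no_worse and strictly_better


def pareto_front(points):
    """Return the points that are not dominated by any other point."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


# Made-up outcomes of five experiments with two conflicting objectives.
experiments = [(1, 5), (2, 3), (3, 4), (4, 1), (5, 5)]
front = pareto_front(experiments)
```

This brute-force check compares every pair of points, which is adequate for the small experiment counts typical of costly manufacturing trials.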

A Bi-LSTM Autoencoder Framework for Anomaly Detection -- A Case Study of a Wind Power Dataset

Mar 17, 2023

Abstract:Anomalies refer to data points or events that deviate from normal and homogeneous events, which can include fraudulent activities, network infiltrations, equipment malfunctions, process changes, or other significant but infrequent events. Prompt detection of such events can prevent potential losses in terms of finances, information, and human resources. With the advancement of computational capabilities and the availability of large datasets, anomaly detection has become a major area of research. Among these, anomaly detection in time series has gained more attention recently due to the added complexity imposed by the time dimension. This study presents a novel framework for time series anomaly detection using a combination of Bidirectional Long Short Term Memory (Bi-LSTM) architecture and Autoencoder. The Bi-LSTM network, which comprises two unidirectional LSTM networks, can analyze the time series data from both directions and thus effectively discover the long-term dependencies hidden in the sequential data. Meanwhile, the Autoencoder mechanism helps to establish the optimal threshold beyond which an event can be classified as an anomaly. To demonstrate the effectiveness of the proposed framework, it is applied to a real-world multivariate time series dataset collected from a wind farm. The Bi-LSTM Autoencoder model achieved a classification accuracy of 96.79% and outperformed more commonly used LSTM Autoencoder models.

Winning through Collaboration by Applying Federated Learning in Manufacturing Industry

Feb 27, 2023

Abstract:In manufacturing settings, data collection and analysis are often time-consuming, challenging, and costly. This hinders the use of advanced machine learning and data-driven methods, which require a substantial amount of offline training data to generate good results. It is particularly challenging for small manufacturers who do not share the resources of a large enterprise. Recently, with the introduction of the Internet of Things (IoT), data can be collected in an integrated manner across the factory in real-time, sent to the cloud for advanced analysis, and used to update the machine learning model sequentially. Nevertheless, small manufacturers face two obstacles in reaping the benefits of IoT: they may be unable to afford or generate enough data to operate a private cloud, and they may be hesitant to share their raw data with a public cloud. Federated learning (FL) is an emerging concept of collaborative learning that can help small-scale industries address these issues and learn from each other without sacrificing their privacy. It can bring together diverse and geographically dispersed manufacturers under the same analytics umbrella to create a win-win situation. However, the widespread adoption of FL across multiple manufacturing organizations remains a significant challenge. This work aims to identify and illustrate these challenges and provide potential solutions to overcome them.
