Advait Jain

TensorFlow Lite Micro: Embedded Machine Learning on TinyML Systems

Oct 20, 2020

Abstract: Deep learning inference on embedded devices is a burgeoning field with myriad applications because tiny embedded devices are omnipresent. But we must overcome major challenges before we can benefit from this opportunity. Embedded processors are severely resource constrained. Their nearest mobile counterparts exhibit at least a 100x to 1,000x difference in compute capability, memory availability, and power consumption. As a result, the machine-learning (ML) models and associated ML inference framework must not only execute efficiently but also operate in a few kilobytes of memory. Also, the embedded devices' ecosystem is heavily fragmented. To maximize efficiency, system vendors often omit many features that commonly appear in mainstream systems, including dynamic memory allocation and virtual memory, that allow for cross-platform interoperability. The hardware comes in many flavors (e.g., instruction-set architecture and FPU support, or lack thereof). We introduce TensorFlow Lite Micro (TF Micro), an open-source ML inference framework for running deep-learning models on embedded systems. TF Micro tackles the efficiency requirements imposed by embedded-system resource constraints and the fragmentation challenges that make cross-platform interoperability nearly impossible. The framework adopts a unique interpreter-based approach that provides flexibility while overcoming these challenges. This paper explains the design decisions behind TF Micro and describes its implementation details. Also, we present an evaluation to demonstrate its low resource requirement and minimal run-time performance overhead.

Manipulation in Clutter with Whole-Arm Tactile Sensing

Apr 23, 2013

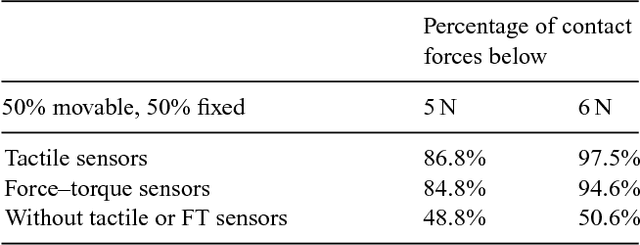

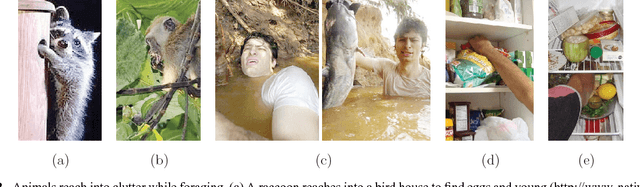

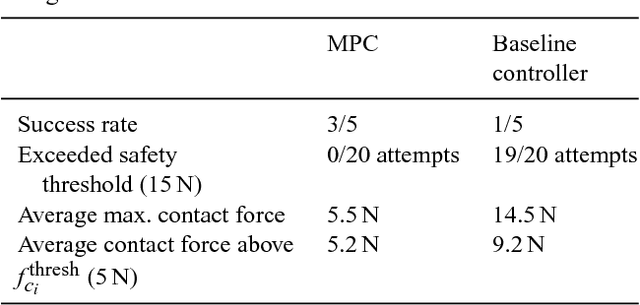

Abstract: We begin this paper by presenting our approach to robot manipulation, which emphasizes the benefits of making contact with the world across the entire manipulator. We assume that low contact forces are benign, and focus on the development of robots that can control their contact forces during goal-directed motion. Inspired by biology, we assume that the robot has low-stiffness actuation at its joints, and tactile sensing across the entire surface of its manipulator. We then describe a novel controller that exploits these assumptions. The controller only requires haptic sensing and does not need an explicit model of the environment prior to contact. It also handles multiple contacts across the surface of the manipulator. The controller uses model predictive control (MPC) with a time horizon of length one, and a linear quasi-static mechanical model that it constructs at each time step. We show that this controller enables both real and simulated robots to reach goal locations in high clutter with low contact forces. Our experiments include tests using a real robot with a novel tactile sensor array on its forearm reaching into simulated foliage and a cinder block. In our experiments, robots made contact across their entire arms while pushing aside movable objects, deforming compliant objects, and perceiving the world.

* This is the first version of a paper that we submitted to the International Journal of Robotics Research on December 31, 2011, and uploaded to our website on January 16, 2012.
