Manipulation in Clutter with Whole-Arm Tactile Sensing


Apr 23, 2013

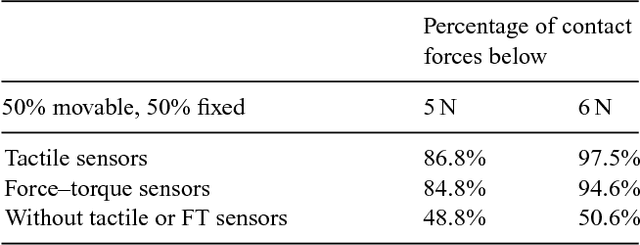

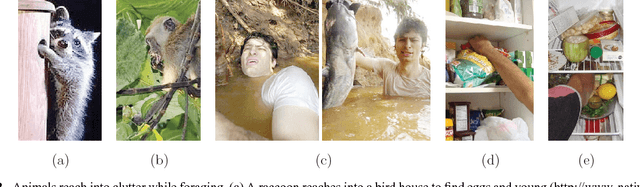

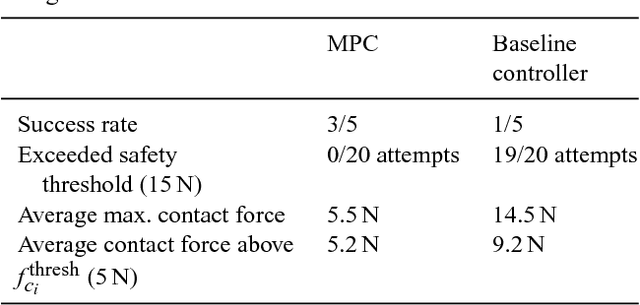

We begin this paper by presenting our approach to robot manipulation, which emphasizes the benefits of making contact with the world across the entire manipulator. We assume that low contact forces are benign, and focus on the development of robots that can control their contact forces during goal-directed motion. Inspired by biology, we assume that the robot has low-stiffness actuation at its joints, and tactile sensing across the entire surface of its manipulator. We then describe a novel controller that exploits these assumptions. The controller only requires haptic sensing and does not need an explicit model of the environment prior to contact. It also handles multiple contacts across the surface of the manipulator. The controller uses model predictive control (MPC) with a time horizon of length one, and a linear quasi-static mechanical model that it constructs at each time step. We show that this controller enables both real and simulated robots to reach goal locations in high clutter with low contact forces. Our experiments include tests using a real robot with a novel tactile sensor array on its forearm reaching into simulated foliage and a cinder block. In our experiments, robots made contact across their entire arms while pushing aside movable objects, deforming compliant objects, and perceiving the world.
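To make the idea of a one-step controller built on a linear quasi-static contact model concrete, here is a minimal sketch. It is not the authors' controller. It assumes a planar two-link arm, models each contact as a linear spring along a known contact normal, and replaces the constrained optimization with a simpler stand-in: a damped least-squares step toward the goal, scaled down uniformly until no predicted contact force exceeds a threshold. All names and values (`one_step`, `f_max`, `stiffness`, the link lengths, the contact dictionary layout) are hypothetical and chosen for illustration only.

```python
import math

L1, L2 = 0.4, 0.4  # link lengths in metres (illustrative values, not from the paper)

def point_jacobian(q, link, d):
    """2x2 Jacobian of a point at distance d along link 1 or 2 of a planar arm."""
    s1, c1 = math.sin(q[0]), math.cos(q[0])
    if link == 1:
        return [[-d * s1, 0.0], [d * c1, 0.0]]
    s12, c12 = math.sin(q[0] + q[1]), math.cos(q[0] + q[1])
    return [[-L1 * s1 - d * s12, -d * s12],
            [L1 * c1 + d * c12, d * c12]]

def tip_position(q):
    """Forward kinematics of the end effector."""
    return [L1 * math.cos(q[0]) + L2 * math.cos(q[0] + q[1]),
            L1 * math.sin(q[0]) + L2 * math.sin(q[0] + q[1])]

def predicted_force_change(q, dq, contact):
    """Linear spring model: stiffness times contact-point motion along the normal.

    The normal is assumed to point from the arm into the object, so motion
    along it increases the contact force.
    """
    jc = point_jacobian(q, contact["link"], contact["d"])
    dx = [jc[0][0] * dq[0] + jc[0][1] * dq[1],
          jc[1][0] * dq[0] + jc[1][1] * dq[1]]
    n = contact["normal"]
    return contact["stiffness"] * (n[0] * dx[0] + n[1] * dx[1])

def one_step(q, goal, contacts, f_max=5.0, damping=0.05, max_move=0.02):
    """Plan a single joint-space step (horizon of length one)."""
    tip = tip_position(q)
    err = [goal[0] - tip[0], goal[1] - tip[1]]
    dist = math.hypot(err[0], err[1])
    if dist > max_move:  # keep the step small so the linear model stays valid
        err = [e * max_move / dist for e in err]
    j = point_jacobian(q, 2, L2)
    # Damped least squares: dq = J^T (J J^T + damping^2 I)^-1 err
    a = j[0][0] ** 2 + j[0][1] ** 2 + damping ** 2
    b = j[0][0] * j[1][0] + j[0][1] * j[1][1]
    d = j[1][0] ** 2 + j[1][1] ** 2 + damping ** 2
    det = a * d - b * b  # positive because damping > 0
    y = [(d * err[0] - b * err[1]) / det, (-b * err[0] + a * err[1]) / det]
    dq = [j[0][0] * y[0] + j[1][0] * y[1], j[0][1] * y[0] + j[1][1] * y[1]]
    # Shrink the step until every predicted contact force stays at or below f_max.
    scale = 1.0
    for c in contacts:
        df = predicted_force_change(q, dq, c)
        if df > 0.0:
            scale = min(scale, max(0.0, (f_max - c["force"]) / df))
    return [scale * dq[0], scale * dq[1]]

# Example: one contact on the forearm, already pressing at 4.5 N.
q = [0.3, 0.8]
goal = [0.3, 0.6]
contact = {"link": 2, "d": 0.2, "normal": [-0.9, 0.436],
           "stiffness": 1000.0, "force": 4.5}
dq_free = one_step(q, goal, [])
dq_safe = one_step(q, goal, [contact])
```

Scaling the whole step is cruder than solving a constrained optimization, because it cannot redirect motion around a contact; it only slows the arm down. It does show the two ingredients the abstract names: a model rebuilt from the current contacts at every time step, and a plan that looks only one step ahead.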
