Adrien Lafage

Torch-Uncertainty: A Deep Learning Framework for Uncertainty Quantification

Nov 13, 2025

Abstract:Deep Neural Networks (DNNs) have demonstrated remarkable performance across various domains, including computer vision and natural language processing. However, they often struggle to accurately quantify the uncertainty of their predictions, limiting their broader adoption in critical real-world applications. Uncertainty Quantification (UQ) for Deep Learning seeks to address this challenge by providing methods to improve the reliability of uncertainty estimates. Although numerous techniques have been proposed, a unified tool offering a seamless workflow to evaluate and integrate these methods remains lacking. To bridge this gap, we introduce Torch-Uncertainty, a PyTorch and Lightning-based framework designed to streamline DNN training and evaluation with UQ techniques and metrics. In this paper, we outline the foundational principles of our library and present comprehensive experimental results that benchmark a diverse set of UQ methods across classification, segmentation, and regression tasks. Our library is available at https://github.com/ENSTA-U2IS-AI/Torch-Uncertainty

Hierarchical Light Transformer Ensembles for Multimodal Trajectory Forecasting

Mar 26, 2024

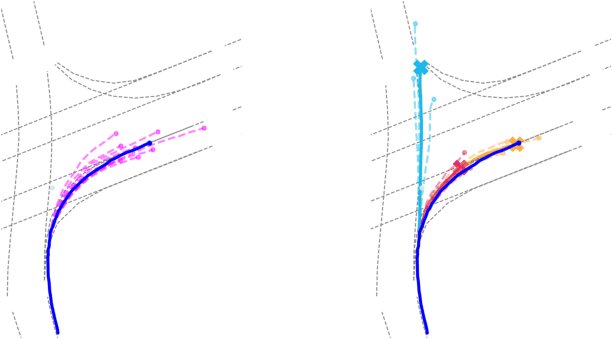

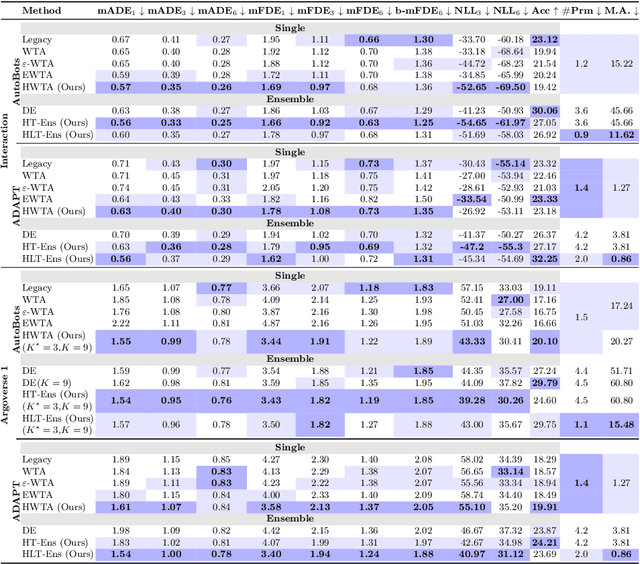

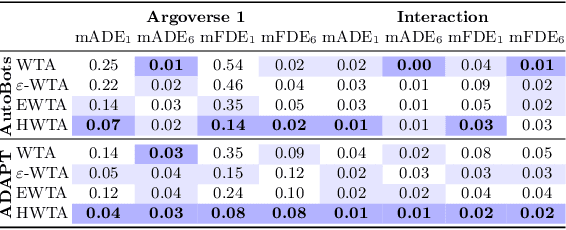

Abstract:Accurate trajectory forecasting is crucial for the performance of various systems, such as advanced driver-assistance systems and self-driving vehicles. These forecasts make it possible to anticipate events leading to collisions and, therefore, to mitigate them. Deep Neural Networks have excelled in motion forecasting, but issues like overconfidence and uncertainty quantification persist. Deep Ensembles address these concerns, yet applying them to multimodal distributions remains challenging. In this paper, we propose a novel approach named Hierarchical Light Transformer Ensembles (HLT-Ens), aimed at efficiently training an ensemble of Transformer architectures using a novel hierarchical loss function. HLT-Ens leverages grouped fully connected layers, inspired by grouped convolution techniques, to capture multimodal distributions effectively. Through extensive experimentation, we demonstrate that HLT-Ens achieves state-of-the-art performance levels, offering a promising avenue for improving trajectory forecasting techniques.
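The abstract does not spell out how a grouped fully connected layer is built, so the following is only a minimal sketch of the general idea, not the HLT-Ens implementation. It assumes the input features are split into equal chunks and that each chunk gets its own weight matrix; the names `grouped_linear`, `block_diagonal`, and `dense_linear` and all sizes are hypothetical. The check at the end illustrates that such a layer behaves like a dense layer whose weight matrix is block-diagonal.

```python
import random


def grouped_linear(x, weights):
    """Apply one independent linear map per group (bias omitted).

    x: flat list of length groups * in_per_group
    weights: list of `groups` matrices, each out_per_group x in_per_group
    """
    groups = len(weights)
    if len(x) % groups != 0:
        raise ValueError("input length must be divisible by the number of groups")
    in_per_group = len(x) // groups
    out = []
    for g, w in enumerate(weights):
        # Each group only sees its own slice of the input.
        chunk = x[g * in_per_group:(g + 1) * in_per_group]
        for row in w:
            out.append(sum(wi * xi for wi, xi in zip(row, chunk)))
    return out


def block_diagonal(weights):
    """Build the equivalent dense matrix: one block per group, zeros elsewhere."""
    in_per_group = len(weights[0][0])
    total_in = in_per_group * len(weights)
    dense = []
    for g, w in enumerate(weights):
        for row in w:
            full = [0.0] * total_in
            full[g * in_per_group:(g + 1) * in_per_group] = row
            dense.append(full)
    return dense


def dense_linear(x, matrix):
    """Plain matrix-vector product (bias omitted)."""
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in matrix]


# Hypothetical sizes, chosen only for illustration.
rng = random.Random(0)
groups, in_per_group, out_per_group = 3, 4, 2
weights = [
    [[rng.uniform(-1, 1) for _ in range(in_per_group)] for _ in range(out_per_group)]
    for _ in range(groups)
]
x = [rng.uniform(-1, 1) for _ in range(groups * in_per_group)]

y_grouped = grouped_linear(x, weights)
y_dense = dense_linear(x, block_diagonal(weights))
```

With these sizes the grouped layer stores 3 × 2 × 4 = 24 weights, whereas an unconstrained dense layer with the same input and output widths would store 6 × 12 = 72. Under the assumptions above, that gap is why grouping is attractive when several ensemble members must share one forward pass.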

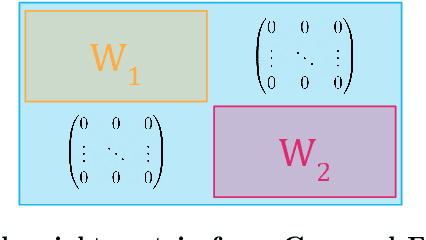

Packed-Ensembles for Efficient Uncertainty Estimation

Oct 17, 2022

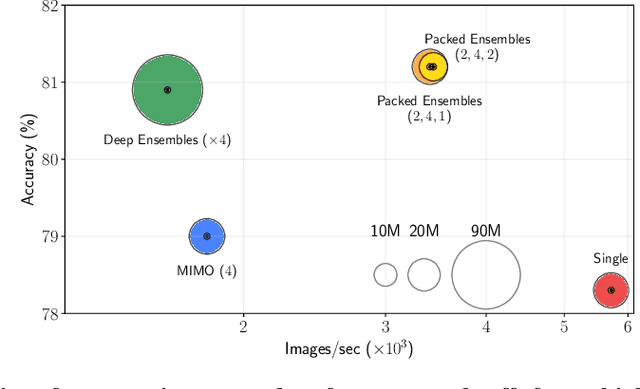

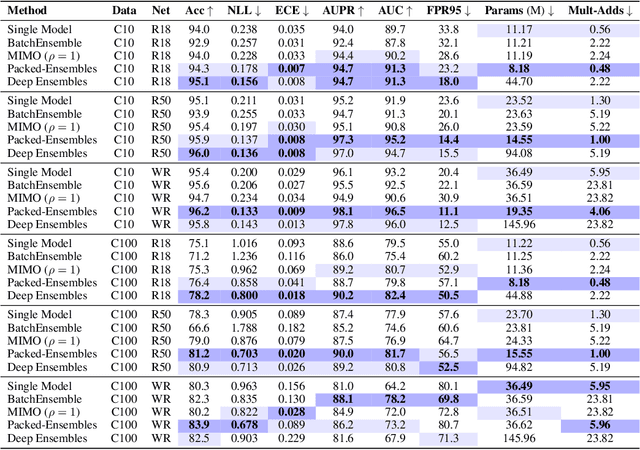

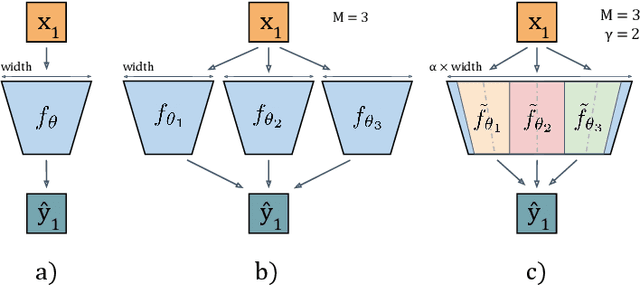

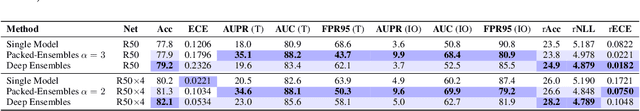

Abstract:Deep Ensembles (DE) are a prominent approach achieving excellent performance on key metrics such as accuracy, calibration, uncertainty estimation, and out-of-distribution detection. However, the hardware limitations of real-world systems restrict them to smaller ensembles and lower-capacity networks, significantly deteriorating their performance and properties. We introduce Packed-Ensembles (PE), a strategy to design and train lightweight structured ensembles by carefully modulating the dimension of their encoding space. We leverage grouped convolutions to parallelize the ensemble into a single common backbone and forward pass to improve training and inference speeds. PE is designed to work under the memory budget of a single standard neural network. Through extensive studies, we show that PE faithfully preserves the properties of DE, e.g., diversity, and matches their performance in terms of accuracy, calibration, out-of-distribution detection, and robustness to distribution shift.
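The abstract gives no layer-level arithmetic, so the following is a back-of-the-envelope sketch rather than the method as published. It uses the standard weight count of a grouped 2D convolution and compares a single layer, a naive ensemble of independent copies, and one wider layer split into groups. The ensemble size `m`, the width multiplier `alpha`, and the channel sizes are hypothetical values chosen for illustration.

```python
def conv_params(c_in, c_out, kernel, groups=1):
    """Weight count of a 2D convolution with a square kernel (bias ignored).

    Each output channel only connects to c_in / groups input channels.
    """
    if c_in % groups or c_out % groups:
        raise ValueError("channel counts must be divisible by groups")
    return (c_in // groups) * c_out * kernel * kernel


# Hypothetical layer shape.
c_in, c_out, kernel = 64, 128, 3
single = conv_params(c_in, c_out, kernel)

m = 4      # hypothetical number of ensemble members (assumption)
alpha = 2  # hypothetical width multiplier (assumption)

# Naive ensemble: m independent copies of the layer.
deep_ensemble = m * single

# One wider layer whose channels are split into m groups, one per member.
packed = conv_params(alpha * c_in, alpha * c_out, kernel, groups=m)
```

Here `single` is 73,728 weights, `deep_ensemble` is 294,912, and `packed` is again 73,728: widening by `alpha` multiplies the count by `alpha ** 2`, and splitting into `m` groups divides it by `m`, so the two effects cancel when `alpha ** 2 == m`. This is one way a grouped layer can host several members within the weight budget of a single standard layer, under the stated assumptions.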
