Adrian Sampson

Dense Pruning of Pointwise Convolutions in the Frequency Domain

Sep 16, 2021

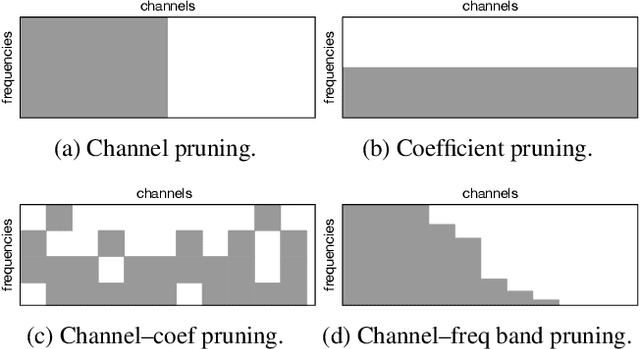

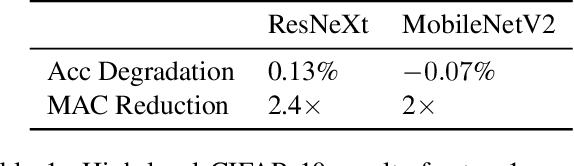

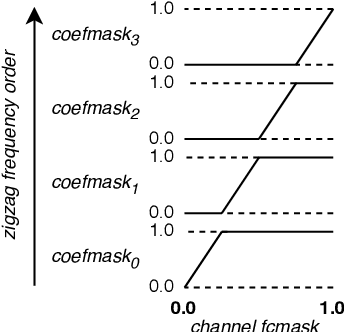

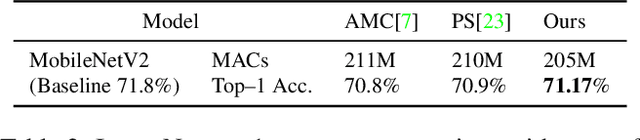

Abstract:Depthwise separable convolutions and frequency-domain convolutions are two recent ideas for building efficient convolutional neural networks. They are seemingly incompatible: the vast majority of operations in depthwise separable CNNs are in pointwise convolutional layers, but pointwise layers use 1x1 kernels, which do not benefit from frequency transformation. This paper unifies these two ideas by transforming the activations, not the kernels. Our key insights are that 1) pointwise convolutions commute with frequency transformation and thus can be computed in the frequency domain without modification, 2) each channel within a given layer has a different level of sensitivity to frequency domain pruning, and 3) each channel's sensitivity to frequency pruning is approximately monotonic with respect to frequency. We leverage this knowledge by proposing a new technique which wraps each pointwise layer in a discrete cosine transform (DCT) which is truncated to selectively prune coefficients above a given threshold as per the needs of each channel. To learn which frequencies should be pruned from which channels, we introduce a novel learned parameter which specifies each channel's pruning threshold. We add a new regularization term which incentivizes the model to decrease the number of retained frequencies while still maintaining task accuracy. Unlike weight pruning techniques which rely on sparse operators, our contiguous frequency band pruning results in fully dense computation. We apply our technique to MobileNetV2 and in the process reduce computation time by 22% and incur <1% accuracy degradation.
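The first insight in the abstract above, that pointwise convolutions commute with frequency transformation, follows from both operations being linear and acting on different axes: the DCT mixes spatial positions within a channel, while a 1x1 convolution mixes channels at a fixed position. The sketch below checks this numerically. It is an illustration only, not the paper's implementation: it uses a 1-D unnormalized DCT-II instead of a 2-D transform, omits the pruning step, and the names `dct_ii` and `pointwise_conv` and the toy data are assumptions made for this example.

```python
import math

def dct_ii(signal):
    """Unnormalized 1-D DCT-II of a list of floats."""
    n = len(signal)
    return [
        sum(signal[i] * math.cos(math.pi * (i + 0.5) * k / n) for i in range(n))
        for k in range(n)
    ]

def pointwise_conv(weights, channels):
    """1x1 convolution: mix channels independently at every position.

    weights:  [out_channels][in_channels]
    channels: [in_channels][positions]
    """
    positions = len(channels[0])
    return [
        [sum(w * ch[p] for w, ch in zip(row, channels)) for p in range(positions)]
        for row in weights
    ]

# Hypothetical toy data: 3 input channels, 2 output channels, 8 positions.
x = [[math.sin(0.3 * (c + 1) * p) + c for p in range(8)] for c in range(3)]
w = [[0.5, -1.0, 2.0], [1.5, 0.25, -0.75]]

# Path A: convolve in the spatial domain, then transform each output channel.
spatial_then_dct = [dct_ii(ch) for ch in pointwise_conv(w, x)]

# Path B: transform each input channel, then convolve on the coefficients.
dct_then_conv = pointwise_conv(w, [dct_ii(ch) for ch in x])

# The two paths should agree up to floating-point rounding.
max_diff = max(
    abs(a - b)
    for row_a, row_b in zip(spatial_then_dct, dct_then_conv)
    for a, b in zip(row_a, row_b)
)
print(max_diff)
```

Because the convolution in Path B runs directly on the coefficients, dropping a contiguous band of high-frequency coefficients would simply shorten each channel's list, which is consistent with the abstract's point that the pruned computation stays dense.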

Optimizing JPEG Quantization for Classification Networks

Mar 05, 2020

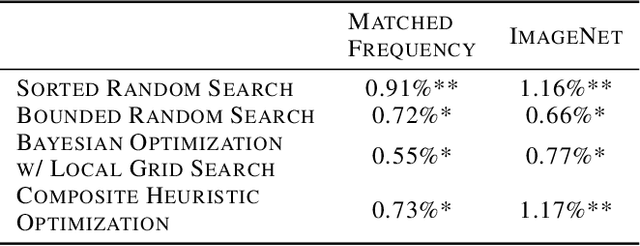

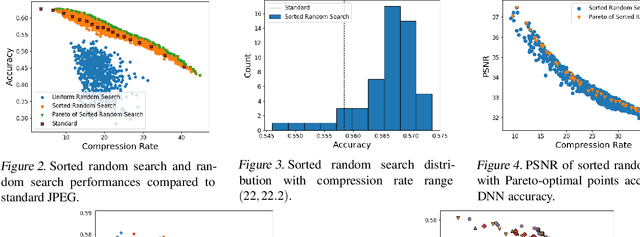

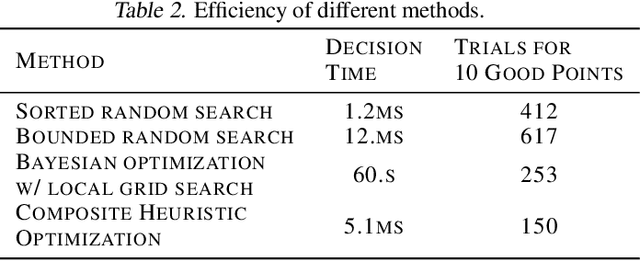

Abstract:Deep learning for computer vision depends on lossy image compression: it reduces the storage required for training and test data and lowers transfer costs in deployment. Mainstream datasets and imaging pipelines all rely on standard JPEG compression. In JPEG, the degree of quantization of frequency coefficients controls the lossiness: an 8 by 8 quantization table (Q-table) decides both the quality of the encoded image and the compression ratio. While a long history of work has sought better Q-tables, existing work either seeks to minimize image distortion or to optimize for models of the human visual system. This work asks whether JPEG Q-tables exist that are "better" for specific vision networks and can offer better quality--size trade-offs than ones designed for human perception or minimal distortion. We reconstruct an ImageNet test set with higher resolution to explore the effect of JPEG compression under novel Q-tables. We attempt several approaches to tune a Q-table for a vision task. We find that a simple sorted random sampling method can exceed the performance of the standard JPEG Q-table. We also use hyper-parameter tuning techniques including bounded random search, Bayesian optimization, and composite heuristic optimization methods. The new Q-tables we obtained can improve the compression rate by 10% to 200% when the accuracy is fixed, or improve accuracy up to $2\%$ at the same compression rate.
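The role of the Q-table described above can be sketched as follows: each of the 64 DCT coefficients in a block is divided by the matching Q-table entry and rounded, so larger entries discard more information. This is a minimal illustration, not the paper's method. The coefficient block and both tables are hypothetical values invented for the example (neither is the standard JPEG table), and the count of nonzero quantized coefficients is only a crude stand-in for compressed size.

```python
def quantize(coeffs, q_table):
    """Divide each DCT coefficient by its Q-table entry and round to an integer."""
    return [[round(c / q) for c, q in zip(c_row, q_row)]
            for c_row, q_row in zip(coeffs, q_table)]

def dequantize(levels, q_table):
    """Recover approximate coefficients by multiplying the levels back."""
    return [[lv * q for lv, q in zip(l_row, q_row)]
            for l_row, q_row in zip(levels, q_table)]

def count_nonzero(levels):
    """Crude proxy for compressed size: fewer nonzero levels compress better."""
    return sum(1 for row in levels for lv in row if lv != 0)

def max_abs_error(a, b):
    """Largest coefficient-wise reconstruction error."""
    return max(abs(x - y) for ra, rb in zip(a, b) for x, y in zip(ra, rb))

# Hypothetical 8x8 block whose energy decays with frequency (u + v).
coeffs = [[200.0 / (1 + u + v) ** 2 for v in range(8)] for u in range(8)]

# Two hypothetical Q-tables: both grow with frequency, one much faster.
gentle = [[1 + (u + v) for v in range(8)] for u in range(8)]
harsh = [[1 + 8 * (u + v) for v in range(8)] for u in range(8)]

levels_gentle = quantize(coeffs, gentle)
levels_harsh = quantize(coeffs, harsh)

nonzero_gentle = count_nonzero(levels_gentle)
nonzero_harsh = count_nonzero(levels_harsh)
error_gentle = max_abs_error(coeffs, dequantize(levels_gentle, gentle))
error_harsh = max_abs_error(coeffs, dequantize(levels_harsh, harsh))

print(nonzero_gentle, error_gentle)
print(nonzero_harsh, error_harsh)
```

The harsher table keeps fewer nonzero coefficients at the cost of a larger reconstruction error. Searching over tables, as the abstract describes, amounts to choosing the 64 entries so that this trade-off favors what a classifier needs rather than what a human viewer notices.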

EVA$^2$: Exploiting Temporal Redundancy in Live Computer Vision

Apr 17, 2018

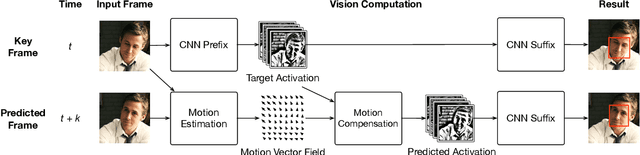

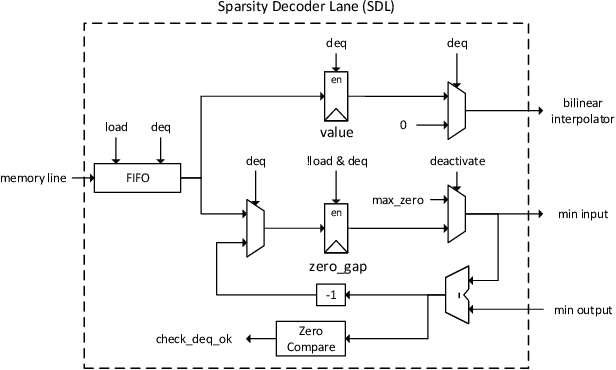

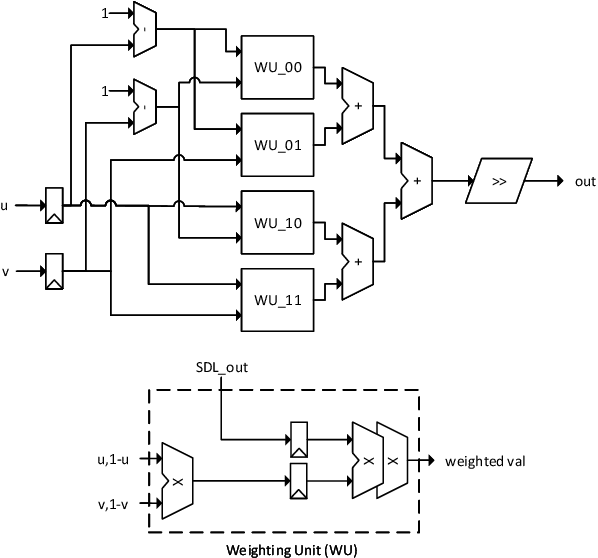

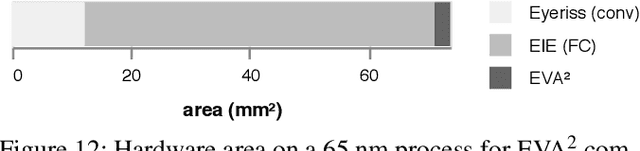

Abstract:Hardware support for deep convolutional neural networks (CNNs) is critical to advanced computer vision in mobile and embedded devices. Current designs, however, accelerate generic CNNs; they do not exploit the unique characteristics of real-time vision. We propose to use the temporal redundancy in natural video to avoid unnecessary computation on most frames. A new algorithm, activation motion compensation, detects changes in the visual input and incrementally updates a previously-computed output. The technique takes inspiration from video compression and applies well-known motion estimation techniques to adapt to visual changes. We use an adaptive key frame rate to control the trade-off between efficiency and vision quality as the input changes. We implement the technique in hardware as an extension to existing state-of-the-art CNN accelerator designs. The new unit reduces the average energy per frame by 54.2%, 61.7%, and 87.6% for three CNNs with less than 1% loss in vision accuracy.

High Five: Improving Gesture Recognition by Embracing Uncertainty

Oct 25, 2017

Abstract:Sensors on mobile devices---accelerometers, gyroscopes, pressure meters, and GPS---invite new applications in gesture recognition, gaming, and fitness tracking. However, programming them remains challenging because human gestures captured by sensors are noisy. This paper illustrates that noisy gestures degrade training and classification accuracy for gesture recognition in state-of-the-art deterministic Hidden Markov Models (HMM). We introduce a new statistical quantization approach that mitigates these problems by (1) during training, producing gesture-specific codebooks, HMMs, and error models for gesture sequences; and (2) during classification, exploiting the error model to explore multiple feasible HMM state sequences. We implement classification in Uncertain<T>, a probabilistic programming system that encapsulates HMMs and error models and then automates sampling and inference in the runtime. Uncertain<T> developers directly express a choice of application-specific trade-off between recall and precision at gesture recognition time, rather than at training time. We demonstrate benefits in configurability, precision, recall, and recognition on two data sets with 25 gestures from 28 people and 4200 total gestures. Incorporating gesture error more accurately in modeling improves the average recognition rate of 20 gestures from 34\% in prior work to 62\%. Incorporating the error model during classification further improves the average gesture recognition rate to 71\%. As far as we are aware, no prior work shows how to generate an HMM error model during training and use it to improve classification rates.

Abstractions for AI-Based User Interfaces and Systems

Sep 14, 2017

Abstract:Novel user interfaces based on artificial intelligence, such as natural-language agents, present new categories of engineering challenges. These systems need to cope with uncertainty and ambiguity, interface with machine learning algorithms, and compose information from multiple users to make decisions. We propose to treat these challenges as language-design problems. We describe three programming language abstractions for three core problems in intelligent system design. First, hypothetical worlds support nondeterministic search over spaces of alternative actions. Second, a feature type system abstracts the interaction between applications and learning algorithms. Finally, constructs for collaborative execution extend hypothetical worlds across multiple machines while controlling access to private data. We envision these features as first steps toward a complete language for implementing AI-based interfaces and applications.

Reconfiguring the Imaging Pipeline for Computer Vision

Aug 01, 2017

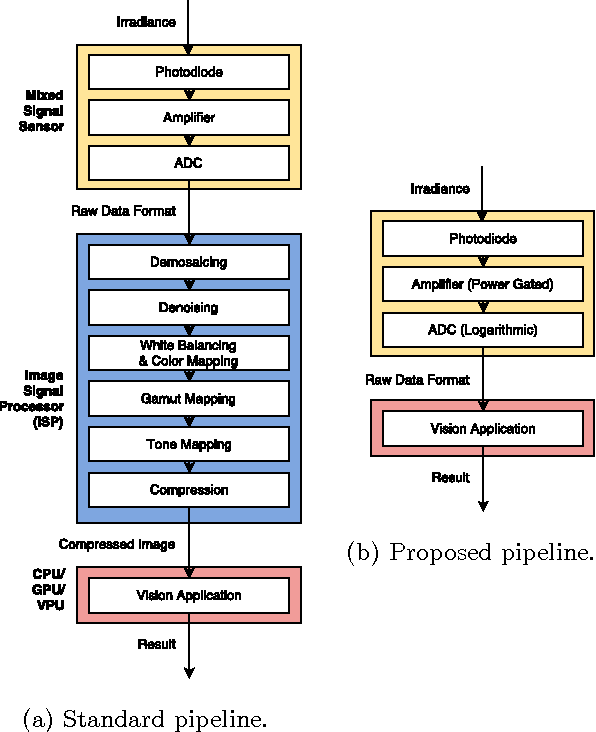

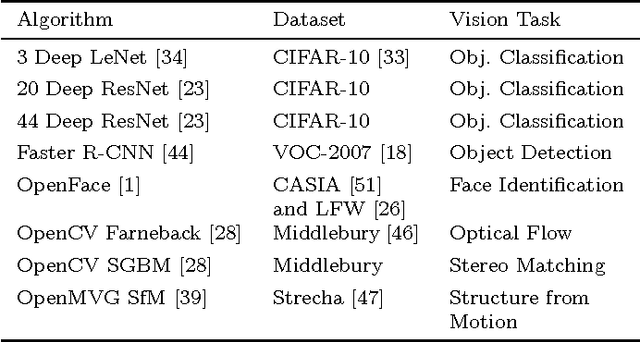

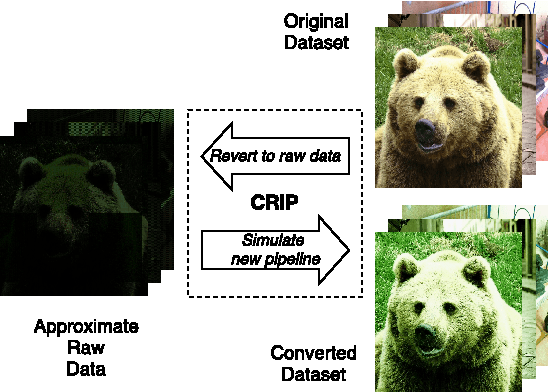

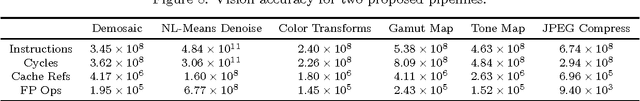

Abstract:Advancements in deep learning have ignited an explosion of research on efficient hardware for embedded computer vision. Hardware vision acceleration, however, does not address the cost of capturing and processing the image data that feeds these algorithms. We examine the role of the image signal processing (ISP) pipeline in computer vision to identify opportunities to reduce computation and save energy. The key insight is that imaging pipelines should be designed to be configurable: to switch between a traditional photography mode and a low-power vision mode that produces lower-quality image data suitable only for computer vision. We use eight computer vision algorithms and a reversible pipeline simulation tool to study the imaging system's impact on vision performance. For both CNN-based and classical vision algorithms, we observe that only two ISP stages, demosaicing and gamma compression, are critical for task performance. We propose a new image sensor design that can compensate for skipping these stages. The sensor design features an adjustable resolution and tunable analog-to-digital converters (ADCs). Our proposed imaging system's vision mode disables the ISP entirely and configures the sensor to produce subsampled, lower-precision image data. This vision mode can save ~75% of the average energy of a baseline photography mode while having only a small impact on vision task accuracy.
